A Web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing.

CiteSeerX is a public search engine and digital library for scientific and academic papers, primarily in the fields of computer and information science.

A recommender system, or a recommendation system, is a subclass of information filtering system that provides suggestions for items that are most pertinent to a particular user. Recommender systems are particularly useful when an individual needs to choose an item from a potentially overwhelming number of items that a service may offer.

A Bloom filter is a space-efficient probabilistic data structure, conceived by Burton Howard Bloom in 1970, that is used to test whether an element is a member of a set. False positive matches are possible, but false negatives are not – in other words, a query returns either "possibly in set" or "definitely not in set". Elements can be added to the set, but not removed; the more items added, the larger the probability of false positives.
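The add and query behavior described above can be illustrated with a minimal sketch. The class name `BloomFilter`, the method `might_contain`, the default sizes, and the use of salted SHA-256 digests as hash functions are all assumptions chosen for illustration, not a reference implementation.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter over a fixed-size bit array (illustrative only)."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        # One byte per bit keeps the sketch readable; a real filter packs bits.
        self.bits = bytearray(num_bits)

    def _positions(self, item: str):
        # Assumption: derive k positions by salting SHA-256 with the hash index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        # Adding only sets bits, which is why elements cannot be removed.
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item: str) -> bool:
        # False means "definitely not in set"; True means "possibly in set".
        return all(self.bits[pos] for pos in self._positions(item))


bf = BloomFilter()
for word in ["crawler", "index", "query"]:
    bf.add(word)
```

Every added word is guaranteed to be reported as possibly present, while an unseen word is usually, but not always, reported as absent. As more bits are set, the chance of a false positive grows.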

In cryptography, a private information retrieval (PIR) protocol is a protocol that allows a user to retrieve an item from a server in possession of a database without revealing which item is retrieved. PIR is a weaker version of 1-out-of-n oblivious transfer, where it is also required that the user should not get information about other database items.
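One well-known way to realize this idea is a two-server scheme based on XOR, sketched below. It assumes that the database is replicated on two servers that do not collude, which is an additional assumption beyond the single-server description above. The function names `server_answer`, `make_queries`, and `retrieve` are hypothetical, and both servers are simulated in one process.

```python
import secrets
from functools import reduce


def server_answer(database: list[int], subset: set[int]) -> int:
    """XOR of the records whose indices are in the requested subset."""
    return reduce(lambda acc, idx: acc ^ database[idx], subset, 0)


def make_queries(n: int, wanted: int) -> tuple[set[int], set[int]]:
    """Random subset for server A; the same subset with `wanted` toggled for server B."""
    subset_a = {idx for idx in range(n) if secrets.randbits(1)}
    subset_b = subset_a ^ {wanted}  # symmetric difference toggles one index
    return subset_a, subset_b


def retrieve(database: list[int], wanted: int) -> int:
    q_a, q_b = make_queries(len(database), wanted)
    # Assumption: in a real deployment each query goes to a different,
    # non-colluding server; here both are simulated locally.
    # Records in both subsets cancel under XOR, leaving only database[wanted].
    return server_answer(database, q_a) ^ server_answer(database, q_b)


records = [17, 42, 99, 7, 256]
value = retrieve(records, 2)
```

Each query on its own is a uniformly random subset of indices, so neither server alone learns which record was requested. The user does learn XORs of other records, which is consistent with PIR being weaker than oblivious transfer.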

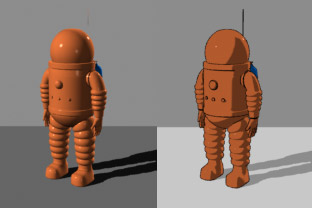

Non-photorealistic rendering (NPR) is an area of computer graphics that focuses on enabling a wide variety of expressive styles for digital art, in contrast to traditional computer graphics, which focuses on photorealism. NPR is inspired by other artistic modes such as painting, drawing, technical illustration, and animated cartoons. NPR has appeared in movies and video games in the form of cel-shaded animation as well as in scientific visualization, architectural illustration and experimental animation.

MonetDB is an open-source column-oriented relational database management system (RDBMS) originally developed at the Centrum Wiskunde & Informatica (CWI) in the Netherlands. It is designed to provide high performance on complex queries against large databases, such as combining tables with hundreds of columns and millions of rows. MonetDB has been applied in high-performance applications for online analytical processing, data mining, geographic information system (GIS), Resource Description Framework (RDF), text retrieval and sequence alignment processing.

Nearest neighbor search (NNS), as a form of proximity search, is the optimization problem of finding the point in a given set that is closest to a given point. Closeness is typically expressed in terms of a dissimilarity function: the less similar the objects, the larger the function values.
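The simplest approach to this problem is an exhaustive linear scan. The sketch below assumes Euclidean distance as the dissimilarity function and uses a hypothetical helper named `nearest_neighbor`; other dissimilarity functions would fit the same pattern.

```python
import math


def nearest_neighbor(points, query):
    """Return the point with the smallest Euclidean distance to `query`."""
    # math.dist computes the Euclidean distance between two coordinate sequences.
    return min(points, key=lambda p: math.dist(p, query))


points = [(0.0, 0.0), (3.0, 4.0), (1.0, 1.0)]
closest = nearest_neighbor(points, (0.9, 1.2))
```

A linear scan costs one distance computation per stored point, which is why larger collections typically rely on spatial indexes or approximate methods instead.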

Cold start is a potential problem in computer-based information systems that involve a degree of automated data modelling. Specifically, it concerns the issue that the system cannot draw any inferences for users or items about which it has not yet gathered sufficient information.

A web query or web search query is a query that a user enters into a web search engine to satisfy their information needs. Web search queries are distinctive in that they are often plain text and boolean search directives are rarely used. They vary greatly from standard query languages, which are governed by strict syntax rules as command languages with keyword or positional parameters.

Collaborative search engines (CSE) are Web search engines and enterprise search tools within company intranets that let users combine their efforts in information retrieval (IR) activities, share information resources collaboratively using knowledge tags, and allow experts to guide less experienced people through their searches. Collaboration partners do so by providing query terms, tagging collectively, adding comments or opinions, rating search results, and sharing the links clicked in earlier (successful) IR activities with users who have the same or a related information need.

Folksonomy is a classification system in which end users apply public tags to online items, typically to make those items easier for themselves or others to find later. Over time, this can give rise to a classification system based on those tags and how often they are applied or searched for, in contrast to a taxonomic classification designed by the owners of the content and specified when it is published. This practice is also known as collaborative tagging, social classification, social indexing, and social tagging. Folksonomy was originally "the result of personal free tagging of information [...] for one's own retrieval", but online sharing and interaction expanded it into collaborative forms. Social tagging is the application of tags in an open online environment where each user's tags are visible to other users. Collaborative tagging is tagging performed by a group of users. This type of folksonomy is commonly used in cooperative and collaborative projects such as research, content repositories, and social bookmarking.

Learning to rank or machine-learned ranking (MLR) is the application of machine learning, typically supervised, semi-supervised or reinforcement learning, in the construction of ranking models for information retrieval systems. Training data may, for example, consist of lists of items with some partial order specified between items in each list. This order is typically induced by giving a numerical or ordinal score or a binary judgment for each item. The goal of constructing the ranking model is to rank new, unseen lists in a similar way to rankings in the training data.
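To make the training-data description concrete, the sketch below shows how per-item scores induce a partial order within one list. The function name `preference_pairs` and the sample document identifiers are hypothetical. Pairs of this kind are the typical input to pairwise ranking methods, although the text above does not commit to any particular method.

```python
from itertools import combinations


def preference_pairs(scored_items):
    """Turn (item, score) judgments into (preferred, less_preferred) pairs."""
    pairs = []
    for (a, score_a), (b, score_b) in combinations(scored_items, 2):
        if score_a > score_b:
            pairs.append((a, b))
        elif score_b > score_a:
            pairs.append((b, a))
        # Equal scores give no preference, so the order stays partial.
    return pairs


judgments = [("d1", 2), ("d2", 0), ("d3", 2)]
pairs = preference_pairs(judgments)
```

Here `d1` and `d3` are both preferred over `d2`, but no pair relates `d1` and `d3`, which is what makes the induced order partial rather than total.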

Tomasz Imieliński is a Polish-American computer scientist, best known for his work in data mining, mobile computing, data extraction, and search engine technology. He is currently a professor of computer science at Rutgers University in New Jersey, United States.

Patrick Eugene O'Neil was an American computer scientist, an expert on databases, and a professor of computer science at the University of Massachusetts Boston.

In computer science, the log-structured merge-tree is a data structure with performance characteristics that make it attractive for providing indexed access to files with high insert volume, such as transactional log data. LSM trees, like other search trees, maintain key-value pairs. LSM trees maintain data in two or more separate structures, each of which is optimized for its respective underlying storage medium; data is synchronized between the two structures efficiently, in batches.
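The two-structure layout described above can be sketched as an in-memory table that is flushed in batches to immutable sorted runs. The class name `TinyLSM`, the `memtable_limit` parameter, and the use of Python lists to stand in for on-disk files are assumptions made for illustration. Compaction (merging of runs) is deliberately left out.

```python
import bisect


class TinyLSM:
    """Toy LSM tree: a mutable memtable plus immutable sorted runs."""

    def __init__(self, memtable_limit: int = 2) -> None:
        self.memtable_limit = memtable_limit
        self.memtable = {}  # stands in for the in-memory structure
        self.runs = []      # stands in for on-disk sorted files, newest last

    def put(self, key, value) -> None:
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush()

    def _flush(self) -> None:
        # Write the whole memtable out as one sorted batch.
        items = sorted(self.memtable.items())
        self.runs.append(([k for k, _ in items], [v for _, v in items]))
        self.memtable = {}

    def get(self, key, default=None):
        if key in self.memtable:
            return self.memtable[key]
        # Search newer runs first so the latest write for a key wins.
        for keys, values in reversed(self.runs):
            i = bisect.bisect_left(keys, key)
            if i < len(keys) and keys[i] == key:
                return values[i]
        return default


db = TinyLSM(memtable_limit=2)
db.put("a", 1)
db.put("b", 2)  # memtable reaches its limit and is flushed as the first run
db.put("a", 3)
db.put("c", 4)  # second flush; the newer run shadows the old value of "a"
```

Writes touch only the memtable, and each flush is a single sequential batch, which is the property that suits workloads with high insert volume. Reads may need to consult several runs, a cost that real implementations reduce through compaction.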

Matrix factorization is a class of collaborative filtering algorithms used in recommender systems. Matrix factorization algorithms work by decomposing the user-item interaction matrix into the product of two lower dimensionality rectangular matrices. This family of methods became widely known during the Netflix prize challenge due to its effectiveness as reported by Simon Funk in his 2006 blog post, where he shared his findings with the research community. The prediction results can be improved by assigning different regularization weights to the latent factors based on items' popularity and users' activeness.
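The decomposition can be illustrated with a small sketch that fits two low-rank factor matrices to observed ratings by stochastic gradient descent. The function names `train_mf`, `predict`, and `rmse`, the hyperparameter values, and the toy ratings are all assumptions. A single regularization weight is used here rather than the popularity-dependent weights mentioned above.

```python
import random


def predict(P, Q, user, item):
    """Predicted rating: dot product of the user and item latent vectors."""
    return sum(pf * qf for pf, qf in zip(P[user], Q[item]))


def train_mf(ratings, num_users, num_items, rank=2,
             lr=0.02, reg=0.02, epochs=500, seed=0):
    """Fit user factors P and item factors Q to (user, item, rating) triples."""
    rng = random.Random(seed)
    P = [[rng.uniform(0.1, 1.0) for _ in range(rank)] for _ in range(num_users)]
    Q = [[rng.uniform(0.1, 1.0) for _ in range(rank)] for _ in range(num_items)]
    for _ in range(epochs):
        for user, item, rating in ratings:
            err = rating - predict(P, Q, user, item)
            for f in range(rank):
                pu, qi = P[user][f], Q[item][f]
                # Gradient step on squared error with L2 regularization.
                P[user][f] += lr * (err * qi - reg * pu)
                Q[item][f] += lr * (err * pu - reg * qi)
    return P, Q


def rmse(ratings, P, Q):
    """Root-mean-square error over the observed ratings."""
    total = sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings)
    return (total / len(ratings)) ** 0.5


# Toy data: (user, item, rating) triples for 3 users and 3 items.
ratings = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 2), (2, 2, 5)]
P, Q = train_mf(ratings, num_users=3, num_items=3)
```

After training, `predict(P, Q, user, item)` can also be evaluated for user-item pairs that have no observed rating, which is how such a model produces recommendations.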

Gautam Das is a computer scientist in the field of database research. He is an ACM Fellow and IEEE Fellow.

Wei Wang is a Chinese-born American computer scientist. She is the Leonard Kleinrock Chair Professor in Computer Science and Computational Medicine at University of California, Los Angeles and the director of the Scalable Analytics Institute (ScAi). Her research specializes in big data analytics and modeling, database systems, natural language processing, bioinformatics and computational biology, and computational medicine.

Click tracking is the collection of user click behavior or navigational behavior in order to derive insights and fingerprint users. Click behavior is commonly tracked using server logs, which record click paths and clicked URLs. Such logs are often presented in a standard format that includes information such as the hostname, date, and username. However, as technology develops, new software allows for in-depth analysis of user click behavior using hypervideo tools. Given that the internet can be considered a risky environment, research strives to understand why users click certain links and not others. Research has also explored the user experience of privacy, including anonymizing users' personally identifiable information and improving how data collection consent forms are written and structured.