In computer science and information theory, a Huffman code is a particular type of optimal prefix code that is commonly used for lossless data compression. The process of finding or using such a code is Huffman coding, an algorithm developed by David A. Huffman while he was a Sc.D. student at MIT, and published in the 1952 paper "A Method for the Construction of Minimum-Redundancy Codes".
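
As an illustration of the construction, here is a minimal sketch of the usual greedy procedure: repeatedly merge the two lowest-weight subtrees until one tree remains. The function name `huffman_code`, the dictionary representation of subtrees, and the tie-breaking counter are choices made for this example; they are not taken from Huffman's paper.

```python
import heapq
from collections import Counter
from itertools import count


def huffman_code(text):
    """Return a dict mapping each symbol of `text` to a binary codeword string."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # Degenerate case: a single distinct symbol still needs one bit.
        return {next(iter(freq)): "0"}

    tiebreak = count()  # unique counter so equal weights never compare the dicts
    # Each heap entry is (weight, tiebreak, {symbol: codeword-so-far}).
    heap = [(w, next(tiebreak), {sym: ""}) for sym, w in freq.items()]
    heapq.heapify(heap)

    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # lightest subtree
        w2, _, right = heapq.heappop(heap)  # second lightest subtree
        # Merging prepends one bit to every codeword in each subtree.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next(tiebreak), merged))

    return heap[0][2]


code = huffman_code("aaaabbc")
encoded = "".join(code[s] for s in "aaaabbc")
```

For the input `"aaaabbc"` the most frequent symbol `a` receives a one-bit codeword and the other two symbols receive two-bit codewords, so the encoded string is 10 bits long. The exact bit patterns depend on how ties are broken.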

In geometry, a tetrahedron, also known as a triangular pyramid, is a polyhedron composed of four triangular faces, six straight edges, and four vertex corners. The tetrahedron is the simplest of all the ordinary convex polyhedra.

In information theory, the Hamming distance between two strings of equal length is the number of positions at which the corresponding symbols are different. In other words, it measures the minimum number of substitutions required to change one string into the other, or the minimum number of errors that could have transformed one string into the other. In a more general context, the Hamming distance is one of several string metrics for measuring the edit distance between two sequences. It is named after the American mathematician Richard Hamming.
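
The definition translates almost directly into code. The sketch below counts the positions at which two equal-length sequences differ; the name `hamming_distance` and the choice to raise `ValueError` on unequal lengths are assumptions of this example.

```python
def hamming_distance(a, b):
    """Number of positions at which equal-length sequences a and b differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance is defined only for equal-length sequences")
    # Each mismatch contributes True (counted as 1) to the sum.
    return sum(x != y for x, y in zip(a, b))


print(hamming_distance("karolin", "kathrin"))  # 3
print(hamming_distance("1011101", "1001001"))  # 2
```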

In geometry, the snub cube, or snub cuboctahedron, is an Archimedean solid with 38 faces: 6 squares and 32 equilateral triangles. It has 60 edges and 24 vertices.

In computer science, the Floyd–Warshall algorithm is an algorithm for finding shortest paths in a directed weighted graph with positive or negative edge weights. A single execution of the algorithm will find the lengths of shortest paths between all pairs of vertices. Although it does not return details of the paths themselves, it is possible to reconstruct the paths with simple modifications to the algorithm. Versions of the algorithm can also be used for finding the transitive closure of a relation, or widest paths between all pairs of vertices in a weighted graph.
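
A minimal sketch of the standard triple-loop formulation follows. It assumes vertices are numbered `0` to `n - 1`, edges are given as `(u, v, weight)` triples, and the graph contains no negative cycles. The function name and input format are choices made for this example.

```python
import math


def floyd_warshall(n, edges):
    """Return an n x n matrix of shortest-path lengths (math.inf if unreachable)."""
    dist = [[math.inf] * n for _ in range(n)]
    for v in range(n):
        dist[v][v] = 0
    for u, v, w in edges:
        dist[u][v] = min(dist[u][v], w)  # keep the lightest of any parallel edges

    # After iteration k, dist[i][j] is the shortest i -> j path whose
    # intermediate vertices all lie in {0, ..., k}.
    for k in range(n):
        for i in range(n):
            for j in range(n):
                through_k = dist[i][k] + dist[k][j]
                if through_k < dist[i][j]:
                    dist[i][j] = through_k
    return dist


edges = [(0, 2, -2), (2, 3, 2), (3, 1, -1), (1, 0, 4), (1, 2, 3)]
for row in floyd_warshall(4, edges):
    print(row)
```

The three nested loops give a running time proportional to the cube of the number of vertices, regardless of how many edges the graph has.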

In geometry, a diagonal is a line segment joining two vertices of a polygon or polyhedron, when those vertices are not on the same edge. Informally, any sloping line is called diagonal. The word diagonal derives from the ancient Greek διαγώνιος diagonios, "from angle to angle"; it was used by both Strabo and Euclid to refer to a line connecting two vertices of a rhombus or cuboid, and later adopted into Latin as diagonus.

In graph theory, graph coloring is a special case of graph labeling; it is an assignment of labels traditionally called "colors" to elements of a graph subject to certain constraints. In its simplest form, it is a way of coloring the vertices of a graph such that no two adjacent vertices are of the same color; this is called a vertex coloring. Similarly, an edge coloring assigns a color to each edge so that no two adjacent edges are of the same color, and a face coloring of a planar graph assigns a color to each face or region so that no two faces that share a boundary have the same color.
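
To make the vertex-coloring constraint concrete, the sketch below uses a simple greedy heuristic: visit the vertices in order and give each one the smallest color not already used by a colored neighbor. This always produces a proper coloring but not necessarily one with the fewest colors. The adjacency-dictionary format and the function names are assumptions of this example.

```python
def greedy_coloring(adj):
    """Assign each vertex the smallest color index unused by its colored neighbors."""
    color = {}
    for v in adj:
        used = {color[u] for u in adj[v] if u in color}
        c = 0
        while c in used:
            c += 1
        color[v] = c
    return color


def is_proper(adj, color):
    """True if no edge joins two vertices of the same color."""
    return all(color[u] != color[v] for u in adj for v in adj[u])


# A cycle on five vertices: an odd cycle needs three colors.
cycle5 = {i: [(i - 1) % 5, (i + 1) % 5] for i in range(5)}
coloring = greedy_coloring(cycle5)
print(coloring, is_proper(cycle5, coloring))
```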

In mathematics, spectral graph theory is the study of the properties of a graph in relationship to the characteristic polynomial, eigenvalues, and eigenvectors of matrices associated with the graph, such as its adjacency matrix or Laplacian matrix.

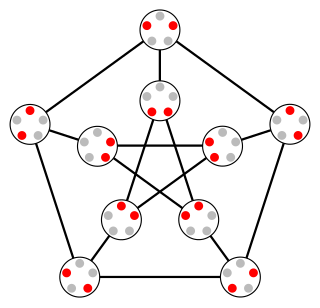

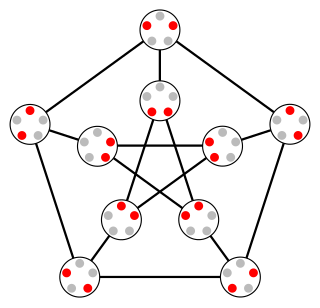

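In graph theory, a strongly regular graph (SRG) is defined as follows. Let G = (V, E) be a regular graph with v vertices and degree k. G is said to be strongly regular if there are also integers λ and μ such that every two adjacent vertices have λ common neighbours and every two non-adjacent vertices have μ common neighbours.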
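
Strong regularity can be checked mechanically by counting common neighbours for every pair of vertices. The sketch below does this by brute force and returns the parameters (v, k, λ, μ) when they exist. The function name, the set-based adjacency format, and the decision to return `None` for graphs with no adjacent pairs or no non-adjacent pairs (such as complete graphs) are assumptions of this example.

```python
from itertools import combinations


def srg_parameters(adj):
    """Return (v, k, lam, mu) if the graph is strongly regular, else None.

    `adj` maps each vertex to the set of its neighbours.
    """
    vertices = list(adj)
    degrees = {len(adj[x]) for x in vertices}
    if len(degrees) != 1:
        return None  # not regular
    k = degrees.pop()

    lam_values, mu_values = set(), set()
    for x, y in combinations(vertices, 2):
        common = len(adj[x] & adj[y])
        if y in adj[x]:
            lam_values.add(common)  # adjacent pair
        else:
            mu_values.add(common)   # non-adjacent pair

    if len(lam_values) != 1 or len(mu_values) != 1:
        return None
    return (len(vertices), k, lam_values.pop(), mu_values.pop())


cycle5 = {i: {(i - 1) % 5, (i + 1) % 5} for i in range(5)}
print(srg_parameters(cycle5))  # (5, 2, 0, 1)
```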

In mathematics and computer science, in the field of coding theory, the Hamming bound is a limit on the parameters of an arbitrary block code: it is also known as the sphere-packing bound or the volume bound from an interpretation in terms of packing balls in the Hamming metric into the space of all possible words. It gives an important limitation on the efficiency with which any error-correcting code can utilize the space in which its code words are embedded. A code that attains the Hamming bound is said to be a perfect code.
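
The sphere-packing interpretation can be made concrete with a short calculation. For a code of length n over an alphabet of size q with minimum distance d, balls of radius t = ⌊(d − 1)/2⌋ around the codewords are disjoint, so the number of codewords times the ball volume cannot exceed qⁿ. The sketch below evaluates this; the function names are chosen for this example.

```python
from math import comb


def hamming_ball_volume(n, t, q=2):
    """Number of words within Hamming distance t of a fixed word of length n."""
    return sum(comb(n, i) * (q - 1) ** i for i in range(t + 1))


def hamming_bound(n, d, q=2):
    """Upper bound on the size of a q-ary code of length n and minimum distance d."""
    t = (d - 1) // 2
    return q ** n // hamming_ball_volume(n, t, q)


def is_perfect(n, size, d, q=2):
    """True if a code with these parameters meets the Hamming bound with equality."""
    t = (d - 1) // 2
    return size * hamming_ball_volume(n, t, q) == q ** n


print(hamming_bound(7, 3))         # 16
print(is_perfect(7, 16, 3))        # True: parameters of the binary Hamming (7,4) code
print(is_perfect(23, 2 ** 12, 7))  # True: parameters of the binary Golay code
```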

In graph theory, the Kneser graph K(n, k) (alternatively KG_{n,k}) is the graph whose vertices correspond to the k-element subsets of a set of n elements, and where two vertices are adjacent if and only if the two corresponding sets are disjoint. Kneser graphs are named after Martin Kneser, who first investigated them in 1956.
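
The definition can be turned into a short brute-force construction. The sketch below builds the graph as a dictionary from each k-subset to the set of subsets disjoint from it; the function name and the use of `frozenset` vertices are choices made for this example.

```python
from itertools import combinations


def kneser_graph(n, k):
    """Adjacency dict of K(n, k): vertices are k-subsets of {0, ..., n-1}."""
    vertices = [frozenset(c) for c in combinations(range(n), k)]
    adj = {v: set() for v in vertices}
    for a, b in combinations(vertices, 2):
        if a.isdisjoint(b):  # adjacent exactly when the subsets share no element
            adj[a].add(b)
            adj[b].add(a)
    return adj


g = kneser_graph(5, 2)
num_edges = sum(len(nbrs) for nbrs in g.values()) // 2
print(len(g), num_edges)  # 10 15
```

K(5, 2) has 10 vertices, 15 edges, and every vertex has degree 3; it is the Petersen graph.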

The expander mixing lemma intuitively states that the edges of certain d-regular graphs are evenly distributed throughout the graph. In particular, the number of edges between two vertex subsets S and T is always close to the expected number of edges between them in a random d-regular graph, namely (d/n)|S||T|.

In coding theory, list decoding is an alternative to unique decoding of error-correcting codes for large error rates. The notion was proposed by Elias in the 1950s. The main idea behind list decoding is that the decoding algorithm instead of outputting a single possible message outputs a list of possibilities one of which is correct. This allows for handling a greater number of errors than that allowed by unique decoding.
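
The idea can be illustrated with a brute-force decoder that returns every codeword within a given Hamming radius of the received word. This is only a sketch of the definition, not an efficient list-decoding algorithm, and the toy code below was chosen for this example. It has minimum distance 3, so unique decoding is only guaranteed for a single error.

```python
def hamming_distance(a, b):
    """Number of positions at which equal-length strings a and b differ."""
    return sum(x != y for x, y in zip(a, b))


def list_decode(codewords, received, radius):
    """Return all codewords within Hamming distance `radius` of `received`."""
    return [c for c in codewords if hamming_distance(c, received) <= radius]


code = ["00000", "11100", "00111", "11011"]  # toy code with minimum distance 3

print(list_decode(code, "01100", 1))  # ['11100']
print(list_decode(code, "01100", 2))  # ['00000', '11100']
```

With radius 2 the decoder can no longer name a single codeword, but the returned list still contains the transmitted one whenever at most two errors occurred.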

In graph theory, the planar separator theorem is a form of isoperimetric inequality for planar graphs, that states that any planar graph can be split into smaller pieces by removing a small number of vertices. Specifically, the removal of O(√n) vertices from an n-vertex graph can partition the graph into disjoint subgraphs each of which has at most 2n/3 vertices.

In graph theory, the Lovász number of a graph is a real number that is an upper bound on the Shannon capacity of the graph. It is also known as the Lovász theta function and is commonly denoted by ϑ(G), using a script form of the Greek letter theta to contrast with the upright theta used for Shannon capacity. This quantity was first introduced by László Lovász in his 1979 paper "On the Shannon Capacity of a Graph".

In coding theory, Zemor's algorithm, designed and developed by Gilles Zemor, is a recursive low-complexity approach to code construction. It is an improvement over the algorithm of Sipser and Spielman.

A hyperbolic geometric graph (HGG) or hyperbolic geometric network (HGN) is a special type of spatial network where (1) latent coordinates of nodes are sprinkled according to a probability density function into a hyperbolic space of constant negative curvature and (2) an edge between two nodes is present if they are close according to a function of the metric (typically either a Heaviside step function resulting in deterministic connections between vertices closer than a certain threshold distance, or a decaying function of hyperbolic distance yielding the connection probability). An HGG generalizes a random geometric graph (RGG) whose embedding space is Euclidean.

In mathematics, the incompressibility method is a proof method like the probabilistic method, the counting method or the pigeonhole principle. To prove that an object in a certain class satisfies a certain property, select an object of that class that is incompressible. If it does not satisfy the property, it can be compressed by computable coding. Since it can be generally proven that almost all objects in a given class are incompressible, the argument demonstrates that almost all objects in the class have the property involved. To select an incompressible object is ineffective, and cannot be done by a computer program. However, a simple counting argument usually shows that almost all objects of a given class can be compressed by only a few bits.

The quantum counting algorithm is a quantum algorithm for efficiently counting the number of solutions for a given search problem. The algorithm is based on the quantum phase estimation algorithm and on Grover's search algorithm.

In graph theory, a locally linear graph is an undirected graph in which every edge belongs to exactly one triangle. Equivalently, for each vertex of the graph, its neighbors are each adjacent to exactly one other neighbor, so the neighbors can be paired up into an induced matching. Locally linear graphs have also been called locally matched graphs. Their triangles form the hyperedges of triangle-free 3-uniform linear hypergraphs and the blocks of certain partial Steiner triple systems, and the locally linear graphs are exactly the Gaifman graphs of these hypergraphs or partial Steiner systems.
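
The defining condition is easy to test directly: an edge uv lies in exactly one triangle precisely when u and v have exactly one common neighbor. The sketch below applies that test to every edge; the function name and the set-based adjacency format are assumptions of this example.

```python
def is_locally_linear(adj):
    """True if every edge of the undirected graph lies in exactly one triangle.

    `adj` maps each vertex to the set of its neighbors.
    """
    for u in adj:
        for v in adj[u]:
            # Triangles through edge uv correspond to common neighbors of u and v.
            if len(adj[u] & adj[v]) != 1:
                return False
    return True


# Two triangles sharing vertex 0: every edge is in exactly one triangle.
bowtie = {0: {1, 2, 3, 4}, 1: {0, 2}, 2: {0, 1}, 3: {0, 4}, 4: {0, 3}}
# Complete graph on four vertices: every edge is in two triangles.
k4 = {i: {j for j in range(4) if j != i} for i in range(4)}

print(is_locally_linear(bowtie))  # True
print(is_locally_linear(k4))      # False
```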