A robot is a machine—especially one programmable by a computer—capable of carrying out a complex series of actions automatically. A robot can be guided by an external control device, or the control may be embedded within. Robots may be constructed to evoke human form, but most robots are task-performing machines, designed with an emphasis on stark functionality, rather than expressive aesthetics.

An autonomous robot is a robot that acts without recourse to human control. The first autonomous robots were known as Elmer and Elsie, which were constructed in the late 1940s by W. Grey Walter. They were the first robots in history that were programmed to "think" the way biological brains do and were meant to have free will. Elmer and Elsie were often labeled as tortoises because of how they were shaped and the manner in which they moved. They were capable of phototaxis, which is movement that occurs in response to a light stimulus.
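
Phototaxis can be illustrated with a small simulation. The sketch below is not a reconstruction of Walter's machines; it assumes a hypothetical point robot with two forward-facing light sensors that steers toward whichever side reads brighter. The intensity model, the function names (`light_intensity`, `phototaxis_step`, `simulate`), and all parameter values are illustrative assumptions.

```python
import math


def light_intensity(x, y, light):
    """Assumed sensor model: brightness falls off with squared distance."""
    d2 = (x - light[0]) ** 2 + (y - light[1]) ** 2
    return 1.0 / (1.0 + d2)


def phototaxis_step(x, y, heading, light, speed=0.05, turn_gain=2.0,
                    sensor_angle=0.5, sensor_reach=0.2):
    """Advance the robot one step, turning toward the brighter sensor."""
    # Sensors sit ahead of the robot, angled left (+) and right (-).
    lx = x + sensor_reach * math.cos(heading + sensor_angle)
    ly = y + sensor_reach * math.sin(heading + sensor_angle)
    rx = x + sensor_reach * math.cos(heading - sensor_angle)
    ry = y + sensor_reach * math.sin(heading - sensor_angle)
    left = light_intensity(lx, ly, light)
    right = light_intensity(rx, ry, light)
    # Normalized brightness difference: positive means the light is to the left.
    heading += turn_gain * (left - right) / (left + right)
    x += speed * math.cos(heading)
    y += speed * math.sin(heading)
    return x, y, heading


def simulate(start=(0.0, 0.0), heading=0.0, light=(2.0, 2.0), steps=400):
    """Run the robot and return its closest approach to the light source."""
    x, y = start
    closest = math.hypot(x - light[0], y - light[1])
    for _ in range(steps):
        x, y, heading = phototaxis_step(x, y, heading, light)
        closest = min(closest, math.hypot(x - light[0], y - light[1]))
    return closest


if __name__ == "__main__":
    print(f"closest approach to the light: {simulate():.3f}")
```

With no map or plan, the robot still ends up near the light, because the steering rule keeps reducing the brightness difference between the two sensors.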

An unmanned aerial vehicle (UAV), commonly known as a drone, is an aircraft without any human pilot, crew, or passengers on board. UAVs were originally developed through the twentieth century for military missions too "dull, dirty or dangerous" for humans, and by the twenty-first, they had become essential assets to most militaries. As control technologies improved and costs fell, their use expanded to many non-military applications. These include aerial photography, precision agriculture, forest fire monitoring, river monitoring, environmental monitoring, policing and surveillance, infrastructure inspections, smuggling, product deliveries, entertainment, and drone racing.

Military robots are autonomous robots or remote-controlled mobile robots designed for military applications, from transport to search & rescue and attack.

An unmanned combat aerial vehicle (UCAV), also known as a combat drone, colloquially shortened as drone or battlefield UAV, is an unmanned aerial vehicle (UAV) that is used for intelligence, surveillance, target acquisition, and reconnaissance and carries aircraft ordnance such as missiles, ATGMs, and/or bombs in hardpoints for drone strikes. These drones are usually under real-time human control, with varying levels of autonomy. Unlike unmanned surveillance and reconnaissance aerial vehicles, UCAVs are used for both drone strikes and battlefield intelligence.

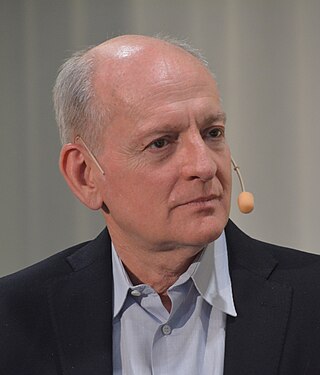

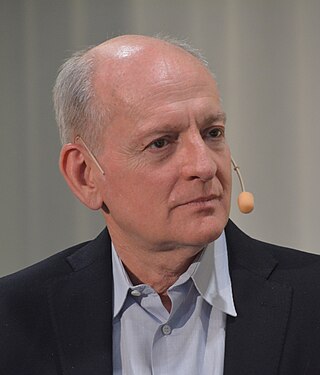

Stuart Jonathan Russell is a British computer scientist known for his contributions to artificial intelligence (AI). He is a professor of computer science at the University of California, Berkeley, and from 2008 to 2011 was an adjunct professor of neurological surgery at the University of California, San Francisco. He holds the Smith-Zadeh Chair in Engineering at the University of California, Berkeley. He founded and leads the Center for Human-Compatible Artificial Intelligence (CHAI) at UC Berkeley. Russell is the co-author, with Peter Norvig, of the authoritative textbook of the field of AI, Artificial Intelligence: A Modern Approach, which is used in more than 1,500 universities in 135 countries.

Swarm robotics is an approach to the coordination of multiple robots as a system that consists of large numbers of mostly simple physical robots. "In a robot swarm, the collective behavior of the robots results from local interactions between the robots and between the robots and the environment in which they act." The desired collective behavior is expected to emerge from these interactions rather than from central control. This approach emerged in the field of artificial swarm intelligence, as well as from biological studies of insects, ants and other fields in nature where swarm behaviour occurs.
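
A minimal sketch can show how a collective behavior arises from purely local rules. It assumes a hypothetical aggregation task in which each simulated robot senses only the neighbors within a fixed radius and moves a small step toward their average position. The function names (`neighbors`, `swarm_step`, `spread`, `simulate`), the grid layout, and the parameter values are assumptions for illustration, not a standard swarm algorithm from any particular library.

```python
import math


def neighbors(i, positions, radius):
    """Positions of the other robots within sensing radius of robot i."""
    xi, yi = positions[i]
    return [p for j, p in enumerate(positions)
            if j != i and math.hypot(p[0] - xi, p[1] - yi) <= radius]


def swarm_step(positions, radius=2.0, gain=0.1):
    """Move every robot a fraction of the way toward its local neighbors."""
    updated = []
    for i, (x, y) in enumerate(positions):
        near = neighbors(i, positions, radius)
        if near:
            cx = sum(p[0] for p in near) / len(near)
            cy = sum(p[1] for p in near) / len(near)
            x += gain * (cx - x)
            y += gain * (cy - y)
        updated.append((x, y))
    return updated


def spread(positions):
    """Largest distance from any robot to the swarm centroid."""
    cx = sum(p[0] for p in positions) / len(positions)
    cy = sum(p[1] for p in positions) / len(positions)
    return max(math.hypot(p[0] - cx, p[1] - cy) for p in positions)


def simulate(n_side=4, spacing=1.0, steps=200):
    """Start robots on a grid and return (initial spread, final spread)."""
    positions = [(i * spacing, j * spacing)
                 for i in range(n_side) for j in range(n_side)]
    start = spread(positions)
    for _ in range(steps):
        positions = swarm_step(positions)
    return start, spread(positions)


if __name__ == "__main__":
    before, after = simulate()
    print(f"spread before: {before:.3f}, after: {after:.3f}")
```

No robot knows the swarm's centroid or the positions of distant robots, yet the group as a whole contracts toward a common point.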

A sentry gun is a weapon that is automatically aimed and fired at targets that are detected by sensors. The earliest functioning military sentry guns were close-in weapon systems, point-defense weapons such as the Phalanx CIWS that are used for detecting and destroying short-range incoming missiles and enemy aircraft. They were first used exclusively on naval assets and are now also used as land-based defenses.

The Modular Advanced Armed Robotic System (MAARS) is a robot that is being developed by Qinetiq. A member of the TALON family, it will be the successor to the armed SWORDS robot. It has a different, larger chassis than the SWORDS robot, so it has little physically in common with the SWORDS and TALON.

Lethal autonomous weapons (LAWs) are a type of autonomous military system that can independently search for and engage targets based on programmed constraints and descriptions. LAWs are also known as lethal autonomous weapon systems (LAWS), autonomous weapon systems (AWS), robotic weapons or killer robots. LAWs may operate in the air, on land, on water, underwater, or in space. The autonomy of current systems as of 2018 was restricted in the sense that a human gives the final command to attack—though there are exceptions with certain "defensive" systems.

Drone warfare is a form of aerial warfare using unmanned combat aerial vehicles (UCAV) or weaponized commercial unmanned aerial vehicles (UAV). The United States, United Kingdom, Israel, China, South Korea, Iran, Italy, France, India, Pakistan, Russia, Turkey, and Poland are known to have manufactured operational UCAVs as of 2019. As of 2022, the Ukrainian enterprise Ukroboronprom and NGO group Aerorozvidka have built strike-capable drones and used them in combat.

The Campaign to Stop Killer Robots is a coalition of non-governmental organizations that seeks to pre-emptively ban lethal autonomous weapons.

An autonomous aircraft is an aircraft which flies under the control of automatic systems and needs no intervention from a human pilot. Most autonomous aircraft are unmanned aerial vehicles, or drones. However, autonomous control systems are reaching a point where several air taxis and associated regulatory regimes are being developed.

Lily was a California-based drone brand that shut down after filing for bankruptcy in 2017. It is owned by Mota Group, Inc. and headquartered in San Jose, California.

A loitering munition is a kind of aerial weapon with a built-in munition (warhead), which can loiter around the target area until a target is located; it then attacks the target by crashing into it. Loitering munitions enable faster reaction times against hidden targets that emerge for short periods without placing high-value platforms near the target area, and also allow more selective targeting as the attack can be changed midflight or aborted.

The International Committee for Robot Arms Control (ICRAC) is a "not-for-profit association committed to the peaceful use of robotics in the service of humanity and the regulation of robot weapons." It is concerned about the dangers that autonomous military robots, or lethal autonomous weapons, pose to peace and international security and to civilians in war.

A military artificial intelligence arms race is an arms race between two or more states to develop and deploy lethal autonomous weapons systems (LAWS). Since the mid-2010s, many analysts have noted the emergence of such an arms race between global superpowers for better military AI, driven by increasing geopolitical and military tensions. An AI arms race is sometimes placed in the context of an AI Cold War between the US and China.

The HAL Combat Air Teaming System (CATS) is an Indian unmanned and manned combat aircraft air teaming system being developed by Hindustan Aeronautics Limited (HAL). The system will consist of a manned fighter aircraft acting as "mothership" of the system and a set of swarming UAVs and UCAVs governed by the mothership aircraft. A twin-seated HAL Tejas is likely to be the mothership aircraft. Various other sub components of the system are currently under development and will be jointly produced by HAL, National Aerospace Laboratories (NAL), Defence Research and Development Organisation (DRDO) and Newspace Research & Technologies.