The Semantic Web, sometimes known as Web 3.0, is an extension of the World Wide Web through standards set by the World Wide Web Consortium (W3C). The goal of the Semantic Web is to make Internet data machine-readable.
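
To make "machine-readable" concrete, the sketch below stores statements as subject-predicate-object triples, the data model behind the W3C's RDF standard. It then answers a pattern query over them. Plain Python tuples stand in for an RDF library. The `http://example.org/` identifiers and the `invented`/`founded` predicates are hypothetical. Only the FOAF `name` property is a real vocabulary term.

```python
from typing import Optional

# Hypothetical namespace for this example; FOAF's "name" property is a real vocabulary term.
EX = "http://example.org/"
FOAF_NAME = "http://xmlns.com/foaf/0.1/name"

Triple = tuple[str, str, str]

# Each statement is a (subject, predicate, object) triple, as in the RDF data model.
triples: set[Triple] = {
    (EX + "timbl", FOAF_NAME, "Tim Berners-Lee"),
    (EX + "timbl", EX + "invented", EX + "WorldWideWeb"),
    (EX + "timbl", EX + "founded", EX + "W3C"),
}

def match(s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None) -> list[Triple]:
    """Return the triples matching a pattern; None acts as a wildcard."""
    return sorted(
        t for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )

# A program can answer "what did timbl invent?" without parsing prose.
for _, _, thing in match(s=EX + "timbl", p=EX + "invented"):
    print(thing)  # http://example.org/WorldWideWeb
```

A `None` argument behaves like a variable in a SPARQL pattern. Real systems would use an RDF store and SPARQL rather than a Python set.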

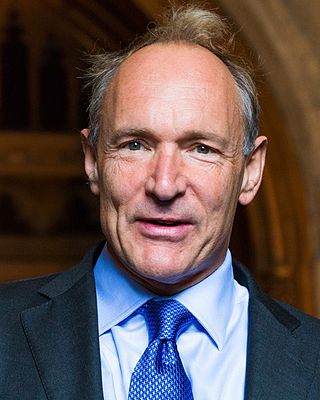

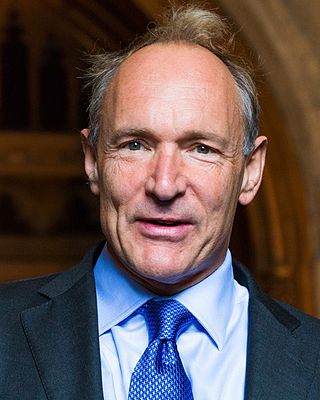

Sir Timothy John Berners-Lee, also known as TimBL, is an English computer scientist best known as the inventor of the World Wide Web, the HTML markup language, the URL system, and HTTP. He is a professorial research fellow at the University of Oxford and a professor emeritus at the Massachusetts Institute of Technology (MIT).

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by identifying patterns and trends through means such as statistical pattern learning. According to Hotho et al. (2005), three perspectives on text mining can be distinguished: information extraction, data mining, and a knowledge discovery in databases (KDD) process. Text mining usually involves structuring the input text, deriving patterns within the structured data, and finally evaluating and interpreting the output. 'High quality' in text mining usually refers to some combination of relevance, novelty, and interest. Typical text mining tasks include text categorization, text clustering, concept/entity extraction, production of granular taxonomies, sentiment analysis, document summarization, and entity relation modeling.
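
The three stages of text mining are structuring the input, deriving patterns, and interpreting the output. The sketch below walks through them using only the standard library. Tokenization with a stop list and TF-IDF weighting are one common choice for the first two stages, assumed here for illustration. The stop list and the sample reviews are made up.

```python
import math
import re
from collections import Counter

STOPWORDS = {"a", "and", "is", "the"}  # tiny illustrative stop list

def tokenize(text: str) -> list[str]:
    """Structuring: lowercase, split into words, drop stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Pattern derivation: favour terms frequent in one document but rare across the collection."""
    tokenized = [tokenize(d) for d in docs]
    # Document frequency: how many documents contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(len(docs) / df[term])
            for term, count in counts.items()
        })
    return weights

reviews = [
    "the battery life is great and the screen is great",
    "the screen cracked and the battery died",
    "great service and fast delivery",
]

# Interpretation: report the most distinctive term of each review.
for review, w in zip(reviews, tf_idf(reviews)):
    print(f"{max(w, key=w.get)!r}: {review}")
```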

Computational sociology is a branch of sociology that uses computationally intensive methods to analyze and model social phenomena. Using computer simulations, artificial intelligence, complex statistical methods, and analytic approaches like social network analysis, computational sociology develops and tests theories of complex social processes through bottom-up modeling of social interactions.
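
Bottom-up modeling specifies simple rules for individual agents and then observes the aggregate pattern that emerges from their interactions. The voter model below illustrates this; it is a classic toy model chosen here, not a method the text attributes to any particular study. Agents sit on a ring. At each step a random agent copies the opinion of a random neighbour. Local consensus domains tend to grow, even though no agent aims for consensus.

```python
import random

def simulate(opinions: list[int], steps: int, rng: random.Random) -> list[int]:
    """Voter model on a ring: each step, a random agent copies a random neighbour's opinion."""
    opinions = list(opinions)  # work on a copy
    n = len(opinions)
    for _ in range(steps):
        i = rng.randrange(n)
        neighbour = (i + rng.choice((-1, 1))) % n
        opinions[i] = opinions[neighbour]
    return opinions

def disagreements(opinions: list[int]) -> int:
    """Number of adjacent pairs on the ring that hold different opinions."""
    n = len(opinions)
    return sum(opinions[i] != opinions[(i + 1) % n] for i in range(n))

rng = random.Random(42)
start = [rng.randint(0, 1) for _ in range(100)]  # random initial opinions
end = simulate(start, 50_000, rng)
print("disagreeing pairs:", disagreements(start), "->", disagreements(end))
```

The number of disagreeing neighbour pairs is one simple macro-level measure. On a finite ring it typically falls as domains merge, and full consensus is an absorbing state: once everyone agrees, nothing changes.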

Nello Cristianini is a professor of Artificial Intelligence in the Department of Computer Science at the University of Bath.

James Alexander Hendler is an artificial intelligence researcher at Rensselaer Polytechnic Institute, United States, and one of the originators of the Semantic Web. He is a Fellow of the National Academy of Public Administration.

The ESP game is a human-based computation game developed to address the problem of creating metadata that is difficult for computers to produce, such as descriptive labels for images. The idea behind the game is to harness human computational power for a task that computers cannot perform by packaging the task as a game. It was originally conceived by Luis von Ahn of Carnegie Mellon University. Google bought a license to create its own version of the game in 2006 in order to return better search results for its online images. The licensing status of the data acquired by von Ahn's ESP game, or by the Google version, is unclear. Google's version was shut down on September 16, 2011, as part of the closure of Google Labs.
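
The game's core mechanism is commonly described as output agreement. Two players who cannot communicate each type labels for the same image, and a label is accepted only when both players enter it. Labels already collected for an image become "taboo" words that players may not use. The sketch below follows that description. The function names, the `(player, word)` stream format, and the sample data are hypothetical.

```python
from typing import Iterable, Optional

def play_round(guesses: Iterable[tuple[str, str]], taboo: set[str]) -> Optional[str]:
    """Return the first non-taboo word typed by both players, or None if they never agree.

    `guesses` is a time-ordered stream of (player, word) pairs, player being "A" or "B".
    """
    typed: dict[str, set[str]] = {"A": set(), "B": set()}
    for player, word in guesses:
        word = word.strip().lower()
        if word in taboo:
            continue  # taboo words are rejected outright
        other = "B" if player == "A" else "A"
        if word in typed[other]:
            return word  # output agreement: both players produced this label
        typed[player].add(word)
    return None

def label_image(labels: dict[str, set[str]], image: str,
                guesses: Iterable[tuple[str, str]]) -> Optional[str]:
    """Run one round for an image; an agreed label is stored and becomes taboo next time."""
    taboo = labels.setdefault(image, set())
    agreed = play_round(guesses, taboo)
    if agreed is not None:
        taboo.add(agreed)
    return agreed

labels = {"dog.jpg": {"dog"}}
print(label_image(labels, "dog.jpg",
                  [("A", "dog"), ("B", "puppy"), ("A", "grass"), ("B", "Grass")]))
```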

Semantic publishing on the Web, or semantic web publishing, refers to publishing information on the web as documents accompanied by semantic markup. Semantic publication provides a way for computers to understand the structure and even the meaning of the published information, making information search and data integration more efficient.
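
One widely used form of semantic markup is a JSON-LD block that uses the schema.org vocabulary, embedded in a page alongside the human-readable text. The sketch below builds such a block with Python's `json` module. The article details are placeholders. JSON-LD with schema.org is only one option; RDFa and Microdata are alternatives.

```python
import json

def article_jsonld(title: str, authors: list[str], date: str) -> str:
    """Describe an article with schema.org terms, serialised as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "ScholarlyArticle",
        "headline": title,
        "author": [{"@type": "Person", "name": name} for name in authors],
        "datePublished": date,  # ISO 8601 date string
    }
    return json.dumps(data, indent=2)

def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD for embedding in an HTML page next to the human-readable content."""
    # "<\/" is still valid JSON but cannot prematurely close the <script> element.
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'

print(script_tag(article_jsonld("An Example Paper", ["A. Author", "B. Author"], "2024-01-15")))
```

A crawler that understands schema.org can read the title, authors, and date from this block without interpreting the page layout.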

The Web Science Trust (WST) is a UK Charitable Trust with the aim of supporting the global development of Web science. It was founded in 2006 as a joint effort between MIT and the University of Southampton to formalise the social and technical aspects of the World Wide Web. The trust coordinates a set of international "WSTNet Laboratories" that include academic research groups in the emerging area of Web science.

Web science is an emerging interdisciplinary field concerned with the study of large-scale socio-technical systems, particularly the World Wide Web. It considers the relationship between people and technology, the ways that society and technology co-constitute one another, and the impact of this co-constitution on broader society. Web science combines research from disciplines as diverse as sociology, computer science, economics, and mathematics.

Frames are an artificial intelligence data structure used to divide knowledge into substructures by representing "stereotyped situations". They were proposed by Marvin Minsky in his 1974 article "A Framework for Representing Knowledge". Frames are the primary data structure used in artificial intelligence frame languages; they are stored as ontologies of sets.
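
Frames are often illustrated as named collections of slots with default values. A frame inherits the slots of a more general frame it is linked to through an "is-a" link, and it can override them. The sketch below shows that idea. The `Frame` class, the slot names, and the restaurant example are hypothetical. Real frame languages add features, such as procedural attachments, that are omitted here.

```python
from dataclasses import dataclass, field
from typing import Any, Optional

@dataclass
class Frame:
    name: str
    parent: Optional["Frame"] = None  # "is-a" link to a more general frame
    slots: dict[str, Any] = field(default_factory=dict)

    def get(self, slot: str) -> Any:
        """Look up a slot locally, then fall back to defaults inherited along is-a links."""
        frame: Optional[Frame] = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        raise KeyError(slot)

# A stereotyped situation with default expectations...
restaurant = Frame("Restaurant", slots={"has_menu": True, "payment": "after eating"})
# ...specialised by a more specific frame that overrides one default...
fast_food = Frame("FastFoodRestaurant", parent=restaurant, slots={"payment": "before eating"})
# ...and instantiated for one particular visit.
visit = Frame("visit-to-joes", parent=fast_food, slots={"location": "Main Street"})

print(visit.get("payment"))   # overridden default from FastFoodRestaurant
print(visit.get("has_menu"))  # inherited from Restaurant
```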

Semantic HTML is the use of HTML markup to reinforce the semantics, or meaning, of the information in web pages and web applications rather than merely to define its presentation or look. Semantic HTML is processed by traditional web browsers as well as by many other user agents. CSS is used to suggest its presentation to human users.
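
Semantic elements describe what content is rather than how it looks, so user agents other than browsers can use them directly. The sketch below uses Python's standard `html.parser` to pull a page's landmark structure by looking only at a few semantic elements. The chosen tag set and the sample page are illustrative assumptions.

```python
from html.parser import HTMLParser

SEMANTIC_TAGS = {"header", "nav", "main", "article", "section", "aside", "footer"}

class LandmarkParser(HTMLParser):
    """Collect the semantic landmark elements of a page, ignoring generic containers."""

    def __init__(self) -> None:
        super().__init__()
        self.landmarks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SEMANTIC_TAGS:
            self.landmarks.append(tag)

page = """
<header><h1>Semantic HTML</h1></header>
<nav><a href="/">Home</a></nav>
<main>
  <article><h2>Post</h2><p>Body text.</p></article>
</main>
<div class="sidebar">A div says nothing about its role</div>
<footer>Contact</footer>
"""

parser = LandmarkParser()
parser.feed(page)
print(parser.landmarks)  # ['header', 'nav', 'main', 'article', 'footer']
```

The `div` is skipped because only its class name, which carries no standard meaning, hints at its role.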

Dame Wendy Hall is a British computer scientist. She is Regius Professor of Computer Science at the University of Southampton.

Sir Nigel Richard Shadbolt is Principal of Jesus College, Oxford, and Professorial Research Fellow in the Department of Computer Science, University of Oxford. He is chairman of the Open Data Institute, which he co-founded with Tim Berners-Lee. He is also a visiting professor in the School of Electronics and Computer Science at the University of Southampton. Shadbolt is an interdisciplinary researcher, policy expert and commentator. His research focuses on understanding how intelligent behaviour is embodied and emerges in humans, in machines and, most recently, on the Web; it has contributed to the fields of psychology, cognitive science, computational neuroscience, artificial intelligence (AI), computer science and the emerging field of Web science.

David Charles De Roure is an English computer scientist who is a professor of e-Research at the University of Oxford, where he is responsible for Digital Humanities in The Oxford Research Centre in the Humanities (TORCH), and is a Turing Fellow at The Alan Turing Institute. He is a supernumerary Fellow of Wolfson College, Oxford, and Oxford Martin School Senior Alumni Fellow.

Computational social science is an interdisciplinary academic sub-field concerned with computational approaches to the social sciences. This means that computers are used to model, simulate, and analyze social phenomena. It has been applied in areas such as computational economics, computational sociology, computational media analysis, cliodynamics, culturomics, and nonprofit studies. It focuses on investigating social and behavioral relationships and interactions using data science approaches, network analysis, social simulation and studies using interactive systems.
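
Network analysis often starts from simple measures such as degree centrality: the fraction of the other people in the network that each person is directly tied to. The standard-library sketch below computes it for a made-up friendship network. The names and edges are hypothetical.

```python
from collections import defaultdict

def degree_centrality(edges: list[tuple[str, str]]) -> dict[str, float]:
    """Fraction of the other nodes each node is directly tied to (undirected graph)."""
    neighbours: dict[str, set[str]] = defaultdict(set)
    for a, b in edges:
        if a == b:
            continue  # ignore self-loops
        neighbours[a].add(b)
        neighbours[b].add(a)
    n = len(neighbours)
    return {node: len(adj) / (n - 1) for node, adj in neighbours.items()}

friendships = [("ana", "ben"), ("ana", "cai"), ("ana", "dev"), ("ben", "cai"), ("dev", "eli")]
centrality = degree_centrality(friendships)
for person, score in sorted(centrality.items(), key=lambda kv: -kv[1]):
    print(f"{person}: {score:.2f}")
```

Here "ana" scores highest (0.75), being tied to three of the four other people. Richer measures such as betweenness centrality build on the same graph representation.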

Jenifer Fays Alys Tennison is a British software engineer and consultant who co-chairs the data governance working group within the Global Partnership on Artificial Intelligence (GPAI). She also serves on the board of directors of Creative Commons, the Global Partnership for Sustainable Development Data (GPSDD) and the Information Law and Policy Centre of the School of Advanced Study (SAS) at the University of London. She was previously Chief Executive Officer (CEO) of the Open Data Institute (ODI).

In natural language processing (NLP), a word embedding is a representation of a word, used in text analysis. Typically, the representation is a real-valued vector that encodes the meaning of the word in such a way that words closer together in the vector space are expected to be similar in meaning. Word embeddings can be obtained using language modeling and feature learning techniques, where words or phrases from the vocabulary are mapped to vectors of real numbers.
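
Closeness in the vector space is usually measured with cosine similarity, which compares the directions of two vectors. The sketch below uses tiny hand-made 3-dimensional vectors purely for illustration. Real embeddings are learned from large corpora (for example by word2vec or GloVe) and typically have hundreds of dimensions.

```python
import math

def cosine(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Hypothetical toy embeddings; the dimensions carry no real meaning.
embeddings = {
    "king":  [0.8, 0.65, 0.1],
    "queen": [0.75, 0.7, 0.15],
    "apple": [0.1, 0.2, 0.9],
}

def most_similar(word: str) -> str:
    """Return the other vocabulary word whose vector is closest by cosine similarity."""
    return max((w for w in embeddings if w != word),
               key=lambda w: cosine(embeddings[word], embeddings[w]))

print(most_similar("king"))  # queen
```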

Health Web Science (HWS) is a sub-discipline of Web Science that examines the interplay between health sciences, health and well-being, and the World Wide Web. It assumes that each domain influences the others. HWS thus complements and overlaps with Medicine 2.0. Research has uncovered emergent properties that arise as individuals interact with each other, with healthcare providers and with the Web itself.

Daniel J. Weitzner is the director of the MIT Internet Policy Research Initiative and principal research scientist at the Computer Science and Artificial Intelligence Laboratory (CSAIL). He teaches Internet public policy in MIT's Computer Science Department. His research includes development of accountable systems architectures to enable the Web to be more responsive to policy requirements.