A Markov chain or Markov process is a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event. Informally, this may be thought of as, "What happens next depends only on the state of affairs now." A countably infinite sequence, in which the chain moves state at discrete time steps, gives a discrete-time Markov chain (DTMC). A continuous-time process is called a continuous-time Markov chain (CTMC). It is named after the Russian mathematician Andrey Markov.

Automata theory is the study of abstract machines and automata, as well as the computational problems that can be solved using them. It is a theory in theoretical computer science with close connections to mathematical logic. The word automata comes from the Greek word αὐτόματος, which means "self-acting, self-willed, self-moving". An automaton is an abstract self-propelled computing device which follows a predetermined sequence of operations automatically. An automaton with a finite number of states is called a finite automaton (FA) or finite-state machine (FSM). The figure on the right illustrates a finite-state machine, which is a well-known type of automaton. This automaton consists of states and transitions. As the automaton sees a symbol of input, it makes a transition to another state, according to its transition function, which takes the previous state and current input symbol as its arguments.

In physics, a Langevin equation is a stochastic differential equation describing how a system evolves when subjected to a combination of deterministic and fluctuating ("random") forces. The dependent variables in a Langevin equation typically are collective (macroscopic) variables changing only slowly in comparison to the other (microscopic) variables of the system. The fast (microscopic) variables are responsible for the stochastic nature of the Langevin equation. One application is to Brownian motion, which models the fluctuating motion of a small particle in a fluid.

In mathematics, a stochastic matrix is a square matrix used to describe the transitions of a Markov chain. Each of its entries is a nonnegative real number representing a probability. It is also called a probability matrix, transition matrix, substitution matrix, or Markov matrix. The stochastic matrix was first developed by Andrey Markov at the beginning of the 20th century, and has found use throughout a wide variety of scientific fields, including probability theory, statistics, mathematical finance and linear algebra, as well as computer science and population genetics. There are several different definitions and types of stochastic matrices:

Grammar theory to model symbol strings originated from work in computational linguistics aiming to understand the structure of natural languages. Probabilistic context free grammars (PCFGs) have been applied in probabilistic modeling of RNA structures almost 40 years after they were introduced in computational linguistics.

In probability theory and statistics, a Gaussian process is a stochastic process, such that every finite collection of those random variables has a multivariate normal distribution. The distribution of a Gaussian process is the joint distribution of all those random variables, and as such, it is a distribution over functions with a continuous domain, e.g. time or space.

In probability and statistics, an exponential family is a parametric set of probability distributions of a certain form, specified below. This special form is chosen for mathematical convenience, including the enabling of the user to calculate expectations, covariances using differentiation based on some useful algebraic properties, as well as for generality, as exponential families are in a sense very natural sets of distributions to consider. The term exponential class is sometimes used in place of "exponential family", or the older term Koopman–Darmois family. Sometimes loosely referred to as "the" exponential family, this class of distributions is distinct because they all possess a variety of desirable properties, most importantly the existence of a sufficient statistic.

In mathematics, a Markov decision process (MDP) is a discrete-time stochastic control process. It provides a mathematical framework for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker. MDPs are useful for studying optimization problems solved via dynamic programming. MDPs were known at least as early as the 1950s; a core body of research on Markov decision processes resulted from Ronald Howard's 1960 book, Dynamic Programming and Markov Processes. They are used in many disciplines, including robotics, automatic control, economics and manufacturing. The name of MDPs comes from the Russian mathematician Andrey Markov as they are an extension of Markov chains.

In mathematics, the Ornstein–Uhlenbeck process is a stochastic process with applications in financial mathematics and the physical sciences. Its original application in physics was as a model for the velocity of a massive Brownian particle under the influence of friction. It is named after Leonard Ornstein and George Eugene Uhlenbeck.

In quantum computing, quantum finite automata (QFA) or quantum state machines are a quantum analog of probabilistic automata or a Markov decision process. They provide a mathematical abstraction of real-world quantum computers. Several types of automata may be defined, including measure-once and measure-many automata. Quantum finite automata can also be understood as the quantization of subshifts of finite type, or as a quantization of Markov chains. QFAs are, in turn, special cases of geometric finite automata or topological finite automata.

In mathematics and computer science, the probabilistic automaton (PA) is a generalization of the nondeterministic finite automaton; it includes the probability of a given transition into the transition function, turning it into a transition matrix. Thus, the probabilistic automaton also generalizes the concepts of a Markov chain and of a subshift of finite type. The languages recognized by probabilistic automata are called stochastic languages; these include the regular languages as a subset. The number of stochastic languages is uncountable.

Mixed logit is a fully general statistical model for examining discrete choices. It overcomes three important limitations of the standard logit model by allowing for random taste variation across choosers, unrestricted substitution patterns across choices, and correlation in unobserved factors over time. Mixed logit can choose any distribution for the random coefficients, unlike probit which is limited to the normal distribution. It is called "mixed logit" because the choice probability is a mixture of logits, with as the mixing distribution. It has been shown that a mixed logit model can approximate to any degree of accuracy any true random utility model of discrete choice, given appropriate specification of variables and the coefficient distribution.

In filtering theory the Kushner equation is an equation for the conditional probability density of the state of a stochastic non-linear dynamical system, given noisy measurements of the state. It therefore provides the solution of the nonlinear filtering problem in estimation theory. The equation is sometimes referred to as the Stratonovich–Kushnerequation. However, the correct equation in terms of Itō calculus was first derived by Kushner although a more heuristic Stratonovich version of it appeared already in Stratonovich's works in late fifties. However, the derivation in terms of Itō calculus is due to Richard Bucy.

The Generalized Additive Model for Location, Scale and Shape (GAMLSS) is an approach to statistical modelling and learning. GAMLSS is a modern distribution-based approach to (semiparametric) regression. A parametric distribution is assumed for the response (target) variable but the parameters of this distribution can vary according to explanatory variables using linear, nonlinear or smooth functions. In machine learning parlance, GAMLSS is a form of supervised machine learning.

In probability theory, reflected Brownian motion is a Wiener process in a space with reflecting boundaries. In the physical literature, this process describes diffusion in a confined space and it is often called confined Brownian motion. For example it can describe the motion of hard spheres in water confined between two walls.

In probability theory, an interacting particle system (IPS) is a stochastic process on some configuration space given by a site space, a countably-infinite-order graph and a local state space, a compact metric space . More precisely IPS are continuous-time Markov jump processes describing the collective behavior of stochastically interacting components. IPS are the continuous-time analogue of stochastic cellular automata.

Toom's rule is a 2-dimensional cellular automaton model created by Andrei Toom in 1978. It is a modification of the 2-dimensional majority vote rule and can have more robust memory when considered as a thermal physical system in statistical field theory. The model also has a noise-dependent phase transition.

In queueing theory, a discipline within the mathematical theory of probability, the G/M/1 queue represents the queue length in a system where interarrival times have a general distribution and service times for each job have an exponential distribution. The system is described in Kendall's notation where the G denotes a general distribution, M the exponential distribution for service times and the 1 that the model has a single server.

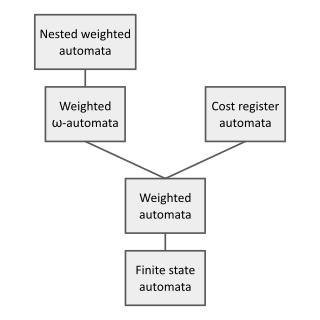

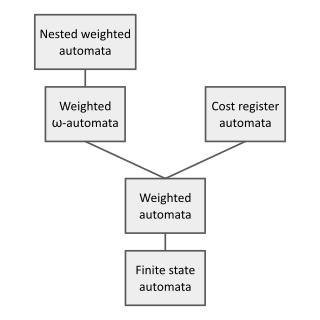

In theoretical computer science and formal language theory, a weighted automaton or weighted finite-state machine is a generalization of a finite-state machine in which the edges have weights, for example real numbers or integers. Finite-state machines are only capable of answering decision problems; they take as input a string and produce a Boolean output, i.e. either "accept" or "reject". In contrast, weighted automata produce a quantitative output, for example a count of how many answers are possible on a given input string, or a probability of how likely the input string is according to a probability distribution. They are one of the simplest studied models of quantitative automata.

Mean-field particle methods are a broad class of interacting type Monte Carlo algorithms for simulating from a sequence of probability distributions satisfying a nonlinear evolution equation. These flows of probability measures can always be interpreted as the distributions of the random states of a Markov process whose transition probabilities depends on the distributions of the current random states. A natural way to simulate these sophisticated nonlinear Markov processes is to sample a large number of copies of the process, replacing in the evolution equation the unknown distributions of the random states by the sampled empirical measures. In contrast with traditional Monte Carlo and Markov chain Monte Carlo methods these mean-field particle techniques rely on sequential interacting samples. The terminology mean-field reflects the fact that each of the samples interacts with the empirical measures of the process. When the size of the system tends to infinity, these random empirical measures converge to the deterministic distribution of the random states of the nonlinear Markov chain, so that the statistical interaction between particles vanishes. In other words, starting with a chaotic configuration based on independent copies of initial state of the nonlinear Markov chain model, the chaos propagates at any time horizon as the size the system tends to infinity; that is, finite blocks of particles reduces to independent copies of the nonlinear Markov process. This result is called the propagation of chaos property. The terminology "propagation of chaos" originated with the work of Mark Kac in 1976 on a colliding mean-field kinetic gas model.