Biography

Geman was born and raised in Chicago. He was educated at the University of Michigan (B.S., Physics, 1971), Dartmouth Medical College (M.S., Neurophysiology, 1973), and the Massachusetts Institute of Technology (Ph.D., Applied Mathematics, 1977).

Since 1977, he has been a member of the faculty at Brown University, where he has worked in the Pattern Theory group and is currently the James Manning Professor of Applied Mathematics. He has received many honors and awards, including selection as a Presidential Young Investigator and as an ISI Highly Cited Researcher. He is an elected member of the International Statistical Institute and a fellow of the Institute of Mathematical Statistics and of the American Mathematical Society.[7] He was elected to the US National Academy of Sciences in 2011.

A hidden Markov model (HMM) is a statistical Markov model in which the system being modeled is assumed to be a Markov process, call it $X$, with unobservable ("hidden") states. As part of the definition, an HMM requires that there be an observable process $Y$ whose outcomes are "influenced" by the outcomes of $X$ in a known way. Since $X$ cannot be observed directly, the goal is to learn about $X$ by observing $Y$. An HMM has the additional requirement that the outcome of $Y$ at time $t = t_0$ must be "influenced" exclusively by the outcome of $X$ at $t = t_0$, and that the outcomes of $X$ and $Y$ at $t < t_0$ must not affect the outcome of $Y$ at $t = t_0$.
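As a rough illustration of learning about the hidden process from the observed one, the sketch below runs the forward algorithm on a toy two-state model. The state names, observation names, and all probabilities are illustrative assumptions, not values taken from any source.

```python
# Hypothetical two-state HMM; every number here is an illustrative assumption.
states = ["Rainy", "Sunny"]
start_prob = {"Rainy": 0.6, "Sunny": 0.4}
trans_prob = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
emit_prob = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def forward(observations):
    """Return P(observations) and the posterior over the final hidden state."""
    # alpha[s] = P(observations so far, current hidden state = s)
    alpha = {s: start_prob[s] * emit_prob[s][observations[0]] for s in states}
    for obs in observations[1:]:
        # Each observation depends only on the hidden state at the same time step.
        alpha = {
            s: emit_prob[s][obs] * sum(alpha[r] * trans_prob[r][s] for r in states)
            for s in states
        }
    likelihood = sum(alpha.values())
    posterior = {s: alpha[s] / likelihood for s in states}
    return likelihood, posterior


likelihood, posterior = forward(["walk", "shop", "clean"])
print(likelihood, posterior)
```

The returned posterior is the belief about the last hidden state given everything observed, which is one common way of "learning about $X$ by observing $Y$".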

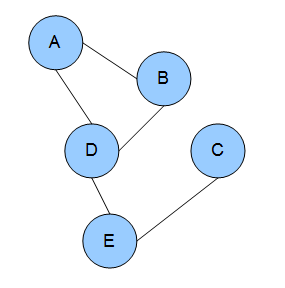

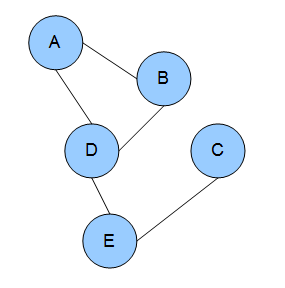

A Bayesian network is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases.
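The disease and symptom example can be sketched with a tiny network queried by brute-force enumeration. The structure (flu and cold as causes, fever and cough as symptoms) and every probability below are hypothetical, chosen only to show the computation.

```python
from itertools import product

# Hypothetical network: Flu -> Fever, Flu -> Cough, Cold -> Cough.
# All probabilities are illustrative assumptions.
P_FLU = 0.05
P_COLD = 0.20
P_FEVER_GIVEN_FLU = {True: 0.90, False: 0.05}
P_COUGH_GIVEN = {  # keyed by (flu, cold)
    (True, True): 0.95,
    (True, False): 0.80,
    (False, True): 0.60,
    (False, False): 0.05,
}


def joint(flu, cold, fever, cough):
    """Joint probability, factorised along the edges of the DAG."""
    p = (P_FLU if flu else 1 - P_FLU) * (P_COLD if cold else 1 - P_COLD)
    p_fever = P_FEVER_GIVEN_FLU[flu]
    p *= p_fever if fever else 1 - p_fever
    p_cough = P_COUGH_GIVEN[(flu, cold)]
    p *= p_cough if cough else 1 - p_cough
    return p


def posterior_flu(fever, cough):
    """P(flu | fever, cough), summing the unobserved cold variable out."""
    numerator = sum(joint(True, cold, fever, cough) for cold in (True, False))
    evidence = sum(
        joint(flu, cold, fever, cough)
        for flu, cold in product((True, False), repeat=2)
    )
    return numerator / evidence


print(posterior_flu(fever=True, cough=True))
print(posterior_flu(fever=False, cough=False))
```

Enumeration is exponential in the number of variables, so it is only practical for very small networks like this one.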

In statistics, Markov chain Monte Carlo (MCMC) methods comprise a class of algorithms for sampling from a probability distribution. By constructing a Markov chain that has the desired distribution as its equilibrium distribution, one can obtain a sample of the desired distribution by recording states from the chain. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Various algorithms exist for constructing chains, including the Metropolis–Hastings algorithm.
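A minimal sketch of the idea, assuming a random-walk Metropolis sampler (the symmetric-proposal special case of Metropolis–Hastings) and a standard normal target. The function names, step size, and burn-in length are choices made for this illustration.

```python
import math
import random


def log_standard_normal(x):
    """Log density of N(0, 1), up to an additive constant."""
    return -0.5 * x * x


def metropolis_hastings(log_target, n_samples, step=1.0, x0=0.0, seed=0):
    """Random-walk Metropolis: propose x + Gaussian noise, then accept or reject."""
    rng = random.Random(seed)
    x = x0
    log_p = log_target(x)
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        log_p_new = log_target(proposal)
        # The proposal is symmetric, so the acceptance probability
        # reduces to min(1, target(proposal) / target(x)).
        if rng.random() < math.exp(min(0.0, log_p_new - log_p)):
            x, log_p = proposal, log_p_new
        samples.append(x)  # a rejected proposal repeats the current state
    return samples


samples = metropolis_hastings(log_standard_normal, n_samples=20000)
kept = samples[1000:]  # discard early states recorded before the chain settles
mean = sum(kept) / len(kept)
variance = sum((s - mean) ** 2 for s in kept) / len(kept)
print(mean, variance)
```

With more recorded steps, the sample mean and variance should move closer to the target values of 0 and 1.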

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. They are commonly used in probability theory, statistics—particularly Bayesian statistics—and machine learning.

In statistics, Gibbs sampling or a Gibbs sampler is a Markov chain Monte Carlo (MCMC) algorithm for obtaining a sequence of observations that are approximately drawn from a specified multivariate probability distribution when direct sampling is difficult. This sequence can be used to approximate the joint distribution; to approximate the marginal distribution of one of the variables, or of some subset of the variables; or to compute an integral. Typically, some of the variables correspond to observations whose values are known, and hence do not need to be sampled.
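As a small sketch, assume the target is a bivariate normal with zero means, unit variances, and correlation `rho`. Each full conditional is then itself normal, so the sampler can alternate between drawing each coordinate given the other. The function name and parameter values are illustrative.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho."""
    rng = random.Random(seed)
    # For this target, x | y ~ N(rho * y, 1 - rho^2), and symmetrically for y | x.
    cond_sd = math.sqrt(1.0 - rho * rho)
    x, y = 0.0, 0.0
    samples = []
    for _ in range(n_samples):
        x = rng.gauss(rho * y, cond_sd)  # draw x from its full conditional
        y = rng.gauss(rho * x, cond_sd)  # draw y using the freshly updated x
        samples.append((x, y))
    return samples


draws = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
mean_x = sum(x for x, _ in draws) / len(draws)
mean_y = sum(y for _, y in draws) / len(draws)
cov = sum((x - mean_x) * (y - mean_y) for x, y in draws) / len(draws)
var_x = sum((x - mean_x) ** 2 for x, _ in draws) / len(draws)
var_y = sum((y - mean_y) ** 2 for _, y in draws) / len(draws)
print(cov / math.sqrt(var_x * var_y))  # empirical correlation, expected near rho
```

Keeping only the `x` values from `draws` gives an approximate sample from the marginal distribution of that variable.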

A Boltzmann machine is a stochastic spin-glass model with an external field, i.e., a Sherrington–Kirkpatrick model, which is a stochastic Ising model. It is a statistical physics technique applied in the context of cognitive science. It is also classified as a Markov random field.
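For reference, the energy of a configuration is usually written in the following form. The notation is an assumption of this sketch: $s_i \in \{0, 1\}$ are unit states, $w_{ij}$ are symmetric connection weights, $\theta_i$ are biases (the external field), and $T$ is a temperature.

```latex
E(s) = -\Bigl( \sum_{i<j} w_{ij}\, s_i s_j + \sum_i \theta_i s_i \Bigr),
\qquad
P(s) = \frac{e^{-E(s)/T}}{\sum_{s'} e^{-E(s')/T}},
\qquad
P(s_i = 1 \mid s_{-i}) = \frac{1}{1 + \exp\!\bigl(-\tfrac{1}{T}\bigl(\sum_{j \neq i} w_{ij} s_j + \theta_i\bigr)\bigr)}
```

The last expression is the stochastic update rule for a single unit given all the others, which is what makes the model a natural fit for Gibbs sampling.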

In the domain of physics and probability, a Markov random field (MRF), Markov network or undirected graphical model is a set of random variables having a Markov property described by an undirected graph. In other words, a random field is said to be a Markov random field if it satisfies Markov properties. The concept originates from the Sherrington–Kirkpatrick model.

Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning and used for structured prediction. Whereas a classifier predicts a label for a single sample without considering "neighbouring" samples, a CRF can take context into account. To do so, the predictions are modelled as a graphical model, which represents the presence of dependencies between the predictions. What kind of graph is used depends on the application. For example, in natural language processing, "linear chain" CRFs are popular, for which each prediction is dependent only on its immediate neighbours. In image processing, the graph typically connects locations to nearby and/or similar locations to enforce that they receive similar predictions.
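The contrast between independent classification and a linear-chain model can be sketched with Viterbi decoding over hand-picked scores. The scores below are hypothetical stand-ins for what a trained CRF would produce, and training is not shown.

```python
def viterbi(unary, pairwise):
    """Highest-scoring label sequence for a linear chain.

    unary[t][y]    : score of label y at position t
    pairwise[a][b] : score of label a being followed by label b
    """
    n_labels = len(unary[0])
    score = list(unary[0])
    back = []
    for t in range(1, len(unary)):
        new_score, pointers = [], []
        for y in range(n_labels):
            best_prev = max(range(n_labels), key=lambda a: score[a] + pairwise[a][y])
            pointers.append(best_prev)
            new_score.append(score[best_prev] + pairwise[best_prev][y] + unary[t][y])
        score = new_score
        back.append(pointers)
    # Trace the best path backwards from the best final label.
    y = max(range(n_labels), key=lambda a: score[a])
    path = [y]
    for pointers in reversed(back):
        y = pointers[y]
        path.append(y)
    return path[::-1]


# Hypothetical scores: the middle position weakly prefers label 1,
# but neighbouring labels are rewarded for agreeing.
unary = [[2.0, 0.0], [0.4, 0.6], [2.0, 0.0]]
pairwise = [[1.0, -1.0], [-1.0, 1.0]]

independent = [max(range(2), key=lambda y: u[y]) for u in unary]
chain = viterbi(unary, pairwise)
print(independent)  # [0, 1, 0]
print(chain)        # [0, 0, 0]
```

The per-position classifier flips the middle label, while the chain model keeps it consistent with its neighbours because the pairwise scores outweigh the weak local preference.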

As applied in the field of computer vision, graph cut optimization can be employed to efficiently solve a wide variety of low-level computer vision problems, such as image smoothing, the stereo correspondence problem, image segmentation, object co-segmentation, and many other computer vision problems that can be formulated in terms of energy minimization. Many of these energy minimization problems can be approximated by solving a maximum flow problem in a graph. Under most formulations of such problems in computer vision, the minimum energy solution corresponds to the maximum a posteriori estimate of a solution. Although many computer vision algorithms involve cutting a graph, the term "graph cuts" is applied specifically to those models which employ a max-flow/min-cut optimization.
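The link between energy minimization and max-flow/min-cut can be sketched on a one-dimensional "image". The example assumes a binary labelling energy with a data cost per pixel plus a constant penalty for each pair of neighbours with different labels. The pixel values, the 0 to 10 intensity scale, and the penalty are illustrative, and the max-flow routine is a plain Edmonds–Karp implementation rather than any specialised vision solver.

```python
from collections import deque


def max_flow_min_cut(capacity, source, sink):
    """Edmonds-Karp max flow. Returns (flow value, nodes on the source side of a min cut)."""
    residual = {u: dict(nbrs) for u, nbrs in capacity.items()}
    for u, nbrs in capacity.items():
        for v in nbrs:
            residual.setdefault(v, {}).setdefault(u, 0)  # reverse edges start at zero
    flow = 0
    while True:
        # Breadth-first search for an augmenting path in the residual graph.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow, set(parent)  # everything still reachable from the source
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


def segment(pixels, smoothness):
    """Binary labelling of a 1-D signal with intensities in 0..10."""
    capacity = {"s": {}, "t": {}}
    for i, value in enumerate(pixels):
        capacity["s"][i] = 10 - value            # cost paid if pixel i gets label 1
        capacity.setdefault(i, {})["t"] = value  # cost paid if pixel i gets label 0
    for i in range(len(pixels) - 1):
        capacity[i][i + 1] = smoothness          # cost paid if neighbours disagree
        capacity[i + 1][i] = smoothness
    energy, source_side = max_flow_min_cut(capacity, "s", "t")
    labels = [0 if i in source_side else 1 for i in range(len(pixels))]
    return energy, labels


energy, labels = segment([1, 6, 2, 8, 9], smoothness=3)
print(energy, labels)  # 15 [0, 0, 0, 1, 1]
```

The second pixel would be labelled 1 on its own data cost, but the smoothness term makes it cheaper to keep it with its neighbours. The value of the minimum cut equals the minimum energy.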

Donald Jay Geman is an American applied mathematician and a leading researcher in the field of machine learning and pattern recognition. He and his brother, Stuart Geman, are very well known for proposing the Gibbs sampler and for the first proof of the convergence of the simulated annealing algorithm, in an article that became a highly cited reference in engineering. He is a professor at the Johns Hopkins University and simultaneously a visiting professor at École Normale Supérieure de Cachan.

Probabilistic programming (PP) is a programming paradigm in which probabilistic models are specified and inference for these models is performed automatically. It represents an attempt to unify probabilistic modeling and traditional general purpose programming in order to make the former easier and more widely applicable. It can be used to create systems that help make decisions in the face of uncertainty.

Stan is a probabilistic programming language for statistical inference written in C++. The Stan language is used to specify a (Bayesian) statistical model with an imperative program calculating the log probability density function.

Bruce Edward Hajek is a Professor in the Coordinated Science Laboratory, the head of the Department of Electrical and Computer Engineering, and the Leonard C. and Mary Lou Hoeft Chair in Engineering at the University of Illinois at Urbana-Champaign. He does research in communication networking, auction theory, stochastic analysis, combinatorial optimization, machine learning, information theory, and bioinformatics.

Michael Ira Miller is an American-born biomedical engineer and data scientist, and the Bessie Darling Massey Professor and Director of the Johns Hopkins University Department of Biomedical Engineering. He worked with Ulf Grenander in the field of Computational Anatomy as it pertains to neuroscience, specializing in mapping the brain under various states of health and disease by applying data derived from medical imaging. Miller is the director of the Johns Hopkins Center for Imaging Science, Whiting School of Engineering and codirector of Johns Hopkins Kavli Neuroscience Discovery Institute. Miller is also a Johns Hopkins University Gilman Scholar.

The following outline is provided as an overview of and topical guide to machine learning. Machine learning is a subfield of soft computing within computer science that evolved from the study of pattern recognition and computational learning theory in artificial intelligence. In 1959, Arthur Samuel defined machine learning as a "field of study that gives computers the ability to learn without being explicitly programmed". Machine learning explores the study and construction of algorithms that can learn from and make predictions on data. Such algorithms operate by building a model from an example training set of input observations in order to make data-driven predictions or decisions expressed as outputs, rather than following strictly static program instructions.

Song-Chun Zhu is a Chinese computer scientist and applied mathematician known for his work in computer vision, cognitive artificial intelligence and robotics. Zhu currently works at Peking University and was previously a professor in the Departments of Statistics and Computer Science at the University of California, Los Angeles. Zhu also previously served as Director of the UCLA Center for Vision, Cognition, Learning and Autonomy (VCLA).

In network theory, collective classification is the simultaneous prediction of the labels for multiple objects, where each label is predicted using information about the object's observed features, the observed features and labels of its neighbors, and the unobserved labels of its neighbors. Collective classification problems are defined in terms of networks of random variables, where the network structure determines the relationship between the random variables. Inference is performed on multiple random variables simultaneously, typically by propagating information between nodes in the network to perform approximate inference. Approaches that use collective classification can make use of relational information when performing inference. Examples of collective classification include predicting attributes of individuals in a social network, classifying webpages in the World Wide Web, and inferring the research area of a paper in a scientific publication dataset.
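A heavily simplified sketch of propagating information between nodes follows. It uses only neighbour labels (iterative majority voting) and ignores node features, so it is closer to label propagation than to a full collective classification method. The paper names, research areas, and citation links are hypothetical.

```python
from collections import Counter


def propagate_labels(neighbors, observed, max_iters=20):
    """Iteratively assign each unlabeled node the majority label of its neighbours."""
    labels = dict(observed)
    for _ in range(max_iters):
        changed = False
        for node in sorted(neighbors):
            if node in observed:
                continue  # observed labels stay fixed
            votes = Counter(labels[n] for n in neighbors[node] if n in labels)
            if not votes:
                continue  # no labelled neighbours yet
            # Ties are broken alphabetically so the result is deterministic.
            best = max(sorted(votes), key=lambda lab: votes[lab])
            if labels.get(node) != best:
                labels[node] = best
                changed = True
        if not changed:
            break
    return labels


# Hypothetical citation graph: two clusters joined by the p3-p4 link.
neighbors = {
    "p1": ["p2", "p3"],
    "p2": ["p1", "p3"],
    "p3": ["p1", "p2", "p4"],
    "p4": ["p3", "p5", "p6"],
    "p5": ["p4", "p6"],
    "p6": ["p4", "p5"],
}
observed = {"p1": "ML", "p5": "Vision", "p6": "Vision"}
result = propagate_labels(neighbors, observed)
print(result)
```

Here the unlabeled papers `p2` and `p3` end up in the same area as `p1`, while `p4` follows its two labelled neighbours.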

In the domain of physics and probability, the filters, random fields, and maximum entropy (FRAME) model is a Markov random field model of stationary spatial processes, in which the energy function is the sum of translation-invariant potential functions that are one-dimensional non-linear transformations of linear filter responses. The FRAME model was originally developed by Song-Chun Zhu, Ying Nian Wu, and David Mumford for modeling stochastic texture patterns such as grasses, tree leaves, brick walls, and water waves. This model is the maximum entropy distribution that reproduces the observed marginal histograms of responses from a bank of filters, where, for each filter tuned to a specific scale and orientation, the marginal histogram is pooled over all the pixels in the image domain. The FRAME model has also been proved to be equivalent to the micro-canonical ensemble, which was named the Julesz ensemble. A Gibbs sampler is used to synthesize texture images by drawing samples from the FRAME model.
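The verbal description of the energy function corresponds to a density of roughly the following form. The notation is an assumption of this sketch: $I$ is the image on domain $D$, $F_k$ are the $K$ linear filters, $\lambda_k$ are the one-dimensional potential functions, and $Z(\Lambda)$ is the normalizing constant.

```latex
p(I; \Lambda) = \frac{1}{Z(\Lambda)}
\exp\Bigl\{ -\sum_{k=1}^{K} \sum_{x \in D} \lambda_k\bigl( [F_k * I](x) \bigr) \Bigr\},
\qquad \Lambda = (\lambda_1, \dots, \lambda_K)
```

Because each $\lambda_k$ is applied identically at every pixel $x$, the potentials are translation invariant, and the inner sum depends on the image only through the histogram of each filter's responses.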

Probabilistic numerics is a scientific field at the intersection of statistics, machine learning and applied mathematics, where tasks in numerical analysis including finding numerical solutions for integration, linear algebra, optimisation and differential equations are seen as problems of statistical, probabilistic, or Bayesian inference.