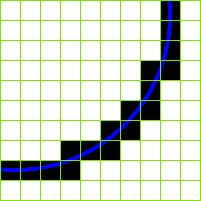

In digital image processing, sub-pixel resolution can be obtained in images constructed from sources with information exceeding the nominal pixel resolution of said images.

In digital image processing, sub-pixel resolution can be obtained in images constructed from sources with information exceeding the nominal pixel resolution of said images.

| | This section needs expansion. You can help by adding to it. (January 2011) |

For example, if the image of a ship of length 50 metres (160 ft), viewed side-on, is 500 pixels long, the nominal resolution (pixel size) on the side of the ship facing the camera is 0.1 metres (3.9 in). Now sub-pixel resolution of well resolved features can measure ship movements which are an order of magnitude (10×) smaller. Movement is specifically mentioned here because measuring absolute positions requires an accurate lens model and known reference points within the image to achieve sub-pixel position accuracy. Small movements can however be measured (down to 1 cm) with simple calibration procedures.[ citation needed ] Specific fit functions often suffer specific bias with respect to image pixel boundaries. Users should therefore take care to avoid these "pixel locking" (or "peak locking") effects. [1]

Whether features in a digital image are sharp enough to achieve sub-pixel resolution can be quantified by measuring the point spread function (PSF) of an isolated point in the image. If the image does not contain isolated points, similar methods can be applied to edges in the image. It is also important when attempting sub-pixel resolution to keep image noise to a minimum. This, in the case of a stationary scene, can be measured from a time series of images. Appropriate pixel averaging, through both time (for stationary images) and space (for uniform regions of the image) is often used to prepare the image for sub-pixel resolution measurements.

ClearType is Microsoft's implementation of subpixel rendering technology in rendering text in a font system. ClearType attempts to improve the appearance of text on certain types of computer display screens by sacrificing color fidelity for additional intensity variation. This trade-off is asserted to work well on LCD flat panel monitors.

In digital imaging, a pixel, pel, or picture element is the smallest addressable element in a raster image, or the smallest addressable element in a dot matrix display device. In most digital display devices, pixels are the smallest element that can be manipulated through software.

Dot pitch is a specification for a computer display, computer printer, image scanner, or other pixel-based devices that describe the distance, for example, between dots (sub-pixels) on a display screen. In the case of an RGB color display, the derived unit of pixel pitch is a measure of the size of a triad plus the distance between triads.

In computer vision and image processing, motion estimation is the process of determining motion vectors that describe the transformation from one 2D image to another; usually from adjacent frames in a video sequence. It is an ill-posed problem as the motion happens in three dimensions (3D) but the images are a projection of the 3D scene onto a 2D plane. The motion vectors may relate to the whole image or specific parts, such as rectangular blocks, arbitrary shaped patches or even per pixel. The motion vectors may be represented by a translational model or many other models that can approximate the motion of a real video camera, such as rotation and translation in all three dimensions and zoom.

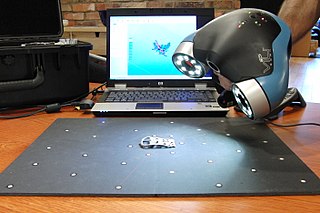

3D scanning is the process of analyzing a real-world object or environment to collect three dimensional data of its shape and possibly its appearance. The collected data can then be used to construct digital 3D models.

Structure from motion (SfM) is a photogrammetric range imaging technique for estimating three-dimensional structures from two-dimensional image sequences that may be coupled with local motion signals. It is studied in the fields of computer vision and visual perception.

Image rectification is a transformation process used to project images onto a common image plane. This process has several degrees of freedom and there are many strategies for transforming images to the common plane. Image rectification is used in computer stereo vision to simplify the problem of finding matching points between images, and in geographic information systems to merge images taken from multiple perspectives into a common map coordinate system.

In computer vision, the bag-of-words model sometimes called bag-of-visual-words model can be applied to image classification or retrieval, by treating image features as words. In document classification, a bag of words is a sparse vector of occurrence counts of words; that is, a sparse histogram over the vocabulary. In computer vision, a bag of visual words is a vector of occurrence counts of a vocabulary of local image features.

A structured-light 3D scanner is a 3D scanning device for measuring the three-dimensional shape of an object using projected light patterns and a camera system.

In robotics and computer vision, visual odometry is the process of determining the position and orientation of a robot by analyzing the associated camera images. It has been used in a wide variety of robotic applications, such as on the Mars Exploration Rovers.

A digital microscope is a variation of a traditional optical microscope that uses optics and a digital camera to output an image to a monitor, sometimes by means of software running on a computer. A digital microscope often has its own in-built LED light source, and differs from an optical microscope in that there is no provision to observe the sample directly through an eyepiece. Since the image is focused on the digital circuit, the entire system is designed for the monitor image. The optics for the human eye are omitted.

A time-of-flight camera, also known as time-of-flight sensor, is a range imaging camera system for measuring distances between the camera and the subject for each point of the image based on time-of-flight, the round trip time of an artificial light signal, as provided by a laser or an LED. Laser-based time-of-flight cameras are part of a broader class of scannerless LIDAR, in which the entire scene is captured with each laser pulse, as opposed to point-by-point with a laser beam such as in scanning LIDAR systems. Time-of-flight camera products for civil applications began to emerge around 2000, as the semiconductor processes allowed the production of components fast enough for such devices. The systems cover ranges of a few centimeters up to several kilometers.

PenTile matrix is a family of patented subpixel matrix schemes used in electronic device displays. PenTile is a trademark of Samsung. PenTile matrices are used in AMOLED and LCD displays.

Document mosaicing is a process that stitches multiple, overlapping snapshot images of a document together to produce one large, high resolution composite. The document is slid under a stationary, over-the-desk camera by hand until all parts of the document are snapshotted by the camera's field of view. As the document slid under the camera, all motion of the document is coarsely tracked by the vision system. The document is periodically snapshotted such that the successive snapshots are overlap by about 50%. The system then finds the overlapped pairs and stitches them together repeatedly until all pairs are stitched together as one piece of document.

Features from accelerated segment test (FAST) is a corner detection method, which could be used to extract feature points and later used to track and map objects in many computer vision tasks. The FAST corner detector was originally developed by Edward Rosten and Tom Drummond, and was published in 2006. The most promising advantage of the FAST corner detector is its computational efficiency. Referring to its name, it is indeed faster than many other well-known feature extraction methods, such as difference of Gaussians (DoG) used by the SIFT, SUSAN and Harris detectors. Moreover, when machine learning techniques are applied, superior performance in terms of computation time and resources can be realised. The FAST corner detector is very suitable for real-time video processing application because of this high-speed performance.

In computer vision, rigid motion segmentation is the process of separating regions, features, or trajectories from a video sequence into coherent subsets of space and time. These subsets correspond to independent rigidly moving objects in the scene. The goal of this segmentation is to differentiate and extract the meaningful rigid motion from the background and analyze it. Image segmentation techniques labels the pixels to be a part of pixels with certain characteristics at a particular time. Here, the pixels are segmented depending on its relative movement over a period of time i.e. the time of the video sequence.

Dynamic texture is the texture with motion which can be found in videos of sea-waves, fire, smoke, wavy trees, etc. Dynamic texture has a spatially repetitive pattern with time-varying visual pattern. Modeling and analyzing dynamic texture is a topic of images processing and pattern recognition in computer vision.

An event camera, also known as a neuromorphic camera, silicon retina or dynamic vision sensor, is an imaging sensor that responds to local changes in brightness. Event cameras do not capture images using a shutter as conventional (frame) cameras do. Instead, each pixel inside an event camera operates independently and asynchronously, reporting changes in brightness as they occur, and staying silent otherwise.

Michal Irani is a professor in the Department of Computer Science and Applied Mathematics at the Weizmann Institute of Science, Israel.

Video super-resolution (VSR) is the process of generating high-resolution video frames from the given low-resolution video frames. Unlike single-image super-resolution (SISR), the main goal is not only to restore more fine details while saving coarse ones, but also to preserve motion consistency.