In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals.

A syntactic category is a syntactic unit that theories of syntax assume. Word classes, largely corresponding to traditional parts of speech, are syntactic categories. In phrase structure grammars, the phrasal categories are also syntactic categories. Dependency grammars, however, do not acknowledge phrasal categories.

In grammar, a phrase, called an expression in some contexts, is a group of words or a single word acting as a grammatical unit. For instance, the English expression "the very happy squirrel" is a noun phrase that contains the adjective phrase "very happy". Phrases can consist of a single word or a complete sentence. In theoretical linguistics, phrases are often analyzed as units of syntactic structure such as constituents.

Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957. They are used to break down a natural language sentence into its constituent parts, also known as syntactic categories, including both lexical categories and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a constituency grammar; as such, it stands in contrast to dependency grammars, which are based on the dependency relation.
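The rewriting process can be sketched in a few lines of Python. The three rules below are a hypothetical toy fragment chosen for illustration, not a grammar taken from any particular source, and the names `RULES` and `derive` are likewise assumptions. The sketch rewrites the leftmost phrasal category at each step and stops at the lexical categories.

```python
# Toy phrase structure rules (hypothetical, for illustration only).
# Each rule rewrites the category on the left as the sequence on the right.
RULES = {
    "S": ["NP", "VP"],
    "NP": ["Det", "N"],
    "VP": ["V", "NP"],
}

def derive(symbols):
    """Rewrite the leftmost phrasal category until none remain.

    Returns every intermediate line of the derivation.
    """
    steps = [list(symbols)]
    while True:
        for i, sym in enumerate(symbols):
            if sym in RULES:
                # Replace the category with the right-hand side of its rule.
                symbols = symbols[:i] + RULES[sym] + symbols[i + 1:]
                steps.append(list(symbols))
                break
        else:
            # No phrasal category left: only lexical categories remain.
            return steps

derivation = derive(["S"])
for line in derivation:
    print(" ".join(line))
```

Starting from S, the derivation ends in the string of lexical categories Det N V Det N, into which words of the matching classes could then be inserted.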

A noun phrase, or nominal (phrase), is a phrase that has a noun or pronoun as its head or performs the same grammatical function as a noun. Noun phrases are very common cross-linguistically, and they may be the most frequently occurring phrase type.

A parse tree (also called a parsing tree, derivation tree, or concrete syntax tree) is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term parse tree itself is used primarily in computational linguistics; in theoretical syntax, the term syntax tree is more common.
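As a minimal sketch, such a tree can be written as nested tuples in Python. The example sentence, the category labels, and the helper names `leaves` and `brackets` are assumptions made for illustration. Because the tree is ordered, reading the leaves from left to right recovers the original string.

```python
# A parse tree as nested tuples: (label, child, child, ...).
# A leaf is a plain string. The sentence and labels are illustrative.
tree = ("S",
        ("NP", ("Det", "the"), ("N", "squirrel")),
        ("VP", ("V", "ate"), ("NP", ("Det", "the"), ("N", "nut"))))

def leaves(node):
    """Return the words of the tree in left-to-right order."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words

def brackets(node):
    """Render the tree in labeled bracket notation."""
    if isinstance(node, str):
        return node
    return "[" + node[0] + " " + " ".join(brackets(c) for c in node[1:]) + "]"

sentence = " ".join(leaves(tree))
bracketed = brackets(tree)
print(sentence)
print(bracketed)
```

The labeled bracket notation printed by `brackets` is a common textual stand-in for a drawn tree.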

In linguistics, X-bar theory is a model of phrase structure grammar and a theory of syntactic category formation that was first proposed by Noam Chomsky in 1970, reformulating the ideas of Zellig Harris (1951), and further developed by Ray Jackendoff, along the lines of the theory of generative grammar put forth in the 1950s by Chomsky. It attempts to capture the structure of phrasal categories with a single uniform structure called the X-bar schema, on the assumption that any phrase in natural language is an XP headed by a given syntactic category X. It played a significant role in resolving problems with phrase structure rules, most notably the proliferation of grammatical rules, which runs counter to the thesis of generative grammar.
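The idea of one schema serving every category can be sketched with a single Python function in place of a separate rule per phrase type. This is a simplified illustration under stated assumptions: the function name `x_bar` is hypothetical, adjuncts are omitted, and the head is placed before its complement, as in English.

```python
def x_bar(category, head, specifier=None, complement=None):
    """Build [XP specifier [X' head complement]] for any category X.

    Simplified sketch: no adjuncts, and head-before-complement order.
    """
    x0 = (category, head)
    # X' dominates the head and, if present, its complement.
    bar = (category + "'",) + ((x0, complement) if complement else (x0,))
    # XP dominates the optional specifier and X'.
    return (category + "P",) + ((specifier, bar) if specifier else (bar,))

# The same function yields NP, PP, and VP; only the category X changes.
np = x_bar("N", "apples")
pp = x_bar("P", "of", complement=np)
vp = x_bar("V", "eat", complement=np)
print(np)
print(pp)
print(vp)
```

A grammar written with category-specific phrase structure rules would need a distinct rule for each of these three phrases, which is the proliferation the schema is meant to avoid.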

In linguistics, a determiner phrase (DP) is a type of phrase headed by a determiner such as "many". Controversially, many approaches take a phrase like "not very many apples" to be a DP, headed, in this case, by the determiner "many". This is called the DP analysis or the DP hypothesis. Others reject this analysis in favor of the more traditional NP analysis, where "apples" would be the head of the phrase in which the DP "not very many" is merely a dependent. Thus, there are competing analyses concerning heads and dependents in nominal groups. The DP analysis developed in the late 1970s and early 1980s, and it is the majority view in generative grammar today.

A dependent-marking language has grammatical markers of agreement and case government between the words of phrases that tend to appear more on dependents than on heads. The distinction between head-marking and dependent-marking was first explored by Johanna Nichols in 1986, and has since become a central criterion in language typology, in which languages are classified according to whether they are more head-marking or dependent-marking. Many languages employ both head-marking and dependent-marking; some employ double-marking, and yet others employ zero-marking. However, it is not clear that the head of a clause has anything to do with the head of a noun phrase, or even what the head of a clause is.

In linguistics, the head or nucleus of a phrase is the word that determines the syntactic category of that phrase. For example, the head of the noun phrase "boiling hot water" is the noun "water". Analogously, the head of a compound is the stem that determines the semantic category of that compound. For example, the head of the compound noun "handbag" is "bag", since a handbag is a bag, not a hand. The other elements of the phrase or compound modify the head, and are therefore the head's dependents. Headed phrases and compounds are called endocentric, whereas exocentric ("headless") phrases and compounds lack a clear head. Heads are crucial to establishing the direction of branching. Head-initial phrases are right-branching, head-final phrases are left-branching, and head-medial phrases combine left- and right-branching.

Dependency grammar (DG) is a class of modern grammatical theories that are all based on the dependency relation and that can be traced back primarily to the work of Lucien Tesnière. Dependency is the notion that linguistic units, e.g. words, are connected to each other by directed links. The (finite) verb is taken to be the structural center of clause structure. All other syntactic units (words) are either directly or indirectly connected to the verb by these directed links, which are called dependencies. Dependency grammar differs from phrase structure grammar in that, while it can identify phrases, it tends to overlook phrasal nodes. A dependency structure is determined by the relation between a word and its dependents. Dependency structures are flatter than phrase structures in part because they lack a finite verb phrase constituent, and they are thus well suited for the analysis of languages with free word order, such as Czech or Warlpiri.
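A dependency structure of this kind can be sketched as one head index per word. The example sentence and the names `heads`, `dependents`, and `path_to_root` are assumptions for illustration; attaching each determiner to its noun follows the traditional NP analysis and is one of several possible choices.

```python
words = ["the", "squirrel", "ate", "the", "nut"]
# heads[i] is the index of the word that word i depends on.
# None marks the root, here the finite verb "ate".
heads = [1, 2, None, 4, 2]

def dependents(i):
    """Return the words that depend directly on word i."""
    return [words[j] for j, h in enumerate(heads) if h == i]

def path_to_root(i):
    """Follow the directed links from word i up to the root."""
    path = [words[i]]
    while heads[i] is not None:
        i = heads[i]
        path.append(words[i])
    return path

root = words[heads.index(None)]
print(root)
print(dependents(2))
print(path_to_root(3))
```

The structure has exactly one node per word and no phrasal nodes: the second "the" reaches the verb indirectly through "nut", while "squirrel" and "nut" depend on the verb directly.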

The term phrase structure grammar was originally introduced by Noam Chomsky as the term for grammar studied previously by Emil Post and Axel Thue. Some authors, however, reserve the term for more restricted grammars in the Chomsky hierarchy: context-sensitive grammars or context-free grammars. In a broader sense, phrase structure grammars are also known as constituency grammars. The defining trait of phrase structure grammars is thus their adherence to the constituency relation, as opposed to the dependency relation of dependency grammars.

A sentence diagram is a pictorial representation of the grammatical structure of a sentence. The term "sentence diagram" is used more when teaching written language, where sentences are diagrammed. The model shows the relations between words and the nature of sentence structure and can be used as a tool to help recognize which potential sentences are actual sentences.

In linguistics, wh-movement is the formation of syntactic dependencies involving interrogative words. An example in English is the dependency formed between "what" and the object position of "doing" in "What are you doing?" Interrogative forms are sometimes known within English linguistics as wh-words, such as "what", "when", "where", "who", and "why", although the class also includes other interrogative words, such as "how". This dependency has been used as a diagnostic tool in syntactic studies, as it can be observed to interact with other grammatical constraints.

In theoretical linguistics, a distinction is made between endocentric and exocentric constructions. A grammatical construction is said to be endocentric if it fulfils the same linguistic function as one of its parts, and exocentric if it does not. The distinction reaches back at least to the work of Bloomfield in the 1930s, who based it on terms used by Pāṇini and Patañjali in Sanskrit grammar. Such a distinction is possible only in phrase structure grammars, since in dependency grammars all constructions are necessarily endocentric.

Topicalization is a mechanism of syntax that establishes an expression as the sentence or clause topic by having it appear at the front of the sentence or clause. This involves a phrasal movement of determiners, prepositions, and verbs to sentence-initial position. Topicalization often results in a discontinuity and is thus one of a number of established discontinuity types, the other three being wh-fronting, scrambling, and extraposition. Topicalization is also used as a constituency test; an expression that can be topicalized is deemed a constituent. The topicalization of arguments in English is rare, whereas circumstantial adjuncts are often topicalized. Most languages allow topicalization, and in some languages, topicalization occurs much more frequently and/or in a much less marked manner than in English. Topicalization in English has also received attention in the pragmatics literature.

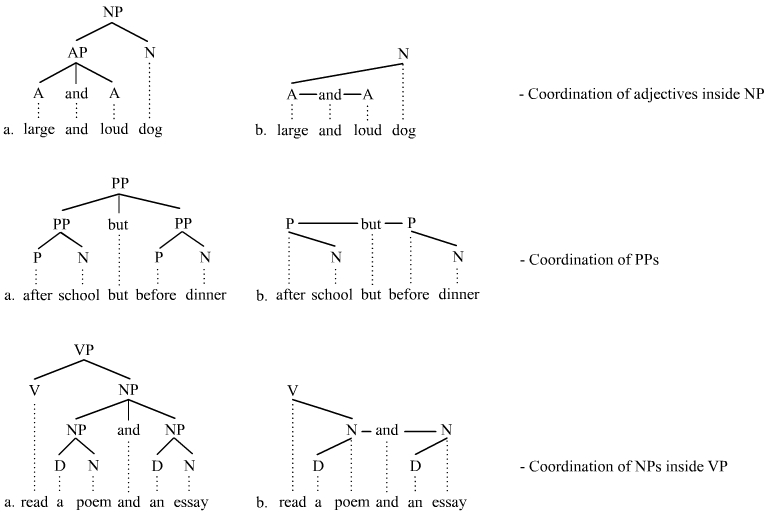

In linguistics, coordination is a complex syntactic structure that links together two or more elements; these elements are called conjuncts or conjoins. The presence of coordination is often signaled by the appearance of a coordinator, e.g. "and", "or", "but". The totality of coordinator(s) and conjuncts forming an instance of coordination is called a coordinate structure. The unique properties of coordinate structures have motivated theoretical syntax to draw a broad distinction between coordination and subordination. Coordination is also one of the many constituency tests in linguistics. It is one of the most studied fields in theoretical syntax, but despite decades of intensive examination, theoretical accounts differ significantly and there is no consensus on the best analysis.

In linguistics, immediate constituent analysis or IC analysis is a method of sentence analysis that was first mentioned by Leonard Bloomfield and developed further by Rulon Wells. The process reached a full-blown strategy for analyzing sentence structure in the early works of Noam Chomsky. The practice is now widespread. Most tree structures employed to represent the syntactic structure of sentences are products of some form of IC-analysis. The process and result of IC-analysis can, however, vary greatly based upon whether one chooses the constituency relation of phrase structure grammars or the dependency relation of dependency grammars as the underlying principle that organizes constituents into hierarchical structures.

Pseudogapping is an ellipsis mechanism that elides most but not all of a non-finite verb phrase; at least one part of the verb phrase remains, which is called the remnant. Pseudogapping occurs in comparative and contrastive contexts, so it appears often after subordinators and coordinators such as if, although, but, than, etc. It is similar to verb phrase ellipsis (VP-ellipsis) insofar as the ellipsis is introduced by an auxiliary verb, and many grammarians take it to be a particular type of VP-ellipsis. The distribution of pseudogapping is more restricted than that of VP-ellipsis, however, and in this regard, it has some traits in common with gapping. But unlike gapping, pseudogapping occurs in English but not in closely related languages. The analysis of pseudogapping can vary greatly depending in part on whether the analysis is based in a phrase structure grammar or a dependency grammar. Pseudogapping was first identified, named, and explored by Stump (1977) and has since been studied in detail by Levin (1986) among others, and now enjoys a firm position in the canon of acknowledged ellipsis mechanisms of English.

This article describes the syntax of clauses in the English language, chiefly in Modern English. A clause is often said to be the smallest grammatical unit that can express a complete proposition. But this semantic idea of a clause leaves out much of English clause syntax. For example, clauses can be questions, but questions are not propositions. A syntactic description of an English clause is that it is a subject and a verb. But this too fails, as a clause need not have a subject, as with the imperative, and, in many theories, an English clause may be verbless. The idea of what qualifies varies between theories and has changed over time.