Swarm intelligence (SI) is the collective behavior of decentralized, self-organized systems, natural or artificial. The concept is employed in work on artificial intelligence. The expression was introduced by Gerardo Beni and Jing Wang in 1989, in the context of cellular robotic systems.

Simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. Although this initially appears to be a chicken-and-egg problem, several algorithms are known to solve it, at least approximately, in tractable time for certain environments. Popular approximate solution methods include the particle filter, extended Kalman filter, covariance intersection, and GraphSLAM. SLAM algorithms are based on concepts in computational geometry and computer vision, and are used in robot navigation, robotic mapping and odometry for virtual reality or augmented reality.

Swarm robotics is an approach to the coordination of multiple robots as a system that consists of large numbers of mostly simple physical robots. "In a robot swarm, the collective behavior of the robots results from local interactions between the robots and between the robots and the environment in which they act." The desired collective behavior is expected to emerge from the interactions among the robots and between the robots and their environment. This approach emerged from the field of artificial swarm intelligence, as well as from biological studies of insects, ants and other natural systems in which swarm behaviour occurs.
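The idea that a collective behavior can arise from purely local interactions can be illustrated with a minimal sketch. It assumes robots on a one-dimensional line that each move part of the way toward the average position of the neighbours they can sense. The function name `consensus_step` and the parameters `sensing_radius` and `gain` are hypothetical and chosen for illustration only; this is not a method taken from the swarm robotics literature cited here.

```python
def consensus_step(positions, sensing_radius, gain=0.5):
    """Advance every robot one synchronous step toward its local neighbours.

    Each robot only uses the positions of robots within sensing_radius,
    so no robot has global knowledge of the swarm.
    """
    new_positions = []
    for i, p in enumerate(positions):
        # Neighbours are the other robots close enough to be sensed.
        neighbours = [
            q for j, q in enumerate(positions)
            if j != i and abs(q - p) <= sensing_radius
        ]
        if neighbours:
            target = sum(neighbours) / len(neighbours)
            new_positions.append(p + gain * (target - p))
        else:
            # An isolated robot has nothing to react to and stays put.
            new_positions.append(p)
    return new_positions


positions = [0.0, 1.0, 2.0]
for _ in range(3):
    positions = consensus_step(positions, sensing_radius=1.5)
```

Repeating the step shrinks the spread of the group in this example, even though no robot is told where the group should gather.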

RAVON is a robot being developed at the Robotics Research Lab at the University of Kaiserslautern, Germany. The vehicle is used as a testbed to investigate behaviour-based strategies for motion adaptation, localization and navigation in rough outdoor terrain. The base vehicle was produced by Robosoft.

Connected-component labeling (CCL), connected-component analysis (CCA), blob extraction, region labeling, blob discovery, or region extraction is an algorithmic application of graph theory, where subsets of connected components are uniquely labeled based on a given heuristic. Connected-component labeling is not to be confused with segmentation.
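A minimal sketch of the labeling idea follows. It assumes a binary grid and uses 4-connectivity as the rule that decides which cells belong together. The function name `label_components` is hypothetical, and the flood-fill approach shown is only one of several ways to label components.

```python
from collections import deque


def label_components(grid):
    """Label 4-connected foreground regions of a binary grid.

    Returns (labels, count). Background cells get label 0 and each
    connected region gets a unique label from 1 to count.
    """
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            # Skip background cells and cells that already have a label.
            if not grid[r][c] or labels[r][c]:
                continue
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            # Breadth-first flood fill over the 4-neighbourhood.
            while queue:
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and grid[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


grid = [
    [1, 1, 0, 0],
    [0, 1, 0, 1],
    [0, 0, 0, 1],
    [1, 0, 0, 0],
]
labels, count = label_components(grid)
```

Switching to 8-connectivity would only require adding the four diagonal offsets to the neighbour list.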

A virtual fixture is an overlay of augmented sensory information upon a user's perception of a real environment in order to improve human performance in both direct and remotely manipulated tasks. Developed in the early 1990s by Louis Rosenberg at the U.S. Air Force Research Laboratory (AFRL), Virtual Fixtures was a pioneering platform in virtual reality and augmented reality technologies.

In robotics and computer vision, visual odometry is the process of determining the position and orientation of a robot by analyzing the associated camera images. It has been used in a wide variety of robotic applications, such as on the Mars Exploration Rovers.
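The sketch below illustrates only the accumulation step of odometry, under the assumption that an image-analysis front end (not shown) has already produced a planar relative motion `(dx, dy, dtheta)` for each pair of consecutive frames. The names `compose` and `integrate` are hypothetical, and real systems typically work with full 3D poses.

```python
import math


def compose(pose, motion):
    """Apply a relative motion, expressed in the robot frame, to a pose.

    pose is (x, y, theta) in the world frame; motion is (dx, dy, dtheta).
    """
    x, y, theta = pose
    dx, dy, dtheta = motion
    return (
        x + dx * math.cos(theta) - dy * math.sin(theta),
        y + dx * math.sin(theta) + dy * math.cos(theta),
        theta + dtheta,
    )


def integrate(motions, start=(0.0, 0.0, 0.0)):
    """Chain frame-to-frame motions into a trajectory of world poses."""
    trajectory = [start]
    for motion in motions:
        trajectory.append(compose(trajectory[-1], motion))
    return trajectory


# Move 1 m forward while turning left by 90 degrees, then 1 m forward again.
trajectory = integrate([(1.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)])
```

Because each pose is built on the previous one, any error in a single relative motion carries forward into all later poses, which is why odometry estimates tend to drift over time.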

Dmitri Dolgov is a Russian-American engineer who is the co-chief executive officer of Waymo. Previously, he worked on self-driving cars at Toyota and Stanford University for the DARPA Grand Challenge (2007). Dolgov then joined Waymo's predecessor, Google's Self-Driving Car Project, where he served as an engineer and head of software. He has also been Google X's lead scientist.

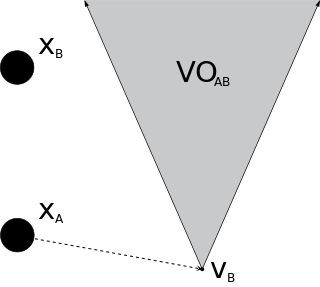

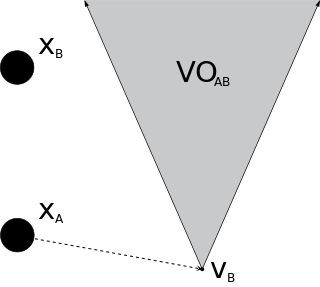

In robotics and motion planning, a velocity obstacle, commonly abbreviated VO, is the set of all velocities of a robot that will result in a collision with another robot at some moment in time, assuming that the other robot maintains its current velocity. If the robot chooses a velocity inside the velocity obstacle, the two robots will eventually collide; if it chooses a velocity outside the velocity obstacle, such a collision is guaranteed not to occur.
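The membership test implied by this definition can be sketched for a simple case. The example assumes two disc-shaped robots in the plane, so a collision occurs when the distance between their centres drops below the sum of their radii. The function name `in_velocity_obstacle` and the parameter `combined_radius` are hypothetical.

```python
def in_velocity_obstacle(p_a, p_b, v_candidate, v_b, combined_radius):
    """Return True if v_candidate for robot A leads to a collision with B.

    B is assumed to keep its current velocity v_b forever. Positions and
    velocities are (x, y) tuples; combined_radius is the sum of both radii.
    """
    # Position of B relative to A, and velocity of A relative to B.
    px, py = p_b[0] - p_a[0], p_b[1] - p_a[1]
    vx, vy = v_candidate[0] - v_b[0], v_candidate[1] - v_b[1]

    gap = px * px + py * py - combined_radius ** 2
    if gap <= 0:
        return True  # the discs already overlap or touch

    speed_sq = vx * vx + vy * vy
    if speed_sq == 0:
        return False  # no relative motion, so the gap never closes

    closing = px * vx + py * vy
    if closing <= 0:
        return False  # A is moving away from B (or sideways)

    # Squared distance at closest approach is |p|^2 - closing^2 / |v|^2.
    # A collision occurs if that is smaller than combined_radius^2.
    return gap - closing * closing / speed_sq < 0


# A at the origin, B at rest 10 m ahead, radii summing to 2 m.
head_on = in_velocity_obstacle((0, 0), (10, 0), (1, 0), (0, 0), 2.0)
diagonal = in_velocity_obstacle((0, 0), (10, 0), (1, 1), (0, 0), 2.0)
```

In this setup the head-on velocity lies inside the velocity obstacle, while the diagonal velocity passes B at a safe distance and lies outside it.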

Kanguera is a robot hand developed by the University of São Paulo. It runs the VxWorks operating system. The goal of this research project is to model the kinematic properties of a human hand so that better anthropomorphic robotic grippers or manipulators can be developed. The name, Kanguera, is an ancient indigenous word for "bones outside the body".

MiroSurge is a prototype robotic system designed mainly for research in minimally invasive telesurgery. In the described configuration, the system follows the master-slave principle and enables the operator to remotely control minimally invasive surgical instruments with force/torque feedback. The system is being developed at the Institute of Robotics and Mechatronics within the German Aerospace Center (DLR).

Dlib is a general purpose cross-platform software library written in the programming language C++. Its design is heavily influenced by ideas from design by contract and component-based software engineering. Thus it is, first and foremost, a set of independent software components. It is open-source software released under a Boost Software License.

3D sound localization is an acoustic technology used to locate the source of a sound in three-dimensional space. The source location is usually determined by the direction of the incoming sound waves and the distance between the source and the sensors. It involves both the design of the sensor arrangement and signal processing techniques.
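As a simplified illustration of how the direction of an incoming sound wave can be estimated, the sketch below uses the time difference of arrival between two microphones. It assumes a distant source (far-field, planar wavefront), a known microphone spacing, and a speed of sound of about 343 m/s. It recovers a single bearing angle only; a full 3D localization would need more sensors. The function name `bearing_from_tdoa` is hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, an assumed value for air at roughly 20 °C


def bearing_from_tdoa(delay_s, mic_spacing_m, speed=SPEED_OF_SOUND):
    """Estimate the bearing of a far-field source from a two-microphone delay.

    delay_s is the arrival-time difference between the microphones.
    The result is the angle in degrees between the source direction and
    the broadside (perpendicular) of the microphone pair.
    """
    # Extra path length travelled to the farther microphone, as a
    # fraction of the spacing. This equals sin(bearing) in the far field.
    ratio = speed * delay_s / mic_spacing_m
    # Clamp to the valid range to tolerate measurement noise.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


spacing = 0.2  # metres between the two microphones
angle = bearing_from_tdoa(0.5 * spacing / SPEED_OF_SOUND, spacing)
```

A delay of zero corresponds to a source directly in front of the pair, and the largest possible delay corresponds to a source in line with the two microphones.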

Nikolaus Correll is a roboticist and an associate professor at the University of Colorado at Boulder in the Department of Computer Science with courtesy appointments in the departments of Aerospace, Electrical and Materials Engineering. Correll is the faculty director of the Interdisciplinary Research Theme on Multi-functional Materials at the College of Engineering and Applied Science, and the founder and CTO of Robotic Materials Inc.

Alcherio Martinoli is a roboticist and an associate professor at the École polytechnique fédérale de Lausanne (EPFL) in the School of Architecture, Civil and Environmental Engineering where he heads the Distributed Systems and Algorithms Laboratory.

Robotic governance provides a regulatory framework to deal with autonomous and intelligent machines. This includes research and development activities as well as handling of these machines. The idea is related to the concepts of corporate governance, technology governance and IT-governance, which provide a framework for the management of organizations or the focus of a global IT infrastructure.

An event camera, also known as a neuromorphic camera, silicon retina or dynamic vision sensor, is an imaging sensor that responds to local changes in brightness. Event cameras do not capture images using a shutter as conventional (frame) cameras do. Instead, each pixel inside an event camera operates independently and asynchronously, reporting changes in brightness as they occur, and staying silent otherwise.
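The per-pixel behaviour described above can be imitated in software. The sketch below assumes a commonly used simplified model in which a pixel emits an event whenever its log-brightness has changed by at least a fixed threshold since its last event. The names `init_reference` and `generate_events`, the threshold value, and the use of frames as input are all illustrative assumptions; a real sensor does not operate on frames.

```python
import math

EPSILON = 1e-6  # avoids log(0) for fully dark pixels


def init_reference(frame):
    """Store the log-brightness of every pixel as its reference level."""
    return [[math.log(value + EPSILON) for value in row] for row in frame]


def generate_events(reference, frame, timestamp, threshold=0.2):
    """Return events for pixels whose log-brightness changed enough.

    Each event is (timestamp, row, col, polarity) with polarity +1 for
    brighter and -1 for darker. The reference of a firing pixel is
    updated in place; all other pixels stay silent and unchanged.
    """
    events = []
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            log_value = math.log(value + EPSILON)
            delta = log_value - reference[r][c]
            if abs(delta) >= threshold:
                events.append((timestamp, r, c, 1 if delta > 0 else -1))
                reference[r][c] = log_value
    return events


reference = init_reference([[1.0, 1.0], [1.0, 1.0]])
events = generate_events(reference, [[1.0, 2.0], [0.5, 1.0]], timestamp=1.0)
```

Only the two pixels whose brightness changed produce events here, and feeding the same frame again produces none, which mirrors the way an event camera stays silent for a static scene.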

Margarita Chli is an assistant professor and leader of the Vision for Robotics Lab at ETH Zürich in Switzerland. Chli is a leader in the field of computer vision and robotics and was on the team of researchers to develop the first fully autonomous helicopter with onboard localization and mapping. Chli is also the Vice Director of the Institute of Robotics and Intelligent Systems and an Honorary Fellow of the University of Edinburgh in the United Kingdom. Her research currently focuses on developing visual perception and intelligence in flying autonomous robotic systems.

Asynchronous multi-body framework (AMBF) is a versatile open-source 3D simulator for robots developed in April 2019. This multi-body framework provides real-time dynamic simulation of multi-body systems such as robots, free bodies, and multi-link puzzles, paired with real-time haptic interaction through various input devices. The framework integrates a real surgeon master console, with or without haptic feedback, to control simulated robots in real time. As a result, the simulator is used in real-time training applications for surgical and non-surgical tasks. It offers the possibility to interact with soft bodies in order to simulate surgical tasks in which tissues are subject to deformation. It also provides a Python client for interacting with the simulated bodies and for training neural networks on real-time data with in-loop simulation. It includes a wide range of robots, grippers, sensors, puzzles, and soft bodies. Each simulated object is represented as an afObject; likewise, the simulation world is represented as an afWorld. Both use two communication interfaces: state and command. Through the state interface, an object can send data outside the simulation environment, while the command interface allows commands to be applied to the underlying afObject.

A continuum robot is a type of robot that is characterised by an infinite number of degrees of freedom and joints. These characteristics allow continuum manipulators to adjust and modify their shape at any point along their length, enabling them to work in confined spaces and complex environments where standard rigid-link robots cannot operate. In particular, a continuum robot can be defined as an actuatable structure whose constitutive material forms curves with continuous tangent vectors. This fundamental definition makes it possible to distinguish continuum robots from snake-arm robots and hyper-redundant manipulators: because the latter are built from rigid links and joints, they can only approximate curves with continuous tangent vectors.
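A curve with a continuous tangent vector can be illustrated with a minimal sketch. It assumes a planar robot segment that bends with constant curvature, a common simplification that is not stated in the definition above. The function name `arc_point` is hypothetical.

```python
import math


def arc_point(curvature, s):
    """Return (x, y, tangent_angle) at arc length s along a planar arc.

    The arc starts at the origin heading along +x and bends with the
    given constant curvature (1 / radius). The tangent angle equals
    curvature * s, so it varies continuously along the whole length.
    """
    theta = curvature * s
    if abs(curvature) < 1e-9:
        # Zero curvature degenerates to a straight segment.
        return (s, 0.0, 0.0)
    x = math.sin(theta) / curvature
    y = (1.0 - math.cos(theta)) / curvature
    return (x, y, theta)


# Sample a 1 m segment bent into a quarter circle.
curvature = math.pi / 2
backbone = [arc_point(curvature, i / 10) for i in range(11)]
tip_x, tip_y, tip_angle = backbone[-1]
```

A rigid-link chain sampled at the same points would instead change its tangent direction in discrete jumps at each joint, which is the distinction the definition draws.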