Related Research Articles

Facial perception is an individual's understanding and interpretation of the face. Here, perception implies the presence of consciousness and hence excludes automated facial recognition systems. Although facial recognition is found in other species, this article focuses on facial perception in humans.

Neuroesthetics is a relatively recent sub-discipline of applied aesthetics. Empirical aesthetics takes a scientific approach to the study of aesthetic experience of art, music, or any object that can give rise to aesthetic judgments. The term was coined by Semir Zeki in 1999, and the field received its formal definition in 2002 as the scientific study of the neural bases for the contemplation and creation of a work of art. Anthropologists and evolutionary biologists alike have accumulated evidence suggesting that human interest in, and creation of, art evolved as an evolutionarily necessary mechanism for survival as early as the 9th and 10th century in Gregorian monks and Native Americans. Neuroesthetics uses neuroscience to explain and understand aesthetic experiences at the neurological level. The topic attracts scholars from many disciplines, including neuroscientists, art historians, artists, art therapists and psychologists.

Associative visual agnosia is a form of visual agnosia. It is an impairment in recognizing, or assigning meaning to, a stimulus that is accurately perceived, and it is not associated with a generalized deficit in intelligence, memory, language or attention. The disorder appears to be very uncommon in a "pure" or uncomplicated form and is usually accompanied by other complex neuropsychological problems due to the nature of the etiology. Affected individuals can accurately distinguish the object, as demonstrated by the ability to draw a picture of it or to categorize it accurately, yet they are unable to identify the object, its features or its functions.

The inferior temporal gyrus is one of three gyri of the temporal lobe and is located below the middle temporal gyrus, connected behind with the inferior occipital gyrus; it also extends around the infero-lateral border on to the inferior surface of the temporal lobe, where it is limited by the inferior sulcus. This region is one of the higher levels of the ventral stream of visual processing, associated with the representation of objects, places, faces, and colors. It may also be involved in face perception, and in the recognition of numbers and words.

Categorical perception is the perception of distinct categories when there is a gradual change in a variable along a continuum. It was originally observed for auditory stimuli but has since been found to apply to other perceptual modalities.

The fusiform face area is a part of the human visual system that is specialized for facial recognition. It is located in the inferior temporal cortex (IT), in the fusiform gyrus.

Emotional responsivity is the ability to acknowledge an affective stimulus by exhibiting emotion. It is a sharp change of emotion according to a person's emotional state. Increased emotional responsivity refers to demonstrating more response to a stimulus. Reduced emotional responsivity refers to demonstrating less response to a stimulus. Any response exhibited after exposure to the stimulus, whether it is appropriate or not, would be considered an emotional response. Although emotional responsivity applies to nonclinical populations, it is more typically associated with individuals with schizophrenia and autism.

Prosopamnesia is a selective neurological impairment in the ability to learn new faces. There is a special neural circuit for the processing of faces as opposed to other non-face objects. Prosopamnesia is a deficit in the part of this circuit responsible for encoding perceptions as memories.

In the human brain, the superior temporal sulcus (STS) is the sulcus separating the superior temporal gyrus from the middle temporal gyrus in the temporal lobe. A sulcus is a deep groove that curves into the largest part of the brain, the cerebrum, and a gyrus is a ridge that curves outward from the cerebrum.

In neuroscience, the visual P200 or P2 is a waveform component or feature of the event-related potential (ERP) measured at the human scalp. Like other potential changes measurable from the scalp, this effect is believed to reflect the post-synaptic activity of a specific neural process. The P2 component, also known as the P200, is so named because it is a positive-going electrical potential that peaks at about 200 milliseconds after the onset of some external stimulus. This component is often distributed around the centro-frontal and the parieto-occipital areas of the scalp. It is generally found to be maximal around the vertex of the scalp; however, some topographical differences have been noted in ERP studies of the P2 under different experimental conditions.
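As a rough illustration of how a component such as the P2 is usually quantified, the sketch below searches an averaged waveform for its largest positive deflection inside a latency window. Everything here is an assumption made for illustration: the function name `peak_in_window`, the 150 to 275 ms search window, and the synthetic waveform (an arbitrary early negative deflection plus a positive bump centred at 200 ms) are not taken from any particular study or toolbox.

```python
import math


def peak_in_window(times_ms, amplitudes_uv, start_ms, end_ms, polarity=1):
    """Return (latency_ms, amplitude_uv) of the largest deflection in a window.

    polarity=1 looks for the most positive sample (e.g. a P2-like peak),
    polarity=-1 for the most negative one.
    """
    candidates = [
        (t, a) for t, a in zip(times_ms, amplitudes_uv) if start_ms <= t <= end_ms
    ]
    if not candidates:
        raise ValueError("no samples fall inside the search window")
    # Flip the sign for negative components so max() always finds the peak.
    return max(candidates, key=lambda sample: polarity * sample[1])


def gaussian(t, centre, width, height):
    """Gaussian bump used to fake an ERP deflection (synthetic data only)."""
    return height * math.exp(-((t - centre) ** 2) / (2 * width ** 2))


# Synthetic averaged waveform sampled every 2 ms from 0 to 400 ms post-stimulus.
# The amplitudes and widths are arbitrary illustrative values in microvolts.
times = list(range(0, 401, 2))
erp = [gaussian(t, 100, 15, -3.0) + gaussian(t, 200, 20, 5.0) for t in times]

# Hypothetical P2 search window of 150-275 ms.
latency, amplitude = peak_in_window(times, erp, 150, 275)
print(f"positive peak at {latency} ms, {amplitude:.2f} uV")
```

Real analyses typically work on baseline-corrected averages from many trials and often report mean amplitude over the window rather than a single-sample peak, since single-sample peaks are sensitive to noise.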

The visual N1 is a visual evoked potential, a type of event-related electrical potential (ERP), that is produced in the brain and recorded on the scalp. The N1 is so named to reflect the polarity and typical timing of the component. The "N" indicates that the polarity of the component is negative with respect to an average mastoid reference. The "1" originally indicated that it was the first negative-going component, but it now better indexes the typical peak of this component, which is around 150 to 200 milliseconds post-stimulus. The N1 deflection may be detected at most recording sites, including the occipital, parietal, central, and frontal electrode sites. Although the visual N1 is widely distributed over the entire scalp, it peaks earlier over frontal than posterior regions of the scalp, suggestive of distinct neural and/or cognitive correlates. The N1 is elicited by visual stimuli, and is part of the visual evoked potential, a series of voltage deflections observed in response to visual onsets, offsets, and changes. Both the right and left hemispheres generate an N1, but the laterality of the N1 depends on whether a stimulus is presented centrally, laterally, or bilaterally. When a stimulus is presented centrally, the N1 is bilateral. When presented laterally, the N1 is larger, earlier, and contralateral to the visual field of the stimulus. When two visual stimuli are presented, one in each visual field, the N1 is bilateral. In the latter case, the asymmetry of the N1 is modulated by attention. Additionally, its amplitude is influenced by selective attention, and thus it has been used to study a variety of attentional processes.

The C1 and P1 are two human scalp-recorded event-related brain potential components, collected by means of a technique called electroencephalography (EEG). The C1 is so named because, when it was first discovered, it was the first component in a series of components found to respond to visual stimuli. It can be a negative-going or a positive-going component, with its peak normally observed in the 65–90 ms range post-stimulus onset. The P1 is so named because it is the first positive-going component; its peak is normally observed at around 100 ms. Both components are related to the processing of visual stimuli and belong to the category of potentials called visually evoked potentials (VEPs). Both components are theorized to be evoked within the visual cortices of the brain, with the C1 being linked to the primary visual cortex and the P1 being linked to other visual areas. One of the primary distinctions between these two components is that, whereas the P1 can be modulated by attention, the C1 has typically been found to be invariant to different levels of attention.

The N170 is a component of the event-related potential (ERP) that reflects the neural processing of faces, familiar objects or words. Furthermore, the N170 is modulated by prediction error processes.

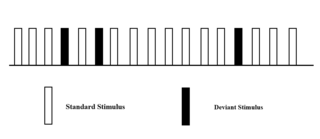

The oddball paradigm is an experimental design used within psychology research. The oddball paradigm relies on the brain's sensitivity to rare deviant stimuli presented pseudo-randomly in a series of repeated standard stimuli. The oddball paradigm has a wide selection of stimulus types, including stimuli such as sound duration, frequency, intensity, phonetic features, complex music, or speech sequences. The reaction of the participant to this "oddball" stimulus is recorded.
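A minimal sketch of how such a stimulus sequence might be generated is shown below. The function name `oddball_sequence`, the 20% deviant probability, and the rule that at least two standards separate consecutive deviants are illustrative assumptions, not part of any standard definition of the paradigm.

```python
import random


def oddball_sequence(n_trials, deviant_prob=0.2, min_gap=2, seed=None):
    """Return a list of 'standard' / 'deviant' labels for one block.

    Deviants are placed pseudo-randomly, but a deviant is only allowed once
    at least `min_gap` standards have been presented since the last deviant
    (or since the start of the block). Because of that constraint, the
    realised deviant proportion is lower than `deviant_prob`.
    """
    rng = random.Random(seed)  # local generator keeps the sequence reproducible
    sequence = []
    standards_since_deviant = 0
    for _ in range(n_trials):
        if standards_since_deviant >= min_gap and rng.random() < deviant_prob:
            sequence.append("deviant")
            standards_since_deviant = 0
        else:
            sequence.append("standard")
            standards_since_deviant += 1
    return sequence


block = oddball_sequence(200, deviant_prob=0.2, min_gap=2, seed=1)
print(block[:20])
print("deviants:", block.count("deviant"), "of", len(block))
```

Passing a fixed `seed` makes the same block reproducible across participants or sessions; in an actual experiment each label would be mapped to a concrete stimulus, for example a standard tone and a tone of deviant frequency.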

Beatrice M. L. de Gelder is a cognitive neuroscientist and neuropsychologist. She is professor of Cognitive Neuroscience and director of the Cognitive and Affective Neuroscience Laboratory at Tilburg University (Netherlands), and was senior scientist at the Martinos Center for Biomedical Imaging, Harvard Medical School, Boston (USA). She joined the Department of Cognitive Neuroscience at Maastricht University in 2012. Her research interests include behavioral and neural emotion processing from facial and bodily expressions, multisensory perception and interaction between auditory and visual processes, and nonconscious perception in neurological patients. She is the author of books and publications. She was a Fellow of the Netherlands Institute for Advanced Study, and has been an elected member of the International Neuropsychological Symposia since 1999. She is currently the editor-in-chief of the journal Frontiers in Emotion Science and associate editor for Frontiers in Perception Science.

Emotional lateralization is the asymmetrical representation of emotional control and processing in the brain. There is evidence for the lateralization of other brain functions as well.

Visual processing abnormalities in schizophrenia are commonly found, and contribute to poor social function.

Many experiments have been done to find out how the brain interprets stimuli and how animals develop fear responses. The emotion of fear is hard-wired into almost every individual because of its vital role in survival. Researchers have found that fear is established unconsciously and that the amygdala is involved in fear conditioning.

Emotion perception refers to the capacities and abilities of recognizing and identifying emotions in others, in addition to the biological and physiological processes involved. Emotions are typically viewed as having three components: subjective experience, physical changes, and cognitive appraisal; emotion perception is the ability to make accurate decisions about another's subjective experience by interpreting their physical changes through sensory systems responsible for converting these observed changes into mental representations. The ability to perceive emotion is believed to be both innate and subject to environmental influence, and it is also a critical component in social interactions. How emotion is experienced and interpreted depends on how it is perceived. Likewise, how emotion is perceived depends on past experiences and interpretations. Emotion can be accurately perceived in humans. Emotions can be perceived visually, audibly, through smell and also through bodily sensations, and this process is believed to be different from the perception of non-emotional material.

The occipital face area (OFA) is a region of the human cerebral cortex which is specialised for face perception. The OFA is located on the lateral surface of the occipital lobe adjacent to the inferior occipital gyrus. The OFA is part of a network of brain regions, which also includes the fusiform face area (FFA) and the posterior superior temporal sulcus (STS), that supports facial processing.

References

- Rainer, G. (2008). Visual neuroscience: computational brain dynamics of face processing. Current Biology 17(21), R933–R934.

- Zhaoping, L. (2014). Understanding vision: theory, models, and data. Oxford University Press.

- Schyns, P.G., Petro, L.S., and Smith, M.L. (2007). Dynamics of visual information integration in the brain to categorize facial expressions. Current Biology 17, 1580–1585.

- Eimer, M., and Holmes, A. (2007). Event-related brain potential correlates of emotional face processing. Neuropsychologia 45, 15–31.

- Vuilleumier, P., and Pourtois, G. (2007). Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45, 174–194.

- Gosselin, F., and Schyns, P.G. (2001). Bubbles: a technique to reveal the use of information in recognition tasks. Vision Res. 41, 2261–2271.

- Paradiso, M. (2000). Visual Neuroscience: illuminating the dark corners. Current Biology 10(1), R15–R18.

- Logvinenko, A.D. (1999). Lightness induction revisited. Perception 28, 803–816.

- Schwartz, S.H. (2010). Visual Perception: a clinical orientation (fourth edition). New York: The McGraw-Hill Companies.