Related Research Articles

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both hardware and software. Computing has scientific, engineering, mathematical, technological, and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology, and software engineering.

Distributed computing is a field of computer science that studies distributed systems, defined as computer systems whose inter-communicating components are located on different networked computers.

In computing, time-sharing is the sharing of a computing resource among many tasks or users. It enables multi-tasking by a single user or enables multiple-user sessions.

In computing, a process is the instance of a computer program that is being executed by one or many threads. There are many different process models, some of which are light weight, but almost all processes are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions concurrently.

Grid computing is the use of widely distributed computer resources to reach a common goal. A computing grid can be thought of as a distributed system with non-interactive workloads that involve many files. Grid computing is distinguished from conventional high-performance computing systems such as cluster computing in that grid computers have each node set to perform a different task/application. Grid computers also tend to be more heterogeneous and geographically dispersed than cluster computers. Although a single grid can be dedicated to a particular application, commonly a grid is used for a variety of purposes. Grids are often constructed with general-purpose grid middleware software libraries. Grid sizes can be quite large.

In computing, load balancing is the process of distributing a set of tasks over a set of resources, with the aim of making their overall processing more efficient. Load balancing can optimize the response time and avoid unevenly overloading some compute nodes while other compute nodes are left idle.

RSX-11 is a discontinued family of multi-user real-time operating systems for PDP-11 computers created by Digital Equipment Corporation. In widespread use through the late 1970s and early 1980s, RSX-11 was influential in the development of later operating systems such as VMS and Windows NT.

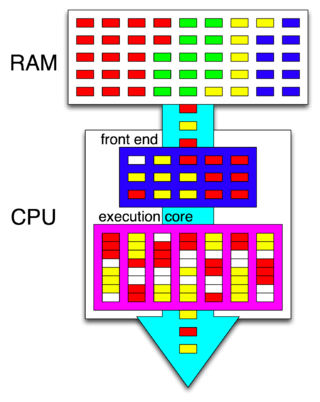

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

Hyper-threading is Intel's proprietary simultaneous multithreading (SMT) implementation used to improve parallelization of computations performed on x86 microprocessors. It was introduced on Xeon server processors in February 2002 and on Pentium 4 desktop processors in November 2002. Since then, Intel has included this technology in Itanium, Atom, and Core 'i' Series CPUs, among others.

In computer engineering, instruction pipelining is a technique for implementing instruction-level parallelism within a single processor. Pipelining attempts to keep every part of the processor busy with some instruction by dividing incoming instructions into a series of sequential steps performed by different processor units with different parts of instructions processed in parallel.

Robert "Bob" Melancton Metcalfe is an American engineer and entrepreneur who contributed to the development of the internet in the 1970s. He co-invented Ethernet, co-founded 3Com, and formulated Metcalfe's law, which describes the effect of a telecommunications network. Metcalfe has also made several predictions which failed to come to pass, including forecasting the demise of the internet during the 1990s.

Speculative execution is an optimization technique where a computer system performs some task that may not be needed. Work is done before it is known whether it is actually needed, so as to prevent a delay that would have to be incurred by doing the work after it is known that it is needed. If it turns out the work was not needed after all, most changes made by the work are reverted and the results are ignored.

Multiuser DOS is a real-time multi-user multi-tasking operating system for IBM PC-compatible microcomputers.

InfoWorld (IW) is an American information technology media business. Founded in 1978, it began as a monthly magazine. In 2007, it transitioned to a web-only publication. Its parent company today is International Data Group, and its sister publications include Macworld and PC World. InfoWorld is based in San Francisco, with contributors and supporting staff based across the U.S..

A computer network is a set of computers sharing resources located on or provided by network nodes. Computers use common communication protocols over digital interconnections to communicate with each other. These interconnections are made up of telecommunication network technologies based on physically wired, optical, and wireless radio-frequency methods that may be arranged in a variety of network topologies.

A computer is a machine that can be programmed to automatically carry out sequences of arithmetic or logical operations (computation). Modern digital electronic computers can perform generic sets of operations known as programs. These programs enable computers to perform a wide range of tasks. The term computer system may refer to a nominally complete computer that includes the hardware, operating system, software, and peripheral equipment needed and used for full operation; or to a group of computers that are linked and function together, such as a computer network or computer cluster.

Xgrid is a proprietary grid computing program and protocol developed by the Advanced Computation Group subdivision of Apple Inc.

Apache Hama is a distributed computing framework based on bulk synchronous parallel computing techniques for massive scientific computations e.g., matrix, graph and network algorithms. Originally a sub-project of Hadoop, it became an Apache Software Foundation top level project in 2012. It was created by Edward J. Yoon, who named it, and Hama also means hippopotamus in Yoon's native Korean language (하마), following the trend of naming Apache projects after animals and zoology. Hama was inspired by Google's Pregel large-scale graph computing framework described in 2010. When executing graph algorithms, Hama showed a fifty-fold performance increase relative to Hadoop.

BatteryMAX is an idle detection system used for computer power management under operating system control developed at Digital Research, Inc.'s European Development Centre (EDC) in Hungerford, UK. It was created to address the new genre of portable personal computers (laptops) which ran from battery power. As such, it was also an integral part of Novell's PalmDOS 1.0 operating system tailored for early palmtops in 1992.

In parallel computing, work stealing is a scheduling strategy for multithreaded computer programs. It solves the problem of executing a dynamically multithreaded computation, one that can "spawn" new threads of execution, on a statically multithreaded computer, with a fixed number of processors. It does so efficiently in terms of execution time, memory usage, and inter-processor communication.

References

- ↑ "Tired of waiting for your computer to do its job? Say hello to anticiparallelism". InfoWorld. 20 (32). August 10, 1998. Retrieved 2011-02-14.

- ↑ Anthes, Gary (January 7, 2002). "Anticiparallelism". ComputerWorld. International Data Group Inc.

- ↑ Hilary W. Poole; Laura Lambert; Chris Woodford; Christos J. P. Moschovitis, eds. (2005). The Internet: a historical encyclopedia. Vol. 1. ABC-CLIO. p. 178. ISBN 1-85109-659-0.

- ↑ "Managing information". ASLIB. 17. Association for Information Management: 333.