Related Research Articles

A hard disk drive (HDD), hard disk, hard drive, or fixed disk, is an electro-mechanical data storage device that stores and retrieves digital data using magnetic storage with one or more rigid rapidly rotating platters coated with magnetic material. The platters are paired with magnetic heads, usually arranged on a moving actuator arm, which read and write data to the platter surfaces. Data is accessed in a random-access manner, meaning that individual blocks of data can be stored and retrieved in any order. HDDs are a type of non-volatile storage, retaining stored data when powered off. Modern HDDs are typically in the form of a small rectangular box.

Small Computer System Interface (SCSI) is a set of standards for physically connecting and transferring data between computers and peripheral devices, best known for its use with storage devices such as hard disk drives. SCSI was introduced in the 1980s and has seen widespread use on servers and high-end workstations, with new SCSI standards being published as recently as SAS-4 in 2017.

RAID is a data storage virtualization technology that combines multiple physical disk drive components into one or more logical units for the purposes of data redundancy, performance improvement, or both. This is in contrast to the previous concept of highly reliable mainframe disk drives referred to as "single large expensive disk" (SLED).

Disk formatting is the process of preparing a data storage device such as a hard disk drive, solid-state drive, floppy disk, memory card or USB flash drive for initial use. In some cases, the formatting operation may also create one or more new file systems. The first part of the formatting process, which performs basic medium preparation, is often referred to as "low-level formatting". Partitioning is the common term for the second part of the process, dividing the device into several sub-devices and, in some cases, writing information to the device that allows an operating system to be booted from it. The third part of the process, usually termed "high-level formatting", most often refers to the process of generating a new file system. In some operating systems, all or parts of these three processes can be combined or repeated at different levels, and the term "format" is understood to mean an operation in which a new disk medium is fully prepared to store files. Some formatting utilities distinguish between a quick format, which does not erase all existing data, and a long format, which does.

In the maintenance of file systems, defragmentation is a process that reduces the degree of fragmentation. It does this by physically organizing the contents of the mass storage device used to store files into the smallest number of contiguous regions. It also attempts to create larger regions of free space using compaction to impede the return of fragmentation. Some defragmentation utilities try to keep smaller files within a single directory together, as they are often accessed in sequence.
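The effect of compaction can be illustrated with a toy model. The sketch below is an illustration only, not how any real defragmenter works: it assumes a disk is a flat list of blocks, each labelled with the name of the file that owns it or `None` when free, and it ignores the order of blocks within a file. The function names `defragment` and `fragments` are hypothetical.

```python
def fragments(disk):
    """Count contiguous runs of file-owned blocks (fewer runs = less fragmentation)."""
    runs = 0
    prev = None
    for owner in disk:
        if owner is not None and owner != prev:
            runs += 1
        prev = owner
    return runs


def defragment(disk):
    """Return a new layout in which each file occupies one contiguous region
    and all free blocks are consolidated at the end."""
    # Remember files in order of first appearance so the result is deterministic.
    order = []
    for owner in disk:
        if owner is not None and owner not in order:
            order.append(owner)

    compacted = []
    for owner in order:
        compacted.extend([owner] * disk.count(owner))
    # Compaction: free space becomes a single large region.
    compacted.extend([None] * disk.count(None))
    return compacted


disk = ["a", None, "b", "a", None, "b", "a"]
print(fragments(disk), defragment(disk), fragments(defragment(disk)))
```

In this model, the number of runs drops to one per file, and the free blocks form a single region in which new files can be written without being split.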

dd is a command-line utility for Unix, Plan 9, Inferno, and Unix-like operating systems and beyond, the primary purpose of which is to convert and copy files. On Unix, device drivers for hardware and special device files appear in the file system just like normal files; dd can also read and/or write from/to these files, provided that function is implemented in their respective driver. As a result, dd can be used for tasks such as backing up the boot sector of a hard drive, and obtaining a fixed amount of random data. The dd program can also perform conversions on the data as it is copied, including byte order swapping and conversion to and from the ASCII and EBCDIC text encodings.
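The block-oriented copying and byte swapping described above can be sketched in a few lines. This is a simplified illustration and not the dd implementation: the function `dd_copy` and its parameters are hypothetical names chosen to resemble dd's `bs`, `count` and `conv=swab` operands, and it works on any file-like objects rather than device files.

```python
import io


def dd_copy(src, dst, block_size=512, count=None, swab=False):
    """Copy up to `count` blocks of `block_size` bytes from src to dst.

    With swab=True, every pair of bytes in each block is swapped;
    a trailing odd byte is copied unchanged. Returns the bytes written.
    """
    copied = 0
    blocks = 0
    while count is None or blocks < count:
        block = src.read(block_size)
        if not block:
            break  # end of input
        if swab:
            swapped = bytearray(block)
            even_len = len(block) - (len(block) % 2)
            swapped[0:even_len:2] = block[1:even_len:2]
            swapped[1:even_len:2] = block[0:even_len:2]
            block = bytes(swapped)
        dst.write(block)
        copied += len(block)
        blocks += 1
    return copied


# Example: keep only the first 512-byte block of an in-memory "disk image".
image = io.BytesIO(bytes(range(256)) * 8)
first_sector = io.BytesIO()
dd_copy(image, first_sector, block_size=512, count=1)
```

On a Unix-like system the same function could, in principle, be given a device file opened in binary mode as `src`, which usually requires elevated privileges.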

Self-Monitoring, Analysis, and Reporting Technology is a monitoring system included in computer hard disk drives (HDDs) and solid-state drives (SSDs). Its primary function is to detect and report various indicators of drive reliability with the intent of anticipating imminent hardware failures.

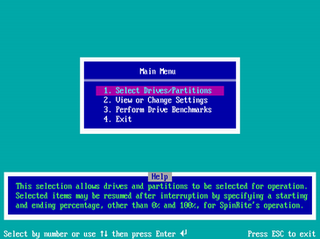

SpinRite is a computer program for scanning random-access storage (RAS) devices such as hard disks, reading and rewriting data using proprietary programming methods to resolve and retrieve data that is unreadable by DOS or Windows. The first version was released in 1987 by Steve Gibson. The current version, 6.0, was released in 2004, with ongoing development open to the public at https://www.grc.com/dev/spinrite/

The USB mass storage device class is a set of computing communications protocols, specifically a USB Device Class, defined by the USB Implementers Forum that makes a USB device accessible to a host computing device and enables file transfers between the host and the USB device. To a host, the USB device acts as an external hard drive; the protocol set interfaces with a number of storage devices.

Data scrubbing is an error correction technique that uses a background task to periodically inspect main memory or storage for errors, then corrects detected errors using redundant data in the form of different checksums or copies of data. Data scrubbing reduces the likelihood that single correctable errors will accumulate, leading to reduced risks of uncorrectable errors.
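A scrub pass over replicated data can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of any particular storage system: it assumes two or more in-memory replicas of the same blocks, a trusted CRC-32 checksum per block, and a hypothetical function name `scrub`.

```python
import zlib


def scrub(replicas, checksums):
    """Check every block of every replica against its expected CRC-32.

    A corrupted copy is overwritten from any replica whose copy still
    matches the checksum. Returns (repaired, unrecoverable), where
    `repaired` lists (replica_index, block_index) pairs and
    `unrecoverable` lists block indices with no intact copy left.
    """
    repaired, unrecoverable = [], []
    for i, expected in enumerate(checksums):
        # Look for one intact copy of block i.
        good = next((r[i] for r in replicas if zlib.crc32(r[i]) == expected), None)
        if good is None:
            unrecoverable.append(i)
            continue
        for n, replica in enumerate(replicas):
            if zlib.crc32(replica[i]) != expected:
                replica[i] = good  # repair from the intact copy
                repaired.append((n, i))
    return repaired, unrecoverable


blocks = [b"alpha", b"beta", b"gamma"]
checksums = [zlib.crc32(b) for b in blocks]
copy_a, copy_b = list(blocks), list(blocks)
copy_b[1] = b"bXta"  # simulate a single corrupted copy
print(scrub([copy_a, copy_b], checksums))
```

Running such a pass periodically repairs a block while at least one intact copy remains; if every copy of a block is damaged before the next pass, the error becomes uncorrectable.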

sync is a standard system call in the Unix operating system, which commits all data from the kernel filesystem buffers to non-volatile storage, i.e., data which has been scheduled for writing via low-level I/O system calls. Higher-level I/O layers such as stdio may maintain separate buffers of their own.
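The layering described above is visible from Python, whose file objects keep a user-space buffer comparable to stdio's. The sketch below is illustrative only; the helper names `write_durably` and `sync_everything` are hypothetical, while `os.fsync` and `os.sync` are the standard-library wrappers for the corresponding system calls (`os.sync` is available on Unix only).

```python
import os
import tempfile


def write_durably(path, data):
    """Write data and ask the kernel to commit this one file to storage."""
    with open(path, "wb") as f:
        f.write(data)          # may sit in Python's own user-space buffer
        f.flush()              # hand the data to the kernel's buffers
        os.fsync(f.fileno())   # ask the kernel to commit this file


def sync_everything():
    """Ask the kernel to commit all buffered filesystem data, where supported."""
    if hasattr(os, "sync"):
        os.sync()


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "example.bin")
    write_durably(target, b"important data")
```

Calling `os.sync()` or `os.fsync()` without first flushing the higher-level buffer would leave any data still held in that buffer unwritten, which is the point made in the last sentence above.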

The host protected area (HPA) is an area of a hard drive or solid-state drive that is not normally visible to an operating system. It was first introduced in the ATA-4 standard CXV (T13) in 2001.

In computing, error recovery control (ERC) is a feature of hard disks that allows a system administrator to configure the amount of time a drive's firmware is allowed to spend recovering from a read or write error. Limiting the recovery time allows for improved error handling in hardware or software RAID environments. In some cases, there is a conflict as to whether error handling should be undertaken by the hard drive or by the RAID implementation, which leads to drives being marked as unusable and to significant performance degradation that could otherwise have been avoided.

In computer storage, a disk buffer is the embedded memory in a hard disk drive (HDD) or solid-state drive (SSD) that acts as a buffer between the rest of the computer and the physical hard disk platter or flash memory used for storage. Modern hard disk drives come with 8 to 256 MiB of such memory, and solid-state drives come with up to 4 GB of cache memory.

badblocks is a Linux utility to check for bad sectors on a disk drive. It can create a text file with a list of these sectors that can be used with other programs, such as mkfs, so that the sectors are not used in the future and thus do not cause corruption of data. It is part of the e2fsprogs project, and a port is available for BSD operating systems.

A forensic disk controller or hardware write-block device is a specialized type of computer hard disk controller made for the purpose of gaining read-only access to computer hard drives without the risk of damaging the drive's contents. The device is named forensic because its most common application is for use in investigations where a computer hard drive may contain evidence. Such a controller historically has been made in the form of a dongle that fits between a computer and an IDE or SCSI hard drive, but with the advent of USB and SATA, forensic disk controllers supporting these newer technologies have become widespread. Steve Bress and Mark Menz invented hard drive write blocking.

SCSI / ATA Translation (SAT) is a set of standards developed by the T10 subcommittee, defining how to communicate with ATA devices through a SCSI application layer. The standard attempts to be consistent with the SCSI architectural model, the SCSI Primary Commands, and the SCSI Block Commands standards.

A trim command allows an operating system to inform a solid-state drive (SSD) which blocks of data are no longer considered to be "in use" and therefore can be erased internally.

Shingled magnetic recording (SMR) is a magnetic storage data recording technology used in hard disk drives (HDDs) to increase storage density and overall per-drive storage capacity. Conventional hard disk drives record data by writing non-overlapping magnetic tracks parallel to each other, while shingled recording writes new tracks that overlap part of the previously written magnetic track, leaving the previous track narrower and allowing higher track density. Thus, the tracks partially overlap similar to roof shingles. This approach was selected because, if the writing head is made too narrow, it cannot provide the very high fields required in the recording layer of the disk.

ATATool is freeware that is used to display and modify ATA disk information from a Microsoft Windows environment. The software is typically used to manage host protected area (HPA) and device configuration overlay (DCO) features and is broadly similar to hdparm for Linux. The software can also be used to generate and sometimes repair bad sectors. Recent versions include support for DCO restore and freeze operations, HPA security (password) operations and simulated bad sectors.

References

- Zhang (2 March 2018). "Hard vs Soft Bad Sectors in HDD: Different Causes and Solutions". Data Recovery Blog.

- Chris Hoffman (2017-07-05). "Bad Sectors Explained: Why Hard Drives Get Bad Sectors and What You Can Do About It". How-To Geek.

- "Bad Sector Remapping". mjm.co.uk.

- "badblocks - Can btrfs track / avoid bad blocks?". Unix & Linux Stack Exchange.

- Linux Programmer's Manual – Administration and Privileged Commands. "--make-bad-sector Deliberately create a bad sector (aka. "media error") on the disk. [...] Note also that the --repair-sector option can be used to restore (any) bad sectors when they are no longer needed, including sectors that were genuinely bad (the drive will likely remap those to a fresh area on the media). --write-sector: This can be used to force a drive to repair a bad sector (media error)."

- "Monitoring Hard Disks with SMART". Linux Journal, 2004.

- "Encyclopedia". PCMag.com. Ziff Davis.

- Stephens, Curtis E, ed. (December 11, 2006), Information technology - AT Attachment 8 - ATA/ATAPI Command Set (ATA8-ACS), working draft revision 3f (PDF), ANSI INCITS, pp. 198–213, 327–344, archived from the original (PDF) on 2007-07-30

- "INCITS 506-202x - Information technology - SCSI Block Commands - 4 (SBC-4) draft revision 22". 15 September 2020. Retrieved 22 May 2023.

- Lakshmi N. Bairavasundaram; Garth R. Goodson; Shankar Pasupathy; Jiri Schindler (June 2007). "An analysis of latent sector errors in disk drives". Proceedings of the 2007 ACM SIGMETRICS international conference on Measurement and modeling of computer systems. San Diego, California, United States: ACM. pp. 289–300. CiteSeerX 10.1.1.63.1412. doi:10.1145/1254882.1254917. ISBN 9781595936394. S2CID 14164251. Retrieved 9 June 2012.