The Capability Maturity Model (CMM) is a development model created in 1986 after a study of data collected from organizations that contracted with the U.S. Department of Defense, which funded the research. The term "maturity" relates to the degree of formality and optimization of processes, from ad hoc practices, to formally defined steps, to managed result metrics, to active optimization of the processes.

Business intelligence (BI) comprises the strategies and technologies used by enterprises for the data analysis and management of business information. Common functions of business intelligence technologies include reporting, online analytical processing, analytics, dashboard development, data mining, process mining, complex event processing, business performance management, benchmarking, text mining, predictive analytics, and prescriptive analytics.

Information technology (IT) governance is a subset discipline of corporate governance, focused on information technology (IT) and its performance and risk management. The interest in IT governance is due to the ongoing need within organizations to focus value creation efforts on an organization's strategic objectives and to better manage the performance of those responsible for creating this value in the best interest of all stakeholders. It has evolved from The Principles of Scientific Management, Total Quality Management and the ISO 9001 quality management system.

ISO/IEC 15504 Information technology – Process assessment, also termed Software Process Improvement and Capability Determination (SPICE), is a set of technical standards documents for the computer software development process and related business management functions. It is one of the joint International Organization for Standardization (ISO) and International Electrotechnical Commission (IEC) standards, which was developed by the ISO and IEC joint subcommittee, ISO/IEC JTC 1/SC 7.

Capability Maturity Model Integration (CMMI) is a process level improvement training and appraisal program. Administered by the CMMI Institute, a subsidiary of ISACA, it was developed at Carnegie Mellon University (CMU). It is required by many U.S. Government contracts, especially in software development. CMU claims CMMI can be used to guide process improvement across a project, division, or an entire organization. CMMI defines the following maturity levels for processes: Initial, Managed, Defined, Quantitatively Managed, and Optimizing. Version 2.0 was published in 2018. CMMI is registered in the U.S. Patent and Trademark Office by CMU.
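The ordered maturity levels listed above can be modeled as an enumeration. The following is a minimal sketch, not part of CMMI itself: the names `MaturityLevel` and `next_level` are hypothetical, and the numeric values 1 to 5 are assumed simply to follow the order in which the levels are listed.

```python
from enum import IntEnum


class MaturityLevel(IntEnum):
    """Maturity levels in ascending order (numeric values are assumed)."""
    INITIAL = 1
    MANAGED = 2
    DEFINED = 3
    QUANTITATIVELY_MANAGED = 4
    OPTIMIZING = 5


def next_level(current: MaturityLevel) -> MaturityLevel:
    """Return the next level up, or the same level if already at the top."""
    if current is MaturityLevel.OPTIMIZING:
        return current
    return MaturityLevel(current + 1)
```

Using an `IntEnum` keeps the levels comparable, so a check such as `MaturityLevel.DEFINED < MaturityLevel.OPTIMIZING` works directly.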

Enterprise risk management (ERM) in business includes the methods and processes used by organizations to manage risks and seize opportunities related to the achievement of their objectives. ERM provides a framework for risk management, which typically involves identifying particular events or circumstances relevant to the organization's objectives, assessing them in terms of likelihood and magnitude of impact, determining a response strategy, and establishing a monitoring process. By identifying and proactively addressing risks and opportunities, business enterprises protect and create value for their stakeholders, including owners, employees, customers, regulators, and society overall.
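Assessing events "in terms of likelihood and magnitude of impact" is often illustrated by multiplying the two into a single exposure figure and ranking risks by it. The sketch below assumes that convention; ERM as described here does not mandate it. The `Risk` class, the `prioritize` function, the scales, and the sample register are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: float  # probability in [0, 1] (assumed scale)
    impact: float      # magnitude of impact in arbitrary cost units (assumed)

    @property
    def exposure(self) -> float:
        # Likelihood times impact: one common convention, not the only one.
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Return risks sorted from highest to lowest exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


# Illustrative register with made-up figures.
register = [
    Risk("supplier outage", likelihood=0.10, impact=500_000),
    Risk("data breach", likelihood=0.05, impact=2_000_000),
    Risk("key staff turnover", likelihood=0.30, impact=100_000),
]
ranked = prioritize(register)
```

With these made-up figures, the low-probability but high-impact data breach ranks first, which shows why likelihood alone is a poor basis for choosing a response strategy.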

Microsoft Solutions Framework (MSF) is a set of principles, models, disciplines, concepts, and guidelines for delivering information technology services from Microsoft. MSF is not limited to developing applications only; it is also applicable to other IT projects like deployment, networking or infrastructure projects. MSF does not force the developer to use a specific methodology.

The implementation maturity model (IMM) is an instrument to help an organization in assessing and determining the degree of maturity of its implementation processes.

The Portfolio, Programme and Project Management Maturity Model (P3M3) is a management maturity model looking across an organization at how it delivers its projects, programmes and portfolio(s). P3M3 looks at the whole system and not just at one process. The hierarchical approach enables organisations to assess their capability and then plot a roadmap for improvement prioritised by those key process areas (KPAs) which will make the biggest impact on performance.

The Trillium Model, created by a collaborative team from Bell Canada, Northern Telecom and Bell Northern Research, combines requirements from the ISO 9000 series, the Capability Maturity Model (CMM) for software, and the Baldrige Criteria for Performance Excellence, with software quality standards from the IEEE. Trillium has a telecommunications orientation and provides customer focus. The practices in the Trillium Model are derived from a benchmarking exercise which focused on all practices that would contribute to an organization's product development and support capability. The Trillium Model covers all aspects of the software development life-cycle, most system and product development and support activities, and a significant number of related marketing activities. Many of the practices described in the model can be applied directly to hardware development.

An operating model is both an abstract and visual representation (model) of how an organization delivers value to its customers or beneficiaries as well as how an organization actually runs itself.

Information governance, or IG, is the overall strategy for information at an organization. Information governance balances the risk that information presents with the value that information provides. Information governance helps with legal compliance, operational transparency, and reducing expenditures associated with legal discovery. An organization can establish a consistent and logical framework for employees to handle data through their information governance policies and procedures. These policies guide proper behavior regarding how organizations and their employees handle information whether it is physically or electronically created (ESI).

In software engineering, a software development process is a process of dividing software development work into smaller, parallel, or sequential steps or sub-processes to improve design and product management. It is also known as a software development life cycle (SDLC). The methodology may include the pre-definition of specific deliverables and artifacts that are created and completed by a project team to develop or maintain an application.

IT risk management is the application of risk management methods to information technology in order to manage IT risk.

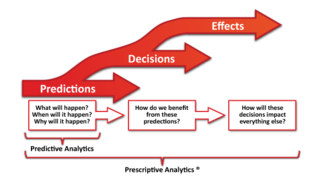

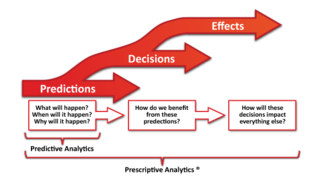

Prescriptive analytics is the third and final phase of business analytics, which also includes descriptive and predictive analytics.

Tudor IT Process Assessment (TIPA) is a methodological framework for process assessment. Its first version was published in 2003 by the Public Research Centre Henri Tudor based in Luxembourg. TIPA is now a registered trademark of the Luxembourg Institute of Science and Technology (LIST). TIPA offers a structured approach to determine process capability compared to recognized best practices. TIPA also supports process improvement by providing a gap analysis and proposing improvement recommendations.

Software Intelligence is insight into the structural condition of software assets, produced by software designed to analyze database structure, software frameworks and source code in order to better understand and control complex software systems in Information Technology environments. Similar to Business Intelligence (BI), Software Intelligence is produced by a set of software tools and techniques for mining data and the inner structure of software. The end results are information used by business and software stakeholders to make informed decisions, measure the efficiency of software development organizations, communicate about software health, and prevent software catastrophes.

Innovation management measurement helps companies understand the current status of their innovation capabilities and practices. Through this measurement, areas of strength and weakness are identified, and organizations learn where they have to concentrate to maximize the future success of their innovation procedures. Furthermore, the measurement of innovation assists firms in fostering an innovation culture within the organization and in spreading awareness of the importance of innovation. It also reveals the restrictions on creativity and the opportunities for innovation. For all these reasons, it is important to measure the degree of innovation in a company, including in comparison with other companies. On the other hand, firms have to be careful not to apply the wrong metrics, because these could threaten innovation and influence thinking in the wrong way.

Technology readiness levels (TRLs) are a method for estimating the maturity of technologies during the acquisition phase of a program. TRLs enable consistent and uniform discussions of technical maturity across different types of technology. TRL is determined during a technology readiness assessment (TRA) that examines program concepts, technology requirements, and demonstrated technology capabilities. TRLs are based on a scale from 1 to 9 with 9 being the most mature technology.

Cybersecurity Capacity Maturity Model for Nations (CMM) is a framework developed to review the cybersecurity capacity maturity of a country across five dimensions. The five dimensions cover the capacity areas a country needs in order to improve its cybersecurity posture. It was designed by the Global Cyber Security Capacity Centre (GCSCC) of the University of Oxford and is the first framework of its kind for countries to review their cybersecurity capacity, benchmark it, and receive recommendations for improvement. Each dimension is divided into factors, and the factors are broken down into aspects. The review process includes rating each factor or aspect along five stages that represent how well a country is doing with respect to that factor or aspect. The recommendations include guidance on areas of cybersecurity that need improvement and will therefore require more focus and investment. As of June 2021, the framework had been adopted and implemented in over 80 countries worldwide. Its deployment has been catalyzed by the involvement of international organizations such as the Organization of American States (OAS), the World Bank (WB), the International Telecommunication Union (ITU), the Commonwealth Telecommunications Organisation (CTO), and the Global Forum on Cyber Expertise (GFCE).
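The structure described above, with dimensions divided into factors and each factor rated along five stages, can be sketched as a small data model. This is an illustration under stated assumptions rather than the GCSCC's own tooling: the class and function names, the 1 to 5 stage numbering, the threshold, and the placeholder dimension and factor names are all hypothetical, and aspects are omitted for brevity.

```python
from dataclasses import dataclass, field


@dataclass
class Factor:
    name: str
    stage: int  # 1 (least mature) to 5 (most mature); numbering is assumed

    def __post_init__(self) -> None:
        if not 1 <= self.stage <= 5:
            raise ValueError(f"stage must be in 1..5, got {self.stage}")


@dataclass
class Dimension:
    name: str
    factors: list[Factor] = field(default_factory=list)


def weakest_factors(
    dimensions: list[Dimension], threshold: int = 2
) -> list[tuple[str, str, int]]:
    """List (dimension, factor, stage) for factors at or below the threshold."""
    return [
        (d.name, f.name, f.stage)
        for d in dimensions
        for f in d.factors
        if f.stage <= threshold
    ]


# Placeholder names only; these are not the model's real dimensions or factors.
review = [
    Dimension("Dimension A", [Factor("Factor A1", 3), Factor("Factor A2", 1)]),
    Dimension("Dimension B", [Factor("Factor B1", 2), Factor("Factor B2", 4)]),
]
flagged = weakest_factors(review)
```

Flagging factors at or below a chosen stage is one simple way to surface the areas that a set of recommendations might focus on; the threshold of 2 is arbitrary.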