In quantum mechanics, bra–ket notation, or Dirac notation, is used ubiquitously to denote quantum states. The notation uses angle brackets, ⟨ and ⟩, and a vertical bar, |, to construct "bras" and "kets".

In mathematics, any vector space V has a corresponding dual vector space consisting of all linear forms on V, together with the vector space structure of pointwise addition and scalar multiplication by constants.

In mathematics, a geometric algebra is an extension of elementary algebra to work with geometrical objects such as vectors. Geometric algebra is built out of two fundamental operations, addition and the geometric product. Multiplication of vectors results in higher-dimensional objects called multivectors. Compared to other formalisms for manipulating geometric objects, geometric algebra is noteworthy for supporting vector division and addition of objects of different dimensions.

In mathematics, an inner product space is a real vector space or a complex vector space with an operation called an inner product. The inner product of two vectors in the space is a scalar, often denoted with angle brackets, as in ⟨a, b⟩. Inner products allow formal definitions of intuitive geometric notions, such as lengths, angles, and orthogonality of vectors. Inner product spaces generalize Euclidean vector spaces, in which the inner product is the dot product or scalar product of Cartesian coordinates. Inner product spaces of infinite dimension are widely used in functional analysis. Inner product spaces over the field of complex numbers are sometimes referred to as unitary spaces. The first usage of the concept of a vector space with an inner product is due to Giuseppe Peano, in 1898.
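
The geometric notions mentioned above can be made concrete with a small sketch. It assumes the standard inner product on coordinate vectors, taken here as conjugate-linear in the first argument (the physics convention; the opposite convention is equally common). The helper names `inner`, `norm`, and `angle` are illustrative, not part of any library.

```python
import math

def inner(u, v):
    """Standard inner product; conjugate-linear in the first argument (assumed convention)."""
    return sum(a.conjugate() * b for a, b in zip(u, v))

def norm(v):
    """Length induced by the inner product."""
    return math.sqrt(inner(v, v).real)

def angle(u, v):
    """Angle in radians between two nonzero real vectors."""
    return math.acos(inner(u, v).real / (norm(u) * norm(v)))

u = [1.0, 0.0]
v = [1.0, 1.0]
w = [0.0, 2.0]

length_v = norm(v)               # length of v
theta = angle(u, v)              # angle between u and v
orthogonal = inner(u, w) == 0    # u and w are orthogonal
```

In the Euclidean case this `inner` reduces to the ordinary dot product, since conjugation leaves real numbers unchanged.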

Linear algebra is the branch of mathematics concerning linear equations, linear maps, and their representations in vector spaces and through matrices.

In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices which are Hermitian, involutory and unitary. Usually indicated by the Greek letter sigma, they are occasionally denoted by tau when used in connection with isospin symmetries.
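
The three properties named above can be verified directly. The sketch below writes the matrices in their usual representation as nested lists; `matmul` and `dagger` are small illustrative helpers, not library functions.

```python
def matmul(a, b):
    """Product of two 2 x 2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def dagger(a):
    """Conjugate transpose of a 2 x 2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]

identity = [[1, 0], [0, 1]]
sigma_x = [[0, 1], [1, 0]]
sigma_y = [[0, -1j], [1j, 0]]
sigma_z = [[1, 0], [0, -1]]

for sigma in (sigma_x, sigma_y, sigma_z):
    assert dagger(sigma) == sigma                    # Hermitian
    assert matmul(sigma, sigma) == identity          # involutory
    assert matmul(dagger(sigma), sigma) == identity  # unitary
```

For a Hermitian matrix the involutory and unitary conditions coincide, which is why the last two checks succeed together.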

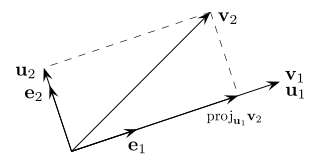

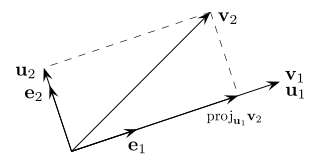

In mathematics, particularly linear algebra and numerical analysis, the Gram–Schmidt process is a method for orthonormalizing a set of vectors in an inner product space, most commonly the Euclidean space Rn equipped with the standard inner product. The Gram–Schmidt process takes a finite, linearly independent set of vectors S = {v1, ..., vk} for k ≤ n and generates an orthogonal set S′ = {u1, ..., uk} that spans the same k-dimensional subspace of Rn as S.
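
A minimal sketch of the process for real coordinate vectors follows. It assumes the input is linearly independent and uses the numerically more stable "modified" variant, which projects out each earlier direction from the running remainder. The function name `gram_schmidt` is illustrative.

```python
def dot(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors):
    """Return an orthogonal list spanning the same subspace as `vectors`.

    Assumes real, linearly independent input vectors.
    """
    orthogonal = []
    for v in vectors:
        u = list(v)
        for q in orthogonal:
            coeff = dot(u, q) / dot(q, q)   # component of the remainder along q
            u = [ui - coeff * qi for ui, qi in zip(u, q)]
        orthogonal.append(u)
    return orthogonal

S = [[3.0, 1.0], [2.0, 2.0]]
u1, u2 = gram_schmidt(S)   # u1 equals the first input; u2 is orthogonal to u1
```

Dividing each output vector by its length would turn the orthogonal set into an orthonormal one.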

In linear algebra, the outer product of two coordinate vectors is a matrix. If the two vectors have dimensions n and m, then their outer product is an n × m matrix. More generally, given two tensors, their outer product is a tensor. The outer product of tensors is also referred to as their tensor product, and can be used to define the tensor algebra.
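
For coordinate vectors with real entries, the shape rule can be illustrated in a few lines. The helper `outer` is a sketch written for this example.

```python
def outer(u, v):
    """Outer product of u (length n) and v (length m) as an n x m nested list."""
    return [[a * b for b in v] for a in u]

u = [1, 2, 3]      # n = 3
v = [4, 5]         # m = 2
M = outer(u, v)    # 3 x 2 matrix with M[i][j] = u[i] * v[j]
```

Every row of `M` is a multiple of `v`, so a nonzero outer product always has rank one.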

In mathematics, particularly in linear algebra, a skew-symmetric matrix is a square matrix whose transpose equals its negative. That is, it satisfies the condition Aᵀ = −A.
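
The defining property, that the transpose equals the negative of the matrix, can be checked entry by entry. The following is a small sketch with an illustrative helper `is_skew_symmetric` and an example matrix chosen for this purpose.

```python
def transpose(a):
    """Transpose of a matrix stored as nested lists."""
    return [list(row) for row in zip(*a)]

def is_skew_symmetric(a):
    """True if `a` is square and its transpose equals its negative."""
    n = len(a)
    if any(len(row) != n for row in a):
        return False
    return transpose(a) == [[-x for x in row] for row in a]

A = [[0, 2, -45],
     [-2, 0, -4],
     [45, 4, 0]]
result = is_skew_symmetric(A)
```

The condition forces every diagonal entry to equal its own negative, so the diagonal of a real skew-symmetric matrix is zero.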

In multilinear algebra, a tensor contraction is an operation on a tensor that arises from the natural pairing of a finite-dimensional vector space and its dual. In components, it is expressed as a sum of products of scalar components of the tensor(s) caused by applying the summation convention to a pair of dummy indices that are bound to each other in an expression. The contraction of a single mixed tensor occurs when a pair of literal indices of the tensor are set equal to each other and summed over. In Einstein notation this summation is built into the notation. The result is another tensor with order reduced by 2.
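
In components, the reduction of order by 2 can be seen in a short sketch. It stores tensor components as nested lists and ignores the distinction between upper and lower indices, which is an assumption made for illustration. Both function names are hypothetical.

```python
def contract_matrix(t):
    """Contract the two indices of an order-2 tensor: sum over i of t[i][i]."""
    return sum(t[i][i] for i in range(len(t)))

def contract_first_two(t):
    """Contract the first two indices of an order-3 tensor: w[k] = sum over i of t[i][i][k]."""
    n = len(t)
    return [sum(t[i][i][k] for i in range(n)) for k in range(len(t[0][0]))]

T2 = [[1, 2], [3, 4]]
T3 = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]

scalar = contract_matrix(T2)       # order 2 -> order 0 (the trace)
vector = contract_first_two(T3)    # order 3 -> order 1
```

Contracting an order-2 mixed tensor in this way gives the trace of the corresponding matrix.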

In mathematics, a linear form is a linear map from a vector space to its field of scalars.

In mathematics, an eigenfunction of a linear operator D defined on some function space is any non-zero function f in that space that, when acted upon by D, is only multiplied by some scaling factor called an eigenvalue. As an equation, this condition can be written as Df = λf, where λ is the eigenvalue.
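
A standard example takes D to be differentiation, for which the exponential function exp(kx) is an eigenfunction with eigenvalue k. The sketch below checks this numerically with a central-difference approximation. The value of `k`, the step size `h`, and the sample points are arbitrary choices for illustration.

```python
import math

def derivative(f, x, h=1e-6):
    """Central-difference approximation of df/dx at x."""
    return (f(x + h) - f(x - h)) / (2 * h)

k = 3.0                            # eigenvalue assumed for illustration

def f(x):
    """Candidate eigenfunction of D = d/dx."""
    return math.exp(k * x)

# (Df)(x) / f(x) should be close to k at every sample point.
ratios = [derivative(f, x) / f(x) for x in (-1.0, 0.0, 0.5)]
```

The ratio is independent of x up to discretization error, which is what distinguishes an eigenfunction from an arbitrary function.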

In physics, an operator is a function over a space of physical states onto another space of physical states. The simplest example of the utility of operators is the study of symmetry. Because of this, they are very useful tools in classical mechanics. Operators are even more important in quantum mechanics, where they form an intrinsic part of the formulation of the theory.

In linear algebra, a QR decomposition, also known as a QR factorization or QU factorization, is a decomposition of a matrix A into a product A = QR of an orthogonal matrix Q and an upper triangular matrix R. QR decomposition is often used to solve the linear least squares problem and is the basis for a particular eigenvalue algorithm, the QR algorithm.
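
One of several ways to compute the factorization is to orthonormalize the columns of A with the Gram–Schmidt process. The sketch below does this for a small real square matrix with linearly independent columns. It is meant only as an illustration; numerical libraries typically rely on Householder reflections instead. The function name `qr_decompose` is hypothetical.

```python
import math

def qr_decompose(a):
    """QR factorization of a real square matrix with independent columns."""
    n = len(a)
    cols = [[a[i][j] for i in range(n)] for j in range(n)]    # columns of A
    q_cols = []
    r = [[0.0] * n for _ in range(n)]
    for j, v in enumerate(cols):
        u = list(v)
        for i, q in enumerate(q_cols):
            r[i][j] = sum(qk * uk for qk, uk in zip(q, u))    # component along q
            u = [uk - r[i][j] * qk for uk, qk in zip(u, q)]
        r[j][j] = math.sqrt(sum(uk * uk for uk in u))         # length of the remainder
        q_cols.append([uk / r[j][j] for uk in u])
    q = [[q_cols[j][i] for j in range(n)] for i in range(n)]  # columns back to rows
    return q, r

A = [[3.0, 1.0], [4.0, 2.0]]
Q, R = qr_decompose(A)   # Q has orthonormal columns, R is upper triangular
```

Multiplying `Q` by `R` reproduces `A` up to rounding error.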

In mathematics, the Hodge star operator or Hodge star is a linear map defined on the exterior algebra of a finite-dimensional oriented vector space endowed with a nondegenerate symmetric bilinear form. Applying the operator to an element of the algebra produces the Hodge dual of the element. This map was introduced by W. V. D. Hodge.

In linear algebra and functional analysis, a projection is a linear transformation P from a vector space to itself such that P ∘ P = P. That is, whenever P is applied twice to any vector, it gives the same result as if it were applied once. It leaves its image unchanged. This definition of "projection" formalizes and generalizes the idea of graphical projection. One can also consider the effect of a projection on a geometrical object by examining the effect of the projection on points in the object.
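
The idempotence property can be illustrated with a concrete matrix. The sketch below uses the orthogonal projection of the plane onto the line spanned by (1, 1), chosen here as an example. `matmul` and `apply` are illustrative helpers.

```python
def matmul(a, b):
    """Matrix product of nested-list matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def apply(p, v):
    """Apply the matrix p to the vector v."""
    return [sum(p[i][k] * v[k] for k in range(len(v))) for i in range(len(p))]

P = [[0.5, 0.5],
     [0.5, 0.5]]          # projects onto the line spanned by (1, 1)

v = [3.0, 1.0]
once = apply(P, v)        # lands on the line
twice = apply(P, once)    # applying P again changes nothing
idempotent = matmul(P, P) == P
```

Vectors already on the line, such as `once`, are left unchanged, which is the sense in which a projection fixes its image.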

In linear algebra, the Gram matrix of a set of vectors v1, ..., vn in an inner product space is the Hermitian matrix of inner products, whose entries are given by the inner product Gij = ⟨vi, vj⟩. If the vectors v1, ..., vn are the columns of a matrix X, then the Gram matrix is X†X in the general case that the vector coordinates are complex numbers, which simplifies to XᵀX for the case that the vector coordinates are real numbers.
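
A short sketch shows how the entries are assembled and why the result is Hermitian. It assumes the standard inner product on coordinate vectors, conjugate-linear in the first argument, and the example vectors are arbitrary.

```python
def inner(u, v):
    """Standard inner product, conjugate-linear in the first argument (assumed convention)."""
    return sum(complex(a).conjugate() * b for a, b in zip(u, v))

def gram_matrix(vectors):
    """Matrix of pairwise inner products: G[i][j] = <v_i, v_j>."""
    return [[inner(vi, vj) for vj in vectors] for vi in vectors]

vectors = [[1, 1j], [1, 0], [0, 2]]
G = gram_matrix(vectors)

# Hermitian: each entry equals the complex conjugate of its mirror entry.
hermitian = all(G[i][j] == G[j][i].conjugate()
                for i in range(3) for j in range(3))
```

The three example vectors live in a two-dimensional space, so they are linearly dependent and this particular Gram matrix is singular.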

In linear algebra, given a vector space V with a basis B of vectors indexed by an index set I, the dual set of B is a set B∗ of vectors in the dual space V∗ with the same index set I such that B and B∗ form a biorthogonal system. The dual set is always linearly independent but does not necessarily span V∗. If it does span V∗, then B∗ is called the dual basis or reciprocal basis for the basis B.
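
In finite dimensions the dual basis can be computed explicitly: if the basis vectors are the columns of a matrix, the dual basis forms are the rows of its inverse. The sketch below does this for the plane, with integer coordinates and exact rational arithmetic. The function names and the example basis are illustrative.

```python
from fractions import Fraction

def dual_basis_2d(e1, e2):
    """Dual basis of a basis (e1, e2) of the plane, as coefficient rows of linear forms.

    Assumes integer or Fraction coordinates.
    """
    det = Fraction(e1[0] * e2[1] - e1[1] * e2[0])
    if det == 0:
        raise ValueError("e1 and e2 are linearly dependent")
    # Rows of the inverse of the matrix whose columns are e1 and e2.
    f1 = [e2[1] / det, -e2[0] / det]
    f2 = [-e1[1] / det, e1[0] / det]
    return f1, f2

def pair(form, vector):
    """Evaluate a linear form, given by its coefficients, on a vector."""
    return sum(c * x for c, x in zip(form, vector))

e1, e2 = [2, 0], [1, 1]
f1, f2 = dual_basis_2d(e1, e2)

# Biorthogonality: each form gives 1 on its own basis vector and 0 on the other.
table = [[pair(f, e) for e in (e1, e2)] for f in (f1, f2)]
```

The resulting `table` of pairings is the identity matrix, which is the biorthogonality condition stated above.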

In quantum mechanics, a two-state system is a quantum system that can exist in any quantum superposition of two independent quantum states. The Hilbert space describing such a system is two-dimensional. Therefore, a complete basis spanning the space will consist of two independent states. Any two-state system can also be seen as a qubit.

In linear algebra, a frame of an inner product space is a generalization of a basis of a vector space to sets that may be linearly dependent. In the terminology of signal processing, a frame provides a redundant, stable way of representing a signal. Frames are used in error detection and correction and the design and analysis of filter banks and more generally in applied mathematics, computer science, and engineering.