Related Research Articles

A central processing unit (CPU) is the most important processor in a given computer. Its electronic circuitry executes instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs).

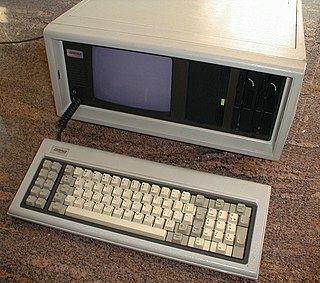

The IBM Personal Computer is the first microcomputer released in the IBM PC model line and the basis for the IBM PC compatible de facto standard. Released on August 12, 1981, it was created by a team of engineers and designers directed by William C. Lowe and Philip Don Estridge in Boca Raton, Florida.

A microprocessor is a computer processor for which the data processing logic and control are included on a single integrated circuit (IC), or a small number of ICs. The microprocessor contains the arithmetic, logic, and control circuitry required to perform the functions of a computer's central processing unit (CPU). The IC is capable of interpreting and executing program instructions and performing arithmetic operations. The microprocessor is a multipurpose, clock-driven, register-based, digital integrated circuit that accepts binary data as input, processes it according to instructions stored in its memory, and provides results as output. Microprocessors contain both combinational logic and sequential digital logic, and operate on numbers and symbols represented in the binary number system.

A motherboard is the main printed circuit board (PCB) in general-purpose computers and other expandable systems. It holds and allows communication between many of the crucial electronic components of a system, such as the central processing unit (CPU) and memory, and provides connectors for other peripherals. Unlike a backplane, a motherboard usually contains significant sub-systems, such as the central processor, the chipset's input/output and memory controllers, interface connectors, and other components integrated for general use.

A mainframe computer, informally called a mainframe or big iron, is a computer used primarily by large organizations for critical applications like bulk data processing for tasks such as censuses, industry and consumer statistics, enterprise resource planning, and large-scale transaction processing. A mainframe computer is large but not as large as a supercomputer and has more processing power than some other classes of computers, such as minicomputers, servers, workstations, and personal computers. Most large-scale computer-system architectures were established in the 1960s, but they continue to evolve. Mainframe computers are often used as servers.

A minicomputer, or colloquially mini, is a type of smaller general-purpose computer developed in the mid-1960s and sold at a much lower price than mainframe and mid-size computers from IBM and its direct competitors. In a 1970 survey, The New York Times suggested a consensus definition of a minicomputer as a machine costing less than US$25,000, with an input-output device such as a teleprinter and at least four thousand words of memory, that is capable of running programs in a higher level language, such as Fortran or BASIC.

A microcomputer is a small, relatively inexpensive computer having a central processing unit (CPU) made out of a microprocessor. The computer also includes memory and input/output (I/O) circuitry, mounted together on a printed circuit board (PCB). Microcomputers became popular in the 1970s and 1980s with the advent of increasingly powerful microprocessors. The predecessors to these computers, mainframes and minicomputers, were comparatively much larger and more expensive. Many microcomputers are also personal computers. An early use of the term "personal computer" in 1962 predates microprocessor-based designs. A "microcomputer" used as an embedded control system may have no human-readable input and output devices. "Personal computer" may be used generically or may denote an IBM PC compatible machine.

IBM PC compatible computers are computers similar to the original IBM PC, XT, and AT, all from computer giant IBM, that can use the same software and expansion cards. Such computers were referred to as PC clones, IBM clones or IBM PC clones. The term "IBM PC compatible" is now a historical description only, since IBM stopped selling personal computers when it sold its personal computer division to Chinese technology company Lenovo in 2005. The designation "PC", as used in much of personal computer history, has not meant "personal computer" generally, but rather an x86 computer capable of running the same software that a contemporary IBM PC could. The term was initially used in contrast to the variety of home computer systems available in the early 1980s, such as the Apple II, TRS-80, and Commodore 64. Later, the term was primarily used in contrast to Apple's Macintosh computers.

A desktop computer is a personal computer designed for regular use at a stationary location on or near a desk due to its size and power requirements. The most common configuration has a case that houses the power supply, motherboard, and disk storage; a keyboard and mouse for input; and a monitor, speakers, and, often, a printer for output. The case may be oriented horizontally or vertically and placed either underneath, beside, or on top of a desk.

A workstation is a special computer designed for technical or scientific applications. Intended primarily to be used by a single user, workstations are commonly connected to a local area network and run multi-user operating systems. The term workstation has been used loosely to refer to everything from a mainframe computer terminal to a PC connected to a network, but the most common form refers to the class of hardware offered by several current and defunct companies such as Sun Microsystems, Silicon Graphics, Apollo Computer, DEC, HP, NeXT, and IBM, which powered the 3D computer graphics revolution of the late 1990s.

The history of computing hardware starting at 1960 is marked by the conversion from vacuum tube to solid-state devices such as transistors and then integrated circuit (IC) chips. Around 1953 to 1959, discrete transistors started being considered sufficiently reliable and economical that they made further vacuum tube computers uncompetitive. Metal–oxide–semiconductor (MOS) large-scale integration (LSI) technology subsequently led to the development of semiconductor memory in the mid-to-late 1960s and then the microprocessor in the early 1970s. This led to primary computer memory moving away from magnetic-core memory devices to solid-state static and dynamic semiconductor memory, which greatly reduced the cost, size, and power consumption of computers. These advances led to the miniaturized personal computer (PC) in the 1970s, starting with home computers and desktop computers, followed by laptops and then mobile computers over the next several decades.

Project Athena was a joint project of MIT, Digital Equipment Corporation, and IBM to produce a campus-wide distributed computing environment for educational use. It was launched in 1983, and research and development ran until June 30, 1991. As of 2023, Athena is still in production use at MIT. It works as software that makes a machine a thin client, which downloads educational applications from the MIT servers on demand.

A blade server is a stripped-down server computer with a modular design optimized to minimize the use of physical space and energy. Blade servers have many components removed to save space and minimize power consumption, among other considerations, while still having all the functional components to be considered a computer. Unlike a rack-mount server, a blade server fits inside a blade enclosure, which can hold multiple blade servers and provides services such as power, cooling, networking, various interconnects and management. Together, blades and the blade enclosure form a blade system, which may itself be rack-mounted. Different blade providers have differing principles regarding what to include in the blade itself, and in the blade system as a whole.

Midrange computers, or midrange systems, were a class of computer systems that fell in between mainframe computers and microcomputers.

The history of general-purpose CPUs is a continuation of the earlier history of computing hardware.

Following the introduction of the IBM Personal Computer, or IBM PC, many other personal computer architectures became extinct within just a few years. The IBM PC's introduction also led to a wave of IBM PC compatible systems being released.

The history of the personal computer as a mass-market consumer electronic device began with the microcomputer revolution of the 1970s. A personal computer is one intended for interactive individual use, as opposed to a mainframe computer where the end user's requests are filtered through operating staff, or a time-sharing system in which one large processor is shared by many individuals. After the development of the microprocessor, individual personal computers were low enough in cost that they eventually became affordable consumer goods. Early personal computers, generally called microcomputers, were often sold in electronic kit form and in limited numbers, and were of interest mostly to hobbyists and technicians.

A personal computer, often referred to as a PC, is a computer designed for individual use. It is typically used for tasks such as word processing, internet browsing, email, multimedia playback, and gaming. Personal computers are intended to be operated directly by an end user, rather than by a computer expert or technician. Unlike large, costly minicomputers and mainframes, personal computers are not time-shared by many people at the same time. The term home computer has also been used, primarily in the late 1970s and 1980s. The advent of personal computers and the concurrent Digital Revolution have significantly affected the lives of people in all countries.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing.

Computers can be classified, or typed, in many ways. Some common classifications of computers are given below.
