Related Research Articles

In computer networking, a thin client, sometimes called a slim client or lean client, is a simple (low-performance) computer that has been optimized for establishing a remote connection with a server-based computing environment. Thin clients are sometimes known as network computers or, in their simplest form, as zero clients. The server does most of the work, which can include launching software programs, performing calculations, and storing data. This contrasts with a rich client or a conventional personal computer: the former is also intended for working in a client–server model but has significant local processing power, while the latter aims to perform its function mostly locally.

A supercomputer is a type of computer with a high level of performance compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed that can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's 500 fastest supercomputers have run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
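The performance figures above are powers of ten expressed with SI prefixes. The sketch below shows that arithmetic; `format_flops` and `peak_flops` are hypothetical helper names, not part of any library. `peak_flops` computes a theoretical peak as cores × clock rate × floating-point operations per core per cycle, and the 16 operations per cycle used in the example is an illustrative assumption rather than a property of any specific CPU.

```python
# Sketch: SI-prefix arithmetic for FLOPS figures. Helper names are hypothetical.
SI_PREFIXES = [
    (10**18, "exa"),
    (10**15, "peta"),
    (10**12, "tera"),
    (10**9, "giga"),
]


def format_flops(flops):
    """Render a FLOPS count with the largest SI prefix that fits, e.g. 1e17 -> '100 petaFLOPS'."""
    for scale, prefix in SI_PREFIXES:
        if flops >= scale:
            # ':g' drops a trailing '.0', so 100.0 prints as '100'.
            return f"{flops / scale:g} {prefix}FLOPS"
    return f"{flops:g} FLOPS"


def peak_flops(cores, clock_hz, flops_per_cycle):
    """Theoretical peak = cores x clock rate (Hz) x floating-point operations per core per cycle."""
    return cores * clock_hz * flops_per_cycle


print(format_flops(10**17))  # 100 petaFLOPS
# Hypothetical 8-core desktop CPU at 3.0 GHz, assuming 16 FLOPs per core per cycle.
print(format_flops(peak_flops(8, 3.0e9, 16)))  # 384 gigaFLOPS
```

Sustained performance on real workloads is lower than this theoretical peak, which is why rankings rely on measured benchmark results rather than on the formula alone.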

A workstation is a special computer designed for technical or scientific applications. Intended primarily for a single user, workstations are commonly connected to a local area network and run multi-user operating systems. The term workstation has been used loosely to refer to everything from a mainframe computer terminal to a PC connected to a network, but the most common form refers to the class of hardware offered by several current and defunct companies such as Sun Microsystems, Silicon Graphics, Apollo Computer, DEC, HP, NeXT, and IBM, which powered the 3D computer graphics revolution of the late 1990s.

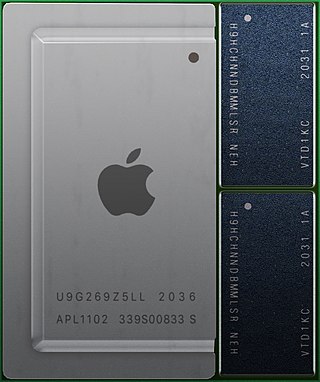

A system on a chip (SoC), or system-on-chip, is an integrated circuit that integrates most or all components of a computer or other electronic system. These components almost always include an on-chip central processing unit (CPU), memory interfaces, input/output devices and interfaces, and secondary storage interfaces, often alongside other components such as radio modems and a graphics processing unit (GPU), all on a single substrate or microchip. SoCs may contain digital as well as analog, mixed-signal and often radio-frequency signal processing functions.

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.
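Data parallelism, dividing a large problem into smaller ones that are solved at the same time, can be sketched as follows: the input is split into slices, each worker processes its own slice independently, and the partial results are combined. The function names are illustrative. In CPython, threads share a global interpreter lock, so this thread-based version demonstrates the decomposition rather than a real CPU speedup; `concurrent.futures.ProcessPoolExecutor` exposes the same `map` interface and runs the workers in separate processes.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data, n_chunks):
    """Split data into n_chunks contiguous slices whose sizes differ by at most one."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """Work done by one worker on its own slice; it needs no data from other slices."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Data parallelism: map the same function over slices, then reduce the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(partial_sum_of_squares, chunked(data, workers))
        return sum(partials)


print(parallel_sum_of_squares(list(range(1000))))
```

The final `sum` over partial results is the combining (reduction) step; it is cheap compared with the per-slice work, which is what makes the split worthwhile.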

Reconfigurable computing is a computer architecture combining some of the flexibility of software with the high performance of hardware by processing with flexible hardware platforms like field-programmable gate arrays (FPGAs). The principal difference when compared to using ordinary microprocessors is the ability to add custom computational blocks using FPGAs. On the other hand, the main difference from custom hardware, i.e. application-specific integrated circuits (ASICs), is the possibility to adapt the hardware during runtime by "loading" a new circuit on the reconfigurable fabric, thus providing new computational blocks without the need to manufacture and add new chips to the existing system.
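The basic logic element of many FPGAs is a lookup table (LUT): a small memory whose contents define which Boolean function the element computes. The toy software model below (the `LookupTable` class is hypothetical) shows why "loading" a new configuration changes the function without changing the hardware. Real FPGAs are reconfigured by loading a bitstream produced by vendor tools, which this sketch does not attempt to model.

```python
class LookupTable:
    """Toy model of a k-input FPGA lookup table (LUT).

    The configuration is just a truth table: entry i holds the output for the
    input bits that encode the integer i (first input = most significant bit).
    """

    def __init__(self, k, truth_table):
        self.k = k
        self.load(truth_table)

    def load(self, truth_table):
        """'Reconfigure' the LUT at runtime by loading a new truth table."""
        if len(truth_table) != 2 ** self.k:
            raise ValueError(f"expected {2 ** self.k} entries, got {len(truth_table)}")
        self.truth_table = list(truth_table)

    def __call__(self, *bits):
        if len(bits) != self.k:
            raise ValueError(f"expected {self.k} input bits")
        index = 0
        for bit in bits:
            index = (index << 1) | (bit & 1)
        return self.truth_table[index]


AND = [0, 0, 0, 1]  # outputs for inputs 00, 01, 10, 11
XOR = [0, 1, 1, 0]

lut = LookupTable(2, AND)
print([lut(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]
lut.load(XOR)  # same "hardware", new function
print([lut(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```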

A coprocessor is a computer processor used to supplement the functions of the primary processor. Operations performed by the coprocessor may be floating-point arithmetic, graphics, signal processing, string processing, cryptography or I/O interfacing with peripheral devices. By offloading processor-intensive tasks from the main processor, coprocessors can accelerate system performance. Coprocessors allow a line of computers to be customized, so that customers who do not need the extra performance do not need to pay for it.

A graphics processing unit (GPU) is a specialized electronic circuit initially designed to accelerate computer graphics and image processing. After their initial design, GPUs were found to be useful for non-graphic calculations involving embarrassingly parallel problems due to their parallel structure. Other non-graphical uses include the training of neural networks and cryptocurrency mining.

General-purpose computing on graphics processing units is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU). The use of multiple video cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing.
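GPU programming models such as CUDA express embarrassingly parallel work as a kernel launched over a grid of thread blocks, where each thread derives its global index as `blockIdx.x * blockDim.x + threadIdx.x` and processes one element. The Python sketch below only emulates that indexing sequentially, so it runs on a CPU; `launch` is a hypothetical helper, not a real API. The example operation is SAXPY (a·x + y, written here to a separate output list, and in double precision because Python floats are doubles). Because no thread reads another thread's result, the invocation order does not matter, which is exactly what lets a GPU run them concurrently.

```python
def saxpy_kernel(i, a, x, y, out):
    """Body executed by one GPU 'thread': it touches only element i."""
    if i < len(x):  # bounds guard: the grid may contain more threads than elements
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_size, block_size, *args):
    """Sequentially emulate a 1-D launch of grid_size blocks of block_size threads."""
    for block_idx in range(grid_size):
        for thread_idx in range(block_size):
            kernel(block_idx * block_size + thread_idx, *args)


n = 10
x = [float(v) for v in range(n)]
y = [1.0] * n
out = [0.0] * n
block = 4
grid = (n + block - 1) // block  # ceil(10 / 4) = 3 blocks, i.e. 12 threads, 2 idle
launch(saxpy_kernel, grid, block, 2.0, x, y, out)
print(out)
```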

Hardware acceleration is the use of computer hardware designed to perform specific functions more efficiently when compared to software running on a general-purpose central processing unit (CPU). Any transformation of data that can be calculated in software running on a generic CPU can also be calculated in custom-made hardware, or in some mix of both.

In computer science, stream processing is a programming paradigm which views streams, or sequences of events in time, as the central input and output objects of computation. Stream processing encompasses dataflow programming, reactive programming, and distributed data processing. Stream processing systems aim to expose parallel processing for data streams and rely on streaming algorithms for efficient implementation. The software stack for these systems includes components such as programming models and query languages, for expressing computation; stream management systems, for distribution and scheduling; and hardware components for acceleration including floating-point units, graphics processing units, and field-programmable gate arrays.
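The core idea, computation expressed as stages that consume and produce sequences of events, can be sketched with Python generators. Each stage pulls events lazily from the previous one, and the windowed aggregate uses memory proportional to the window size rather than to the length of the stream, which is the defining property of a streaming algorithm. The `(timestamp, value)` event shape, the count-based window, and all function names are assumptions for illustration; production stream processing systems add distribution, scheduling, and time-based windows on top of this model.

```python
from collections import deque
from typing import Iterable, Iterator, Tuple

Event = Tuple[float, float]  # (timestamp_seconds, value); hypothetical event shape


def parse(lines: Iterable[str]) -> Iterator[Event]:
    """Source stage: turn raw 'timestamp,value' lines into events, one at a time."""
    for line in lines:
        ts, value = line.split(",")
        yield float(ts), float(value)


def only_positive(events: Iterable[Event]) -> Iterator[Event]:
    """Filter stage: drop events whose value is not positive."""
    for ts, value in events:
        if value > 0:
            yield ts, value


def moving_average(events: Iterable[Event], window: int) -> Iterator[Tuple[float, float]]:
    """Aggregate stage: mean of the last `window` values, emitted for every event."""
    buf = deque(maxlen=window)
    total = 0.0
    for ts, value in events:
        if len(buf) == buf.maxlen:
            total -= buf[0]  # this value is evicted by the append below
        buf.append(value)
        total += value
        yield ts, total / len(buf)


raw = ["0,2", "1,-1", "2,4", "3,6", "4,8"]
for ts, avg in moving_average(only_positive(parse(raw)), window=2):
    print(ts, avg)
```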

The Texas Advanced Computing Center (TACC) at the University of Texas at Austin, United States, is an advanced computing research center that provides comprehensive advanced computing resources and support services to researchers in Texas and across the U.S. The mission of TACC is to enable discoveries that advance science and society through the application of advanced computing technologies. Specializing in high-performance computing, scientific visualization, data analysis and storage systems, software, research and development, and portal interfaces, TACC deploys and operates advanced computational infrastructure to enable the research activities of faculty, staff, and students of UT Austin. TACC also provides consulting, technical documentation, and training to support researchers who use these resources. TACC staff members conduct research and development in applications and algorithms, computing systems design and architecture, and programming tools and environments.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing.
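The scheduling software that spreads work across cluster nodes can be illustrated with a greedy "least-loaded node first" policy, a simple form of list scheduling: jobs are sorted largest first (the LPT heuristic) and each is assigned to the node with the smallest total load so far. Job names, costs, and node names are hypothetical, and production cluster schedulers use far richer policies (priorities, queues, resource constraints).

```python
import heapq


def schedule_least_loaded(job_costs, node_names):
    """Greedy list scheduling: give each job to the node with the least work so far.

    job_costs: dict mapping job name -> estimated cost (arbitrary units).
    Returns a dict mapping node name -> list of assigned job names.
    """
    if not node_names:
        raise ValueError("need at least one node")
    heap = [(0, name) for name in node_names]  # (current load, node name)
    heapq.heapify(heap)
    plan = {name: [] for name in node_names}
    # Largest jobs first; sorted() is stable, so equal-cost jobs keep their input order.
    for job, cost in sorted(job_costs.items(), key=lambda kv: kv[1], reverse=True):
        load, name = heapq.heappop(heap)
        plan[name].append(job)
        heapq.heappush(heap, (load + cost, name))
    return plan


jobs = {"a": 5, "b": 4, "c": 3, "d": 3, "e": 1}
print(schedule_least_loaded(jobs, ["node1", "node2"]))
```

With these inputs both nodes end up with a total load of 8, which shows how the policy balances work even though the individual jobs differ in size.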

Computer hardware includes the physical parts of a computer, such as the central processing unit (CPU), random access memory (RAM), motherboard, computer data storage, graphics card, sound card, and computer case. It includes external devices such as a monitor, mouse, keyboard, and speakers.

This glossary of computer hardware terms is a list of definitions of terms and concepts related to computer hardware, i.e. the physical and structural components of computers, architectural issues, and peripheral devices.

Heterogeneous System Architecture (HSA) is a cross-vendor set of specifications that allow for the integration of central processing units and graphics processors on the same bus, with shared memory and tasks. The HSA is being developed by the HSA Foundation, which includes AMD and ARM. The platform's stated aim is to reduce communication latency between CPUs, GPUs and other compute devices, and make these various devices more compatible from a programmer's perspective, relieving the programmer of the task of planning the moving of data between devices' disjoint memories.

Heterogeneous computing refers to systems that use more than one kind of processor or core. These systems gain performance or energy efficiency not just by adding the same type of processors, but by adding dissimilar coprocessors, usually incorporating specialized processing capabilities to handle particular tasks.

TensorFlow is a free and open-source software library for machine learning and artificial intelligence. It can be used across a range of tasks but has a particular focus on training and inference of deep neural networks.

An AI accelerator, deep learning processor, or neural processing unit (NPU) is a class of specialized hardware accelerator or computer system designed to accelerate artificial intelligence and machine learning applications, including artificial neural networks and machine vision. Typical applications include algorithms for robotics, Internet of Things, and other data-intensive or sensor-driven tasks. They are often manycore designs and generally focus on low-precision arithmetic, novel dataflow architectures or in-memory computing capability. As of 2024, a typical AI integrated circuit chip contains tens of billions of MOSFETs.
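The low-precision arithmetic mentioned above can be illustrated with symmetric int8 quantization, one common scheme among several: floats are mapped to integers in [-127, 127] using a single scale factor, the dot product is computed on the integer codes, and one final multiplication by the two scales recovers an approximate float result. This is a generic sketch, not the scheme used by any particular accelerator; the function names are illustrative.

```python
def quantize_symmetric(values, bits=8):
    """Map floats to signed integer codes sharing one scale (symmetric quantization)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def int8_dot(a, b):
    """Approximate dot product of two float vectors using their int8 codes.

    The products are accumulated as Python ints here; hardware typically uses a
    wider accumulator (for example 32-bit) to avoid overflow.
    """
    qa, sa = quantize_symmetric(a)
    qb, sb = quantize_symmetric(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only multiply-accumulate
    return acc * sa * sb  # single rescale back to float


a = [0.5, -1.0, 0.25]
b = [1.0, 0.5, -2.0]
print(int8_dot(a, b), sum(x * y for x, y in zip(a, b)))
```

The small gap between the two printed values is the quantization error that accelerator designs trade for cheaper, denser integer arithmetic.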
