In computer science, an array is a data structure consisting of a collection of elements, of same memory size, each identified by at least one array index or key. An array is stored such that the position of each element can be computed from its index tuple by a mathematical formula. The simplest type of data structure is a linear array, also called one-dimensional array.

In computing, multitasking is the concurrent execution of multiple tasks over a certain period of time. New tasks can interrupt already started ones before they finish, instead of waiting for them to end. As a result, a computer executes segments of multiple tasks in an interleaved manner, while the tasks share common processing resources such as central processing units (CPUs) and main memory. Multitasking automatically interrupts the running program, saving its state and loading the saved state of another program and transferring control to it. This "context switch" may be initiated at fixed time intervals, or the running program may be coded to signal to the supervisory software when it can be interrupted.

In computing, a context switch is the process of storing the state of a process or thread, so that it can be restored and resume execution at a later point, and then restoring a different, previously saved, state. This allows multiple processes to share a single central processing unit (CPU), and is an essential feature of a multiprogramming or multitasking operating system. In a traditional CPU, each process - a program in execution - utilizes the various CPU registers to store data and hold the current state of the running process. However, in a multitasking operating system, the operating system switches between processes or threads to allow the execution of multiple processes simultaneously. For every switch, the operating system must save the state of the currently running process, followed by loading the next process state, which will run on the CPU. This sequence of operations that stores the state of the running process and the loading of the following running process is called a context switch.

In computer science, a data structure is a data organization, management, and storage format that is usually chosen for efficient access to data. More precisely, a data structure is a collection of data values, the relationships among them, and the functions or operations that can be applied to the data, i.e., it is an algebraic structure about data.

Forth is a procedural, concatenative, stack-oriented programming language and interactive development environment designed by Charles H. "Chuck" Moore and first used by other programmers in 1970. Although not an acronym, the language's name in its early years was often spelled in all capital letters as FORTH. The FORTH-79 and FORTH-83 implementations, which were not written by Moore, became de facto standards, and an official standardization of the language was published in 1994 as ANS Forth. A wide range of Forth derivatives existed before and after ANS Forth. The free software Gforth implementation is actively maintained, as are several commercially supported systems.

An operating system (OS) is system software that manages computer hardware and software resources, and provides common services for computer programs.

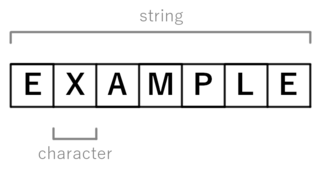

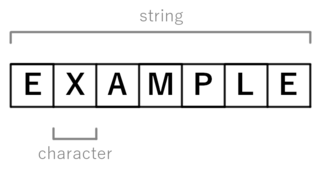

In computer programming, a string is traditionally a sequence of characters, either as a literal constant or as some kind of variable. The latter may allow its elements to be mutated and the length changed, or it may be fixed. A string is generally considered as a data type and is often implemented as an array data structure of bytes that stores a sequence of elements, typically characters, using some character encoding. String may also denote more general arrays or other sequence data types and structures.

A memory management unit (MMU), sometimes called paged memory management unit (PMMU), is a computer hardware unit that examines all memory references on the memory bus, translating these requests, known as virtual memory addresses, into physical addresses in main memory.

In computer science, primitive data types are a set of basic data types from which all other data types are constructed. Specifically it often refers to the limited set of data representations in use by a particular processor, which all compiled programs must use. Most processors support a similar set of primitive data types, although the specific representations vary. More generally, "primitive data types" may refer to the standard data types built into a programming language. Data types which are not primitive are referred to as derived or composite.

In computer programming, the stride of an array is the number of locations in memory between beginnings of successive array elements, measured in bytes or in units of the size of the array's elements. The stride cannot be smaller than the element size but can be larger, indicating extra space between elements.

Execution in computer and software engineering is the process by which a computer or virtual machine reads and acts on the instructions of a computer program. Each instruction of a program is a description of a particular action which must be carried out, in order for a specific problem to be solved. Execution involves repeatedly following a 'fetch–decode–execute' cycle for each instruction done by control unit. As the executing machine follows the instructions, specific effects are produced in accordance with the semantics of those instructions.

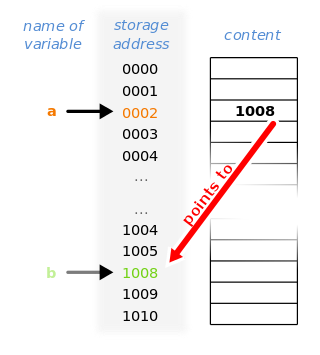

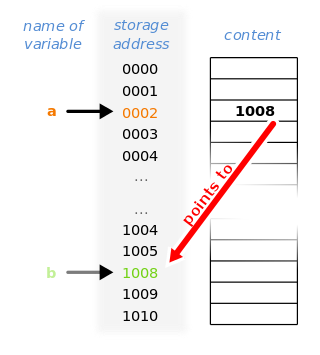

In computer science, a pointer is an object in many programming languages that stores a memory address. This can be that of another value located in computer memory, or in some cases, that of memory-mapped computer hardware. A pointer references a location in memory, and obtaining the value stored at that location is known as dereferencing the pointer. As an analogy, a page number in a book's index could be considered a pointer to the corresponding page; dereferencing such a pointer would be done by flipping to the page with the given page number and reading the text found on that page. The actual format and content of a pointer variable is dependent on the underlying computer architecture.

Addressing modes are an aspect of the instruction set architecture in most central processing unit (CPU) designs. The various addressing modes that are defined in a given instruction set architecture define how the machine language instructions in that architecture identify the operand(s) of each instruction. An addressing mode specifies how to calculate the effective memory address of an operand by using information held in registers and/or constants contained within a machine instruction or elsewhere.

The TMS9900 was one of the first commercially available, single-chip 16-bit microprocessors. Introduced in June 1976, it implemented Texas Instruments' TI-990 minicomputer architecture in a single-chip format, and was initially used for low-end models of that lineup.

In computing, a word is the natural unit of data used by a particular processor design. A word is a fixed-sized datum handled as a unit by the instruction set or the hardware of the processor. The number of bits or digits in a word is an important characteristic of any specific processor design or computer architecture.

In computer science, a call stack is a stack data structure that stores information about the active subroutines of a computer program. This type of stack is also known as an execution stack, program stack, control stack, run-time stack, or machine stack, and is often shortened to simply "the stack". Although maintenance of the call stack is important for the proper functioning of most software, the details are normally hidden and automatic in high-level programming languages. Many computer instruction sets provide special instructions for manipulating stacks.

Data structure alignment is the way data is arranged and accessed in computer memory. It consists of three separate but related issues: data alignment, data structure padding, and packing.

A bit field is a data structure that consists of one or more adjacent bits which have been allocated for specific purposes, so that any single bit or group of bits within the structure can be set or inspected. A bit field is most commonly used to represent integral types of known, fixed bit-width, such as single-bit Booleans.

In computer programming, a branch table or jump table is a method of transferring program control (branching) to another part of a program using a table of branch or jump instructions. It is a form of multiway branch. The branch table construction is commonly used when programming in assembly language but may also be generated by compilers, especially when implementing optimized switch statements whose values are densely packed together.

This glossary of computer science is a list of definitions of terms and concepts used in computer science, its sub-disciplines, and related fields, including terms relevant to software, data science, and computer programming.