An adiabatic process is a type of thermodynamic process that occurs without transferring heat or mass between the thermodynamic system and its environment. Unlike an isothermal process, an adiabatic process transfers energy to the surroundings only as work. As a key concept in thermodynamics, the adiabatic process supports the theory that explains the first law of thermodynamics.
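As a rough numerical illustration, the sketch below assumes a reversible adiabatic change of an ideal gas, for which the pressure and volume satisfy p·V^γ = constant. The function name and the default `gamma = 1.4` (approximately the value for dry air) are assumptions made for this example.

```python
def adiabatic_final_pressure(p1: float, v1: float, v2: float, gamma: float = 1.4) -> float:
    """Pressure after a reversible adiabatic volume change of an ideal gas.

    Relies on p1 * v1**gamma == p2 * v2**gamma. The default gamma of 1.4 is an
    assumed value, roughly that of dry air near room temperature.
    """
    if p1 <= 0 or v1 <= 0 or v2 <= 0:
        raise ValueError("pressure and volumes must be positive")
    if gamma <= 1:
        raise ValueError("gamma must be greater than 1")
    return p1 * (v1 / v2) ** gamma


# Halving the volume raises the pressure by more than a factor of two,
# because the work done on the gas cannot leave as heat.
p2 = adiabatic_final_pressure(p1=100.0, v1=2.0, v2=1.0)
```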

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates.
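A minimal sketch of the idea, using run-length encoding as a stand-in for a lossless scheme (it is only one of many, and is chosen here for brevity): repeated characters are the redundancy being removed, and decoding restores the input exactly. The function names are illustrative.

```python
from itertools import groupby


def rle_encode(data: str) -> list[tuple[str, int]]:
    """Collapse each run of identical characters into a (character, count) pair."""
    return [(ch, len(list(run))) for ch, run in groupby(data)]


def rle_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (character, count) pairs back into the original string."""
    return "".join(ch * count for ch, count in pairs)


original = "aaaaaaaabbbbcccccccccc"
encoded = rle_encode(original)
restored = rle_decode(encoded)  # identical to `original`: nothing was lost
```

Run-length encoding only helps when the data actually contains long runs; on data without that redundancy the encoded form can be larger than the input.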

Signal-to-noise ratio is a measure used in science and engineering that compares the level of a desired signal to the level of background noise. SNR is defined as the ratio of signal power to noise power, often expressed in decibels. A ratio higher than 1:1 indicates more signal than noise.
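The power ratio and its decibel form can be sketched as follows. The function name `snr_db` is illustrative; the conversion used is the standard 10·log10 of a power ratio.

```python
import math


def snr_db(signal_power: float, noise_power: float) -> float:
    """Signal-to-noise ratio in decibels, given two powers in the same units."""
    if signal_power <= 0 or noise_power <= 0:
        raise ValueError("powers must be positive")
    return 10.0 * math.log10(signal_power / noise_power)


# A 1:1 power ratio corresponds to 0 dB; more signal than noise gives a positive value.
equal = snr_db(1.0, 1.0)
strong = snr_db(100.0, 1.0)
```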

A discrete cosine transform (DCT) expresses a finite sequence of data points in terms of a sum of cosine functions oscillating at different frequencies. The DCT, first proposed by Nasir Ahmed in 1972, is a widely used transformation technique in signal processing and data compression. It is used in most digital media, including digital images, digital video, digital audio, digital television, digital radio, and speech coding. DCTs are also important to numerous other applications in science and engineering, such as digital signal processing, telecommunication devices, reducing network bandwidth usage, and spectral methods for the numerical solution of partial differential equations.
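For illustration, here is a direct, unoptimized sketch of one common variant, the unnormalized DCT-II. Practical codecs use fast algorithms and particular scaling conventions, so treat the normalization here as an assumption of this example.

```python
import math


def dct_ii(x: list[float]) -> list[float]:
    """Unnormalized DCT-II: X[k] = sum over i of x[i] * cos(pi / N * (i + 0.5) * k)."""
    n = len(x)
    return [
        sum(x[i] * math.cos(math.pi / n * (i + 0.5) * k) for i in range(n))
        for k in range(n)
    ]


# A constant signal has no oscillation, so only the k = 0 coefficient is
# (numerically) non-zero.
coefficients = dct_ii([1.0, 1.0, 1.0, 1.0])
```

This is the property compression schemes exploit: smooth inputs concentrate their energy in a few low-frequency coefficients, and the remaining coefficients can be stored coarsely or dropped.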

In computing, Deflate is a lossless data compression file format that uses a combination of LZ77 and Huffman coding. It was designed by Phil Katz for version 2 of his PKZIP archiving tool, and was later specified in RFC 1951 (1996).
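Python's standard `zlib` module can produce and consume raw Deflate streams when given a negative `wbits` value, which omits the zlib header and checksum. The sketch below shows a round trip; the helper names are illustrative, and the compression level of 6 is an arbitrary choice.

```python
import zlib


def deflate_raw(data: bytes, level: int = 6) -> bytes:
    """Compress to a raw Deflate stream (wbits=-15 means no zlib or gzip wrapper)."""
    compressor = zlib.compressobj(level, zlib.DEFLATED, -15)
    return compressor.compress(data) + compressor.flush()


def inflate_raw(stream: bytes) -> bytes:
    """Decompress a raw Deflate stream produced by deflate_raw."""
    return zlib.decompress(stream, -15)


text = b"abracadabra " * 200
packed = deflate_raw(text)
unpacked = inflate_raw(packed)  # byte-for-byte equal to `text`
```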

Golomb coding is a lossless data compression method using a family of data compression codes invented by Solomon W. Golomb in the 1960s. Alphabets following a geometric distribution will have a Golomb code as an optimal prefix code, making Golomb coding highly suitable for situations in which the occurrence of small values in the input stream is significantly more likely than large values.
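A minimal encoder sketch, assuming a common convention: the quotient `n // m` is written in unary as that many `1` bits followed by a `0`, and the remainder is written in truncated binary. Other texts swap the roles of `0` and `1`, so the exact bit patterns are an assumption of this example.

```python
def golomb_encode(n: int, m: int) -> str:
    """Golomb code of a non-negative integer n with parameter m, as a bit string."""
    if n < 0 or m < 1:
        raise ValueError("n must be >= 0 and m must be >= 1")
    q, r = divmod(n, m)
    bits = "1" * q + "0"              # unary quotient, terminated by a zero
    b = (m - 1).bit_length()          # ceil(log2(m))
    cutoff = (1 << b) - m             # remainders below this use b - 1 bits
    if r < cutoff:
        width, value = b - 1, r
    else:
        width, value = b, r + cutoff
    if width > 0:
        bits += format(value, f"0{width}b")
    return bits


# Small values get short codewords, which suits geometrically distributed input.
codes = [golomb_encode(n, 3) for n in range(6)]
```

When `m` is a power of two the cutoff is zero, every remainder uses the same number of bits, and the scheme reduces to Rice coding.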

In telecommunications and computing, bit rate is the number of bits that are conveyed or processed per unit of time.

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms.
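Rounding to a fixed step size is the simplest case, and can be sketched as a uniform quantizer. The function name and the step values are illustrative.

```python
def quantize(x: float, step: float) -> float:
    """Map x to the nearest multiple of step (a uniform quantizer)."""
    if step <= 0:
        raise ValueError("step must be positive")
    return round(x / step) * step


# Many distinct inputs collapse onto the same output level, which is why
# quantization cannot be undone exactly.
levels = [quantize(v, 0.25) for v in (0.30, 0.37, 0.20)]
```

The error introduced is at most half a step per sample. Lossy codecs choose the step to trade that error against the number of bits needed to store the level index.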

The Lempel–Ziv–Markov chain algorithm (LZMA) is an algorithm used to perform lossless data compression. It has been under development since either 1996 or 1998 by Igor Pavlov and was first used in the 7z format of the 7-Zip archiver. This algorithm uses a dictionary compression scheme somewhat similar to the LZ77 algorithm published by Abraham Lempel and Jacob Ziv in 1977 and features a high compression ratio and a variable compression-dictionary size, while still maintaining decompression speed similar to other commonly used compression algorithms.
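Python's standard `lzma` module exposes this family of algorithms. The sketch below is a simple round trip; note that with default arguments the module writes the `.xz` container rather than the 7z format mentioned above. The helper names and the preset value are assumptions of this example.

```python
import lzma


def compress_lzma(data: bytes, preset: int = 6) -> bytes:
    """Compress into an .xz container; higher presets trade speed for ratio."""
    return lzma.compress(data, preset=preset)


def decompress_lzma(blob: bytes) -> bytes:
    """Recover the original bytes from an .xz container."""
    return lzma.decompress(blob)


payload = b"the quick brown fox jumps over the lazy dog\n" * 500
blob = compress_lzma(payload)
recovered = decompress_lzma(blob)  # equal to `payload`
```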

A compressor is a mechanical device that increases the pressure of a gas by reducing its volume. An air compressor is a specific type of gas compressor.

In computer science, a suffix tree is a compressed trie containing all the suffixes of the given text as their keys and positions in the text as their values. Suffix trees allow particularly fast implementations of many important string operations.
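To show the key and value structure, the sketch below builds a naive, uncompressed suffix trie rather than a true suffix tree. It takes quadratic time and space and does not merge single-child chains, so it illustrates the lookup idea only. The node layout and function names are assumptions made for this example.

```python
def build_suffix_trie(text: str) -> dict:
    """Insert every suffix of text; each node records where its substring starts."""
    root = {"children": {}, "starts": []}
    for start in range(len(text)):
        node = root
        for ch in text[start:]:
            node = node["children"].setdefault(ch, {"children": {}, "starts": []})
            node["starts"].append(start)
    return root


def find_occurrences(trie: dict, pattern: str) -> list[int]:
    """Start positions of a non-empty pattern in the indexed text, in increasing order."""
    node = trie
    for ch in pattern:
        node = node["children"].get(ch)
        if node is None:
            return []
    return node["starts"]  # an empty pattern stops at the root and yields []


trie = build_suffix_trie("banana")
hits = find_occurrences(trie, "ana")
```

A lookup walks one node per pattern character, so its cost depends on the pattern length rather than the text length, which is what makes suffix-based indexes fast for substring queries.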

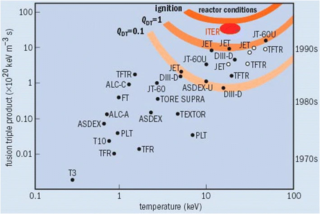

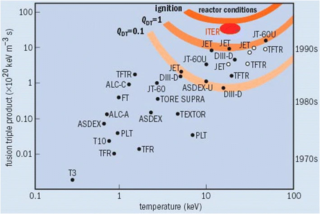

The Lawson criterion is a figure of merit used in nuclear fusion research. It compares the rate of energy being generated by fusion reactions within the fusion fuel to the rate of energy losses to the environment. When the rate of production is higher than the rate of loss, the system will produce net energy. If enough of that energy is captured by the fuel, the system will become self-sustaining and is said to be ignited.

Generation loss is the loss of quality between subsequent copies or transcodes of data. Anything that reduces the quality of the representation when copying, and would cause further reduction in quality on making a copy of the copy, can be considered a form of generation loss. File size increases are a common result of generation loss, as the introduction of artifacts may actually increase the entropy of the data through each generation.

In statistics, the k-nearest neighbors algorithm (k-NN) is a non-parametric supervised learning method first developed by Evelyn Fix and Joseph Hodges in 1951, and later expanded by Thomas Cover. It is used for classification and regression. In both cases, the input consists of the k closest training examples in a data set. The output depends on whether k-NN is used for classification or regression: in classification, it is a class membership decided by a plurality vote of the k nearest neighbors; in regression, it is the average of the values of the k nearest neighbors.
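Both uses can be sketched with a brute-force search: a plurality vote among the k nearest labels for classification, and the mean of the k nearest values for regression. Euclidean distance and the function names are assumptions of this example; real implementations often use spatial indexes and other distance metrics.

```python
import math
from collections import Counter


def _nearest(train, query, k):
    """The k training pairs whose feature vectors are closest to the query."""
    if k < 1 or not train:
        raise ValueError("need k >= 1 and a non-empty training set")
    return sorted(train, key=lambda item: math.dist(item[0], query))[:k]


def knn_classify(train, query, k):
    """Label chosen by plurality vote among the k nearest neighbors."""
    votes = Counter(label for _, label in _nearest(train, query, k))
    return votes.most_common(1)[0][0]


def knn_regress(train, query, k):
    """Mean of the target values of the k nearest neighbors."""
    neighbors = _nearest(train, query, k)
    return sum(value for _, value in neighbors) / len(neighbors)


points = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
          ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]
label = knn_classify(points, (0.2, 0.1), k=3)
```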

In information theory, redundancy measures the fractional difference between the entropy H(X) of an ensemble X and its maximum possible value log(|A_X|), where A_X is the alphabet of X. Informally, it is the amount of wasted "space" used to transmit certain data. Data compression is a way to reduce or eliminate unwanted redundancy, while forward error correction is a way of adding desired redundancy for purposes of error detection and correction when communicating over a noisy channel of limited capacity.
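As a small worked example, the sketch below computes the relative redundancy 1 - H(X) / log2(N) for a memoryless source, taking the maximum entropy to be log2 of the alphabet size N. The function names are illustrative, and the alphabet size is assumed to be the length of the probability list.

```python
import math


def entropy_bits(probabilities: list[float]) -> float:
    """Shannon entropy in bits; zero-probability symbols contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def relative_redundancy(probabilities: list[float]) -> float:
    """Fraction of the maximum entropy log2(N) that the source leaves unused."""
    if len(probabilities) < 2:
        raise ValueError("need an alphabet of at least two symbols")
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    max_entropy = math.log2(len(probabilities))
    return 1.0 - entropy_bits(probabilities) / max_entropy


# A uniform source has no redundancy; a skewed one leaves room for compression.
uniform = relative_redundancy([0.25, 0.25, 0.25, 0.25])
skewed = relative_redundancy([0.75, 0.25])
```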

In mathematics, a wavelet series is a representation of a square-integrable function by a certain orthonormal series generated by a wavelet. This article provides a formal, mathematical definition of an orthonormal wavelet and of the integral wavelet transform.

In cryptography, a one-way compression function is a function that transforms two fixed-length inputs into a fixed-length output. The transformation is "one-way", meaning that it is difficult given a particular output to compute inputs which compress to that output. One-way compression functions are not related to conventional data compression algorithms, which instead can be inverted exactly or approximately to the original data.

In signal processing, multidimensional empirical mode decomposition (multidimensional EMD) extends the one-dimensional (1-D) EMD algorithm to signals with multiple dimensions. In the Hilbert–Huang transform (HHT), the EMD process decomposes a signal into intrinsic mode functions, which are then analyzed with Hilbert spectral analysis. Multidimensional EMD can be applied to image processing, audio signal processing, and various other multidimensional signals.

A crack growth equation is used for calculating the size of a fatigue crack growing from cyclic loads. The growth of a fatigue crack can result in catastrophic failure, particularly in the case of aircraft. The interaction of many growing fatigue cracks with one another is known as widespread fatigue damage. A crack growth equation can be used to ensure safety, both in the design phase and during operation, by predicting the size of cracks. In critical structures, loads can be recorded and used to predict the size of cracks, so that maintenance or retirement occurs before any of the cracks leads to failure. Safety factors are used to reduce the predicted fatigue life to a service fatigue life because of the sensitivity of the fatigue life to the size and shape of crack-initiating defects and the variability between the assumed loading and the actual loading experienced by a component.