Related Research Articles

A head-related transfer function (HRTF), also known as anatomical transfer function (ATF), is a response that characterizes how an ear receives a sound from a point in space. As sound strikes the listener, the size and shape of the head, ears, and ear canal, the density of the head, and the size and shape of the nasal and oral cavities all transform the sound and affect how it is perceived, boosting some frequencies and attenuating others. Generally speaking, the HRTF boosts frequencies from 2–5 kHz with a primary resonance of +17 dB at 2,700 Hz. However, the response curve is more complex than a single bump: it affects a broad frequency spectrum and varies significantly from person to person.
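
In the time domain, an HRTF corresponds to a head-related impulse response (HRIR), and rendering a source at one direction amounts to filtering a mono signal through the left-ear and right-ear HRIRs for that direction. The sketch below shows only that filtering step with plain linear convolution. The function names and the two short "HRIRs" are hypothetical toy values chosen for illustration, not measured data; real HRIRs are hundreds of taps long and differ from person to person.

```python
def convolve(signal, kernel):
    """Full linear convolution: output length is len(signal) + len(kernel) - 1."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(kernel):
            out[i + j] += x * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal through a left/right HRIR pair for a single direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical toy HRIRs for a source on the listener's right (NOT measured data):
# the near (right) ear receives the sound earlier and louder than the far (left) ear.
hrir_right = [0.9, 0.2]
hrir_left = [0.0, 0.0, 0.0, 0.5, 0.1]

click = [1.0, 0.0, 0.0]
left, right = render_binaural(click, hrir_left, hrir_right)
```

Because a click is passed in, each output simply reproduces the corresponding HRIR, which makes the interaural delay and level difference built into the toy filters easy to see.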

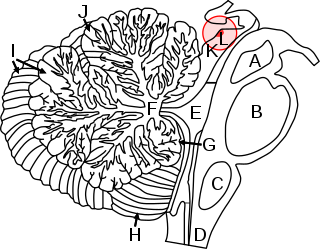

Neuroethology is the evolutionary and comparative approach to the study of animal behavior and its underlying mechanistic control by the nervous system. It is an interdisciplinary science that combines both neuroscience and ethology. A central theme of neuroethology, which differentiates it from other branches of neuroscience, is its focus on behaviors that have been favored by natural selection rather than on behaviors that are specific to a particular disease state or laboratory experiment.

Sound localization is a listener's ability to identify the location or origin of a detected sound in direction and distance.

The superior colliculus is a structure lying on the roof of the mammalian midbrain. In non-mammalian vertebrates, the homologous structure is known as the optic tectum, or optic lobe. The adjective form tectal is commonly used for both structures.

Multisensory integration, also known as multimodal integration, is the study of how information from the different sensory modalities may be integrated by the nervous system. A coherent representation of objects combining modalities enables animals to have meaningful perceptual experiences. Indeed, multisensory integration is central to adaptive behavior because it allows animals to perceive a world of coherent perceptual entities. Multisensory integration also deals with how different sensory modalities interact with one another and alter each other's processing.
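
As an illustration, one widely used model of how two modalities might be combined (an assumption here, since the paragraph does not commit to any model) treats each sense as giving an independent, Gaussian-noisy estimate of the same quantity, such as a target's azimuth, and averages the estimates weighted by their reliability (inverse variance). The function name and the example numbers are hypothetical.

```python
def combine_estimates(estimates):
    """Inverse-variance weighted fusion of independent Gaussian cues (sketch).

    estimates: list of (mean, variance) pairs, one per modality.
    Returns (fused_mean, fused_variance). The fused variance is never larger
    than the smallest input variance, so combining cues can only help here.
    """
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    mean = sum(w * m for w, (m, _) in zip(weights, estimates)) / total
    return mean, 1.0 / total


# Hypothetical cues: vision says 10 degrees (variance 1), hearing says 20 degrees (variance 4).
fused_mean, fused_var = combine_estimates([(10.0, 1.0), (20.0, 4.0)])
```

With these assumed numbers the fused estimate is 12 degrees with variance 0.8: it is pulled toward the more reliable visual cue and is more precise than either cue alone.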

Virtual acoustic space (VAS), also known as virtual auditory space, is a technique in which sounds presented over headphones appear to originate from any desired direction in space, creating the illusion of a virtual sound source outside the listener's head.

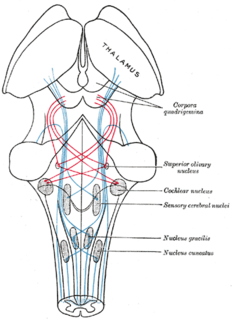

The superior olivary complex (SOC) or superior olive is a collection of brainstem nuclei that functions in multiple aspects of hearing and is an important component of the ascending and descending auditory pathways of the auditory system. The SOC is intimately related to the trapezoid body: most of the cell groups of the SOC are dorsal to this axon bundle, while a number of cell groups are embedded in the trapezoid body. Overall, the SOC displays significant interspecies variation, being largest in bats and rodents and smaller in primates.

The interaural time difference (ITD), in humans or animals, is the difference in the arrival time of a sound between the two ears. It is important in the localization of sounds because it provides a cue to the direction or angle of the sound source relative to the head. If a signal arrives at the head from one side, it has farther to travel to reach the far ear than the near ear. This path-length difference produces a time difference between the sound's arrivals at the two ears, which is detected and helps identify the direction of the sound source.
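
As a worked illustration, the path-length reasoning above can be turned into a number with Woodworth's spherical-head approximation, ITD = (a/c)(θ + sin θ), which is not given in the paragraph itself. It models the head as a rigid sphere of radius a and a distant source at azimuth θ; the extra path to the far ear is a straight segment (a·sin θ) plus an arc around the head (a·θ). The radius of 8.75 cm and speed of sound of 343 m/s are assumed typical values, and the function name `woodworth_itd` is hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly room temperature (assumed)
HEAD_RADIUS = 0.0875    # m, an assumed average adult head radius


def woodworth_itd(azimuth_deg, radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Approximate ITD in seconds for a distant source (spherical-head model).

    azimuth_deg: 0 = straight ahead, 90 = directly opposite one ear.
    Only valid for 0 <= azimuth_deg <= 90; which ear leads is left to the caller.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be in [0, 90] degrees for this formula")
    theta = math.radians(azimuth_deg)
    # Extra path to the far ear: straight segment (a*sin θ) plus arc (a*θ).
    return (radius / c) * (theta + math.sin(theta))


for az in (0, 30, 60, 90):
    print(f"{az:2d} deg -> {woodworth_itd(az) * 1e6:6.1f} us")
```

With the assumed parameters, the ITD grows from zero straight ahead to about 656 µs for a source directly to one side, which is why it serves as a usable azimuth cue.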

A sensory cue is a statistic or signal that can be extracted from the sensory input by a perceiver and that indicates the state of some property of the world the perceiver is interested in perceiving.
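
One concrete example of a cue computed as a statistic (our illustration, not taken from the paragraph) is the interaural level difference (ILD): the ratio of sound intensity at the two ears, expressed in decibels. The sketch below assumes the two ear signals are already available as equal-length sample blocks; the function names are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a block of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def interaural_level_difference_db(left, right):
    """ILD in dB; positive when the right-ear signal is more intense."""
    left_rms, right_rms = rms(left), rms(right)
    if left_rms == 0.0 or right_rms == 0.0:
        raise ValueError("both ear signals must be non-silent")
    return 20.0 * math.log10(right_rms / left_rms)


# Hypothetical blocks: the right-ear signal has twice the amplitude of the left.
ild = interaural_level_difference_db([0.5, -0.5], [1.0, -1.0])
```

Doubling the amplitude at one ear corresponds to roughly 6 dB, so a positive ILD here indicates a source toward the right.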

Binaural fusion or binaural integration is a cognitive process that involves the combination of different auditory information presented binaurally, or to each ear. In humans, this process is essential in understanding speech as one ear may pick up more information about the speech stimuli than the other.

Coincidence detection in the context of neurobiology is a process by which a neuron or a neural circuit can encode information by detecting the occurrence of temporally close but spatially distributed input signals. Coincidence detectors influence neuronal information processing by reducing temporal jitter, reducing spontaneous activity, and forming associations between separate neural events. This concept has led to a greater understanding of neural processes and the formation of computational maps in the brain.
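
A classic instance is the Jeffress model of ITD detection, in which an array of coincidence detectors each receives the two ears' inputs through a different internal delay, and the detector whose delay compensates the external ITD fires most. The toy sketch below works on spike times in integer microseconds; the delay grid, the coincidence window, and the function names are all hypothetical choices for illustration, not a model of real neurons.

```python
def coincidence_count(left_spikes, right_spikes, window):
    """Count left/right spike pairs whose arrival times differ by at most `window`."""
    return sum(1 for tl in left_spikes for tr in right_spikes if abs(tl - tr) <= window)


def jeffress_estimate(left_spikes, right_spikes, delays, window):
    """Return the internal delay whose detector registers the most coincidences.

    Each candidate delay d stands for one detector: the left input is delayed
    by d before being compared with the right input, so the winning d
    approximates how much later the right ear received the sound.
    """
    best_delay, best_count = None, -1
    for d in delays:
        delayed_left = [t + d for t in left_spikes]
        count = coincidence_count(delayed_left, right_spikes, window)
        if count > best_count:
            best_delay, best_count = d, count
    return best_delay


# Hypothetical input: the right ear receives every spike 300 us after the left ear.
left = [0, 1000, 2000, 3000]
right = [t + 300 for t in left]
estimate = jeffress_estimate(left, right, range(-600, 601, 100), window=50)
```

Only the detector with a 300 µs internal delay sees all four spike pairs arrive together, so the identity of the most active detector encodes the ITD as a place in the array.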

Ambiophonics is a method in the public domain that employs digital signal processing (DSP) and two loudspeakers directly in front of the listener to improve reproduction of stereophonic and 5.1 surround sound for music, movies, and games in home theaters, gaming PCs, workstations, or studio monitoring applications. First implemented using mechanical means in 1986, Ambiophonic DSP is now offered by a number of hardware and VST plug-in makers. Ambiophonics eliminates the crosstalk inherent in the conventional stereo-triangle speaker placement, thereby generating a speaker-binaural soundfield that emulates headphone-binaural sound and gives the listener a more realistic perception of recorded auditory scenes. A second speaker pair can be added at the back to enable 360° surround sound reproduction. Additional surround speakers may be used for hall ambience, including height, if desired.
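
The idea of crosstalk elimination can be sketched with a generic recursive canceller. This is a simplified illustration under stated assumptions, not the actual Ambiophonic DSP: the crosstalk path from each speaker to the opposite ear is modeled as a pure delay of `delay` samples and a gain of `gain`, and all names and parameter values are hypothetical. Each speaker feed subtracts a delayed, attenuated copy of the other speaker's feed, so the cancellation signal is itself cancelled at the opposite ear, and so on recursively.

```python
def recursive_crosstalk_cancel(left_in, right_in, delay, gain):
    """Generic recursive crosstalk canceller (simplified sketch).

    Assumes the crosstalk from each speaker to the opposite ear is a pure
    delay of `delay` samples (>= 1) scaled by `gain` (0 <= gain < 1).
    Under that model, the right ear receives right_out[n] + gain * left_out[n - delay],
    which equals right_in[n]; the same holds for the left ear.
    """
    if delay < 1:
        raise ValueError("delay must be at least one sample")
    if not 0.0 <= gain < 1.0:
        raise ValueError("gain must be in [0, 1)")
    n = len(left_in)
    left_out = [0.0] * n
    right_out = [0.0] * n
    for i in range(n):
        # Feedback terms come from already-computed output samples.
        fb_from_right = right_out[i - delay] if i >= delay else 0.0
        fb_from_left = left_out[i - delay] if i >= delay else 0.0
        left_out[i] = left_in[i] - gain * fb_from_right
        right_out[i] = right_in[i] - gain * fb_from_left
    return left_out, right_out


# Hypothetical impulse on the left channel only.
left_feed, right_feed = recursive_crosstalk_cancel([1, 0, 0, 0, 0], [0, 0, 0, 0, 0], delay=2, gain=0.5)
```

The right speaker emits an inverted, delayed copy of the left impulse, and the left speaker in turn emits a smaller correction for that copy. Practical systems also shape the cancellation with frequency-dependent filtering, which this sketch omits.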

Sensory maps are areas of the brain which respond to sensory stimulation, and are spatially organized according to some feature of the sensory stimulation. In some cases the sensory map is simply a topographic representation of a sensory surface such as the skin, cochlea, or retina. In other cases it represents other stimulus properties resulting from neuronal computation and is generally ordered in a manner that reflects the periphery. An example is the somatosensory map which is a projection of the skin's surface in the brain that arranges the processing of tactile sensation. This type of somatotopic map is the most common, possibly because it allows for physically neighboring areas of the brain to react to physically similar stimuli in the periphery or because it allows for greater motor control.

Spatial hearing loss is a form of deafness characterized by an inability to use spatial cues about where a sound originates. This in turn affects the ability to understand speech in the presence of background noise.

Sensory maps and brain development is a concept in neuroethology that links the development of the brain over an animal’s lifetime with the fact that an animal’s sensory processing is spatially organized and patterned. Sensory maps are representations of the sense organs as organized maps in the brain, and they are the fundamental organization of sensory processing. Sensory maps are not always close to an exact topographic projection of the senses. The fact that the brain is organized into sensory maps has wide implications for processing; for example, lateral inhibition and the coding of space are byproducts of mapping. The developmental process of an organism guides sensory map formation, although the details are not yet known. The development of sensory maps requires learning, long-term potentiation, experience-dependent plasticity, and innate characteristics. There is significant evidence for experience-dependent development and maintenance of sensory maps, and growing evidence on the molecular, synaptic, and computational bases of experience-dependent development.

3D sound localization refers to an acoustic technology that is used to locate the source of a sound in a three-dimensional space. The source location is usually determined by the direction of the incoming sound waves and the distance between the source and the sensors. It involves both the design of the sensor arrangement and signal processing techniques.
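
A minimal sketch of one common signal-processing step, assuming just two microphones and a distant (far-field) source: estimate the time difference of arrival (TDOA) by picking the lag that maximizes the cross-correlation of the two signals, then convert it to an angle with sin θ = c·τ / d, where d is the microphone spacing and θ is measured from the direction perpendicular to the line joining the microphones. A full 3D system would combine several such pairs; the function names, spacing, and sample rates here are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def best_lag(x, y, max_lag):
    """Lag in samples by which y trails x, chosen to maximize cross-correlation."""
    def score(lag):
        return sum(x[i] * y[i + lag] for i in range(len(x)) if 0 <= i + lag < len(y))
    return max(range(-max_lag, max_lag + 1), key=score)


def direction_of_arrival_deg(lag_samples, sample_rate, mic_spacing, c=SPEED_OF_SOUND):
    """Far-field angle for a two-microphone pair from sin(theta) = c * tau / d.

    Positive angles point toward the microphone that received the sound first (x).
    """
    tau = lag_samples / sample_rate
    s = c * tau / mic_spacing
    # Clamp in case noise yields a delay larger than the physical maximum d / c.
    s = max(-1.0, min(1.0, s))
    return math.degrees(math.asin(s))


# Hypothetical signals: the same click reaches microphone y three samples after x.
x = [0, 0, 1, 0, 0, 0, 0, 0]
y = [0, 0, 0, 0, 0, 1, 0, 0]
lag = best_lag(x, y, max_lag=4)
angle = direction_of_arrival_deg(lag, sample_rate=48000, mic_spacing=0.2)
```

A single pair leaves a cone of ambiguity around its axis, which is one reason the sensor arrangement matters as much as the processing.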

Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

Most owls are nocturnal or crepuscular birds of prey. Because they hunt at night, they must rely on non-visual senses. Experiments by Roger Payne have shown that owls are sensitive to the sounds made by their prey, not the heat or the smell. In fact, sound cues are both necessary and sufficient for owls to localize mice from a distant perch. For this to work, owls must be able to accurately localize both the azimuth and the elevation of the sound source.

Binaural unmasking is a phenomenon of auditory perception discovered by Ira Hirsh. In binaural unmasking, the brain combines information from the two ears to improve signal detection and identification in noise. The phenomenon is most commonly observed when there is a difference between the interaural phase of the signal and the interaural phase of the noise. When such a difference is present, there is an improvement in masking threshold compared with a reference situation in which the interaural phases are the same, or in which the stimulus has been presented monaurally. Those two cases usually give very similar thresholds. The size of the improvement is known as the "binaural masking level difference" (BMLD), or simply as the "masking level difference".

Ilana B. Witten is an American neuroscientist and professor of psychology and neuroscience at Princeton University. Witten studies the mesolimbic pathway, with a focus on the striatal neural circuit mechanisms driving reward learning and decision making.
