NetApp, Inc. is an intelligent data infrastructure company that provides unified data storage, integrated data services, and cloud operations (CloudOps) solutions to enterprise customers. The company is based in San Jose, California, and ranked in the Fortune 500 from 2012 to 2021. Founded in 1992, with an initial public offering in 1995, NetApp offers cloud data services for managing applications and data both online and physically.

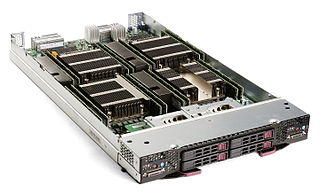

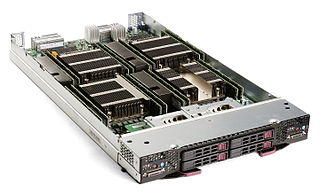

A blade server is a stripped-down server computer with a modular design optimized to minimize the use of physical space and energy. Blade servers have many components removed to save space, reduce power consumption, and address other considerations, while still having all the functional components needed to be considered a computer. Unlike a rack-mount server, a blade server fits inside a blade enclosure, which can hold multiple blade servers and provides services such as power, cooling, networking, various interconnects, and management. Together, blades and the blade enclosure form a blade system, which may itself be rack-mounted. Different blade providers have differing principles regarding what to include in the blade itself and in the blade system as a whole.

HPE Storage (formerly HP StorageWorks) is a portfolio of HPE storage products that includes online storage, nearline storage, storage networking, archiving, de-duplication, and storage software. HP and its predecessor, the Compaq Corporation, developed some industry-first storage technologies to simplify network storage. HP is a proponent of converged storage, a storage architecture that combines storage and compute into a single entity.

The Parallel Virtual File System (PVFS) is an open-source parallel file system. A parallel file system is a type of distributed file system that distributes file data across multiple servers and provides for concurrent access by multiple tasks of a parallel application. PVFS was designed for use in large-scale cluster computing and focuses on high-performance access to large data sets. It consists of a server process and a client library, both of which consist entirely of user-level code. A Linux kernel module and pvfs-client process allow the file system to be mounted and used with standard utilities. The client library provides for high-performance access via the Message Passing Interface (MPI). PVFS is being jointly developed by the Parallel Architecture Research Laboratory at Clemson University, the Mathematics and Computer Science Division at Argonne National Laboratory, and the Ohio Supercomputer Center. PVFS development has been funded by NASA Goddard Space Flight Center, the DOE Office of Science Advanced Scientific Computing Research program, NSF PACI and HECURA programs, and other government and private agencies. PVFS is now known as OrangeFS in its newest development branch.
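The idea of distributing file data across multiple servers can be illustrated with a minimal sketch of round-robin striping. This is a generic scheme chosen for illustration, not a description of PVFS internals; the function names `locate` and `split_request` and the parameters `stripe_size` and `num_servers` are hypothetical.

```python
from typing import List, Tuple


def locate(offset: int, stripe_size: int, num_servers: int) -> Tuple[int, int]:
    """Map a logical file offset to (server index, offset in that server's local data).

    Assumes simple round-robin striping: stripe k lives on server k % num_servers.
    """
    stripe_index = offset // stripe_size
    server = stripe_index % num_servers
    # Number of full stripes this server already holds before the current one.
    local_stripe = stripe_index // num_servers
    return server, local_stripe * stripe_size + offset % stripe_size


def split_request(
    offset: int, length: int, stripe_size: int, num_servers: int
) -> List[Tuple[int, int, int]]:
    """Break a logical byte range into per-server pieces (server, local_offset, length)."""
    pieces = []
    end = offset + length
    while offset < end:
        server, local = locate(offset, stripe_size, num_servers)
        # Stop at the stripe boundary or at the end of the request, whichever is first.
        n = min(stripe_size - offset % stripe_size, end - offset)
        pieces.append((server, local, n))
        offset += n
    return pieces
```

Because consecutive stripes land on different servers, a large read or write breaks into pieces that separate servers can service at the same time, which is where the concurrent-access benefit comes from.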

3PAR Inc. was a manufacturer of systems and software for data storage and information management headquartered in Fremont, California, USA. 3PAR produced computer data storage products, including hardware disk arrays and storage management software. It became a wholly owned subsidiary of Hewlett-Packard after an acquisition in 2010 and later became part of Hewlett Packard Enterprise.

Dynamic Infrastructure is an information technology concept related to the design of data centers, whereby the underlying hardware and software can respond dynamically and more efficiently to changing levels of demand. In other words, data center assets such as storage and processing power can be provisioned to meet surges in users' needs. The concept has also been referred to as Infrastructure 2.0 and Next Generation Data Center.

The National Center for Computational Sciences (NCCS) is a United States Department of Energy (DOE) Leadership Computing Facility that houses the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility charged with helping researchers solve challenging scientific problems of global interest with a combination of leading high-performance computing (HPC) resources and international expertise in scientific computing.

Eucalyptus is paid and open-source computer software for building Amazon Web Services (AWS)-compatible private and hybrid cloud computing environments, originally developed by the company Eucalyptus Systems. Eucalyptus is an acronym for Elastic Utility Computing Architecture for Linking Your Programs To Useful Systems. Eucalyptus enables pooling of compute, storage, and network resources that can be dynamically scaled up or down as application workloads change. Mårten Mickos was the CEO of Eucalyptus. In September 2014, Eucalyptus was acquired by Hewlett-Packard, and it was later maintained by DXC Technology. After DXC stopped developing the product in late 2017, AppScale Systems forked the code and started supporting Eucalyptus customers.

In computer science, memory virtualization decouples volatile random access memory (RAM) resources from individual systems in the data centre, and then aggregates those resources into a virtualized memory pool available to any computer in the cluster. The memory pool is accessed by the operating system or applications running on top of the operating system. The distributed memory pool can then be utilized as a high-speed cache, a messaging layer, or a large, shared memory resource for a CPU or a GPU application.

Cisco Unified Computing System (UCS) is a data center server computer product line composed of server hardware, virtualization support, switching fabric, and management software, introduced in 2009 by Cisco Systems. The products are marketed for scalability by integrating many components of a data center that can be managed as a single unit.

Hewlett Packard Enterprise Networking is the networking products division of Hewlett Packard Enterprise. HPE Networking and its predecessor entities have developed and sold networking products since 1979. Currently, it offers networking and switching products for small and medium-sized businesses through its wholly owned subsidiary Aruba Networks. Prior to 2015, the entity within HP that offered networking products was called HP Networking.

Converged storage is a storage architecture that combines storage and computing resources into a single entity. This can result in the development of platforms for server-centric, storage-centric, or hybrid workloads where applications and data come together to improve application performance and delivery. The combination of storage and compute differs from the traditional IT model, in which computation and storage take place in separate or siloed computer equipment. The traditional model requires discrete provisioning changes, such as upgrades and planned migrations, in the face of server load changes, which are increasingly dynamic with virtualization. Converged storage, by contrast, increases the supply of resources in parallel with new VM demands.

Nimble Storage, founded in 2008, is a subsidiary of Hewlett Packard Enterprise. It specializes in producing hardware and software products for data storage, particularly data storage arrays that use the iSCSI and Fibre Channel protocols and include data backup and data protection features.

Virtual Computing Environment Company (VCE) was a division of EMC Corporation that manufactured converged infrastructure appliances for enterprise environments. Founded in 2009 under the name Acadia, it was originally a joint venture between EMC and Cisco Systems, with additional investments by Intel and EMC subsidiary VMware. EMC acquired a 90% controlling stake in VCE from Cisco in October 2014, giving it majority ownership. VCE ended in 2016 after an internal division realignment, followed by the sale of EMC to Dell.

Cumulus Networks was a computer software company headquartered in Mountain View, California, US. The company designed and sold a Linux operating system for industry-standard network switches, along with management software, for large data center, cloud computing, and enterprise environments.

A distributed file system for cloud is a file system that allows many clients to have access to data and supports operations on that data. Each data file may be partitioned into several parts called chunks. Each chunk may be stored on a different remote machine, facilitating the parallel execution of applications. Typically, data is stored in files in a hierarchical tree, where the nodes represent directories. There are several ways to share files in a distributed architecture: each solution must be suitable for a certain type of application, depending on how complex the application is. Meanwhile, the security of the system must be ensured. Confidentiality, availability, and integrity are the main requirements for a secure system.
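The chunking idea can be sketched as follows. This is a minimal in-memory illustration under stated assumptions, not the design of any particular cloud file system: the helper names (`split_into_chunks`, `place_chunks`, `reassemble`), the round-robin placement, and the use of SHA-256 checksums as a basic integrity check are all choices made for this example.

```python
import hashlib
from typing import Dict, List, Tuple

# A stored chunk: (position in the file, chunk bytes, SHA-256 hex digest).
StoredChunk = Tuple[int, bytes, str]


def split_into_chunks(data: bytes, chunk_size: int) -> List[bytes]:
    """Partition a file's bytes into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def place_chunks(chunks: List[bytes], machines: List[str]) -> Dict[str, List[StoredChunk]]:
    """Assign chunk i to machines[i % len(machines)] and record a checksum for it."""
    placement: Dict[str, List[StoredChunk]] = {m: [] for m in machines}
    for i, chunk in enumerate(chunks):
        digest = hashlib.sha256(chunk).hexdigest()
        placement[machines[i % len(machines)]].append((i, chunk, digest))
    return placement


def reassemble(placement: Dict[str, List[StoredChunk]]) -> bytes:
    """Verify every chunk against its checksum, then rebuild the file in order."""
    indexed = []
    for stored in placement.values():
        for index, chunk, digest in stored:
            if hashlib.sha256(chunk).hexdigest() != digest:
                raise ValueError(f"chunk {index} failed its integrity check")
            indexed.append((index, chunk))
    indexed.sort(key=lambda pair: pair[0])
    return b"".join(chunk for _, chunk in indexed)
```

For example, splitting `b"abcdefghij"` with `chunk_size=4` yields three chunks; placing them on two hypothetical machines `"m1"` and `"m2"` stores chunks 0 and 2 on `"m1"` and chunk 1 on `"m2"`, and `reassemble` returns the original bytes. A real system would also replicate chunks for availability and encrypt them for confidentiality, which this sketch omits.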

The Machine is the name of an experimental computer made by Hewlett Packard Enterprise. It was created as part of a research project to develop a new type of computer architecture for servers. The design focused on a “memory-centric computing” architecture, where NVRAM replaced traditional DRAM and disks in the memory hierarchy. The NVRAM was byte-addressable and could be accessed from any CPU via a photonic interconnect. The aim of the project was to build and evaluate this new design.

Fog computing or fog networking, also known as fogging, is an architecture that uses edge devices to carry out a substantial amount of computation, storage, and communication locally, with the results routed over the Internet backbone.

Composable disaggregated infrastructure (CDI), sometimes stylized as composable/disaggregated infrastructure, is a technology that allows enterprise data center operators to achieve the cost and availability benefits of cloud computing using on-premises networking equipment. It is considered a class of converged infrastructure, and uses management software to combine compute, storage and network elements. It is similar to public cloud, except the equipment sits on premises in an enterprise data center.

Inspur Server Series is a series of server computers introduced in 1993 by Inspur, an information technology company, and later expanded to international markets. The servers were likely among the first originally manufactured by a Chinese company. The series is currently developed by Inspur Information and its San Francisco-based subsidiary Inspur Systems, both of which are Inspur spinoff companies. The product line includes GPU servers, rack-mounted servers, Open Computing servers, and multi-node servers.