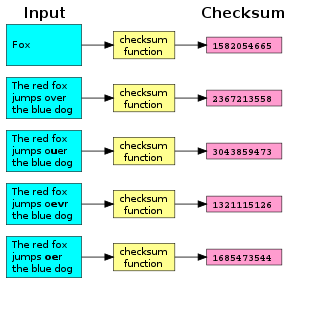

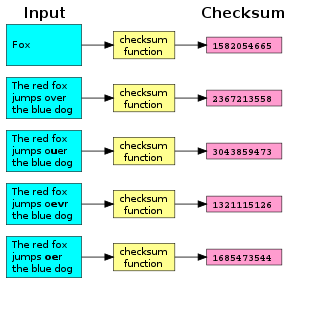

A checksum is a small-sized block of data derived from another block of digital data for the purpose of detecting errors that may have been introduced during its transmission or storage. By themselves, checksums are often used to verify data integrity but are not relied upon to verify data authenticity.

In cryptography, an HMAC is a specific type of message authentication code (MAC) involving a cryptographic hash function and a secret cryptographic key. As with any MAC, it may be used to simultaneously verify both the data integrity and authenticity of a message. An HMAC is a type of keyed hash function that can also be used in a key derivation scheme or a key stretching scheme.

The MD5 message-digest algorithm is a widely used hash function producing a 128-bit hash value. MD5 was designed by Ronald Rivest in 1991 to replace an earlier hash function MD4, and was specified in 1992 as RFC 1321.

In cryptography, SHA-1 is a hash function which takes an input and produces a 160-bit (20-byte) hash value known as a message digest – typically rendered as 40 hexadecimal digits. It was designed by the United States National Security Agency, and is a U.S. Federal Information Processing Standard. The algorithm has been cryptographically broken but is still widely used.

In computer science, a hash collision or hash clash is when two distinct pieces of data in a hash table share the same hash value. The hash value in this case is derived from a hash function which takes a data input and returns a fixed length of bits.

A cryptographic hash function (CHF) is a hash algorithm that has special properties desirable for a cryptographic application:

md5sum is a computer program that calculates and verifies 128-bit MD5 hashes, as described in RFC 1321. The MD5 hash functions as a compact digital fingerprint of a file. As with all such hashing algorithms, there is theoretically an unlimited number of files that will have any given MD5 hash. However, it is very unlikely that any two non-identical files in the real world will have the same MD5 hash, unless they have been specifically created to do so.

UUHash is a hash algorithm employed by clients on the FastTrack network. It is employed for its ability to hash very large files in a very short period of time, even on older computers. However, this is achieved by only hashing a fraction of the file. This weakness makes it trivial to create a hash collision, allowing large sections to be completely altered without altering the checksum.

In cryptography, a message authentication code (MAC), sometimes known as an authentication tag, is a short piece of information used for authenticating and integrity-checking a message. In other words, to confirm that the message came from the stated sender and has not been changed. The MAC value allows verifiers to detect any changes to the message content.

Simple file verification (SFV) is a file format for storing CRC32 checksums of files to verify the integrity of files. SFV is used to verify that a file has not been corrupted, but it does not otherwise verify the file's authenticity. The .sfv file extension is usually used for SFV files.

The Secure Hash Algorithms are a family of cryptographic hash functions published by the National Institute of Standards and Technology (NIST) as a U.S. Federal Information Processing Standard (FIPS), including:

In cryptography, a collision attack on a cryptographic hash tries to find two inputs producing the same hash value, i.e. a hash collision. This is in contrast to a preimage attack where a specific target hash value is specified.

Digest access authentication is one of the agreed-upon methods a web server can use to negotiate credentials, such as username or password, with a user's web browser. This can be used to confirm the identity of a user before sending sensitive information, such as online banking transaction history. It applies a hash function to the username and password before sending them over the network. In contrast, basic access authentication uses the easily reversible Base64 encoding instead of hashing, making it non-secure unless used in conjunction with TLS.

Magnet is a URI scheme that defines the format of magnet links, a de facto standard for identifying files (URN) by their content, via cryptographic hash value rather than by their location.

SHA-2 is a set of cryptographic hash functions designed by the United States National Security Agency (NSA) and first published in 2001. They are built using the Merkle–Damgård construction, from a one-way compression function itself built using the Davies–Meyer structure from a specialized block cipher.

In cryptography, the Merkle–Damgård construction or Merkle–Damgård hash function is a method of building collision-resistant cryptographic hash functions from collision-resistant one-way compression functions. This construction was used in the design of many popular hash algorithms such as MD5, SHA-1 and SHA-2.

sha1sum is a computer program that calculates and verifies SHA-1 hashes. It is commonly used to verify the integrity of files. It is installed by default on most Linux distributions. Typically distributed alongside sha1sum are sha224sum, sha256sum, sha384sum and sha512sum, which use a specific SHA-2 hash function and b2sum, which uses the BLAKE2 cryptographic hash function.

In computer science, a fingerprinting algorithm is a procedure that maps an arbitrarily large data item to a much shorter bit string, its fingerprint, that uniquely identifies the original data for all practical purposes just as human fingerprints uniquely identify people for practical purposes. This fingerprint may be used for data deduplication purposes. This is also referred to as file fingerprinting, data fingerprinting, or structured data fingerprinting.

BLAKE is a cryptographic hash function based on Daniel J. Bernstein's ChaCha stream cipher, but a permuted copy of the input block, XORed with round constants, is added before each ChaCha round. Like SHA-2, there are two variants differing in the word size. ChaCha operates on a 4×4 array of words. BLAKE repeatedly combines an 8-word hash value with 16 message words, truncating the ChaCha result to obtain the next hash value. BLAKE-256 and BLAKE-224 use 32-bit words and produce digest sizes of 256 bits and 224 bits, respectively, while BLAKE-512 and BLAKE-384 use 64-bit words and produce digest sizes of 512 bits and 384 bits, respectively.

crypt is a POSIX C library function. It is typically used to compute the hash of user account passwords. The function outputs a text string which also encodes the salt, and identifies the hash algorithm used. This output string forms a password record, which is usually stored in a text file.