Related Research Articles

In information theory and coding theory with applications in computer science and telecommunication, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases.
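As a rough illustration of error detection, the sketch below appends a CRC-32 checksum to a payload and checks it on receipt. The helper names `add_crc` and `crc_ok` are hypothetical, and the 4-byte big-endian trailer is an assumption made for this example rather than any particular protocol's frame format.

```python
import zlib

def add_crc(payload: bytes) -> bytes:
    """Return the payload followed by its CRC-32 as a 4-byte trailer (assumed layout)."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")

def crc_ok(frame: bytes) -> bool:
    """Recompute the CRC-32 over the payload and compare it with the trailer."""
    payload, trailer = frame[:-4], frame[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == trailer

frame = add_crc(b"hello, channel")
corrupted = bytes([frame[0] ^ 0x01]) + frame[1:]  # flip one bit in transit
print(crc_ok(frame), crc_ok(corrupted))
```

A checksum like this only detects the error; reconstructing the original data requires added redundancy of the kind used by error-correcting codes.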

The Real-time Transport Protocol (RTP) is a network protocol for delivering audio and video over IP networks. RTP is used in communication and entertainment systems that involve streaming media, such as telephony, video teleconference applications including WebRTC, television services and web-based push-to-talk features.

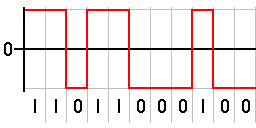

In telecommunication, a line code is a pattern of voltage, current, or photons used to represent digital data transmitted down a communication channel or written to a storage medium. This repertoire of signals is usually called a constrained code in data storage systems. Some signals are more prone to error than others as the physics of the communication channel or storage medium constrains the repertoire of signals that can be used reliably.

In computing, Deflate is a lossless data compression file format that uses a combination of LZ77 and Huffman coding. It was designed by Phil Katz, for version 2 of his PKZIP archiving tool. Deflate was later specified in RFC 1951 (1996).
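For readers who want to see a Deflate stream in practice, here is a minimal sketch using Python's standard `zlib` module. The function names `deflate_raw` and `inflate_raw` are hypothetical; passing `wbits=-15` asks zlib for a raw Deflate stream without the zlib or gzip wrapper.

```python
import zlib

def deflate_raw(data: bytes, level: int = 9) -> bytes:
    """Compress to a raw Deflate stream (no zlib header or checksum)."""
    compressor = zlib.compressobj(level, zlib.DEFLATED, -15)
    return compressor.compress(data) + compressor.flush()

def inflate_raw(stream: bytes) -> bytes:
    """Decompress a raw Deflate stream."""
    return zlib.decompress(stream, -15)

message = b"abcabcabcabc" * 50  # repetitive input, so LZ77 matching helps
packed = deflate_raw(message)
assert inflate_raw(packed) == message  # lossless round trip
print(len(message), "->", len(packed))
```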

In computer programming, Base64 is a group of binary-to-text encoding schemes that split binary data into 24-bit groups, each of which is represented by four 6-bit Base64 digits.
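The 24-bit grouping can be sketched as follows. The helper `encode_group` is hypothetical and handles exactly one 3-byte group using the standard Base64 alphabet; padding of shorter inputs is left out. The result is cross-checked against Python's `base64` module.

```python
import base64

ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"

def encode_group(three_bytes: bytes) -> str:
    """Encode one 24-bit group (3 bytes) as four 6-bit Base64 digits."""
    if len(three_bytes) != 3:
        raise ValueError("this sketch handles exactly 3 bytes; padding is omitted")
    n = int.from_bytes(three_bytes, "big")  # the 24-bit group as an integer
    return "".join(ALPHABET[(n >> shift) & 0x3F] for shift in (18, 12, 6, 0))

print(encode_group(b"Man"))  # prints TWFu
assert encode_group(b"Man") == base64.b64encode(b"Man").decode("ascii")
```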

DVB-T, short for Digital Video Broadcasting – Terrestrial, is the DVB European-based consortium standard for the broadcast transmission of digital terrestrial television. It was first published in 1997 and first broadcast in Singapore in February 1998. The system transmits compressed digital audio, digital video and other data in an MPEG transport stream, using coded orthogonal frequency-division multiplexing (COFDM) modulation. It is also widely used worldwide for electronic news gathering, carrying video and audio from a mobile newsgathering vehicle to a central receive point, and in the US by amateur television operators.

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission and data storage. Codes are studied by various scientific disciplines—such as information theory, electrical engineering, mathematics, linguistics, and computer science—for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data.

In information theory, a low-density parity-check (LDPC) code is a linear error correcting code, a method of transmitting a message over a noisy transmission channel. An LDPC code is constructed using a sparse Tanner graph. LDPC codes are capacity-approaching codes, which means that practical constructions exist that allow the noise threshold to be set very close to the theoretical maximum for a symmetric memoryless channel. The noise threshold defines an upper bound for the channel noise, up to which the probability of lost information can be made as small as desired. Using iterative belief propagation techniques, LDPC codes can be decoded in time linear in their block length.

In coding theory, an erasure code is a forward error correction (FEC) code under the assumption of bit erasures, which transforms a message of k symbols into a longer message with n symbols such that the original message can be recovered from a subset of the n symbols. The fraction r = k/n is called the code rate. The fraction k′/k, where k′ denotes the number of symbols required for recovery, is called reception efficiency.
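One of the simplest erasure codes adds a single XOR parity block, giving n = k + 1 and code rate k/(k + 1). The sketch below is illustrative only: the names `parity_encode` and `parity_recover` are hypothetical, all blocks are assumed to have equal length, and an erased block is modelled as `None`.

```python
from functools import reduce
from typing import List, Optional

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def parity_encode(blocks: List[bytes]) -> List[bytes]:
    """Turn k data blocks into n = k + 1 blocks by appending their XOR."""
    return blocks + [reduce(xor_bytes, blocks)]

def parity_recover(received: List[Optional[bytes]]) -> List[bytes]:
    """Recover the k data blocks when at most one of the n blocks was erased."""
    missing = [i for i, block in enumerate(received) if block is None]
    if len(missing) > 1:
        raise ValueError("a single parity block can repair only one erasure")
    repaired = list(received)
    if missing:
        present = [block for block in received if block is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)  # XOR of the survivors
    return repaired[:-1]  # drop the parity block

data = [b"ab", b"cd", b"ef"]  # k = 3
coded = parity_encode(data)   # n = 4, code rate 3/4
coded[1] = None               # one block is erased in transit
assert parity_recover(coded) == data
```

Here any k of the n blocks suffice for recovery, so k′ = k and the reception efficiency is 1.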

In coding theory, Tornado codes are a class of erasure codes that support error correction. Tornado codes require a constant C more redundant blocks than the more data-efficient Reed–Solomon erasure codes, but are much faster to generate and can fix erasures faster. Software-based implementations of Tornado codes are about 100 times faster on small lengths and about 10,000 times faster on larger lengths than Reed–Solomon erasure codes. Since the introduction of Tornado codes, many other similar erasure codes have emerged, most notably Online codes, LT codes and Raptor codes.

In computer science, Luby transform codes are the first class of practical fountain codes that are near-optimal erasure correcting codes. They were invented by Michael Luby in 1998 and published in 2002. Like some other fountain codes, LT codes depend on sparse bipartite graphs to trade reception overhead for encoding and decoding speed. The distinguishing characteristic of LT codes is in employing a particularly simple algorithm based on the exclusive or operation to encode and decode the message.
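The XOR-based encoding and decoding can be sketched as below. This is a toy illustration, not Luby's published construction: it assumes the ideal soliton degree distribution (practical LT codes use a robust variant), equal-length blocks, and hypothetical function names. The decoder is a simple peeling loop that repeatedly resolves symbols with exactly one unknown neighbour.

```python
import random
from typing import Dict, List, Set, Tuple

Symbol = Tuple[Set[int], bytes]  # (indices of the XORed source blocks, XOR value)

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def sample_degree(k: int, rng: random.Random) -> int:
    # Ideal soliton distribution: P(1) = 1/k, P(d) = 1/(d(d-1)) for d = 2..k (assumed).
    weights = [1.0 / k] + [1.0 / (d * (d - 1)) for d in range(2, k + 1)]
    return rng.choices(range(1, k + 1), weights=weights)[0]

def lt_encode_symbol(blocks: List[bytes], rng: random.Random) -> Symbol:
    """XOR together a randomly chosen set of source blocks."""
    k = len(blocks)
    neighbours = set(rng.sample(range(k), sample_degree(k, rng)))
    value = bytes(len(blocks[0]))  # all-zero block
    for i in neighbours:
        value = xor_bytes(value, blocks[i])
    return neighbours, value

def lt_decode(symbols: List[Symbol], k: int) -> Dict[int, bytes]:
    """Peeling decoder: returns the source blocks recovered so far, keyed by index."""
    pending = [(set(n), v) for n, v in symbols]
    known: Dict[int, bytes] = {}
    progress = True
    while progress and len(known) < k:
        progress = False
        for idx, (neigh, value) in enumerate(pending):
            for i in [j for j in neigh if j in known]:
                value = xor_bytes(value, known[i])  # remove already-known blocks
                neigh.discard(i)
            pending[idx] = (neigh, value)
            if len(neigh) == 1:  # exactly one unknown block left: it equals value
                (i,) = neigh
                known[i] = value
                neigh.clear()
                progress = True
    return known

rng = random.Random(0)
blocks = [b"foun", b"tain", b"code", b"demo"]
received: List[Symbol] = []
recovered: Dict[int, bytes] = {}
while len(recovered) < len(blocks):  # keep receiving symbols until decoding completes
    received.append(lt_encode_symbol(blocks, rng))
    recovered = lt_decode(received, len(blocks))
assert [recovered[i] for i in range(len(blocks))] == blocks
```

The number of symbols needed beyond k is the reception overhead that the sparse graph trades against encoding and decoding speed.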

In computer science, Raptor codes are the first known class of fountain codes with linear time encoding and decoding. They were invented by Amin Shokrollahi in 2000/2001 and were first published in 2004 as an extended abstract. Raptor codes are a significant theoretical and practical improvement over LT codes, which were the first practical class of fountain codes.

In computing, telecommunication, information theory, and coding theory, forward error correction (FEC) or channel coding is a technique used for controlling errors in data transmission over unreliable or noisy communication channels.
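A triple-repetition code is perhaps the simplest example of forward error correction: each bit is sent three times and the receiver takes a majority vote, so no retransmission is needed. The function names below are hypothetical, and the code is a sketch of the idea rather than a scheme used in practice.

```python
from typing import List

def repeat3_encode(bits: List[int]) -> List[int]:
    """Send every bit three times."""
    return [bit for bit in bits for _ in range(3)]

def repeat3_decode(coded: List[int]) -> List[int]:
    """Majority vote over each group of three received bits."""
    return [1 if sum(coded[i:i + 3]) >= 2 else 0 for i in range(0, len(coded), 3)]

sent = repeat3_encode([1, 0, 1])
sent[4] ^= 1  # the channel flips one bit of the second group
assert repeat3_decode(sent) == [1, 0, 1]
```

The code corrects one flipped bit per group at the cost of tripling the transmitted data.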

In coding theory, a systematic code is any error-correcting code in which the input data are embedded in the encoded output. Conversely, in a non-systematic code the output does not contain the input symbols.
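As an illustration, a Hamming(7,4) code can be written in systematic form, where the four data bits appear unchanged at the start of the codeword and three parity bits follow. The function name `hamming74_encode` and the particular parity equations are one conventional choice, assumed here for the sketch.

```python
from typing import List

def hamming74_encode(data: List[int]) -> List[int]:
    """Systematic Hamming(7,4): codeword = data bits followed by 3 parity bits."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [d1, d2, d3, d4, p1, p2, p3]

data = [1, 0, 1, 1]
codeword = hamming74_encode(data)
print(codeword)  # prints [1, 0, 1, 1, 0, 1, 0]
assert codeword[:4] == data  # the input is embedded in the output
```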

Michael George Luby is a mathematician and computer scientist. He is CEO of BitRipple, senior research scientist at the International Computer Science Institute (ICSI), former VP Technology at Qualcomm, and co-founder and former chief technology officer of Digital Fountain. In coding theory he is known for leading the invention of the Tornado codes and the LT codes. In cryptography he is known for his contributions showing that any one-way function can be used as the basis for private-key cryptography, and for his analysis, in collaboration with Charles Rackoff, of the Feistel cipher construction. His distributed algorithm to find a maximal independent set in a computer network has also been influential.

ATSC-M/H is a U.S. standard for mobile digital TV that allows TV broadcasts to be received by mobile devices.

In coding theory, concatenated codes form a class of error-correcting codes that are derived by combining an inner code and an outer code. They were conceived in 1966 by Dave Forney as a solution to the problem of finding a code that has both exponentially decreasing error probability with increasing block length and polynomial-time decoding complexity. Concatenated codes became widely used in space communications in the 1970s.

Consistent Overhead Byte Stuffing (COBS) is an algorithm for encoding data bytes that results in efficient, reliable, unambiguous packet framing regardless of packet content, thus making it easy for receiving applications to recover from malformed packets. It employs a particular byte value, typically zero, to serve as a packet delimiter. When zero is used as a delimiter, the algorithm replaces each zero data byte with a non-zero value so that no zero data bytes will appear in the packet and thus be misinterpreted as packet boundaries.
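The zero-replacement step can be sketched as follows, assuming zero is the delimiter. The function names are hypothetical and the code is a minimal illustration rather than a reference implementation: each output block starts with a code byte giving the distance to the next zero, and a code of 0xFF marks a run of 254 non-zero bytes with no zero after it.

```python
def cobs_encode(data: bytes) -> bytes:
    """Encode data so that the output contains no zero bytes."""
    out = bytearray()
    block = bytearray()  # non-zero bytes collected since the last code byte
    for byte in data:
        if byte == 0:
            out.append(len(block) + 1)  # code byte: offset to the removed zero
            out += block
            block.clear()
        else:
            block.append(byte)
            if len(block) == 254:  # longest run a single code byte can describe
                out.append(0xFF)
                out += block
                block.clear()
    out.append(len(block) + 1)  # final block
    out += block
    return bytes(out)

def cobs_decode(encoded: bytes) -> bytes:
    """Invert cobs_encode; the trailing packet delimiter must already be stripped."""
    out = bytearray()
    i = 0
    while i < len(encoded):
        code = encoded[i]
        if code == 0:
            raise ValueError("zero byte inside an encoded packet")
        block = encoded[i + 1:i + code]
        if len(block) != code - 1:
            raise ValueError("truncated packet")
        out += block
        i += code
        if code < 0xFF and i < len(encoded):
            out.append(0)  # restore the zero that the code byte replaced
    return bytes(out)

packet = b"\x11\x22\x00\x33"
encoded = cobs_encode(packet)
assert encoded == b"\x03\x11\x22\x02\x33"
assert 0 not in encoded and cobs_decode(encoded) == packet
```

A sender would append a single zero byte after each encoded packet, so a receiver that loses synchronisation can resume at the next zero.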

Amin Shokrollahi is a German-Iranian mathematician who has worked on a variety of topics including coding theory and algebraic complexity theory. He is best known for his work on iterative decoding of graph-based codes, for which he received the IEEE Information Theory Paper Award of 2002. He is one of the inventors of a modern class of practical erasure codes known as Tornado codes, and the principal developer of Raptor codes, which belong to a class of rateless erasure codes known as fountain codes. In connection with the work on these codes, he received two awards together with Michael Luby: the IEEE Eric E. Sumner Award in 2007 "for bridging mathematics, Internet design and mobile broadcasting as well as successful standardization" and the IEEE Richard W. Hamming Medal in 2012 "for the conception, development, and analysis of practical rateless codes". He also received the 2007 joint Communications Society and Information Theory Society best paper award as well as the 2017 Mustafa Prize for his work on Raptor codes.

NACK-Oriented Reliable Multicast (NORM) is a transport layer Internet protocol designed to provide reliable transport in multicast groups in data networks. It is formally defined by the Internet Engineering Task Force (IETF) in Request for Comments (RFC) 5740, which was published in November 2009.

References

- Amin Shokrollahi and Michael Luby (2011). "Raptor Codes". Foundations and Trends in Communications and Information Theory. Now Publishers. 6 (3–4): 213–322. doi:10.1561/0100000060. S2CID 1731099.

- Luby, Michael (2002). "LT codes". The 43rd Annual IEEE Symposium on Foundations of Computer Science, 2002. Proceedings. pp. 271–282. doi:10.1109/sfcs.2002.1181950. ISBN 0-7695-1822-2. S2CID 1861068.

- A. Shokrollahi (2006), "Raptor Codes", IEEE Transactions on Information Theory, 52 (6): 2551–2567, doi:10.1109/tit.2006.874390, S2CID 61814971.

- P. Maymounkov (November 2002). "Online Codes" (PDF). (Technical Report).

- David J. C. MacKay (2003). Information Theory, Inference, and Learning Algorithms. Cambridge University Press. Bibcode:2003itil.book.....M. ISBN 0-521-64298-1.

- M. Luby, A. Shokrollahi, M. Watson, T. Stockhammer (October 2007), Raptor Forward Error Correction Scheme for Object Delivery, RFC 5053.

- M. Luby, A. Shokrollahi, M. Watson, T. Stockhammer, L. Minder (May 2011), RaptorQ Forward Error Correction Scheme for Object Delivery, RFC 6330.