In computing, floating-point arithmetic (FP) is arithmetic that represents subsets of real numbers using an integer with a fixed precision, called the significand, scaled by an integer exponent of a fixed base. Numbers of this form are called floating-point numbers. For example, 12.345 is a floating-point number in base ten with five digits of precision:

IEEE 754-1985 was an industry standard for representing floating-point numbers in computers, officially adopted in 1985 and superseded in 2008 by IEEE 754-2008, and then again in 2019 by minor revision IEEE 754-2019. During its 23 years, it was the most widely used format for floating-point computation. It was implemented in software, in the form of floating-point libraries, and in hardware, in the instructions of many CPUs and FPUs. The first integrated circuit to implement the draft of what was to become IEEE 754-1985 was the Intel 8087.

In computing, NaN, standing for Not a Number, is a particular value of a numeric data type which is undefined or unrepresentable, such as the result of zero divided by zero. Systematic use of NaNs was introduced by the IEEE 754 floating-point standard in 1985, along with the representation of other non-finite quantities such as infinities.

Double-precision floating-point format is a floating-point number format, usually occupying 64 bits in computer memory; it represents a wide dynamic range of numeric values by using a floating radix point.

In computer science, subnormal numbers are the subset of denormalized numbers that fill the underflow gap around zero in floating-point arithmetic. Any non-zero number with magnitude smaller than the smallest normal number is subnormal.

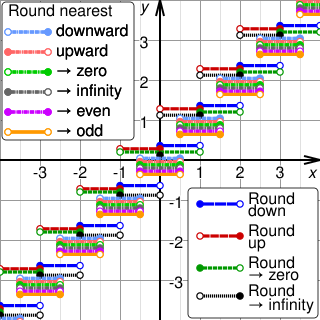

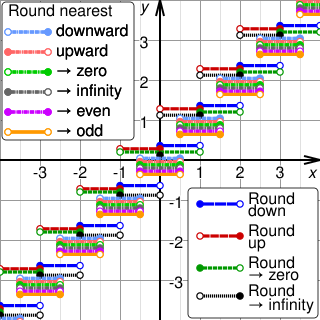

Rounding means replacing a number with an approximate value that has a shorter, simpler, or more explicit representation. For example, replacing $23.4476 with $23.45, the fraction 312/937 with 1/3, or the expression √2 with 1.414.

In computing, especially digital signal processing, the multiply–accumulate (MAC) or multiply-add (MAD) operation is a common step that computes the product of two numbers and adds that product to an accumulator. The hardware unit that performs the operation is known as a multiplier–accumulator ; the operation itself is also often called a MAC or a MAD operation. The MAC operation modifies an accumulator a:

The IEEE Standard for Floating-Point Arithmetic is a technical standard for floating-point arithmetic established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). The standard addressed many problems found in the diverse floating-point implementations that made them difficult to use reliably and portably. Many hardware floating-point units use the IEEE 754 standard.

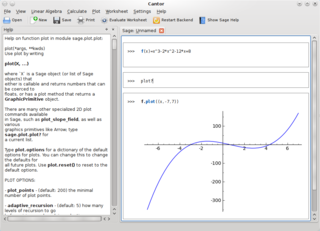

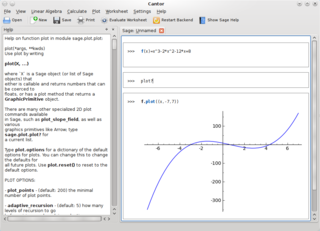

GNU Multiple Precision Arithmetic Library (GMP) is a free library for arbitrary-precision arithmetic, operating on signed integers, rational numbers, and floating-point numbers. There are no practical limits to the precision except the ones implied by the available memory (operands may be of up to 232−1 bits on 32-bit machines and 237 bits on 64-bit machines). GMP has a rich set of functions, and the functions have a regular interface. The basic interface is for C, but wrappers exist for other languages, including Ada, C++, C#, Julia, .NET, OCaml, Perl, PHP, Python, R, Ruby, and Rust. Prior to 2008, Kaffe, a Java virtual machine, used GMP to support Java built-in arbitrary precision arithmetic. Shortly after, GMP support was added to GNU Classpath.

C99 is an informal name for ISO/IEC 9899:1999, a past version of the C programming language standard. It extends the previous version (C90) with new features for the language and the standard library, and helps implementations make better use of available computer hardware, such as IEEE 754-1985 floating-point arithmetic, and compiler technology. The C11 version of the C programming language standard, published in 2011, replaces C99.

The GNU Scientific Library is a software library for numerical computations in applied mathematics and science. The GSL is written in C; wrappers are available for other programming languages. The GSL is part of the GNU Project and is distributed under the GNU General Public License.

In C and related programming languages, long double refers to a floating-point data type that is often more precise than double precision though the language standard only requires it to be at least as precise as double. As with C's other floating-point types, it may not necessarily map to an IEEE format.

Extended precision refers to floating-point number formats that provide greater precision than the basic floating-point formats. Extended precision formats support a basic format by minimizing roundoff and overflow errors in intermediate values of expressions on the base format. In contrast to extended precision, arbitrary-precision arithmetic refers to implementations of much larger numeric types using special software.

IEEE 754-2008 is a revision of the IEEE 754 standard for floating-point arithmetic. It was published in August 2008 and is a significant revision to, and replaces, the IEEE 754-1985 standard. The 2008 revision extended the previous standard where it was necessary, added decimal arithmetic and formats, tightened up certain areas of the original standard which were left undefined, and merged in IEEE 854 . In a few cases, where stricter definitions of binary floating-point arithmetic might be performance-incompatible with some existing implementation, they were made optional. In 2019, it was updated with a minor revision IEEE 754-2019.

In computing, half precision is a binary floating-point computer number format that occupies 16 bits in computer memory. It is intended for storage of floating-point values in applications where higher precision is not essential, in particular image processing and neural networks.

In computing, quadruple precision is a binary floating point–based computer number format that occupies 16 bytes with precision at least twice the 53-bit double precision.

Multiple Precision Integers and Rationals (MPIR) is an open-source software multiprecision integer library forked from the GNU Multiple Precision Arithmetic Library (GMP) project. It consists of much code from past GMP releases, and some original contributed code.

In computing, octuple precision is a binary floating-point-based computer number format that occupies 32 bytes in computer memory. This 256-bit octuple precision is for applications requiring results in higher than quadruple precision. This format is rarely used and very few environments support it.

Validated numerics, or rigorous computation, verified computation, reliable computation, numerical verification is numerics including mathematically strict error evaluation, and it is one field of numerical analysis. For computation, interval arithmetic is used, and all results are represented by intervals. Validated numerics were used by Warwick Tucker in order to solve the 14th of Smale's problems, and today it is recognized as a powerful tool for the study of dynamical systems.