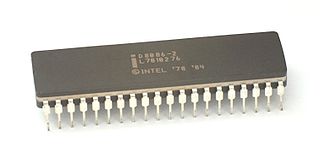

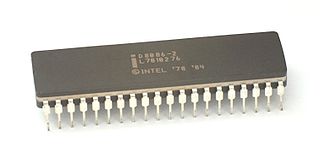

x86 is a family of complex instruction set computer (CISC) instruction set architectures initially developed by Intel based on the Intel 8086 microprocessor and its 8088 variant. The 8086 was introduced in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486 processors. Colloquially, their names were "186", "286", "386" and "486".

In computing, endianness is the order in which bytes within a word of digital data are transmitted over a data communication medium or stored (upwardly) in computer memory, counting only byte significance compared to earliness. Endianness is primarily expressed as big-endian (BE) or little-endian (LE), terms introduced by Danny Cohen into computer science for data ordering in an Internet Experiment Note published in 1980. The adjective endian has its origin in the writings of 18th century Anglo-Irish writer Jonathan Swift. In the 1726 novel Gulliver's Travels, he portrays the conflict between sects of Lilliputians divided into those breaking the shell of a boiled egg from the big end or from the little end. By analogy, a CPU may read a digital word big end first, or little end first.

x86 memory segmentation refers to the implementation of memory segmentation in the Intel x86 computer instruction set architecture. Segmentation was introduced on the Intel 8086 in 1978 as a way to allow programs to address more than 64 KB (65,536 bytes) of memory. The Intel 80286 introduced a second version of segmentation in 1982 that added support for virtual memory and memory protection. At this point the original mode was renamed to real mode, and the new version was named protected mode. The x86-64 architecture, introduced in 2003, has largely dropped support for segmentation in 64-bit mode.

In computing, protected mode, also called protected virtual address mode, is an operational mode of x86-compatible central processing units (CPUs). It allows system software to use features such as segmentation, virtual memory, paging and safe multi-tasking designed to increase an operating system's control over application software.

A memory management unit (MMU), sometimes called paged memory management unit (PMMU), is a computer hardware unit that examines all memory references on the memory bus, translating these requests, known as virtual memory addresses, into physical addresses in main memory.

x86 assembly language is the name for the family of assembly languages which provide some level of backward compatibility with CPUs back to the Intel 8008 microprocessor, which was launched in April 1972. It is used to produce object code for the x86 class of processors.

x86-64 is a 64-bit version of the x86 instruction set, first announced in 1999. It introduced two new modes of operation, 64-bit mode and compatibility mode, along with a new 4-level paging mode.

In computing, Physical Address Extension (PAE), sometimes referred to as Page Address Extension, is a memory management feature for the x86 architecture. PAE was first introduced by Intel in the Pentium Pro, and later by AMD in the Athlon processor. It defines a page table hierarchy of three levels (instead of two), with table entries of 64 bits each instead of 32, allowing these CPUs to directly access a physical address space larger than 4 gigabytes (232 bytes).

In DOS memory management, extended memory refers to memory above the first megabyte (220 bytes) of address space in an IBM PC or compatible with an 80286 or later processor. The term is mainly used under the DOS and Windows operating systems. DOS programs, running in real mode or virtual x86 mode, cannot directly access this memory, but are able to do so through an application programming interface called the Extended Memory Specification (XMS). This API is implemented by a driver (such as HIMEM.SYS) or the operating system, which takes care of memory management and copying memory between conventional and extended memory, by temporarily switching the processor into protected mode. In this context, the term "extended memory" may refer to either the whole of the extended memory or only the portion available through this API.

Memory protection is a way to control memory access rights on a computer, and is a part of most modern instruction set architectures and operating systems. The main purpose of memory protection is to prevent a process from accessing memory that has not been allocated to it. This prevents a bug or malware within a process from affecting other processes, or the operating system itself. Protection may encompass all accesses to a specified area of memory, write accesses, or attempts to execute the contents of the area. An attempt to access unauthorized memory results in a hardware fault, e.g., a segmentation fault, storage violation exception, generally causing abnormal termination of the offending process. Memory protection for computer security includes additional techniques such as address space layout randomization and executable space protection.

A general protection fault (GPF) in the x86 instruction set architectures (ISAs) is a fault initiated by ISA-defined protection mechanisms in response to an access violation caused by some running code, either in the kernel or a user program. The mechanism is first described in Intel manuals and datasheets for the Intel 80286 CPU, which was introduced in 1983; it is also described in section 9.8.13 in the Intel 80386 programmer's reference manual from 1986. A general protection fault is implemented as an interrupt. Some operating systems may also classify some exceptions not related to access violations, such as illegal opcode exceptions, as general protection faults, even though they have nothing to do with memory protection. If a CPU detects a protection violation, it stops executing the code and sends a GPF interrupt. In most cases, the operating system removes the failing process from the execution queue, signals the user, and continues executing other processes. If, however, the operating system fails to catch the general protection fault, i.e. another protection violation occurs before the operating system returns from the previous GPF interrupt, the CPU signals a double fault, stopping the operating system. If yet another failure occurs, the CPU is unable to recover; since 80286, the CPU enters a special halt state called "Shutdown", which can only be exited through a hardware reset. The IBM PC AT, the first PC-compatible system to contain an 80286, has hardware that detects the Shutdown state and automatically resets the CPU when it occurs. All descendants of the PC AT do the same, so in a PC, a triple fault causes an immediate system reset.

The x86 instruction set refers to the set of instructions that x86-compatible microprocessors support. The instructions are usually part of an executable program, often stored as a computer file and executed on the processor.

The NX bit (no-execute) is a technology used in CPUs to segregate areas of a virtual address space to store either data or processor instructions. An operating system with support for the NX bit may mark certain areas of an address space as non-executable. The processor will then refuse to execute any code residing in these areas of the address space. The general technique, known as executable space protection, also called Write XOR Execute, is used to prevent certain types of malicious software from taking over computers by inserting their code into another program's data storage area and running their own code from within this section; one class of such attacks is known as the buffer overflow attack.

In x86 computing, unreal mode, also big real mode, flat real mode, or voodoo mode is a variant of real mode, in which one or more segment descriptors has been loaded with non-standard values, like 32-bit limits allowing access to the entire memory. Contrary to its name, it is not a separate addressing mode that the x86 processors can operate in. It is used in the 80286 and later x86 processors.

Memory segmentation is an operating system memory management technique of dividing a computer's primary memory into segments or sections. In a computer system using segmentation, a reference to a memory location includes a value that identifies a segment and an offset within that segment. Segments or sections are also used in object files of compiled programs when they are linked together into a program image and when the image is loaded into memory.

The interrupt descriptor table (IDT) is a data structure used by the x86 architecture to implement an interrupt vector table. The IDT is used by the processor to determine the correct response to interrupts and exceptions.

A call gate is a mechanism in Intel's x86 architecture for changing the privilege level of a process when it executes a predefined function call using a CALL FAR instruction.

The task state segment (TSS) is a structure on x86-based computers which holds information about a task. It is used by the operating system kernel for task management. Specifically, the following information is stored in the TSS:

In memory addressing for Intel x86 computer architectures, segment descriptors are a part of the segmentation unit, used for translating a logical address to a linear address. Segment descriptors describe the memory segment referred to in the logical address. The segment descriptor contains the following fields:

- A segment base address

- The segment limit which specifies the segment size

- Access rights byte containing the protection mechanism information

- Control bits

Intel 5-level paging, referred to simply as 5-level paging in Intel documents, is a processor extension for the x86-64 line of processors. It extends the size of virtual addresses from 48 bits to 57 bits by adding an additional level to x86-64's multilevel page tables, increasing the addressable virtual memory from 256 TB to 128 PB. The extension was first implemented in the Ice Lake processors.