Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

Reconfigurable computing is a computer architecture combining some of the flexibility of software with the high performance of hardware by processing with flexible hardware platforms like field-programmable gate arrays (FPGAs). The principal difference from ordinary microprocessors is the ability to add custom computational blocks using FPGAs. The main difference from custom hardware, i.e. application-specific integrated circuits (ASICs), is the possibility of adapting the hardware at runtime by "loading" a new circuit onto the reconfigurable fabric, thus providing new computational blocks without the need to manufacture and add new chips to the existing system.

Molecular dynamics (MD) is a computer simulation method for analyzing the physical movements of atoms and molecules. The atoms and molecules are allowed to interact for a fixed period of time, giving a view of the dynamic "evolution" of the system. In the most common version, the trajectories of atoms and molecules are determined by numerically solving Newton's equations of motion for a system of interacting particles, where forces between the particles and their potential energies are often calculated using interatomic potentials or molecular mechanical force fields. The method is applied mostly in chemical physics, materials science, and biophysics.
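The numerical integration step described above can be illustrated with a minimal sketch. It assumes a toy system that is not taken from any particular MD package: two particles in one dimension joined by a harmonic spring, which stands in for an interatomic potential. The integrator is velocity Verlet, one common choice among several; the function names and parameters (`spring_forces`, `velocity_verlet`, `k`, `r0`, `dt`) are hypothetical.

```python
def spring_forces(x, k=1.0, r0=1.0):
    """Forces on two particles joined by a harmonic spring of rest length r0.

    Toy stand-in for an interatomic potential: U = 0.5 * k * (x[1] - x[0] - r0)**2.
    """
    stretch = (x[1] - x[0]) - r0
    f = k * stretch
    return [f, -f]  # equal and opposite forces (Newton's third law)


def velocity_verlet(positions, velocities, masses, force_fn, dt, n_steps):
    """Integrate Newton's equations of motion with the velocity Verlet scheme."""
    x = list(positions)
    v = list(velocities)
    f = force_fn(x)
    trajectory = [list(x)]
    for _ in range(n_steps):
        # Half-step velocity update using the current forces.
        v = [vi + 0.5 * dt * fi / mi for vi, fi, mi in zip(v, f, masses)]
        # Full-step position update.
        x = [xi + dt * vi for xi, vi in zip(x, v)]
        # Recompute forces at the new positions, then finish the velocity update.
        f = force_fn(x)
        v = [vi + 0.5 * dt * fi / mi for vi, fi, mi in zip(v, f, masses)]
        trajectory.append(list(x))
    return trajectory, v


def total_energy(x, v, masses, k=1.0, r0=1.0):
    """Kinetic plus potential energy of the two-particle spring system."""
    kinetic = sum(0.5 * m * vi * vi for m, vi in zip(masses, v))
    potential = 0.5 * k * ((x[1] - x[0]) - r0) ** 2
    return kinetic + potential


if __name__ == "__main__":
    traj, v_end = velocity_verlet([0.0, 1.5], [0.0, 0.0], [1.0, 1.0],
                                  spring_forces, dt=0.01, n_steps=1000)
    print(traj[-1], total_energy(traj[-1], v_end, [1.0, 1.0]))
```

The recorded list of positions is the "trajectory" in the sense used above. Real MD codes differ mainly in the force calculation, which involves many particles in three dimensions and dominates the cost.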

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on mathematical aspects of computer science such as the theory of computation (TOC), formal language theory, the lambda calculus and type theory.

Unified Parallel C (UPC) is an extension of the C programming language designed for high-performance computing on large-scale parallel machines, including those with a common global address space and those with distributed memory. The programmer is presented with a single partitioned global address space, in which shared variables may be directly read and written by any processor, but each variable is physically associated with a single processor. UPC uses a single program, multiple data (SPMD) model of computation in which the amount of parallelism is fixed at program startup time, typically with a single thread of execution per processor.

In computer programming, dataflow programming is a programming paradigm that models a program as a directed graph of the data flowing between operations, thus implementing dataflow principles and architecture. Dataflow programming languages share some features of functional languages, and were generally developed in order to bring some functional concepts to a language more suitable for numeric processing. Some authors use the term datastream instead of dataflow to avoid confusion with dataflow computing or dataflow architecture, based on an indeterministic machine paradigm. Dataflow programming was pioneered by Jack Dennis and his graduate students at MIT in the 1960s.
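The idea of a program as a directed graph of data flowing between operations can be sketched in a few lines. The following is only an illustration under assumptions, not how any particular dataflow language is implemented: the graph format (`name -> (function, list of upstream names)`) and the function `run_dataflow` are hypothetical, and nodes are evaluated one at a time in dependency order using the standard-library `graphlib` module (Python 3.9 or later).

```python
import operator
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_dataflow(graph, inputs):
    """Evaluate a dataflow graph.

    graph:  maps a node name to (function, [names of the nodes feeding it]).
    inputs: maps source-node names to their initial values.
    Each operation runs once all of its input values are available.
    """
    values = dict(inputs)
    dependencies = {name: set(sources) for name, (_, sources) in graph.items()}
    for name in TopologicalSorter(dependencies).static_order():
        if name in values:
            continue  # source node: its value was supplied directly
        fn, sources = graph[name]
        values[name] = fn(*(values[s] for s in sources))
    return values


if __name__ == "__main__":
    # out = (a * b) - (a + b); "sum" and "prod" do not depend on each other.
    graph = {
        "sum": (operator.add, ["a", "b"]),
        "prod": (operator.mul, ["a", "b"]),
        "out": (operator.sub, ["prod", "sum"]),
    }
    print(run_dataflow(graph, {"a": 3, "b": 4})["out"])  # prints 5
```

Because `sum` and `prod` share no edge, a dataflow runtime would be free to execute them in parallel; this sketch runs them sequentially for simplicity.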

In computing, a parallel programming model is an abstraction of parallel computer architecture, with which it is convenient to express algorithms and their composition in programs. The value of a programming model can be judged on its generality (how well a range of different problems can be expressed for a variety of different architectures) and its performance (how efficiently the compiled programs can execute). The implementation of a parallel programming model can take the form of a library invoked from a sequential language, an extension to an existing language, or an entirely new language.

Concurrent computing is a form of computing in which several computations are executed concurrently, that is, during overlapping time periods, instead of sequentially, with one completing before the next starts.

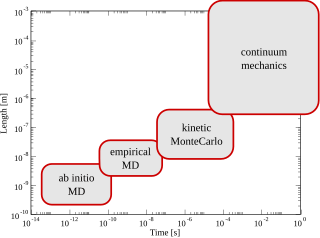

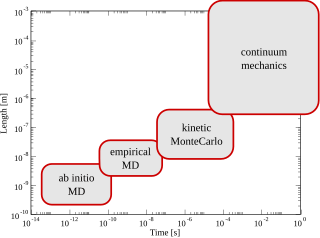

Multiscale modeling or multiscale mathematics is the field of solving problems that have important features at multiple scales of time and/or space. Important problems include multiscale modeling of fluids, solids, polymers, proteins, nucleic acids as well as various physical and chemical phenomena.

SIMD within a register (SWAR), also known as "packed SIMD", is a technique for performing parallel operations on data contained in a processor register. SIMD stands for single instruction, multiple data. Flynn's 1972 taxonomy categorises SWAR as "pipelined processing".
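As a concrete illustration of the technique, the sketch below adds four unsigned 8-bit lanes packed into one 32-bit word using ordinary integer operations, with masking so that a carry never spills from one lane into the next. It is a well-known bit trick shown under assumptions: Python integers stand in for a 32-bit register, and the helper names (`pack`, `unpack`, `swar_add_u8x4`) are hypothetical.

```python
HIGH_BITS = 0x80808080  # top bit of each 8-bit lane
LOW_BITS = 0x7F7F7F7F   # low 7 bits of each 8-bit lane


def pack(lanes):
    """Pack four values (each reduced mod 256) into one 32-bit word, lane 0 lowest."""
    return sum((v & 0xFF) << (8 * i) for i, v in enumerate(lanes))


def unpack(word):
    """Split a 32-bit word back into its four 8-bit lanes."""
    return [(word >> (8 * i)) & 0xFF for i in range(4)]


def swar_add_u8x4(a, b):
    """Lane-wise addition mod 256 of two packed words, using one wide add.

    The low 7 bits of every lane are added together; any carry lands in
    bit 7 of the same lane, so it cannot cross into the neighbouring lane.
    The true top bit of each lane is then restored with XOR.
    """
    low = (a & LOW_BITS) + (b & LOW_BITS)
    return (low ^ ((a ^ b) & HIGH_BITS)) & 0xFFFFFFFF


if __name__ == "__main__":
    x = pack([1, 200, 255, 16])
    y = pack([2, 100, 1, 240])
    print(unpack(swar_add_u8x4(x, y)))  # prints [3, 44, 0, 0]
```

A single wide addition plus a few logical operations replaces four separate byte additions, which is the point of the technique.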

Programmable matter is matter which has the ability to change its physical properties in a programmable fashion, based upon user input or autonomous sensing. Programmable matter is thus linked to the concept of a material which inherently has the ability to perform information processing.

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied to regular data structures such as arrays and matrices by working on each element in parallel. It contrasts with task parallelism, another form of parallelism.
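A minimal sketch of the idea: the data is split into chunks, and every worker applies the same operation to its own chunk. The names (`split`, `square_chunk`, `parallel_square`) are hypothetical, and a thread pool from the standard library is used only to keep the example short. In CPython, threads do not speed up pure-Python arithmetic like this, so the sketch illustrates the structure rather than the performance benefit.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_parts):
    """Split a list into at most n_parts contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // n_parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def square_chunk(chunk):
    """The single operation that every worker applies to its share of the data."""
    return [x * x for x in chunk]


def parallel_square(data, n_workers=4):
    """Square every element by distributing chunks of the data across workers."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() returns results in the same order as the input chunks.
        results = list(pool.map(square_chunk, chunks))
    return [y for part in results for y in part]


if __name__ == "__main__":
    print(parallel_square(list(range(10)), n_workers=3))
```

Swapping `ThreadPoolExecutor` for `ProcessPoolExecutor` would be one way to run the chunks on separate processor cores, at the cost of copying data between processes.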

Task parallelism is a form of parallelization of computer code across multiple processors in parallel computing environments. Task parallelism focuses on distributing tasks, concurrently performed by processes or threads, across different processors. In contrast to data parallelism, which involves running the same task on different components of data, task parallelism is distinguished by running many different tasks at the same time on the same data. A common type of task parallelism is pipelining, which consists of moving a single set of data through a series of separate tasks where each task can execute independently of the others.
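To make the contrast concrete, the sketch below submits several different tasks over the same data to a pool of workers, so that each worker runs a different function. The helper `run_tasks` and the task names are hypothetical; a standard-library thread pool is assumed for brevity, and pipelining is not shown.

```python
from concurrent.futures import ThreadPoolExecutor


def run_tasks(data, tasks):
    """Run several different tasks concurrently, all reading the same data.

    tasks: maps a task name to a function that takes the data as its argument.
    Returns a dict mapping each task name to that task's result.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        # Each submit() starts a different function on the same input.
        futures = {name: pool.submit(fn, data) for name, fn in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    readings = [3, 1, 4, 1, 5]
    print(run_tasks(readings, {"total": sum, "smallest": min, "largest": max}))
```

Here the unit of distribution is the task (sum, minimum, maximum) rather than a slice of the data.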

This is a glossary of terms used in the field of reconfigurable computing and reconfigurable computing systems, as opposed to the traditional von Neumann architecture.

Plurality Ltd. is an Israeli semiconductor company, the developer of the HyperCore technology and the HAL multi-core processor. The company is a member of the Multicore Association.

The High-performance Integrated Virtual Environment (HIVE) is a distributed computing environment used for healthcare-IT and biological research, including analysis of Next Generation Sequencing (NGS) data, preclinical, clinical and post-market data, adverse events, metagenomic data, etc. It is currently supported and continuously developed by the US Food and Drug Administration (FDA), George Washington University (GWU), DNA-HIVE, WHISE-Global and Embleema. HIVE is fully operational within the US FDA, where it supports a wide variety (more than 60) of regulatory research and regulatory review projects as well as MDEpiNet medical device postmarket registries. Academic deployments of HIVE are used for research activities and publications in NGS analytics, cancer research and microbiome research, and in educational programs for students at GWU. Commercial enterprises use HIVE for oncology, microbiology, vaccine manufacturing, gene editing, healthcare-IT, harmonization of real-world data, preclinical research and clinical studies.

The Xputer is a design for a reconfigurable computer, proposed by computer scientist Reiner Hartenstein. Hartenstein uses various terms to describe the various innovations in the design, including config-ware, flow-ware, morph-ware, and "anti-machine".

Coarse-grained modeling, or the use of coarse-grained models, aims at simulating the behaviour of complex systems using a coarse-grained (simplified) representation of them. Coarse-grained models are widely used for molecular modeling of biomolecules at various granularity levels.

In parallel computing, granularity of a task is a measure of the amount of work which is performed by that task.

Nader Bagherzadeh is a professor of computer engineering in the Department of Electrical Engineering and Computer Science at the University of California, Irvine, where he served as chair from 1998 to 2003. Bagherzadeh has been involved in research and development in the areas of computer architecture, reconfigurable computing, VLSI chip design, network-on-chip, 3D chips, sensor networks, computer graphics, memory and embedded systems. Bagherzadeh was named Fellow of the Institute of Electrical and Electronics Engineers (IEEE) in 2014 for contributions to the design and analysis of coarse-grained reconfigurable processor architectures. Bagherzadeh has published more than 400 articles in peer-reviewed journals and conferences. He was with AT&T Bell Labs from 1980 to 1984.