The precautionary principle is a broad epistemological, philosophical and legal approach to innovations with potential for causing harm when extensive scientific knowledge on the matter is lacking. It emphasizes caution, pausing and review before leaping into innovations that may prove disastrous. Critics argue that it is vague, self-cancelling, unscientific and an obstacle to progress.

Rationality is the quality of being guided by or based on reasons. In this regard, a person acts rationally if they have a good reason for what they do, and a belief is rational if it is based on strong evidence. This quality can apply to an ability, as in rational animal, to a psychological process, like reasoning, to mental states, such as beliefs and intentions, or to persons who possess these other forms of rationality. A thing that lacks rationality is either arational, if it is outside the domain of rational evaluation, or irrational, if it belongs to this domain but does not fulfill its standards.

In economics and business decision-making, a sunk cost is a cost that has already been incurred and cannot be recovered. Sunk costs are contrasted with prospective costs, which are future costs that may be avoided if action is taken. In other words, a sunk cost is a sum paid in the past that is no longer relevant to decisions about the future. Even though economists argue that sunk costs are irrelevant to rational decisions about the future, people in everyday life often factor previous expenditures, such as money already spent on repairing a car or house, into their future decisions regarding those properties.
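The irrelevance of sunk costs can be illustrated with a minimal sketch. The option names, the dollar figures, and the helper functions `prospective_net` and `best_option` are hypothetical, chosen only for illustration. Because the sunk cost is the same whichever option is chosen, subtracting it from every option leaves the ranking unchanged.

```python
# Hypothetical car-repair decision: all figures are illustrative assumptions.
options = {
    "repair": {"future_cost": 2000.0, "future_benefit": 1500.0},
    "sell_as_is": {"future_cost": 0.0, "future_benefit": 400.0},
}


def prospective_net(option, sunk_cost=0.0):
    """Net value of an option; the sunk cost is subtracted uniformly."""
    return option["future_benefit"] - option["future_cost"] - sunk_cost


def best_option(options, sunk_cost=0.0):
    """Return the name of the option with the highest net value."""
    return max(options, key=lambda name: prospective_net(options[name], sunk_cost))


# The same constant is subtracted from every option, so the choice is identical.
choice_ignoring_sunk = best_option(options)
choice_including_sunk = best_option(options, sunk_cost=3000.0)
print(choice_ignoring_sunk, choice_including_sunk)
```

The sunk-cost fallacy corresponds to treating the 3000 already spent as a reason to prefer "repair", even though it cannot change which option has the better prospective outcome.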

Behavioral economics studies the effects of psychological, cognitive, emotional, cultural and social factors on the decisions of individuals or institutions, such as how those decisions vary from those implied by classical economic theory.

Prospect theory is a theory of behavioral economics and behavioral finance that was developed by Daniel Kahneman and Amos Tversky in 1979. The theory was cited in the decision to award Kahneman the 2002 Nobel Memorial Prize in Economics.

Decision theory is a branch of applied probability theory and analytic philosophy concerned with the theory of making decisions based on assigning probabilities to various factors and assigning numerical consequences to the outcome.

The expected utility hypothesis is a popular concept in economics that serves as a reference guide for decisions when the payoff is uncertain. The theory recommends which option rational individuals should choose in a complex situation, based on their risk appetite and preferences.

Cass Robert Sunstein is an American legal scholar known for his studies of constitutional law, administrative law, environmental law, and behavioral economics. He is also a New York Times best-selling author whose books include The World According to Star Wars (2016) and Nudge (2008). He was the Administrator of the White House Office of Information and Regulatory Affairs in the Obama administration from 2009 to 2012.

In decision theory, the Ellsberg paradox is a paradox in which people's decisions are inconsistent with subjective expected utility theory. Daniel Ellsberg popularized the paradox in his 1961 paper, “Risk, Ambiguity, and the Savage Axioms”. John Maynard Keynes published a version of the paradox in 1921. It is generally taken to be evidence of ambiguity aversion, in which a person tends to prefer choices with quantifiable risks over those with unknown, incalculable risks.

The Allais paradox is a choice problem designed by Maurice Allais (1953) to show an inconsistency of actual observed choices with the predictions of expected utility theory.
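The inconsistency can be checked numerically. The sketch below uses the commonly cited version of the payoffs (in dollars), which is an assumption here since the text above does not list them. The three utility functions are arbitrary illustrations. For any utility function, the expected-utility gap between gambles 1A and 1B equals the gap between 2A and 2B, so an expected-utility maximizer who prefers 1A must also prefer 2A. The frequently observed pattern of choosing 1A and 2B therefore cannot be rationalized.

```python
import math

# Commonly cited Allais gambles as (probability, payoff) pairs (assumed figures).
GAMBLE_1A = [(1.00, 1_000_000)]
GAMBLE_1B = [(0.89, 1_000_000), (0.01, 0), (0.10, 5_000_000)]
GAMBLE_2A = [(0.89, 0), (0.11, 1_000_000)]
GAMBLE_2B = [(0.90, 0), (0.10, 5_000_000)]


def expected_utility(lottery, utility):
    """Probability-weighted sum of the utility of each payoff."""
    return sum(p * utility(x) for p, x in lottery)


def preference_gap(first, second, utility):
    """Positive when `first` has the higher expected utility."""
    return expected_utility(first, utility) - expected_utility(second, utility)


# Illustrative utility functions; any other choice gives the same equality.
utilities = {
    "linear": lambda x: float(x),
    "sqrt": math.sqrt,
    "log": math.log1p,
}

gaps = {}
for name, u in utilities.items():
    gap_1 = preference_gap(GAMBLE_1A, GAMBLE_1B, u)
    gap_2 = preference_gap(GAMBLE_2A, GAMBLE_2B, u)
    gaps[name] = (gap_1, gap_2)
    print(f"{name}: gap in experiment 1 = {gap_1:.6f}, experiment 2 = {gap_2:.6f}")
```

The two gaps coincide because both reduce to 0.11·u(1M) − 0.01·u(0) − 0.10·u(5M): the gambles in the second experiment are those of the first with a common 0.89 chance of 1M replaced by a 0.89 chance of nothing.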

Libertarian paternalism is the idea that it is both possible and legitimate for private and public institutions to affect behavior while also respecting freedom of choice, as well as the implementation of that idea. The term was coined by behavioral economist Richard Thaler and legal scholar Cass Sunstein in a 2003 article in the American Economic Review. The authors further elaborated upon their ideas in a more in-depth article published in the University of Chicago Law Review that same year. They propose that libertarian paternalism is paternalism in the sense that "it tries to influence choices in a way that will make choosers better off, as judged by themselves"; note, however, that the concept of paternalism specifically requires a restriction of choice. It is libertarian in the sense that it aims to ensure that "people should be free to opt out of specified arrangements if they choose to do so". The possibility to opt out is said to "preserve freedom of choice". Thaler and Sunstein published Nudge, a book-length defense of this political doctrine, in 2008.

Zero-risk bias is a tendency to prefer the complete elimination of risk in a sub-part over alternatives with greater overall risk reduction. It often manifests in cases where decision makers address problems concerning health, safety, and the environment. Its effect on decision making has been observed in surveys presenting hypothetical scenarios.
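A small arithmetic sketch makes the bias concrete. The two sites, the case counts, and the option names are hypothetical figures invented for illustration, not data from any survey. One option removes all risk in a sub-part, while the other achieves the larger overall reduction without bringing any sub-part to zero.

```python
# Hypothetical expected cases per year at two sites (illustrative numbers only).
baseline = {"site_x": 8, "site_y": 4}

# Expected cases per site after each intervention.
options = {
    "eliminate_y": {"site_x": 8, "site_y": 0},  # zero risk in one sub-part
    "reduce_x": {"site_x": 2, "site_y": 4},     # larger overall reduction
}


def total_risk(cases_by_site):
    """Total expected cases across all sites."""
    return sum(cases_by_site.values())


# Overall reduction in expected cases for each option.
reductions = {
    name: total_risk(baseline) - total_risk(after)
    for name, after in options.items()
}

best_overall = max(reductions, key=reductions.get)
zero_risk_options = [
    name for name, after in options.items() if 0 in after.values()
]

print(reductions)
print("largest overall reduction:", best_overall)
print("options that zero out a sub-part:", zero_risk_options)
```

A decision maker exhibiting zero-risk bias would pick the option in `zero_risk_options` even though `best_overall` names a different option with the greater total reduction.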

Choice architecture is the design of different ways in which choices can be presented to decision makers, and the impact of that presentation on decision-making. For example, each of the following:

Attribute substitution is a psychological process thought to underlie a number of cognitive biases and perceptual illusions. It occurs when an individual has to make a judgment that is computationally complex, and instead substitutes a more easily calculated heuristic attribute. This substitution is thought of as taking place in the automatic intuitive judgment system, rather than the more self-aware reflective system. Hence, when someone tries to answer a difficult question, they may actually answer a related but different question, without realizing that a substitution has taken place. This explains why individuals can be unaware of their own biases, and why biases persist even when the subject is made aware of them. It also explains why human judgments often fail to show regression toward the mean.

In simple terms, risk is the possibility of something bad happening. Risk involves uncertainty about the effects/implications of an activity with respect to something that humans value, often focusing on negative, undesirable consequences. Many different definitions have been proposed. The international standard definition of risk for common understanding in different applications is “effect of uncertainty on objectives”.

In decision theory, the von Neumann–Morgenstern (VNM) utility theorem shows that, under certain axioms of rational behavior, a decision-maker faced with risky (probabilistic) outcomes of different choices will behave as if he or she is maximizing the expected value of some function defined over the potential outcomes at some specified point in the future. This function is known as the von Neumann–Morgenstern utility function. The theorem is the basis for expected utility theory.
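Stated in symbols, as a sketch using notation that is not taken from the text above: write $\preceq$ for the decision-maker's preference over lotteries, $p_i$ and $q_i$ for the probabilities that lotteries $L$ and $M$ assign to outcome $x_i$, and $u$ for the utility function. If $\preceq$ satisfies the axioms (commonly listed as completeness, transitivity, continuity and independence), then there exists a function $u$ such that

$$
L \preceq M \iff \sum_i p_i \, u(x_i) \le \sum_i q_i \, u(x_i).
$$

The function $u$ is unique only up to a positive affine transformation: $a\,u + b$ with $a > 0$ represents the same preferences.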

Heuristics is the process by which humans use mental shortcuts to arrive at decisions. Heuristics are simple strategies that humans, animals, organizations, and even machines use to quickly form judgments, make decisions, and find solutions to complex problems. Often this involves focusing on the most relevant aspects of a problem or situation to formulate a solution. While heuristic processes are used to find the answers and solutions that are most likely to work or be correct, they are not always right or the most accurate. Judgments and decisions based on heuristics are often merely good enough to satisfy a pressing need in situations of uncertainty, where information is incomplete. In that sense they can differ from answers given by logic and probability.

Risk aversion is a preference for a sure outcome over a gamble with higher or equal expected value. Conversely, the rejection of a sure thing in favor of a gamble of lower or equal expected value is known as risk-seeking behavior.
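The definition can be illustrated with a short sketch. The gamble, the sure amount, and the square-root utility function are assumptions chosen for illustration; a concave utility function is one standard way to model a risk-averse agent. The gamble and the sure outcome have the same expected value, yet the sure outcome has the higher expected utility.

```python
import math


def expected_value(lottery):
    """Probability-weighted average payoff of (probability, payoff) pairs."""
    return sum(p * x for p, x in lottery)


def expected_utility(lottery, utility):
    """Probability-weighted average of the utility of each payoff."""
    return sum(p * utility(x) for p, x in lottery)


# Hypothetical choice: a 50/50 gamble over 0 or 100 versus a certain 50.
gamble = [(0.5, 0.0), (0.5, 100.0)]
sure_thing = [(1.0, 50.0)]

# Concave utility (square root) as an assumed model of a risk-averse agent.
utility = math.sqrt

ev_gamble = expected_value(gamble)
ev_sure = expected_value(sure_thing)

# Certainty equivalent: the sure amount giving the same utility as the gamble.
# For u(x) = sqrt(x), the inverse is squaring.
certainty_equivalent = expected_utility(gamble, utility) ** 2

prefers_sure_thing = (
    expected_utility(sure_thing, utility) > expected_utility(gamble, utility)
)

print(ev_gamble, ev_sure, certainty_equivalent, prefers_sure_thing)
```

The gap between the gamble's expected value and its certainty equivalent is the risk premium: the amount this agent would give up to avoid the gamble.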

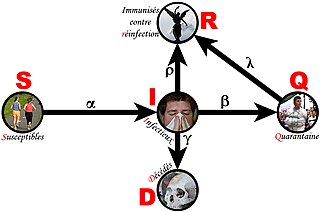

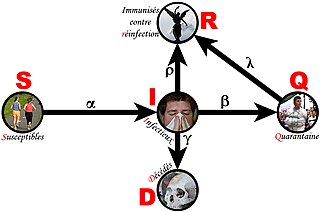

The discipline of forensic epidemiology (FE) is a hybrid of principles and practices common to both forensic medicine and epidemiology. FE is directed at filling the gap between clinical judgment and epidemiologic data for determinations of causality in civil lawsuits and criminal prosecution and defense.

Noise: A Flaw in Human Judgment is a nonfiction book by professors Daniel Kahneman, Olivier Sibony and Cass Sunstein. It was first published on May 18, 2021. The book concerns 'noise' in human judgment and decision-making. The authors define noise in human judgment as "undesirable variability in judgments of the same problem" and focus on the statistical properties and psychological perspectives of the issue.