Related Research Articles

LINPACK is a software library for performing numerical linear algebra on digital computers. It was written in Fortran by Jack Dongarra, Jim Bunch, Cleve Moler, and Gilbert Stewart, and was intended for use on supercomputers in the 1970s and early 1980s. It has been largely superseded by LAPACK, which runs more efficiently on modern architectures.

Netlib is a repository of software for scientific computing maintained by AT&T, Bell Laboratories, the University of Tennessee and Oak Ridge National Laboratory. Netlib comprises many separate programs and libraries. Most of the code is written in C and Fortran, with some programs in other languages.

Jack Joseph Dongarra is an American computer scientist and mathematician. He is the American University Distinguished Professor of Computer Science in the Electrical Engineering and Computer Science Department at the University of Tennessee. He holds the position of a Distinguished Research Staff member in the Computer Science and Mathematics Division at Oak Ridge National Laboratory, Turing Fellowship in the School of Mathematics at the University of Manchester, and is an adjunct professor and teacher in the Computer Science Department at Rice University. He served as a faculty fellow at the Texas A&M University Institute for Advanced Study (2014–2018). Dongarra is the founding director of the Innovative Computing Laboratory at the University of Tennessee. He was the recipient of the Turing Award in 2021.

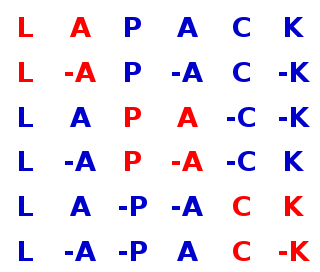

LAPACK is a standard software library for numerical linear algebra. It provides routines for solving systems of linear equations and linear least squares, eigenvalue problems, and singular value decomposition. It also includes routines to implement the associated matrix factorizations such as LU, QR, Cholesky and Schur decomposition. LAPACK was originally written in FORTRAN 77, but moved to Fortran 90 in version 3.2 (2008). The routines handle both real and complex matrices in both single and double precision. LAPACK relies on an underlying BLAS implementation to provide efficient and portable computational building blocks for its routines.

Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations such as vector addition, scalar multiplication, dot products, linear combinations, and matrix multiplication. They are the de facto standard low-level routines for linear algebra libraries; the routines have bindings for both C and Fortran. Although the BLAS specification is general, BLAS implementations are often optimized for speed on a particular machine, so using them can bring substantial performance benefits. BLAS implementations will take advantage of special floating point hardware such as vector registers or SIMD instructions.

Computational science, also known as scientific computing, technical computing or scientific computation (SC), is a division of science that uses advanced computing capabilities to understand and solve complex physical problems. This includes

AMD Core Math Library (ACML) is an end-of-life software development library released by AMD, replaced by many open source libraries, including AMD libm 4.0. This library provides mathematical routines optimized for AMD processors.

The ScaLAPACK library includes a subset of LAPACK routines redesigned for distributed memory MIMD parallel computers. It is currently written in a Single-Program-Multiple-Data style using explicit message passing for interprocessor communication. It assumes matrices are laid out in a two-dimensional block cyclic decomposition.

Automatically Tuned Linear Algebra Software (ATLAS) is a software library for linear algebra. It provides a mature open source implementation of BLAS APIs for C and Fortran77.

Trilinos is a collection of open-source software libraries, called packages, intended to be used as building blocks for the development of scientific applications. The word "Trilinos" is Greek and conveys the idea of "a string of pearls", suggesting a number of software packages linked together by a common infrastructure. Trilinos was developed at Sandia National Laboratories from a core group of existing algorithms and utilizes the functionality of software interfaces such as the BLAS, LAPACK, and MPI . In 2004, Trilinos received an R&D100 Award.

Lis is a scalable parallel software library for solving discretized linear equations and eigenvalue problems that mainly arise in the numerical solution of partial differential equations by using iterative methods. Although it is designed for parallel computers, the library can be used without being conscious of parallel processing.

Armadillo is a linear algebra software library for the C++ programming language. It aims to provide efficient and streamlined base calculations, while at the same time having a straightforward and easy-to-use interface. Its intended target users are scientists and engineers.

Intel oneAPI Math Kernel Library is a library of optimized math routines for science, engineering, and financial applications. Core math functions include BLAS, LAPACK, ScaLAPACK, sparse solvers, fast Fourier transforms, and vector math. That is besides the math.h standard C library, that is also more accurate compared to glibc.

The following tables provide a comparison of linear algebra software libraries, either specialized or general purpose libraries with significant linear algebra coverage.

The LINPACK Benchmarks are a measure of a system's floating-point computing power. Introduced by Jack Dongarra, they measure how fast a computer solves a dense n by n system of linear equations Ax = b, which is a common task in engineering.

In scientific computing, GotoBLAS and GotoBLAS2 are open source implementations of the BLAS API with many hand-crafted optimizations for specific processor types. GotoBLAS was developed by Kazushige Goto at the Texas Advanced Computing Center. As of 2003, it was used in seven of the world's ten fastest supercomputers.

Convex Over and Under ENvelopes for Nonlinear Estimation (Couenne) is an open-source library for solving global optimization problems, also termed mixed integer nonlinear optimization problems. A global optimization problem requires to minimize a function, called objective function, subject to a set of constraints. Both the objective function and the constraints might be nonlinear and nonconvex. For solving these problems, Couenne uses a reformulation procedure and provides a linear programming approximation of any nonconvex optimization problem.

OpenBLAS is an open-source implementation of the BLAS and LAPACK APIs with many hand-crafted optimizations for specific processor types. It is developed at the Lab of Parallel Software and Computational Science, ISCAS.

In scientific computing, BLIS is an open-source framework for implementing a superset of BLAS functionality for specific processor types that was recently awarded the J. H. Wilkinson Prize for Numerical Software. It exposes that functionality through two traditional Application Programming Interfaces (APIs): the BLAS interface and the CBLAS interface. BLIS also includes two APIs native to the framework: a typed (BLAS-like) API and an object API. These native interfaces provide access to BLAS-like functionality that is not supported by, but closely related to, operations found in the BLAS . The framework is developed and supported by the Science of High-Performance Computing (SHPC) group of the Oden Institute for Computational Engineering and Sciences at The University of Texas at Austin and the Matthews Research Group at Southern Methodist University.

References

- ↑ Choi, Jaeyoung; Dongarra, Jack; Ostrouchov, Susan; Petitet, Antoine; Walker, David; Whaley, R. Clinton (1996). "A proposal for a set of parallel basic linear algebra subprograms". Applied Parallel Computing Computations in Physics, Chemistry and Engineering Science. Lecture Notes in Computer Science. Vol. 1041. p. 107. doi:10.1007/3-540-60902-4_13. ISBN 978-3-540-60902-5.

- ↑ Petitet, Antoine (1995). "PBLAS". Netlib. Retrieved 13 July 2012.

- ↑ Jaeyoung Choi; Dongarra, J.J.; Walker, D.W. (May 1994). "PB-BLAS: A set of Parallel Block Basic Linear Algebra Subprograms" (PDF). Proceedings of IEEE Scalable High Performance Computing Conference. pp. 534–541. doi:10.1109/SHPCC.1994.296688. ISBN 0-8186-5680-8. S2CID 9013914.