ML is a functional programming language. It is known for its use of the polymorphic Hindley–Milner type system, which automatically assigns the types of most expressions without requiring explicit type annotations, and ensures type safety – there is a formal proof that a well-typed ML program does not cause runtime type errors. ML provides pattern matching for function arguments, garbage collection, imperative programming, call-by-value and currying. While a general-purpose programming language, ML is used heavily in programming language research and is one of the few languages to be completely specified and verified using formal semantics. Its types and pattern matching make it well-suited and commonly used to operate on other formal languages, such as in compiler writing, automated theorem proving, and formal verification.

Combinatory logic is a notation to eliminate the need for quantified variables in mathematical logic. It was introduced by Moses Schönfinkel and Haskell Curry, and has more recently been used in computer science as a theoretical model of computation and also as a basis for the design of functional programming languages. It is based on combinators, which were introduced by Schönfinkel in 1920 with the idea of providing an analogous way to build up functions—and to remove any mention of variables—particularly in predicate logic. A combinator is a higher-order function that uses only function application and earlier defined combinators to define a result from its arguments.

In computer programming, a type system is a logical system comprising a set of rules that assigns a property called a type to every "term". Usually the terms are various constructs of a computer program, such as variables, expressions, functions, or modules. A type system dictates the operations that can be performed on a term. For variables, the type system determines the allowed values of that term. Type systems formalize and enforce the otherwise implicit categories the programmer uses for algebraic data types, data structures, or other components.

In programming language theory and type theory, polymorphism is the provision of a single interface to entities of different types or the use of a single symbol to represent multiple different types. The concept is borrowed from a principle in biology where an organism or species can have many different forms or stages.

Type inference refers to the automatic detection of the type of an expression in a formal language. These include programming languages and mathematical type systems, but also natural languages in some branches of computer science and linguistics.

In type theory and programming languages, a type variable is a mathematical variable ranging over types. Even in programming languages that allow mutable variables, a type variable remains an abstraction, in the sense that it does not correspond to some memory locations.

In programming languages, ad hoc polymorphism is a kind of polymorphism in which polymorphic functions can be applied to arguments of different types, because a polymorphic function can denote a number of distinct and potentially heterogeneous implementations depending on the type of argument(s) to which it is applied. When applied to object-oriented or procedural concepts, it is also known as function overloading or operator overloading. The term ad hoc in this context is not intended to be pejorative; it refers simply to the fact that this type of polymorphism is not a fundamental feature of the type system. This is in contrast to parametric polymorphism, in which polymorphic functions are written without mention of any specific type, and can thus apply a single abstract implementation to any number of types in a transparent way. This classification was introduced by Christopher Strachey in 1967.

The Glasgow Haskell Compiler (GHC) is a native or machine code compiler for the functional programming language Haskell. It provides a cross-platform software environment for writing and testing Haskell code and supports many extensions, libraries, and optimisations that streamline the process of generating and executing code. GHC is the most commonly used Haskell compiler. It is free and open-source software released under a BSD license. The lead developers are Simon Peyton Jones and Simon Marlow.

In functional programming, a monad is a structure that combines program fragments (functions) and wraps their return values in a type with additional computation. In addition to defining a wrapping monadic type, monads define two operators: one to wrap a value in the monad type, and another to compose together functions that output values of the monad type. General-purpose languages use monads to reduce boilerplate code needed for common operations. Functional languages use monads to turn complicated sequences of functions into succinct pipelines that abstract away control flow, and side-effects.

System F is a typed lambda calculus that introduces, to simply typed lambda calculus, a mechanism of universal quantification over types. System F formalizes parametric polymorphism in programming languages, thus forming a theoretical basis for languages such as Haskell and ML. It was discovered independently by logician Jean-Yves Girard (1972) and computer scientist John C. Reynolds.

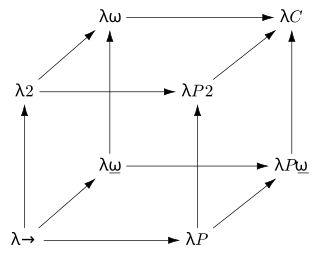

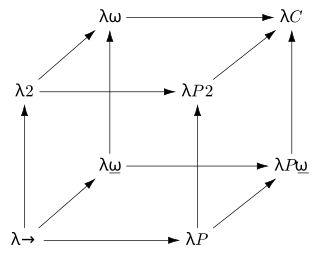

In mathematical logic and type theory, the λ-cube is a framework introduced by Henk Barendregt to investigate the different dimensions in which the calculus of constructions is a generalization of the simply typed λ-calculus. Each dimension of the cube corresponds to a new kind of dependency between terms and types. Here, "dependency" refers to the capacity of a term or type to bind a term or type. The respective dimensions of the λ-cube correspond to:

In programming languages and type theory, parametric polymorphism allows a single piece of code to be given a "generic" type, using variables in place of actual types, and then instantiated with particular types as needed. Parametrically polymorphic functions and data types are sometimes called generic functions and generic datatypes, respectively, and they form the basis of generic programming.

In computer science, a type class is a type system construct that supports ad hoc polymorphism. This is achieved by adding constraints to type variables in parametrically polymorphic types. Such a constraint typically involves a type class T and a type variable a, and means that a can only be instantiated to a type whose members support the overloaded operations associated with T.

In many programming languages, map is the name of a higher-order function that applies a given function to each element of a collection, e.g. a list or set, returning the results in a collection of the same type. It is often called apply-to-all when considered in functional form.

Many-sorted logic can reflect formally our intention not to handle the universe as a homogeneous collection of objects, but to partition it in a way that is similar to types in typeful programming. Both functional and assertive "parts of speech" in the language of the logic reflect this typeful partitioning of the universe, even on the syntax level: substitution and argument passing can be done only accordingly, respecting the "sorts".

In the area of mathematical logic and computer science known as type theory, a kind is the type of a type constructor or, less commonly, the type of a higher-order type operator. A kind system is essentially a simply typed lambda calculus "one level up", endowed with a primitive type, denoted and called "type", which is the kind of any data type which does not need any type parameters.

In type theory, bounded quantification refers to universal or existential quantifiers which are restricted ("bounded") to range only over the subtypes of a particular type. Bounded quantification is an interaction of parametric polymorphism with subtyping. Bounded quantification has traditionally been studied in the functional setting of System F<:, but is available in modern object-oriented languages supporting parametric polymorphism (generics) such as Java, C# and Scala.

Haskell is a general-purpose, statically-typed, purely functional programming language with type inference and lazy evaluation. Designed for teaching, research, and industrial applications, Haskell has pioneered a number of programming language features such as type classes, which enable type-safe operator overloading, and monadic input/output (IO). It is named after logician Haskell Curry. Haskell's main implementation is the Glasgow Haskell Compiler (GHC).

A Hindley–Milner (HM) type system is a classical type system for the lambda calculus with parametric polymorphism. It is also known as Damas–Milner or Damas–Hindley–Milner. It was first described by J. Roger Hindley and later rediscovered by Robin Milner. Luis Damas contributed a close formal analysis and proof of the method in his PhD thesis.

In programming languages with Hindley-Milner type inference and imperative features, in particular the ML programming language family, the value restriction means that declarations are only polymorphically generalized if they are syntactic values. The value restriction prevents reference cells from holding values of different types and preserves type safety.