Related Research Articles

Cognitive science is the interdisciplinary, scientific study of the mind and its processes. It examines the nature, the tasks, and the functions of cognition. Mental faculties of concern to cognitive scientists include language, perception, memory, attention, reasoning, and emotion; to understand these faculties, cognitive scientists borrow from fields such as linguistics, psychology, artificial intelligence, philosophy, neuroscience, and anthropology. The typical analysis of cognitive science spans many levels of organization, from learning and decision to logic and planning; from neural circuitry to modular brain organization. One of the fundamental concepts of cognitive science is that "thinking can best be understood in terms of representational structures in the mind and computational procedures that operate on those structures."

The following outline is provided as an overview of and topical guide to linguistics.

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). There are numerous approaches to syntax that differ in their central assumptions and goals.

Lexical functional grammar (LFG) is a constraint-based grammar framework in theoretical linguistics. It posits two separate levels of syntactic structure: a phrase structure grammar representation of word order and constituency, and a representation of grammatical functions such as subject and object, similar to dependency grammar. Joan Bresnan and Ronald Kaplan initiated the theory in the 1970s in reaction to transformational grammar, which was then current. LFG focuses mainly on syntax, including its relation to morphology and semantics; there has been little LFG work on phonology.

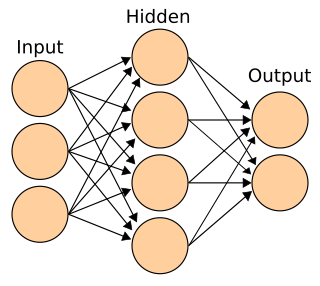

Connectionism is the name of an approach to the study of human mental processes and cognition that utilizes mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many 'waves' since its beginnings.

A symbolic linguistic representation is a representation of an utterance that uses symbols to represent linguistic information about the utterance, such as information about phonetics, phonology, morphology, syntax, or semantics. Symbolic linguistic representations are different from non-symbolic representations, such as recordings, because they use symbols to represent linguistic information rather than measurements.

The language of thought hypothesis (LOTH), sometimes known as thought ordered mental expression (TOME), is a view in linguistics, philosophy of mind, and cognitive science, put forward by American philosopher Jerry Fodor. It describes thought as possessing "language-like" or compositional structure. On this view, simple concepts combine in systematic ways to build thoughts. In its most basic form, the theory states that thought, like language, has syntax.

Ivan Andrew Sag was an American linguist and cognitive scientist. He did research in areas of syntax and semantics as well as work in computational linguistics.

Construction grammar is a family of theories within the field of cognitive linguistics which posit that constructions, or learned pairings of linguistic patterns with meanings, are the fundamental building blocks of human language. Constructions include words, morphemes, fixed expressions and idioms, and abstract grammatical rules such as the passive voice or the ditransitive. Any linguistic pattern is considered to be a construction as long as some aspect of its form or its meaning cannot be predicted from its component parts, or from other constructions that are recognized to exist. In construction grammar, every utterance is understood to be a combination of multiple different constructions, which together specify its precise meaning and form.

Charles J. Fillmore was an American linguist and Professor of Linguistics at the University of California, Berkeley. He received his Ph.D. in Linguistics from the University of Michigan in 1961. Fillmore spent ten years at Ohio State University and a year as a Fellow at the Center for Advanced Study in the Behavioral Sciences at Stanford University before joining Berkeley's Department of Linguistics in 1971. Fillmore was extremely influential in the areas of syntax and lexical semantics.

Neurophilosophy or the philosophy of neuroscience is the interdisciplinary study of neuroscience and philosophy that explores the relevance of neuroscientific studies to the arguments traditionally categorized as philosophy of mind. The philosophy of neuroscience attempts to clarify neuroscientific methods and results using the conceptual rigor and methods of philosophy of science.

In linguistics, linguistic competence is the system of unconscious knowledge that a speaker has when they know a language. It is distinguished from linguistic performance, which includes all other factors that allow one to use one's language in practice.

Joan Wanda Bresnan FBA is Sadie Dernham Patek Professor in Humanities Emerita at Stanford University. She is best known as one of the architects of the theoretical framework of lexical functional grammar.

Harmonic grammar is a linguistic model proposed by Géraldine Legendre, Yoshiro Miyata, and Paul Smolensky in 1990. It is a connectionist approach to modeling linguistic well-formedness. During the late 2000s and early 2010s, the term 'harmonic grammar' came to be used more generally for models of language that use weighted constraints, including ones that are not explicitly connectionist; see, for example, Pater (2009) and Potts et al. (2010).
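As a rough illustration of the weighted-constraint idea, the following Python sketch scores candidate outputs by a weighted sum of constraint violations and picks the one with the highest harmony. The constraint names, weights, and candidate forms are hypothetical values chosen for illustration; they are not taken from any of the cited analyses.

```python
from typing import Dict

# Hypothetical constraint weights (illustrative only).
WEIGHTS: Dict[str, float] = {"NoCoda": 2.0, "Max": 3.0, "Dep": 1.5}

# Hypothetical violation counts for three candidate outputs of one input.
CANDIDATES: Dict[str, Dict[str, int]] = {
    "pat": {"NoCoda": 1, "Max": 0, "Dep": 0},   # faithful, keeps the coda
    "pa": {"NoCoda": 0, "Max": 1, "Dep": 0},    # deletes the final consonant
    "pata": {"NoCoda": 0, "Max": 0, "Dep": 1},  # inserts a final vowel
}


def harmony(violations: Dict[str, int], weights: Dict[str, float]) -> float:
    """Harmony is the negative weighted sum of constraint violations."""
    return -sum(weights[name] * count for name, count in violations.items())


def best_candidate(
    candidates: Dict[str, Dict[str, int]], weights: Dict[str, float]
) -> str:
    """Return the candidate with the highest harmony."""
    return max(candidates, key=lambda cand: harmony(candidates[cand], weights))


if __name__ == "__main__":
    for cand, viols in CANDIDATES.items():
        print(cand, harmony(viols, WEIGHTS))
    print("winner:", best_candidate(CANDIDATES, WEIGHTS))
```

Because the weights are numeric, several violations of low-weight constraints can jointly outweigh one violation of a high-weight constraint, which is the property that separates this kind of model from strict constraint ranking.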

Relational Network Theory (RNT), also known as Neurocognitive Linguistics (NCL) and formerly as Stratificational Linguistics or Cognitive-Stratificational Linguistics, is a connectionist theoretical framework in linguistics primarily developed by Sydney Lamb which aims to integrate theoretical linguistics with neuroanatomy. It views the linguistic system of individual speakers, responsible for language comprehension and production, as consisting of networks of relationships which interconnect across different "strata" of language. These relational networks are hypothesized to correspond to neural maps of cortical columns or minicolumns in the human brain. Consequently, RNT is related to the wider family of cognitive linguistic theories. Furthermore, as a functionalist approach to linguistics, RNT shares a close relationship with Systemic Functional Linguistics (SFL).

Ronald J. Williams is professor of computer science at Northeastern University, and one of the pioneers of neural networks. He co-authored a paper on the backpropagation algorithm which triggered a boom in neural network research. He also made fundamental contributions to the fields of recurrent neural networks and reinforcement learning. Together with Wenxu Tong and Mary Jo Ondrechen he developed Partial Order Optimum Likelihood (POOL), a machine learning method used in the prediction of active amino acids in protein structures. POOL is a maximum likelihood method with a monotonicity constraint and is a general predictor of properties that depend monotonically on the input features.

In linguistics, the term formalism is used with a variety of meanings that relate to formal linguistics in different ways. In common usage, it is merely synonymous with a grammatical model or a syntactic model: a method for analyzing sentence structures. Such formalisms include different methodologies of generative grammar, which are designed specifically to produce grammatically correct strings of words, and approaches such as Functional Discourse Grammar, which builds on predicate logic.

In linguistics, optimality theory is a linguistic model proposing that the observed forms of language arise from the optimal satisfaction of conflicting constraints. OT differs from other approaches to phonological analysis, which typically use rules rather than constraints. However, phonological models of representation, such as autosegmental phonology, prosodic phonology, and linear phonology (SPE), are equally compatible with rule-based and constraint-based models. OT views grammars as systems that provide mappings from inputs to outputs; typically, the inputs are conceived of as underlying representations, and the outputs as their surface realizations. It is an approach within the larger framework of generative grammar.
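The input-to-output mapping described above can be sketched in Python under the standard assumption that constraints are strictly ranked: candidates are compared on the highest-ranked constraint first, and lower-ranked constraints only break ties. The constraint names, candidate forms, and violation counts below are hypothetical and serve only to show the evaluation step.

```python
from typing import Dict, List, Tuple

# Hypothetical violation counts for three candidate outputs of one input.
OT_CANDIDATES: Dict[str, Dict[str, int]] = {
    "pat": {"NoCoda": 1, "Max": 0, "Dep": 0},   # faithful, keeps the coda
    "pa": {"NoCoda": 0, "Max": 1, "Dep": 0},    # deletes the final consonant
    "pata": {"NoCoda": 0, "Max": 0, "Dep": 1},  # inserts a final vowel
}


def violation_profile(
    violations: Dict[str, int], ranking: List[str]
) -> Tuple[int, ...]:
    """Order violation counts from the highest-ranked constraint to the lowest."""
    return tuple(violations.get(name, 0) for name in ranking)


def optimal_output(
    candidates: Dict[str, Dict[str, int]], ranking: List[str]
) -> str:
    """Pick the candidate whose profile is lexicographically smallest.

    Tuple comparison in Python is lexicographic, which matches strict
    domination: a higher-ranked constraint always outweighs lower ones.
    """
    return min(candidates, key=lambda cand: violation_profile(candidates[cand], ranking))


if __name__ == "__main__":
    # Two hypothetical rankings, highest-ranked constraint first.
    print(optimal_output(OT_CANDIDATES, ["Max", "NoCoda", "Dep"]))
    print(optimal_output(OT_CANDIDATES, ["Dep", "Max", "NoCoda"]))
```

Reordering the same constraints changes the winner, which is how a model of this kind expresses differences between grammars.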

Jane Barbara Grimshaw is a Distinguished Professor [emerita] in the Department of Linguistics at Rutgers University-New Brunswick. She is known for her contributions to the areas of syntax, optimality theory, language acquisition, and lexical representation.

Géraldine Legendre is a French-American cognitive scientist and linguist known for her work on French grammar, on mathematical models for the development of syntax in natural languages including harmonic grammar and Optimality Theory, and on universal grammar and innate syntactic ability of humans in natural language. She is a professor of cognitive science at Johns Hopkins University and the chair of the Johns Hopkins Cognitive Science Department.

References

- Prince, Alan; Smolensky, Paul (2002). Optimality Theory: Constraint Interaction in Generative Grammar (Report). Rutgers University. doi:10.7282/T34M92MV. Updated version of July 1993 report.

- Legendre, Géraldine; Grimshaw, Jane; Vikner, Sten, eds. (2001). Optimality-theoretic syntax. MIT Press. ISBN 978-0-262-62138-0.

- Legendre, Géraldine; Putnam, Michael T.; De Swart, Henriette; Zaroukian, Erin, eds. (2016). Optimality-theoretic syntax, semantics, and pragmatics: From uni- to bidirectional optimization. Oxford University Press. ISBN 978-0-19-875711-5.

- Smolensky, Paul (November 1990). "Tensor product variable binding and the representation of symbolic structures in connectionist systems". Artificial Intelligence. 46 (1–2): 159–216. doi:10.1016/0004-3702(90)90007-M.

- Legendre, Géraldine; Miyata, Yoshiro; Smolensky, Paul (1990). Harmonic Grammar: A formal multi-level connectionist theory of linguistic well-formedness: Theoretical foundations (Report). In Proceedings of the twelfth annual conference of the Cognitive Science Society (pp. 388–395). Cambridge, MA: Lawrence Erlbaum. Report CU-CS-465-90. Computer Science Department, University of Colorado at Boulder.

- Smolensky, Paul; Legendre, Géraldine (2006). The Harmonic Mind: From Neural Computation to Optimality-Theoretic Grammar. Vol. 1: Cognitive Architecture. Cambridge, MA: MIT Press.

- Smolensky, Paul; Legendre, Géraldine (2006). The Harmonic Mind: From Neural Computation to Optimality-Theoretic Grammar. Vol. 2: Linguistic and Philosophical Implications. Cambridge, MA: MIT Press.

- Smolensky, Paul; Goldrick, Matthew; Mathis, Donald (2014). "Optimization and quantization in gradient symbol systems: A framework for integrating the continuous and the discrete in cognition". Cognitive Science. 38 (6): 1102–1138. doi:10.1111/cogs.12047. PMID 23802807. Via Rutgers Optimality Archive.

- Smolensky, Paul; Rosen, Eric; Goldrick, Matthew (2020). "Learning a gradient grammar of French liaison". Proceedings of the 2019 Annual Meeting on Phonology. 8. doi:10.3765/amp.v8i0.4680.

- Cho, Pyeong Whan; Goldrick, Matthew; Smolensky, Paul (2017). "Incremental parsing in a continuous dynamical system: Sentence processing in Gradient Symbolic Computation". Linguistics Vanguard. 3 (1). doi:10.1515/lingvan-2016-0105. S2CID 67362174.