Speculative execution is an optimization technique where a computer system performs some task that may not be needed. Work is done before it is known whether it is actually needed, so as to prevent a delay that would have to be incurred by doing the work after it is known that it is needed. If it turns out the work was not needed after all, most changes made by the work are reverted and the results are ignored.

Computer simulation is the process of mathematical modelling, performed on a computer, which is designed to predict the behaviour of, or the outcome of, a real-world or physical system. The reliability of some mathematical models can be determined by comparing their results to the real-world outcomes they aim to predict. Computer simulations have become a useful tool for the mathematical modeling of many natural systems in physics, astrophysics, climatology, chemistry, biology and manufacturing, as well as human systems in economics, psychology, social science, health care and engineering. Simulation of a system is represented as the running of the system's model. It can be used to explore and gain new insights into new technology and to estimate the performance of systems too complex for analytical solutions.

In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch will go before this is known definitively. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors play a critical role in achieving high performance in many modern pipelined microprocessor architectures such as x86.

The worst-case execution time (WCET) of a computational task is the maximum length of time the task could take to execute on a specific hardware platform.

In computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as µarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology.

Nios II is a 32-bit embedded processor architecture designed specifically for the Altera family of field-programmable gate array (FPGA) integrated circuits. Nios II incorporates many enhancements over the original Nios architecture, making it more suitable for a wider range of embedded computing applications, from digital signal processing (DSP) to system-control.

In software engineering, profiling is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the frequency and duration of function calls. Most commonly, profiling information serves to aid program optimization, and more specifically, performance engineering.

An instruction set simulator (ISS) is a simulation model, usually coded in a high-level programming language, which mimics the behavior of a mainframe or microprocessor by "reading" instructions and maintaining internal variables which represent the processor's registers.

In control theory, advanced process control (APC) refers to a broad range of techniques and technologies implemented within industrial process control systems. Advanced process controls are usually deployed optionally and in addition to basic process controls. Basic process controls are designed and built with the process itself, to facilitate basic operation, control and automation requirements. Advanced process controls are typically added subsequently, often over the course of many years, to address particular performance or economic improvement opportunities in the process.

Trace scheduling is an optimization technique developed by Josh Fisher used in compilers for computer programs.

A computer architecture simulator is a program that simulates the execution of computer architecture.

In computing, computer performance is the amount of useful work accomplished by a computer system. Outside of specific contexts, computer performance is estimated in terms of accuracy, efficiency and speed of executing computer program instructions. When it comes to high computer performance, one or more of the following factors might be involved:

Simulation software is based on the process of modeling a real phenomenon with a set of mathematical formulas. It is, essentially, a program that allows the user to observe an operation through simulation without actually performing that operation. Simulation software is used widely to design equipment so that the final product will be as close to design specs as possible without expensive in process modification. Simulation software with real-time response is often used in gaming, but it also has important industrial applications. When the penalty for improper operation is costly, such as airplane pilots, nuclear power plant operators, or chemical plant operators, a mock up of the actual control panel is connected to a real-time simulation of the physical response, giving valuable training experience without fear of a disastrous outcome.

In the context of computer programming, instrumentation refers to the measure of a product's performance, in order to diagnose errors and to write trace information. Instrumentation can be of two types: source instrumentation and binary instrumentation.

In computing, an emulator is hardware or software that enables one computer system to behave like another computer system. An emulator typically enables the host system to run software or use peripheral devices designed for the guest system. Emulation refers to the ability of a computer program in an electronic device to emulate another program or device.

MikroSim is an educational software computer program for hardware-non-specific explanation of the general functioning and behaviour of a virtual processor, running on the Microsoft Windows operating system. Devices like miniaturized calculators, microcontroller, microprocessors, and computer can be explained on custom-developed instruction code on a register transfer level controlled by sequences of micro instructions (microcode). Based on this it is possible to develop an instruction set to control a virtual application board at higher level of abstraction.

In computer science, trace-based simulation refers to system simulation performed by looking at traces of program execution or system component access with the purpose of performance prediction.

Message passing is an inherent element of all computer clusters. All computer clusters, ranging from homemade Beowulfs to some of the fastest supercomputers in the world, rely on message passing to coordinate the activities of the many nodes they encompass. Message passing in computer clusters built with commodity servers and switches is used by virtually every internet service.

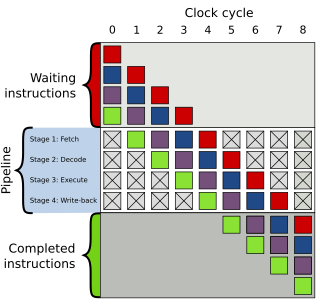

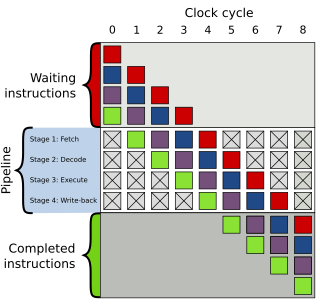

Microarchitecture simulation is an important technique in computer architecture research and computer science education. It is a tool for modeling the design and behavior of a microprocessor and its components, such as the ALU, cache memory, control unit, and data path, among others. The simulation allows researchers to explore the design space as well as to evaluate the performance and efficiency of novel microarchitecture features. For example, several microarchitecture components, such as branch predictors, re-order buffer, and trace cache, went through numerous simulation cycles before they become common components in contemporary microprocessors of today. In addition, the simulation also enables educators to teach computer organization and architecture courses with hand-on experiences.

In computer architecture, a trace cache or execution trace cache is a specialized instruction cache which stores the dynamic stream of instructions known as trace. It helps in increasing the instruction fetch bandwidth and decreasing power consumption by storing traces of instructions that have already been fetched and decoded. A trace processor is an architecture designed around the trace cache and processes the instructions at trace level granularity. The formal mathematical theory of traces is described by trace monoids.