A computer mouse is a hand-held pointing device that detects two-dimensional motion relative to a surface. This motion is typically translated into the motion of the pointer on a display, which allows smooth control of a computer's graphical user interface.
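The translation from relative mouse motion to pointer position can be illustrated with a minimal sketch. The `Pointer` class, the `move_pointer` function, and the `sensitivity` parameter are hypothetical names chosen for illustration; real operating systems typically also apply acceleration curves, which are omitted here.

```python
from dataclasses import dataclass


@dataclass
class Pointer:
    """Pointer position in display pixels (hypothetical helper type)."""
    x: int
    y: int


def move_pointer(pointer, dx, dy, width, height, sensitivity=1.0):
    """Apply a relative mouse delta and clamp the result to the display.

    dx and dy are the motion counts reported by the mouse; sensitivity is
    an assumed linear scale factor between counts and pixels.
    """
    new_x = pointer.x + round(dx * sensitivity)
    new_y = pointer.y + round(dy * sensitivity)
    return Pointer(
        x=min(max(new_x, 0), width - 1),
        y=min(max(new_y, 0), height - 1),
    )


if __name__ == "__main__":
    p = Pointer(100, 100)
    p = move_pointer(p, dx=10, dy=-5, width=1920, height=1080)
    print(p)
```

The key point is that the mouse reports only relative deltas; the absolute pointer position is state kept by the system and clamped to the display bounds.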

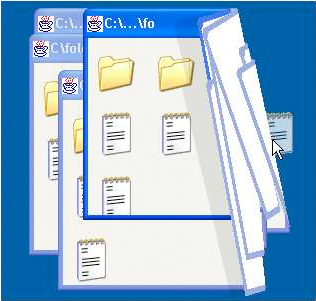

A graphical user interface, or GUI, is a form of user interface that allows users to interact with electronic devices through graphical icons and visual indicators such as secondary notation. In many applications, GUIs are used instead of text-based UIs, which are based on typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard.

A pointing device is a human interface device that allows a user to input spatial data to a computer. Graphical user interfaces (GUIs) and CAD systems allow the user to control and provide data to the computer using physical gestures, by moving a hand-held mouse or similar device across the surface of the physical desktop and activating switches on the mouse. Movements of the pointing device are echoed on the screen by movements of the pointer and other visual changes. Common gestures are point-and-click and drag-and-drop.

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines occur. The goal of this interaction is to allow effective operation and control of the machine from the human end, while the machine simultaneously feeds back information that aids the operators' decision-making process. Examples of this broad concept of user interfaces include the interactive aspects of computer operating systems, hand tools, heavy machinery operator controls and process controls. The design considerations applicable when creating user interfaces are related to, or involve such disciplines as, ergonomics and psychology.

A scrollbar is an interaction technique or widget in which continuous text, pictures, or any other content can be scrolled in a predetermined direction on a computer display, window, or viewport so that all of the content can be viewed, even if only a fraction of it can be seen on a device's screen at one time. It offers a solution to the problem of navigating to a known or unknown location within a two-dimensional information space. It was also known as a handle in the very first GUIs. Scrollbars are present in a wide range of electronic devices including computers, graphing calculators, mobile phones, and portable media players. The user interacts with the scrollbar elements using some method of direct action, the scrollbar translates that action into scrolling commands, and the user receives feedback through a visual update of both the scrollbar elements and the scrolled content.
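The two directions of this translation (scroll offset to scrollbar feedback, and user action on the scrollbar to scroll offset) can be sketched as follows. This is a simplified one-dimensional model with a proportional thumb; the function and parameter names are assumptions made for illustration, not taken from any particular toolkit.

```python
def thumb_geometry(content_len, viewport_len, track_len, scroll_offset):
    """Return (thumb_length, thumb_position) along the track, in pixels.

    The thumb length is proportional to the visible fraction of the content,
    and its position reflects how far the content has been scrolled.
    """
    if content_len <= viewport_len:
        # Everything fits: the thumb fills the whole track.
        return track_len, 0.0
    thumb_len = track_len * viewport_len / content_len
    max_scroll = content_len - viewport_len
    thumb_pos = (track_len - thumb_len) * scroll_offset / max_scroll
    return thumb_len, thumb_pos


def offset_from_thumb(content_len, viewport_len, track_len, thumb_pos):
    """Inverse mapping: a dragged thumb position becomes a scroll offset."""
    if content_len <= viewport_len:
        return 0.0
    thumb_len = track_len * viewport_len / content_len
    max_scroll = content_len - viewport_len
    travel = track_len - thumb_len
    clamped = min(max(thumb_pos, 0.0), travel)  # keep the thumb on the track
    return max_scroll * clamped / travel


if __name__ == "__main__":
    print(thumb_geometry(1000, 200, 200, 400))
    print(offset_from_thumb(1000, 200, 200, 80.0))
```

Because the two functions are inverses of each other within the valid range, dragging the thumb and redrawing it from the resulting offset leaves it where the user put it.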

A touchpad or trackpad is a type of pointing device. Its largest component is a tactile sensor: an electronic device with a flat surface that detects the motion and position of a user's fingers and translates them into 2D motion to control a pointer in a graphical user interface on a computer screen. Touchpads are common on laptop computers, in contrast to desktop computers, where mice are more prevalent. Trackpads are sometimes used with desktops where desk space is scarce. Because trackpads can be made small, they can be found on personal digital assistants (PDAs) and some portable media players. Wireless touchpads are also available as detached accessories.

In computer graphical user interfaces, drag and drop is a pointing device gesture in which the user selects a virtual object by "grabbing" it and dragging it to a different location or onto another virtual object. In general, it can be used to invoke many kinds of actions, or create various types of associations between two abstract objects.
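The gesture can be modeled as a small state machine: a press picks up an object, and a release over a target creates an association between the two. The sketch below is a deliberately minimal model; `Rect`, `DragSession`, and their methods are hypothetical names, and real toolkits add visual feedback, drag thresholds, and data-transfer formats.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """A named rectangular object on screen (hypothetical helper type)."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, px, py):
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


class DragSession:
    """Tracks which item is being dragged between press and release."""

    def __init__(self, items, targets):
        self.items = items
        self.targets = targets
        self.dragged = None

    def press(self, px, py):
        # "Grab" the first item under the pointer, if any.
        self.dragged = next((i for i in self.items if i.contains(px, py)), None)

    def release(self, px, py):
        # Drop the item; return (item, target) names if it landed on a target.
        item, self.dragged = self.dragged, None
        if item is None:
            return None
        target = next((t for t in self.targets if t.contains(px, py)), None)
        return (item.name, target.name) if target else None


if __name__ == "__main__":
    session = DragSession(
        items=[Rect("file", 0, 0, 10, 10)],
        targets=[Rect("trash", 100, 100, 50, 50)],
    )
    session.press(5, 5)
    print(session.release(120, 120))
```

What the application does with the returned pair (move, copy, link, open) is the "action" or "association" that the gesture invokes.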

A touchscreen is a type of display that can detect touch input from a user. It consists of both an input device and an output device. The touch panel is typically layered on top of the electronic visual display of a device. Touchscreens are commonly found in smartphones, tablets, laptops, and other electronic devices.

A scroll wheel is a wheel used for scrolling. The term usually refers to such wheels found on computer mice. It is often made of hard plastic with a rubbery surface, centred around an internal rotary encoder. It is usually located between the left and right mouse buttons and is positioned perpendicular to the mouse surface. Sometimes the wheel can be pressed left and right, which effectively provides two additional macro buttons.

In human–computer interaction, a cursor is an indicator used to show the current position on a computer monitor or other display device that will respond to input.

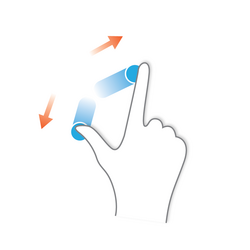

In computing, multi-touch is technology that enables a surface to recognize the presence of more than one point of contact with the surface at the same time. Multi-touch originated at CERN, MIT, University of Toronto, Carnegie Mellon University and Bell Labs in the 1970s. CERN started using multi-touch screens as early as 1976 for the controls of the Super Proton Synchrotron. Capacitive multi-touch displays were popularized by Apple's iPhone in 2007. Multi-touch may be used to implement additional functionality, such as pinch-to-zoom, or to activate certain subroutines attached to predefined gestures using gesture recognition.
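As an illustration of how two simultaneous contact points can drive a gesture, the following sketch computes a pinch-to-zoom scale factor as the ratio of the current distance between two touches to their distance when the gesture began. The function name and the tuple-based touch representation are assumptions for illustration; production gesture recognizers also handle touch tracking, smoothing, and rotation.

```python
import math


def pinch_scale(start_touches, current_touches):
    """Return the zoom factor implied by two touch points.

    Each argument is a pair of (x, y) tuples: the two contact points at the
    start of the gesture and at the current moment. A result above 1 means
    the fingers moved apart (zoom in); below 1 means they moved together.
    """
    (ax0, ay0), (bx0, by0) = start_touches
    (ax1, ay1), (bx1, by1) = current_touches
    start_dist = math.hypot(bx0 - ax0, by0 - ay0)
    current_dist = math.hypot(bx1 - ax1, by1 - ay1)
    if start_dist == 0:
        raise ValueError("touch points must start at distinct positions")
    return current_dist / start_dist


if __name__ == "__main__":
    # Fingers start 5 units apart and spread to 10 units apart.
    print(pinch_scale(((0, 0), (3, 4)), ((0, 0), (6, 8))))
```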

The Human–Computer Interaction Lab (HCIL) at the University of Maryland, College Park is an academic research center specializing in the field of human-computer interaction (HCI). Founded in 1983 by Ben Shneiderman, it is one of the oldest HCI labs of its kind. The HCIL conducts research on the design, implementation, and evaluation of computer interface technologies. Additional research focuses on the development of user interfaces and design methods. Primary activities of the HCIL include collaborative research, publication and the sponsorship of open houses, workshops and annual symposiums.

Pen computing refers to any computer user interface that uses a pen or stylus and tablet, rather than input devices such as a keyboard or a mouse.

A text entry interface or text entry device is an interface that is used to enter text information in an electronic device. A commonly used device is a mechanical computer keyboard. Most laptop computers have an integrated mechanical keyboard, and desktop computers are usually operated primarily using a keyboard and mouse. With the spread of devices such as smartphones and tablets, interfaces such as virtual keyboards and voice recognition are becoming more popular as text entry systems.

An interaction technique, user interface technique or input technique is a combination of hardware and software elements that provides a way for computer users to accomplish a single task. For example, one can go back to the previously visited page on a Web browser by either clicking a button, pressing a key, performing a mouse gesture or uttering a speech command. It is a widely used term in human-computer interaction. In particular, the term "new interaction technique" is frequently used to introduce a novel user interface design idea.

Hands-on computing is a branch of human-computer interaction research which focuses on computer interfaces that respond to human touch or expression, allowing the machine and the user to interact physically. Hands-on computing can make complicated computer tasks more natural to users by attempting to respond to motions and interactions that are natural to human behavior. Thus hands-on computing is a component of user-centered design, focusing on how users physically respond to virtual environments.

In computing, a natural user interface (NUI) or natural interface is a user interface that is effectively invisible, and remains invisible as the user continuously learns increasingly complex interactions. The word "natural" is used because most computer interfaces use artificial control devices whose operation has to be learned. Examples include voice assistants such as Alexa and Siri, touch and multi-touch interactions on today's mobile phones and tablets, and touch interfaces invisibly integrated into textiles and furniture.

In computing, an input device is a piece of equipment used to provide data and control signals to an information processing system, such as a computer or information appliance. Examples of input devices include keyboards, computer mice, scanners, cameras, joysticks, and microphones.

Microsoft Tablet PC is a term coined by Microsoft for pen-enabled personal computers that conform to a set of hardware specifications devised by Microsoft and announced in 2001, and that run a licensed copy of the Windows XP Tablet PC Edition operating system or a derivative thereof.