Related Research Articles

Google Search is a search engine provided by Google. Handling more than 3.5 billion searches per day, it has a 92% share of the global search engine market. It is also the most-visited website in the world.

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds.

A search engine is an information retrieval system designed to help find information stored on a computer system. The search results are usually presented in a list and are commonly called hits. Search engines help to minimize the time required to find information and the amount of information which must be consulted, akin to other techniques for managing information overload.

Search engine optimization (SEO) is the process of improving the quality and quantity of website traffic to a website or a web page from search engines. SEO targets unpaid traffic rather than direct traffic or paid traffic. Unpaid traffic may originate from different kinds of searches, including image search, video search, academic search, news search, and industry-specific vertical search engines.

The deep web, invisible web, or hidden web are parts of the World Wide Web whose contents are not indexed by standard web search-engines. This is in contrast to the "surface web", which is accessible to anyone using the Internet. Computer-scientist Michael K. Bergman is credited with coining the term in 2001 as a search-indexing term.

A metasearch engine is an online information retrieval tool that uses the data of a web search engine to produce its own results. Metasearch engines take input from a user and immediately query search engines for results. Sufficient data is gathered, ranked, and presented to the users.
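The gather-and-rank step can be sketched as follows. This is only an illustration: it assumes each underlying engine has already returned an ordered list of URLs, and it merges them with reciprocal rank fusion, a common rank-merging technique chosen here for simplicity and not something the description above prescribes. The function name and the constant `k=60` are assumptions.

```python
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked result lists into a single ranking.

    Each result earns 1 / (k + rank) from every list it appears in,
    so results ranked highly by several engines rise to the top.
    """
    scores: Dict[str, float] = {}
    for ranking in rankings:
        for rank, url in enumerate(ranking, start=1):
            scores[url] = scores.get(url, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties are broken alphabetically for stability.
    return sorted(scores, key=lambda url: (-scores[url], url))


# Hypothetical result lists from three underlying engines.
engine_results = [
    ["a", "b", "c"],
    ["b", "c", "a"],
    ["b", "a", "c"],
]
merged = reciprocal_rank_fusion(engine_results)
```

Because only rank positions are used, the merge does not depend on each engine's internal scoring scale.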

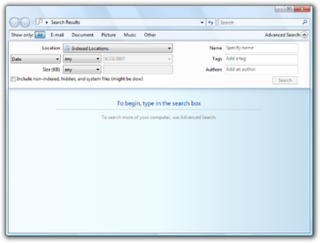

Desktop search tools search within a user's own computer files as opposed to searching the Internet. These tools are designed to find information on the user's PC, including web browser history, e-mail archives, text documents, sound files, images, and video. A variety of desktop search programs are available, most of them standalone applications. Desktop search products are software alternatives to the search software included in the operating system, helping users sift through desktop files, emails, attachments, and more.

Federated search retrieves information from a variety of sources via a search application built on top of one or more search engines. A user makes a single query request which is distributed to the search engines, databases or other query engines participating in the federation. The federated search then aggregates the results that are received from the search engines for presentation to the user. Federated search can be used to integrate disparate information resources within a single large organization ("enterprise") or for the entire web.
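The distribute-then-aggregate flow can be sketched as below. It is a minimal illustration under stated assumptions: the participating engines are stand-in Python callables over in-memory lists (hypothetical, not a real search API), and aggregation simply concatenates the hits while tagging each with its source.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

# A participating source: takes a query string and returns matching titles.
Source = Callable[[str], List[str]]


def make_source(documents: List[str]) -> Source:
    """Build a toy source that returns documents containing the query text."""
    def search(query: str) -> List[str]:
        return [doc for doc in documents if query.lower() in doc.lower()]
    return search


def federated_search(query: str, sources: Dict[str, Source]) -> List[Tuple[str, str]]:
    """Send one query to every source in parallel and aggregate the hits."""
    with ThreadPoolExecutor(max_workers=len(sources) or 1) as pool:
        futures = {name: pool.submit(fn, query) for name, fn in sources.items()}
        # Collect results in the order the sources were registered.
        return [(name, hit) for name, fut in futures.items() for hit in fut.result()]


sources = {
    "intranet": make_source(["Search policy handbook", "Holiday calendar"]),
    "database": make_source(["Customer search logs"]),
}
hits = federated_search("search", sources)
```

A production federation would also need timeouts, error handling for unavailable sources, and some way to rank hits across sources, all of which are omitted here.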

A scraper site is a website that copies content from other websites using web scraping. The content is then mirrored with the goal of creating revenue, usually through advertising and sometimes by selling user data. Scraper sites come in various forms. Some provide little, if any material or information, and are intended to obtain user information such as e-mail addresses, to be targeted for spam e-mail. Price aggregation and shopping sites access multiple listings of a product and allow a user to rapidly compare the prices.

A search engine is a software system designed to carry out web searches. It searches the World Wide Web in a systematic way for particular information specified in a textual web search query. The search results are generally presented in a line of results, often referred to as search engine results pages (SERPs). The information may be a mix of links to web pages, images, videos, infographics, articles, research papers, and other types of files. Some search engines also mine data available in databases or open directories. Unlike web directories, which are maintained only by human editors, search engines also maintain real-time information by running an algorithm on a web crawler. Any internet content that cannot be indexed and searched by a web search engine falls under the category of deep web.

Search Engine Results Pages (SERP) are the pages displayed by search engines in response to a query by a user. The main component of the SERP is the listing of results that are returned by the search engine in response to a keyword query.

Google Images is a search engine owned by Google that allows users to search the World Wide Web for images. It was introduced on July 12, 2001, in response to demand for pictures of the green Versace dress that Jennifer Lopez wore in February 2000. In 2011, reverse image search functionality was added.

A search engine is an information retrieval software program that discovers, crawls, transforms and stores information for retrieval and presentation in response to user queries.

Search engine indexing is the collecting, parsing, and storing of data to facilitate fast and accurate information retrieval. Index design incorporates interdisciplinary concepts from linguistics, cognitive psychology, mathematics, informatics, and computer science. An alternate name for the process, in the context of search engines designed to find web pages on the Internet, is web indexing.
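The collect, parse, and store steps can be illustrated with an inverted index, one common index structure. This is a sketch under stated assumptions: the documents are a small in-memory dictionary, tokenization is a simple lowercase alphanumeric split, and the function names are made up for the example.

```python
import re
from collections import defaultdict
from typing import Dict, Set


def tokenize(text: str) -> list:
    """Parse text into lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: Dict[int, str]) -> Dict[str, Set[int]]:
    """Store, for every token, the set of document ids that contain it."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def lookup(index: Dict[str, Set[int]], query: str) -> Set[int]:
    """Return the ids of documents that contain every token of the query."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result


docs = {
    1: "Web indexing stores data",
    2: "Fast retrieval of web pages",
}
index = build_index(docs)
matches = lookup(index, "web retrieval")
```

Looking up a term is then a dictionary access instead of a scan over every document, which is what makes retrieval fast.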

Enterprise search is the practice of making content from multiple enterprise-type sources, such as databases and intranets, searchable to a defined audience.

Windows Search is a content index desktop search platform by Microsoft, introduced in Windows Vista as a replacement for both the previous Indexing Service of Windows 2000 and the optional MSN Desktop Search for Windows XP and Windows Server 2003. It is designed to facilitate local and remote queries for files and non-file items in compatible applications, including Windows Explorer. It was developed after the postponement of WinFS and brought to Windows features originally touted as benefits of that platform.

DuckDuckGo (DDG) is an internet search engine that emphasizes protecting searchers' privacy and avoiding the filter bubble of personalized search results. DuckDuckGo does not show search results from content farms. It uses various APIs of other websites to show quick results to queries and for traditional links it uses the help of its partners and its own crawler.

Elasticsearch is a search engine based on the Lucene library. It provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents. Elasticsearch is developed in Java and is dual-licensed under the source-available Server Side Public License and the Elastic license, while other parts fall under the proprietary (source-available) Elastic License. Official clients are available in Java, .NET (C#), PHP, Python, Apache Groovy, Ruby and many other languages. According to the DB-Engines ranking, Elasticsearch is the most popular enterprise search engine.

Facebook Graph Search was a semantic search engine that was introduced by Facebook in March 2013. It was designed to give answers to users' natural-language queries rather than a list of links. The name refers to the social graph nature of Facebook, which maps the relationships among users. The Graph Search feature combined the big data acquired from its over one billion users and external data into a search engine providing user-specific search results. In a presentation headed by Facebook CEO Mark Zuckerberg, it was announced that the Graph Search algorithm finds information from within a user's network of friends. Additional results were provided by Microsoft's Bing search engine. In July of that year it was made available to all users of the U.S. English version of Facebook. After being made less publicly visible starting in December 2014, the original Graph Search was almost entirely deprecated in June 2019.

Contextual search is a form of optimizing web-based search results based on context provided by the user and the computer being used to enter the query. Contextual search services differ from current search engines based on traditional information retrieval that return lists of documents based on their relevance to the query. Rather, contextual search attempts to increase the precision of results based on how valuable they are to individual users.
