A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment. Individuals create their own "subjective reality" from their perception of the input. An individual's construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, or what is broadly called irrationality.

In economics and business decision-making, a sunk cost is a cost that has already been incurred and cannot be recovered. Sunk costs are contrasted with prospective costs, which are future costs that may be avoided if action is taken. In other words, a sunk cost is a sum paid in the past that is no longer relevant to decisions about the future. Although economists argue that sunk costs are irrelevant to rational decisions about the future, people in everyday life often let previous expenditures, such as money already spent repairing a car or house, influence their later decisions about those properties.
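
The distinction between sunk and prospective costs can be sketched in a few lines of Python. The function name `should_continue` and the car figures are hypothetical, chosen only for illustration; the point is that the amount already spent never enters the comparison.

```python
def should_continue(future_benefit: float, future_cost: float, sunk_cost: float = 0.0) -> bool:
    """Decide on prospective terms only.

    sunk_cost is accepted so callers can pass it, but it is deliberately
    ignored: it is the same whether or not the project continues.
    """
    return future_benefit > future_cost


# Hypothetical car: 3000 already spent on repairs, another 2000 needed,
# and the repaired car would be worth 1500.
decision = should_continue(future_benefit=1500, future_cost=2000, sunk_cost=3000)
print(decision)  # False
```

Changing `sunk_cost` to any other value leaves the result unchanged, which is the sense in which economists call the past expenditure irrelevant.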

Amos Nathan Tversky was an Israeli cognitive and mathematical psychologist and a key figure in the discovery of systematic human cognitive bias and handling of risk.

Behavioral economics studies the effects of psychological, cognitive, emotional, cultural and social factors on the decisions of individuals or institutions, such as how those decisions vary from those implied by classical economic theory.

In economics and finance, risk aversion is the tendency of people to prefer outcomes with low uncertainty to those outcomes with high uncertainty, even if the average outcome of the latter is equal to or higher in monetary value than the more certain outcome. Risk aversion explains the inclination to agree to a situation with a more predictable, but possibly lower payoff, rather than another situation with a highly unpredictable, but possibly higher payoff. For example, a risk-averse investor might choose to put their money into a bank account with a low but guaranteed interest rate, rather than into a stock that may have high expected returns, but also involves a chance of losing value.
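
One common way to model this preference is a concave utility function. The sketch below assumes a square-root utility and a hypothetical 50/50 gamble; neither comes from the text above, and other concave functions would illustrate the same point.

```python
import math

def expected_value(lottery):
    """Probability-weighted average payoff of a list of (probability, payoff) pairs."""
    return sum(p * x for p, x in lottery)

def expected_utility(lottery, utility=math.sqrt):
    """Probability-weighted average utility; sqrt is an assumed concave utility."""
    return sum(p * utility(x) for p, x in lottery)

def certainty_equivalent(lottery):
    """Sure amount with the same utility as the lottery (inverse of sqrt is squaring)."""
    return expected_utility(lottery) ** 2

gamble = [(0.5, 0.0), (0.5, 100.0)]   # hypothetical 50/50 gamble
sure_amount = 40.0                     # hypothetical guaranteed payoff

print(expected_value(gamble))          # 50.0
print(certainty_equivalent(gamble))    # 25.0
print(math.sqrt(sure_amount) > expected_utility(gamble))  # True
```

Under these assumptions the agent values the gamble at only 25, so a guaranteed 40 is preferred even though the gamble's average payoff is 50.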

Prospect theory is a theory of behavioral economics and behavioral finance that was developed by Daniel Kahneman and Amos Tversky in 1979. The theory was cited in the decision to award Kahneman the 2002 Nobel Memorial Prize in Economics.

Loss aversion is the tendency to prefer avoiding losses to acquiring equivalent gains. The principle is prominent in the domain of economics. What distinguishes loss aversion from risk aversion is that the utility of a monetary payoff depends on what was previously experienced or was expected to happen. Some studies have suggested that losses are twice as powerful, psychologically, as gains. Loss aversion was first identified by Amos Tversky and Daniel Kahneman.
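
A minimal sketch of the "losses are twice as powerful" idea, assuming a piecewise-linear value function measured from a reference point of zero. The coefficient of 2.0 and the coin-flip stakes are illustrative assumptions, not estimates taken from a particular study.

```python
LOSS_AVERSION = 2.0  # assumed: a loss weighs twice as much as an equal gain

def subjective_value(change, loss_aversion=LOSS_AVERSION):
    """Value of a gain or loss relative to the reference point (zero)."""
    return change if change >= 0 else loss_aversion * change

def gamble_value(gamble):
    """Probability-weighted subjective value of (probability, change) pairs."""
    return sum(p * subjective_value(x) for p, x in gamble)

coin_flip = [(0.5, 100.0), (0.5, -100.0)]  # hypothetical fair bet
print(gamble_value(coin_flip))  # -50.0
```

The bet has an expected monetary value of zero, yet its subjective value is negative, so a loss-averse person in this sketch would turn it down.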

The conjunction fallacy is an inference from an array of particulars, in violation of the laws of probability, that a conjoint set of two or more conclusions is likelier than any single member of that same set. It is a type of formal fallacy.
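
The probability rule being violated is that a conjunction can never be more probable than either of its parts. The sketch below checks this on a hypothetical population of 100 cases with two yes/no attributes, A and B; the counts are made up for illustration.

```python
from fractions import Fraction

# Hypothetical population: each case is a pair (has_A, has_B).
population = (
    [(True, True)] * 2
    + [(True, False)] * 3
    + [(False, True)] * 25
    + [(False, False)] * 70
)

def probability(event):
    """Share of the population for which the event holds."""
    hits = sum(1 for case in population if event(case))
    return Fraction(hits, len(population))

p_a = probability(lambda case: case[0])
p_b = probability(lambda case: case[1])
p_a_and_b = probability(lambda case: case[0] and case[1])

print(p_a, p_b, p_a_and_b)  # 1/20 27/100 1/50
```

Every case that satisfies both A and B also satisfies A alone, so the conjunction's count can only be equal or smaller. Judging "A and B" as likelier than "A" contradicts this no matter how representative the combined description feels.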

Mental accounting attempts to describe the process whereby people code, categorize and evaluate economic outcomes. The concept was first named by Richard Thaler. Mental accounting deals with the budgeting and categorization of expenditures. People budget money into mental accounts for expenses or expense categories. Mental accounts are believed to act as a self-control strategy. People are presumed to make mental accounts as a way to manage and keep track of their spending and resources. People also are assumed to make mental accounts to facilitate savings for larger purposes. Like many other cognitive processes, it can prompt biases and systematic departures from rational, value-maximizing behavior, and its implications are quite robust. Understanding the flaws and inefficiencies of mental accounting is essential to making good decisions and reducing human error.

The Allais paradox is a choice problem designed by Maurice Allais (1953) to show an inconsistency of actual observed choices with the predictions of expected utility theory.
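
The inconsistency can be checked numerically. The sketch below uses the payoffs from the commonly cited version of the problem (in millions), which are not given in the text above and should be read as an assumption. For any utility function, expected utility theory gives the same preference gap in both choice pairs, so preferring 1A in the first pair and 2B in the second cannot be reconciled with it.

```python
import math

# Each gamble is a list of (probability, payoff in millions).
GAMBLE_1A = [(1.00, 1)]
GAMBLE_1B = [(0.89, 1), (0.01, 0), (0.10, 5)]
GAMBLE_2A = [(0.89, 0), (0.11, 1)]
GAMBLE_2B = [(0.90, 0), (0.10, 5)]

def expected_utility(gamble, utility):
    return sum(p * utility(x) for p, x in gamble)

def preference_gaps(utility):
    """Return (EU(1A) - EU(1B), EU(2A) - EU(2B)) for a given utility function."""
    gap_1 = expected_utility(GAMBLE_1A, utility) - expected_utility(GAMBLE_1B, utility)
    gap_2 = expected_utility(GAMBLE_2A, utility) - expected_utility(GAMBLE_2B, utility)
    return gap_1, gap_2

# Three arbitrary utility functions, chosen only for illustration.
for name, utility in [("linear", lambda x: x), ("sqrt", math.sqrt), ("log1p", math.log1p)]:
    gap_1, gap_2 = preference_gaps(utility)
    print(name, math.isclose(gap_1, gap_2, abs_tol=1e-9))
```

Both gaps reduce to 0.11·u(1) − 0.01·u(0) − 0.10·u(5), which is why they always agree in sign under expected utility theory.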

The disposition effect is an anomaly discovered in behavioral finance. It relates to the tendency of investors to sell assets that have increased in value, while keeping assets that have dropped in value.

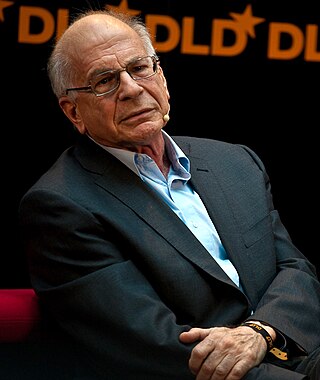

Cumulative prospect theory (CPT) is a descriptive model of decisions under risk and uncertainty, introduced by Amos Tversky and Daniel Kahneman in 1992. It is a further development and variant of prospect theory. The difference from the original version of prospect theory is that weighting is applied to the cumulative probability distribution function, as in rank-dependent expected utility theory, rather than to the probabilities of individual outcomes. In 2002, Daniel Kahneman received the Bank of Sweden Prize in Economic Sciences in Memory of Alfred Nobel for his contributions to behavioral economics, in particular the development of cumulative prospect theory.
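
A rough sketch of how cumulative weighting works in practice. The functional forms and default parameters (0.88, 2.25, 0.61, 0.69) follow the values usually attributed to the 1992 paper, but they are stated here as assumptions, and the function names are hypothetical.

```python
def weight(p, gamma):
    """Inverse-S-shaped probability weighting function (assumed form)."""
    if p <= 0:
        return 0.0
    if p >= 1:
        return 1.0
    return p**gamma / (p**gamma + (1 - p) ** gamma) ** (1 / gamma)

def value(x, alpha=0.88, loss_aversion=2.25):
    """Value of a gain or loss relative to a reference point of zero."""
    return x**alpha if x >= 0 else -loss_aversion * (-x) ** alpha

def cpt_value(prospect, gamma_gain=0.61, gamma_loss=0.69):
    """CPT value of a prospect given as (probability, outcome) pairs."""
    # Gains are ranked best first, losses worst first.
    gains = sorted((t for t in prospect if t[1] >= 0), key=lambda t: -t[1])
    losses = sorted((t for t in prospect if t[1] < 0), key=lambda t: t[1])
    total = 0.0
    for outcomes, gamma in ((gains, gamma_gain), (losses, gamma_loss)):
        cumulative = 0.0
        for p, x in outcomes:
            # Decision weight: difference of weighted CUMULATIVE probabilities,
            # not a transformation of the single probability p.
            decision_weight = weight(cumulative + p, gamma) - weight(cumulative, gamma)
            total += decision_weight * value(x)
            cumulative += p
    return total

print(cpt_value([(0.5, 100.0), (0.5, -100.0)]) < 0)  # True
```

Because each decision weight is a difference between two points on the weighted cumulative distribution, an outcome's weight depends on its rank among the other outcomes, which is what separates this version from the original theory.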

Reference class forecasting or comparison class forecasting is a method of predicting the future by looking at similar past situations and their outcomes. The theories behind reference class forecasting were developed by Daniel Kahneman and Amos Tversky. The theoretical work helped Kahneman win the Nobel Prize in Economics.

The framing effect is a cognitive bias where people decide between options based on whether the options are presented with positive or negative connotations. Individuals have a tendency to make risk-avoidant choices when options are positively framed, while selecting more loss-avoidant options when presented with a negative frame. In studies of the bias, options are presented in terms of the probability of either losses or gains. While differently expressed, the options described are in effect identical. Gain and loss are defined in the scenario as descriptions of outcomes, for example, lives lost or saved, patients treated or not treated, monetary gains or losses.

Heuristics are the mental shortcuts that humans use to arrive at decisions. They are simple strategies that humans, animals, organizations, and even machines use to quickly form judgments, make decisions, and find solutions to complex problems. Often this involves focusing on the most relevant aspects of a problem or situation to formulate a solution. While heuristic processes are used to find the answers and solutions that are most likely to work or be correct, they are not always right or the most accurate. Judgments and decisions based on heuristics are simply good enough to satisfy a pressing need in situations of uncertainty, where information is incomplete. In that sense they can differ from answers given by logic and probability.

The certainty effect is the psychological effect resulting from the reduction of probability from certain to probable. It is an idea introduced in prospect theory.

Thinking, Fast and Slow is a 2011 book by psychologist Daniel Kahneman. The book's main thesis is a differentiation between two modes of thought: "System 1" is fast, instinctive and emotional; "System 2" is slower, more deliberative, and more logical.

Eldar Shafir is an American behavioral scientist and the co-author of Scarcity: Why Having Too Little Means So Much. He is the Class of 1987 Professor in Behavioral Science and Public Policy, Professor of Psychology and Public Affairs at the Princeton University Department of Psychology and the Princeton School of Public and International Affairs, and inaugural director of Princeton’s Kahneman-Treisman Center for Behavioral Science and Public Policy.

Risk aversion is a preference for a sure outcome over a gamble with higher or equal expected value. Conversely, the rejection of a sure thing in favor of a gamble of lower or equal expected value is known as risk-seeking behavior.

The priority heuristic is a simple, lexicographic decision strategy that correctly predicts classic violations of expected utility theory such as the Allais paradox, the four-fold pattern, the certainty effect, the possibility effect, or intransitivities.
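
A simplified sketch of a lexicographic rule of this kind for gambles with non-negative payoffs. The order of reasons (minimum gain, probability of the minimum gain, maximum gain) and the one-tenth thresholds follow the usual description of the heuristic, but they are assumptions here; refinements such as rounding the aspiration level and the handling of losses are omitted. The Allais payoffs (in millions) are likewise the commonly cited ones, not taken from the text above.

```python
def priority_heuristic(gamble_a, gamble_b):
    """Choose between two gain gambles, each a list of (probability, payoff)."""
    def minimum(g):
        return min(x for _, x in g)

    def maximum(g):
        return max(x for _, x in g)

    def p_minimum(g):
        return sum(p for p, x in g if x == minimum(g))

    # Reason 1: minimum gains, compared against 1/10 of the largest payoff.
    aspiration = 0.1 * max(maximum(gamble_a), maximum(gamble_b))
    if abs(minimum(gamble_a) - minimum(gamble_b)) >= aspiration:
        return gamble_a if minimum(gamble_a) > minimum(gamble_b) else gamble_b

    # Reason 2: probabilities of the minimum gain, compared against 0.1.
    if abs(p_minimum(gamble_a) - p_minimum(gamble_b)) >= 0.1:
        return gamble_a if p_minimum(gamble_a) < p_minimum(gamble_b) else gamble_b

    # Reason 3: maximum gains decide.
    return gamble_a if maximum(gamble_a) >= maximum(gamble_b) else gamble_b


GAMBLE_1A = [(1.00, 1)]
GAMBLE_1B = [(0.89, 1), (0.01, 0), (0.10, 5)]
GAMBLE_2A = [(0.89, 0), (0.11, 1)]
GAMBLE_2B = [(0.90, 0), (0.10, 5)]

print(priority_heuristic(GAMBLE_1A, GAMBLE_1B) is GAMBLE_1A)  # True
print(priority_heuristic(GAMBLE_2A, GAMBLE_2B) is GAMBLE_2B)  # True
```

In the first pair the minimum gains differ enough to stop at the first reason; in the second pair the first two reasons fail to discriminate and the maximum gain decides. The result is the 1A and 2B pattern that expected utility theory cannot accommodate.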