Related Research Articles

Persuasive technology is broadly defined as technology that is designed to change attitudes or behaviors of the users through persuasion and social influence, but not necessarily through coercion. Such technologies are regularly used in sales, diplomacy, politics, religion, military training, public health, and management, and may potentially be used in any area of human-human or human-computer interaction. Most self-identified persuasive technology research focuses on interactive, computational technologies, including desktop computers, Internet services, video games, and mobile devices, and it incorporates and builds on the results, theories, and methods of experimental psychology, rhetoric, and human-computer interaction. The design of persuasive technologies can be seen as a particular case of design with intent.

An online community, also called an internet community or web community, is a community whose members interact with each other primarily via the Internet. Members of the community usually share common interests. For many, online communities may feel like home, consisting of a "family of invisible friends". Additionally, these "friends" can be connected through gaming communities and gaming companies. Those who wish to be a part of an online community usually have to become a member via a specific site and thereby gain access to specific content or links.

Personal information management (PIM) is the study and implementation of the activities that people perform in order to acquire or create, store, organize, maintain, retrieve, and use informational items such as documents, web pages, and email messages for everyday use to complete tasks and fulfill a person's various roles; it is information management with intrapersonal scope.

Computer-supported cooperative work (CSCW) is the study of how people utilize technology collaboratively, often towards a shared goal. CSCW addresses how computer systems can support collaborative activity and coordination. More specifically, the field of CSCW seeks to analyze and draw connections between currently understood human psychological and social behaviors and available collaborative tools, or groupware. Often the goal of CSCW is to help promote and utilize technology in a collaborative way, and help create new tools to succeed in that goal. These parallels allow CSCW research to inform future design patterns or assist in the development of entirely new tools.

A recommender system, or a recommendation system, is a subclass of information filtering system that provides suggestions for items that are most pertinent to a particular user. Typically, the suggestions refer to various decision-making processes, such as what product to purchase, what music to listen to, or what online news to read. Recommender systems are particularly useful when an individual needs to choose an item from a potentially overwhelming number of items that a service may offer.
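One common way to produce such suggestions is user-based collaborative filtering, sketched below as a minimal illustration. The `ratings` data, the user and item names, and the `cosine` and `recommend` helpers are all hypothetical; real systems use many other techniques and far larger datasets.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}
ratings = {
    "ana": {"book_a": 5, "book_b": 3, "book_c": 4},
    "ben": {"book_a": 4, "book_b": 3, "book_d": 5},
    "cho": {"book_b": 1, "book_c": 2, "book_d": 2},
}


def cosine(u, v):
    """Cosine similarity computed over the items both users rated."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = sqrt(sum(u[i] ** 2 for i in shared))
    norm_v = sqrt(sum(v[i] ** 2 for i in shared))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(user, ratings, top_n=3):
    """Rank items the user has not rated by the similarity-weighted
    average rating given by other users."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], other_ratings)
        if sim <= 0:
            continue
        for item, rating in other_ratings.items():
            if item in ratings[user]:
                continue  # only suggest items the user has not seen
            scores[item] = scores.get(item, 0.0) + sim * rating
            weights[item] = weights.get(item, 0.0) + sim
    ranked = sorted(scores, key=lambda i: scores[i] / weights[i], reverse=True)
    return ranked[:top_n]


print(recommend("ana", ratings))
```

Here the only item "ana" has not rated is `book_d`, so it is the single suggestion; with more users and items, the ranking would reflect whose tastes most resemble the target user's.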

End-user development (EUD) or end-user programming (EUP) refers to activities and tools that allow end-users – people who are not professional software developers – to program computers. People who are not professional developers can use EUD tools to create or modify software artifacts and complex data objects without significant knowledge of a programming language. In 2005 it was estimated that by 2012 there would be more than 55 million end-user developers in the United States, compared with fewer than 3 million professional programmers. Various EUD approaches exist, and it is an active research topic within the field of computer science and human-computer interaction. Examples include natural language programming, spreadsheets, scripting languages, visual programming, trigger-action programming and programming by example.

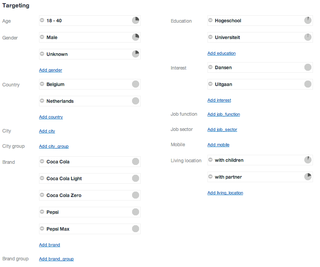

Targeted advertising is a form of advertising, including online advertising, that is directed towards an audience with certain traits, based on the product or person the advertiser is promoting. These traits can be demographic, with a focus on race, economic status, sex, age, generation, level of education, income level, and employment, or psychographic, with a focus on consumer values, personality, attitudes, opinions, lifestyles and interests. This focus can also entail behavioral variables, such as browser history, purchase history, and other recent online activities. Algorithmic targeting of this kind is intended to reduce wasted advertising spend.

Social information processing is "an activity through which collective human actions organize knowledge." It is the creation and processing of information by a group of people. As an academic field, social information processing studies the information processing power of networked social systems.

Collaborative tagging, also known as social tagging or folksonomy, allows users to apply public tags to online items, typically to make those items easier for themselves or others to find later. It has been argued that these tagging systems can provide navigational cues or "way-finders" for other users to explore information. The notion is that given that social tags are labels users create to represent topics extracted from online documents, the interpretation of these tags should allow other users to predict the contents of different documents efficiently. Social tags are arguably more important in exploratory search, in which the users may engage in iterative cycles of goal refinement and exploration of new information, and interpretation of information contents by others will provide useful cues for people to discover topics that are relevant.

Web tracking is the practice by which operators of websites and third parties collect, store and share information about visitors’ activities on the World Wide Web. Analysis of a user's behaviour may be used to provide content that enables the operator to infer their preferences and may be of interest to various parties, such as advertisers. Web tracking can be part of visitor management.

Value sensitive design (VSD) is a theoretically grounded approach to the design of technology that accounts for human values in a principled and comprehensive manner. VSD originated within the field of information systems design and human-computer interaction to address design issues within the fields by emphasizing the ethical values of direct and indirect stakeholders. It was developed by Batya Friedman and Peter Kahn at the University of Washington starting in the late 1980s and early 1990s. Later, in 2019, Batya Friedman and David Hendry wrote a book on this topic called "Value Sensitive Design: Shaping Technology with Moral Imagination". Value sensitive design takes human values into account in a well-defined manner throughout the whole process. Designs are developed using an investigation consisting of three phases: conceptual, empirical and technological. These investigations are intended to be iterative, allowing the designer to modify the design continuously.

Since the arrival of early social networking sites in the early 2000s, online social networking platforms have expanded exponentially, with the biggest names in social media in the mid-2010s being Facebook, Instagram, Twitter and Snapchat. The massive influx of personal information that has become available online and stored in the cloud has put user privacy at the forefront of discussion about whether such databases can store personal information safely. The extent to which users and social media platform administrators can access user profiles has become a new topic of ethical consideration, and the legality, awareness, and boundaries of subsequent privacy violations are critical concerns as technology advances.

A diary study is a research method that collects qualitative information by having participants record entries in a log, diary or journal about the activity or experience being studied. This collection of data uses a longitudinal technique, meaning participants are studied over a period of time. Although it cannot provide results as detailed as a true field study, this research tool can still offer a vast amount of contextual information without the costs of one. Diary studies are also known as experience sampling or ecological momentary assessment (EMA) methodology.

Social visualization is an interdisciplinary area of information visualization that studies how to create intuitive depictions of massive and complex social interactions for social purposes. By visualizing interactions made not only in cyberspace, including social media, but also in the physical world, captured through sensors, it can reveal overall patterns of social memes or highlight one individual's implicit behaviors in diverse social spaces. In particular, it is the study “primarily concerned with the visualization of text, audio, and visual interaction data to uncover social connections and interaction patterns in online and physical spaces.” The ACM Computing Classification System has classified this field of study under the category of Human-Centered Computing (1st) and Information Visualization (2nd) as a third-level concept in a general sense.

Dataveillance is the practice of monitoring and collecting online data as well as metadata. The word is a portmanteau of data and surveillance. Dataveillance is concerned with the continuous monitoring of users' communications and actions across various platforms. For instance, dataveillance refers to the monitoring of data resulting from credit card transactions, GPS coordinates, emails, social networks, etc. Using digital media often leaves traces of data and creates a digital footprint of our activity. Unlike sousveillance, this type of surveillance is not often known and happens discreetly. Dataveillance may involve the surveillance of groups of individuals. There exist three types of dataveillance: personal dataveillance, mass dataveillance, and facilitative mechanisms.

Social navigation is a form of social computing introduced by Paul Dourish and Matthew Chalmers in 1994, who defined it as when "movement from one item to another is provoked as an artifact of the activity of another or a group of others". According to later research in 2002, "social navigation exploits the knowledge and experience of peer users of information resources" to guide users in the information space. The same research noted that it is becoming more difficult to navigate and search efficiently with all the digital information available from the World Wide Web and other sources. Studying others' navigational trails and understanding their behavior can help users improve their own search strategies and make more informed decisions based on the actions of others.

Cross-device tracking refers to technology that enables the tracking of users across multiple devices such as smartphones, television sets, smart TVs, and personal computers.

Privacy settings are "the part of a social networking website, internet browser, piece of software, etc. that allows you to control who sees information about you". With the growing prevalence of social networking services, opportunities for privacy exposures also grow. Privacy settings allow a person to control what information is shared on these platforms.

Click tracking is the collection of user click behavior or user navigational behavior in order to derive insights and fingerprint users. Click behavior is commonly tracked using server logs, which encompass click paths and clicked URLs. This log is often presented in a standard format including information like the hostname, date, and username. However, as technology develops, new software allows for in-depth analysis of user click behavior using hypervideo tools. Given that the internet can be considered a risky environment, research strives to understand why users click certain links and not others. Research has also explored the user experience of privacy, including anonymizing users' personally identifiable information and improving how data collection consent forms are written and structured.
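As a rough illustration of how click paths can be recovered from server logs, the sketch below parses a few lines and groups the requested URLs by visitor. It assumes the logs follow the widely used Common Log Format (host, identity, username, timestamp, request, status, size); the sample entries, the `LOG_PATTERN` regular expression, and the `click_paths` helper are hypothetical and not taken from any particular tracking tool.

```python
import re
from collections import defaultdict
from datetime import datetime

# Assumed layout: Common Log Format, e.g.
# host ident user [timestamp] "METHOD /url PROTOCOL" status bytes
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3}) \S+'
)

# Hypothetical sample entries (documentation-range IP addresses).
SAMPLE_LOG = """\
203.0.113.5 - alice [10/Oct/2023:13:55:36 +0000] "GET /home HTTP/1.1" 200 2326
203.0.113.9 - - [10/Oct/2023:13:55:40 +0000] "GET /home HTTP/1.1" 200 2326
203.0.113.5 - alice [10/Oct/2023:13:56:02 +0000] "GET /products HTTP/1.1" 200 5120
203.0.113.5 - alice [10/Oct/2023:13:57:15 +0000] "GET /cart HTTP/1.1" 200 1044
"""


def click_paths(log_text):
    """Return {(host, user): [url, ...]} with URLs in chronological order."""
    clicks = defaultdict(list)
    for line in log_text.splitlines():
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        when = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        clicks[(match["host"], match["user"])].append((when, match["url"]))
    return {
        visitor: [url for _, url in sorted(entries)]
        for visitor, entries in clicks.items()
    }


for visitor, path in click_paths(SAMPLE_LOG).items():
    print(visitor, " -> ".join(path))
```

Grouping by host and username is a simplification; visitors behind a shared address or without a logged username would be merged or left anonymous, which is one reason trackers often combine logs with other identifiers.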
