Computer vision tasks include methods for acquiring, processing, analyzing and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the forms of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

Machine vision is the technology and methods used to provide imaging-based automatic inspection and analysis for such applications as automatic inspection, process control, and robot guidance, usually in industry. Machine vision refers to many technologies, software and hardware products, integrated systems, actions, methods and expertise. Machine vision as a systems engineering discipline can be considered distinct from computer vision, a form of computer science. It attempts to integrate existing technologies in new ways and apply them to solve real world problems. The term is the prevalent one for these functions in industrial automation environments but is also used for these functions in other environment vehicle guidance.

Stereoscopy is a technique for creating or enhancing the illusion of depth in an image by means of stereopsis for binocular vision. The word stereoscopy derives from Greek στερεός (stereos) 'firm, solid', and σκοπέω (skopeō) 'to look, to see'. Any stereoscopic image is called a stereogram. Originally, stereogram referred to a pair of stereo images which could be viewed using a stereoscope.

In computer vision and image processing, motion estimation is the process of determining motion vectors that describe the transformation from one 2D image to another; usually from adjacent frames in a video sequence. It is an ill-posed problem as the motion happens in three dimensions (3D) but the images are a projection of the 3D scene onto a 2D plane. The motion vectors may relate to the whole image or specific parts, such as rectangular blocks, arbitrary shaped patches or even per pixel. The motion vectors may be represented by a translational model or many other models that can approximate the motion of a real video camera, such as rotation and translation in all three dimensions and zoom.

In the fields of computing and computer vision, pose represents the position and orientation of an object, usually in three dimensions. Poses are often stored internally as transformation matrices. The term “pose” is largely synonymous with the term “transform”, but a transform may often include scale, whereas pose does not.

A light field camera, also known as a plenoptic camera, is a camera that captures information about the light field emanating from a scene; that is, the intensity of light in a scene, and also the precise direction that the light rays are traveling in space. This contrasts with conventional cameras, which record only light intensity at various wavelengths.

The following are common definitions related to the machine vision field.

Binocular disparity refers to the difference in image location of an object seen by the left and right eyes, resulting from the eyes’ horizontal separation (parallax). The mind uses binocular disparity to extract depth information from the two-dimensional retinal images in stereopsis. In computer vision, binocular disparity refers to the difference in coordinates of similar features within two stereo images.

Image rectification is a transformation process used to project images onto a common image plane. This process has several degrees of freedom and there are many strategies for transforming images to the common plane. Image rectification is used in computer stereo vision to simplify the problem of finding matching points between images, and in geographic information systems to merge images taken from multiple perspectives into a common map coordinate system.

Range imaging is the name for a collection of techniques that are used to produce a 2D image showing the distance to points in a scene from a specific point, normally associated with some type of sensor device.

2D-plus-Depthis a stereoscopic video coding format that is used for 3D displays, such as Philips WOWvx. Philips discontinued work on the WOWvx line in 2009, citing "current market developments". Currently, this Philips technology is used by SeeCubic company, led by former key 3D engineers and scientists of Philips. They offer autostereoscopic 3D displays which use the 2D-plus-Depth format for 3D video input.

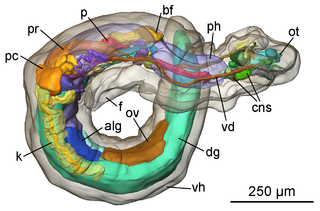

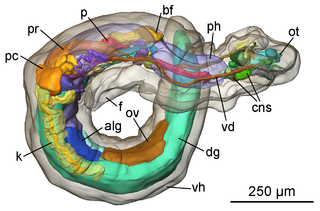

In computer vision and computer graphics, 3D reconstruction is the process of capturing the shape and appearance of real objects. This process can be accomplished either by active or passive methods. If the model is allowed to change its shape in time, this is referred to as non-rigid or spatio-temporal reconstruction.

In robotics and computer vision, visual odometry is the process of determining the position and orientation of a robot by analyzing the associated camera images. It has been used in a wide variety of robotic applications, such as on the Mars Exploration Rovers.

In photography, an omnidirectional camera, also known as 360-degree camera, is a camera having a field of view that covers approximately the entire sphere or at least a full circle in the horizontal plane. Omnidirectional cameras are important in areas where large visual field coverage is needed, such as in panoramic photography and robotics.

A time-of-flight camera, also known as time-of-flight sensor, is a range imaging camera system for measuring distances between the camera and the subject for each point of the image based on time-of-flight, the round trip time of an artificial light signal, as provided by a laser or an LED. Laser-based time-of-flight cameras are part of a broader class of scannerless LIDAR, in which the entire scene is captured with each laser pulse, as opposed to point-by-point with a laser beam such as in scanning LIDAR systems. Time-of-flight camera products for civil applications began to emerge around 2000, as the semiconductor processes allowed the production of components fast enough for such devices. The systems cover ranges of a few centimeters up to several kilometers.

Computer stereo vision is the extraction of 3D information from digital images, such as those obtained by a CCD camera. By comparing information about a scene from two vantage points, 3D information can be extracted by examining the relative positions of objects in the two panels. This is similar to the biological process of stereopsis.

In 3D computer graphics and computer vision, a depth map is an image or image channel that contains information relating to the distance of the surfaces of scene objects from a viewpoint. The term is related to depth buffer, Z-buffer, Z-buffering, and Z-depth. The "Z" in these latter terms relates to a convention that the central axis of view of a camera is in the direction of the camera's Z axis, and not to the absolute Z axis of a scene.

2D to 3D video conversion is the process of transforming 2D ("flat") film to 3D form, which in almost all cases is stereo, so it is the process of creating imagery for each eye from one 2D image.

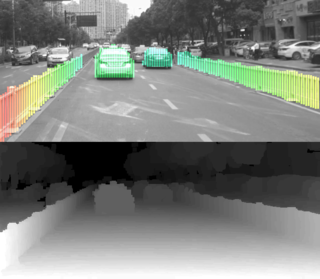

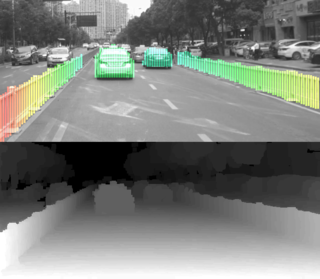

In computer vision, a stixel is a superpixel representation of depth information in an image, in the form of a vertical stick that approximates the closest obstacles within a certain vertical slice of the scene. Introduced in 2009, stixels have applications in robotic navigation and advanced driver-assistance systems, where they can be used to define a representation of robotic environments and traffic scenes with a medium level of abstraction.

Gérard G. Medioni is a computer scientist, author, academic and inventor. He is a vice president and distinguished scientist at Amazon and serves as emeritus professor of Computer Science at the University of Southern California.