In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

Richard Ernest Bellman was an American applied mathematician, who introduced dynamic programming in 1953, and made important contributions in other fields of mathematics, such as biomathematics. He founded the leading biomathematical journal Mathematical Biosciences, as well as the Journal of Mathematical Analysis and Applications.

The Hamilton-Jacobi-Bellman (HJB) equation is a nonlinear partial differential equation that provides necessary and sufficient conditions for optimality of a control with respect to a loss function. Its solution is the value function of the optimal control problem which, once known, can be used to obtain the optimal control by taking the maximizer of the Hamiltonian involved in the HJB equation.

Hubert Lederer Dreyfus was an American philosopher and professor of philosophy at the University of California, Berkeley. His main interests included phenomenology, existentialism and the philosophy of both psychology and literature, as well as the philosophical implications of artificial intelligence. He was widely known for his exegesis of Martin Heidegger, which critics labeled "Dreydegger".

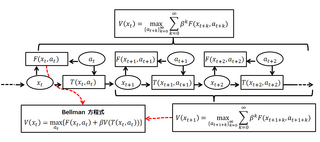

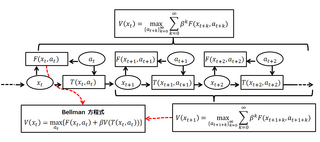

A Bellman equation, named after Richard E. Bellman, is a necessary condition for optimality associated with the mathematical optimization method known as dynamic programming. It writes the "value" of a decision problem at a certain point in time in terms of the payoff from some initial choices and the "value" of the remaining decision problem that results from those initial choices. This breaks a dynamic optimization problem into a sequence of simpler subproblems, as Bellman's “principle of optimality" prescribes. The equation applies to algebraic structures with a total ordering; for algebraic structures with a partial ordering, the generic Bellman's equation can be used.

In machine learning, backpropagation is a gradient estimation method used to train neural network models. The gradient estimate is used by the optimization algorithm to compute the network parameter updates.

A recurrent neural network (RNN) is one of the two broad types of artificial neural network, characterized by direction of the flow of information between its layers. In contrast to the uni-directional feedforward neural network, it is a bi-directional artificial neural network, meaning that it allows the output from some nodes to affect subsequent input to the same nodes. Their ability to use internal state (memory) to process arbitrary sequences of inputs makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. The term "recurrent neural network" is used to refer to the class of networks with an infinite impulse response, whereas "convolutional neural network" refers to the class of finite impulse response. Both classes of networks exhibit temporal dynamic behavior. A finite impulse recurrent network is a directed acyclic graph that can be unrolled and replaced with a strictly feedforward neural network, while an infinite impulse recurrent network is a directed cyclic graph that cannot be unrolled.

A feedforward neural network (FNN) is one of the two broad types of artificial neural network, characterized by direction of the flow of information between its layers. Its flow is uni-directional, meaning that the information in the model flows in only one direction—forward—from the input nodes, through the hidden nodes and to the output nodes, without any cycles or loops, in contrast to recurrent neural networks, which have a bi-directional flow. Modern feedforward networks are trained using the backpropagation method and are colloquially referred to as the "vanilla" neural networks.

The history of artificial intelligence (AI) began in antiquity, with myths, stories and rumors of artificial beings endowed with intelligence or consciousness by master craftsmen. The seeds of modern AI were planted by philosophers who attempted to describe the process of human thinking as the mechanical manipulation of symbols. This work culminated in the invention of the programmable digital computer in the 1940s, a machine based on the abstract essence of mathematical reasoning. This device and the ideas behind it inspired a handful of scientists to begin seriously discussing the possibility of building an electronic brain.

The philosophy of artificial intelligence is a branch of the philosophy of mind and the philosophy of computer science that explores artificial intelligence and its implications for knowledge and understanding of intelligence, ethics, consciousness, epistemology, and free will. Furthermore, the technology is concerned with the creation of artificial animals or artificial people so the discipline is of considerable interest to philosophers. These factors contributed to the emergence of the philosophy of artificial intelligence.

Quantum neural networks are computational neural network models which are based on the principles of quantum mechanics. The first ideas on quantum neural computation were published independently in 1995 by Subhash Kak and Ron Chrisley, engaging with the theory of quantum mind, which posits that quantum effects play a role in cognitive function. However, typical research in quantum neural networks involves combining classical artificial neural network models with the advantages of quantum information in order to develop more efficient algorithms. One important motivation for these investigations is the difficulty to train classical neural networks, especially in big data applications. The hope is that features of quantum computing such as quantum parallelism or the effects of interference and entanglement can be used as resources. Since the technological implementation of a quantum computer is still in a premature stage, such quantum neural network models are mostly theoretical proposals that await their full implementation in physical experiments.

Meta-learning is a subfield of machine learning where automatic learning algorithms are applied to metadata about machine learning experiments. As of 2017, the term had not found a standard interpretation, however the main goal is to use such metadata to understand how automatic learning can become flexible in solving learning problems, hence to improve the performance of existing learning algorithms or to learn (induce) the learning algorithm itself, hence the alternative term learning to learn.

Recursive economics is a branch of modern economics based on a paradigm of individuals making a series of two-period optimization decisions over time.

Kumpati S. Narendra is an American control theorist, who currently holds the Harold W. Cheel Professorship of Electrical Engineering at Yale University. He received the Richard E. Bellman Control Heritage Award in 2003. He is noted "for pioneering contributions to stability theory, adaptive and learning systems theory". He is also well recognized for his research work towards learning including Neural Networks and Learning Automata.

Arthur Earl Bryson Jr. is the Paul Pigott Professor of Engineering Emeritus at Stanford University and the "father of modern optimal control theory". With Henry J. Kelley, he also pioneered an early version of the backpropagation procedure, now widely used for machine learning and artificial neural networks.

Josef "Sepp" Hochreiter is a German computer scientist. Since 2018 he has led the Institute for Machine Learning at the Johannes Kepler University of Linz after having led the Institute of Bioinformatics from 2006 to 2018. In 2017 he became the head of the Linz Institute of Technology (LIT) AI Lab. Hochreiter is also a founding director of the Institute of Advanced Research in Artificial Intelligence (IARAI). Previously, he was at the Technical University of Berlin, at University of Colorado Boulder, and at the Technical University of Munich. He is a chair of the Critical Assessment of Massive Data Analysis (CAMDA) conference.

Joseph Pierre LaSalle was an American mathematician specialising in dynamical systems and responsible for important contributions to stability theory, such as LaSalle's invariance principle which bears his name.

Henry J. Kelley (1926-1988) was Christopher C. Kraft Professor of Aerospace and Ocean Engineering at the Virginia Polytechnic Institute. He produced major contributions to control theory, especially in aeronautical engineering and flight optimization.

In artificial intelligence, a differentiable neural computer (DNC) is a memory augmented neural network architecture (MANN), which is typically recurrent in its implementation. The model was published in 2016 by Alex Graves et al. of DeepMind.

Artificial neural networks (ANNs) are models created using machine learning to perform a number of tasks. Their creation was inspired by neural circuitry. While some of the computational implementations ANNs relate to earlier discoveries in mathematics, the first implementation of ANNs was by psychologist Frank Rosenblatt, who developed the perceptron. Little research was conducted on ANNs in the 1970s and 1980s, with the AAAI calling that period an "AI winter".