In computing, a process is the instance of a computer program that is being executed by one or many threads. There are many different process models, some of which are light weight, but almost all processes are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions concurrently.

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems. In Modern Operating Systems, Tanenbaum shows that many distinct models of process organization are possible. In many cases, a thread is a component of a process. The multiple threads of a given process may be executed concurrently, sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non-thread-local global variables at any given time.

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

In computer science, an algorithm is called non-blocking if failure or suspension of any thread cannot cause failure or suspension of another thread; for some operations, these algorithms provide a useful alternative to traditional blocking implementations. A non-blocking algorithm is lock-free if there is guaranteed system-wide progress, and wait-free if there is also guaranteed per-thread progress. "Non-blocking" was used as a synonym for "lock-free" in the literature until the introduction of obstruction-freedom in 2003.

In computer science, concurrency is the ability of different parts or units of a program, algorithm, or problem to be executed out-of-order or in partial order, without affecting the outcome. This allows for parallel execution of the concurrent units, which can significantly improve overall speed of the execution in multi-processor and multi-core systems. In more technical terms, concurrency refers to the decomposability of a program, algorithm, or problem into order-independent or partially-ordered components or units of computation.

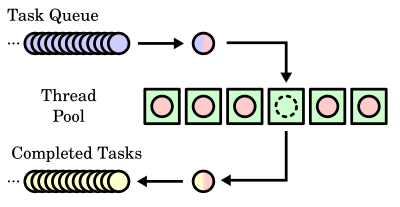

In computing, a task is a unit of execution or a unit of work. The term is ambiguous; precise alternative terms include process, light-weight process, thread, step, request, or query. In the adjacent diagram, there are queues of incoming work to do and outgoing completed work, and a thread pool of threads to perform this work. Either the work units themselves or the threads that perform the work can be referred to as "tasks", and these can be referred to respectively as requests/responses/threads, incoming tasks/completed tasks/threads, or requests/responses/tasks.

Concurrent computing is a form of computing in which several computations are executed concurrently—during overlapping time periods—instead of sequentially—with one completing before the next starts.

The object pool pattern is a software creational design pattern that uses a set of initialized objects kept ready to use – a "pool" – rather than allocating and destroying them on demand. A client of the pool will request an object from the pool and perform operations on the returned object. When the client has finished, it returns the object to the pool rather than destroying it; this can be done manually or automatically.

The active object design pattern decouples method execution from method invocation for objects that each reside in their own thread of control. The goal is to introduce concurrency, by using asynchronous method invocation and a scheduler for handling requests.

In computer programming, a green thread is a thread that is scheduled by a runtime library or virtual machine (VM) instead of natively by the underlying operating system (OS). Green threads emulate multithreaded environments without relying on any native OS abilities, and they are managed in user space instead of kernel space, enabling them to work in environments that do not have native thread support.

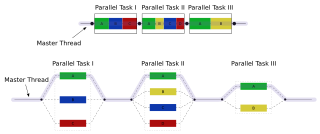

Task parallelism is a form of parallelization of computer code across multiple processors in parallel computing environments. Task parallelism focuses on distributing tasks—concurrently performed by processes or threads—across different processors. In contrast to data parallelism which involves running the same task on different components of data, task parallelism is distinguished by running many different tasks at the same time on the same data. A common type of task parallelism is pipelining, which consists of moving a single set of data through a series of separate tasks where each task can execute independently of the others.

oneAPI Threading Building Blocks, is a C++ template library developed by Intel for parallel programming on multi-core processors. Using TBB, a computation is broken down into tasks that can run in parallel. The library manages and schedules threads to execute these tasks.

Parallel Extensions was the development name for a managed concurrency library developed by a collaboration between Microsoft Research and the CLR team at Microsoft. The library was released in version 4.0 of the .NET Framework. It is composed of two parts: Parallel LINQ (PLINQ) and Task Parallel Library (TPL). It also consists of a set of coordination data structures (CDS) – sets of data structures used to synchronize and co-ordinate the execution of concurrent tasks.

Grand Central Dispatch, is a technology developed by Apple Inc. to optimize application support for systems with multi-core processors and other symmetric multiprocessing systems. It is an implementation of task parallelism based on the thread pool pattern. The fundamental idea is to move the management of the thread pool out of the hands of the developer, and closer to the operating system. The developer injects "work packages" into the pool oblivious of the pool's architecture. This model improves simplicity, portability and performance.

In computing, algorithmic skeletons, or parallelism patterns, are a high-level parallel programming model for parallel and distributed computing.

Node.js is a cross-platform, open-source server environment that can run on Windows, Linux, Unix, macOS, and more. Node.js is a back-end JavaScript runtime environment, runs on the V8 JavaScript Engine, and executes JavaScript code outside a web browser.

For several years parallel hardware was only available for distributed computing but recently it is becoming available for the low end computers as well. Hence it has become inevitable for software programmers to start writing parallel applications. It is quite natural for programmers to think sequentially and hence they are less acquainted with writing multi-threaded or parallel processing applications. Parallel programming requires handling various issues such as synchronization and deadlock avoidance. Programmers require added expertise for writing such applications apart from their expertise in the application domain. Hence programmers prefer to write sequential code and most of the popular programming languages support it. This allows them to concentrate more on the application. Therefore, there is a need to convert such sequential applications to parallel applications with the help of automated tools. The need is also non-trivial because large amount of legacy code written over the past few decades needs to be reused and parallelized.

In parallel computing, work stealing is a scheduling strategy for multithreaded computer programs. It solves the problem of executing a dynamically multithreaded computation, one that can "spawn" new threads of execution, on a statically multithreaded computer, with a fixed number of processors. It does so efficiently in terms of execution time, memory usage, and inter-processor communication.

In parallel computing, the fork–join model is a way of setting up and executing parallel programs, such that execution branches off in parallel at designated points in the program, to "join" (merge) at a subsequent point and resume sequential execution. Parallel sections may fork recursively until a certain task granularity is reached. Fork–join can be considered a parallel design pattern. It was formulated as early as 1963.

In computer programming, virtual threads are threads that are scheduled by a runtime library instead of natively by the underlying operating system (OS). Virtual threads allows for tens of millions of preemptive tasks and events without swapping on a 2021 consumer-grade computer.., compared to low thousands of operating system threads. Preemptive execution is important to performance gains through parallelism and fast preemptive response times for tens of millions of events. Earlier constructs that are not preemptive, such as coroutines or the largely single-threaded Node.js, introduce delays in responding to asynchronous events such as every incoming request in a server application