Related Research Articles

A distributed data store is a computer network where information is stored on more than one node, often in a replicated fashion. It is usually specifically used to refer to either a distributed database where users store information on a number of nodes, or a computer network in which users store information on a number of peer network nodes.

In data processing R*-trees are a variant of R-trees used for indexing spatial information. R*-trees have slightly higher construction cost than standard R-trees, as the data may need to be reinserted; but the resulting tree will usually have a better query performance. Like the standard R-tree, it can store both point and spatial data. It was proposed by Norbert Beckmann, Hans-Peter Kriegel, Ralf Schneider, and Bernhard Seeger in 1990.

In computer science, an interval tree is a tree data structure to hold intervals. Specifically, it allows one to efficiently find all intervals that overlap with any given interval or point. It is often used for windowing queries, for instance, to find all roads on a computerized map inside a rectangular viewport, or to find all visible elements inside a three-dimensional scene. A similar data structure is the segment tree.

Eventual consistency is a consistency model used in distributed computing to achieve high availability that informally guarantees that, if no new updates are made to a given data item, eventually all accesses to that item will return the last updated value. Eventual consistency, also called optimistic replication, is widely deployed in distributed systems and has origins in early mobile computing projects. A system that has achieved eventual consistency is often said to have converged, or achieved replica convergence. Eventual consistency is a weak guarantee – most stronger models, like linearizability, are trivially eventually consistent.

Multi-master replication is a method of database replication which allows data to be stored by a group of computers, and updated by any member of the group. All members are responsive to client data queries. The multi-master replication system is responsible for propagating the data modifications made by each member to the rest of the group and resolving any conflicts that might arise between concurrent changes made by different members.

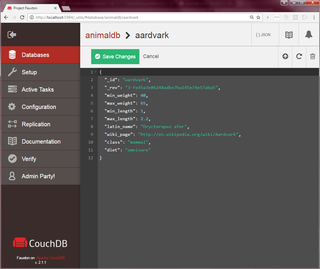

Apache CouchDB is an open-source document-oriented NoSQL database, implemented in Erlang.

Database caching is a process included in the design of computer applications which generate web pages on-demand (dynamically) by accessing backend databases.

Cassandra is a free and open-source, distributed, wide-column store, NoSQL database management system designed to handle large amounts of data across many commodity servers, providing high availability with no single point of failure. Cassandra offers support for clusters spanning multiple datacenters, with asynchronous masterless replication allowing low latency operations for all clients. Cassandra was designed to implement a combination of Amazon's Dynamo distributed storage and replication techniques combined with Google's Bigtable data and storage engine model.

A NoSQL database provides a mechanism for storage and retrieval of data that is modeled in means other than the tabular relations used in relational databases. Such databases have existed since the late 1960s, but the name "NoSQL" was only coined in the early 21st century, triggered by the needs of Web 2.0 companies. NoSQL databases are increasingly used in big data and real-time web applications. NoSQL systems are also sometimes called Not only SQL to emphasize that they may support SQL-like query languages or sit alongside SQL databases in polyglot-persistent architectures.

The elasticity of a data store relates to the flexibility of its data model and clustering capabilities. The greater the number of data model changes that can be tolerated, and the more easily the clustering can be managed, the more elastic the data store is considered to be.

Hector is a high-level client API for Apache Cassandra. Named after Hector, a warrior of Troy in Greek mythology, it is a substitute for the Cassandra Java Client, or Thrift, that is encapsulated by Hector. It also has Maven repository access.

The standard column family is a NoSQL object that contains columns of related data. It is a tuple (pair) that consists of a key–value pair, where the key is mapped to a value that is a set of columns. In analogy with relational databases, a standard column family is as a "table", each key–value pair being a "row". Each column is a tuple consisting of a column name, a value, and a timestamp. In a relational database table, this data would be grouped together within a table with other non-related data.

Amazon DynamoDB is a fully managed proprietary NoSQL database offered by Amazon.com as part of the Amazon Web Services portfolio. DynamoDB offers a fast persistent Key-Value Datastore with built-in support for replication, autoscaling, encryption at rest, and on-demand backup among other features.

SingleStore is a proprietary, cloud-native database designed for data-intensive applications. A distributed, relational, SQL database management system (RDBMS) that features ANSI SQL support, it is known for speed in data ingest, transaction processing, and query processing.

Oracle NoSQL Database is a NoSQL-type distributed key-value database from Oracle Corporation. It provides transactional semantics for data manipulation, horizontal scalability, and simple administration and monitoring.

Druid is a column-oriented, open-source, distributed data store written in Java. Druid is designed to quickly ingest massive quantities of event data, and provide low-latency queries on top of the data. The name Druid comes from the shapeshifting Druid class in many role-playing games, to reflect that the architecture of the system can shift to solve different types of data problems.

Elliptics is a distributed key–value data storage with open source code. By default it is a classic distributed hash table (DHT) with multiple replicas put in different groups. Elliptics was created to meet requirements of multi-datacenter and physically distributed storage locations when storing huge amount of medium and large files.

In distributed computing, a conflict-free replicated data type (CRDT) is a data structure that is replicated across multiple computers in a network, with the following features:

- The application can update any replica independently, concurrently and without coordinating with other replicas.

- An algorithm automatically resolves any inconsistencies that might occur.

- Although replicas may have different state at any particular point in time, they are guaranteed to eventually converge.

Azure Cosmos DB is a globally distributed, multi-model database service offered by Microsoft Azure. It is designed to provide high availability, scalability, and low-latency access to data for mission-critical applications. Unlike traditional relational databases, Cosmos DB is a NoSQL database, which means it can handle unstructured and semi-structured data types.

RocksDB is a high performance embedded database for key-value data. It is a fork of Google's LevelDB optimized to exploit multi-core processors (CPUs), and make efficient use of fast storage, such as solid-state drives (SSD), for input/output (I/O) bound workloads. It is based on a log-structured merge-tree data structure. It is written in C++ and provides official language bindings for C++, C, and Java. Many third-party language bindings exist. RocksDB is free and open-source software, released originally under a BSD 3-clause license. However, in July 2017 the project was migrated to a dual license of both Apache 2.0 and GPLv2 license, possibly in response to the Apache Software Foundation's blacklist of the previous BSD+Patents license clause.

References

- 1 2 3 "DistributedDeletes". Apache Software Foundation. Archived from the original on 2011-05-11. Retrieved 2011-04-13.

Thus, the "eventual" in eventual consistency: if a client reads from a replica that did not get the update with a low enough ConsistencyLevel, it will potentially see old data. [...] There's one more piece to the problem: how do we know when it's safe to remove tombstones? [...] [It] defined a constant, GCGraceSeconds, and had each node track tombstone age locally. Once it has aged past the constant, it can be GC'd during compaction (see MemtableSSTable).

- 1 2 "What are Tombstones". Apache Cassandra . Retrieved 18 June 2019.

- ↑ "Removing tombstones in Cassandra". IBM. 21 May 2018. Retrieved 18 June 2019.

- ↑ "User Guide: Dealing with Tombstones". GitHub . Retrieved 2011-04-13.

To put this in the context of an example, say we have just created 10 rows of data with three columns each. If half the columns are later deleted, and a compaction has not yet occurred, these columns will show up in get_range_slices queries as empty. Using RangeSlicesQuery as described in the previous section, we would have 10 results returned, but only five of them will have values. More importantly, calls to get (via ColumnQuery) by design assume the Column you are retrieving exists in the store. Therefore if you call get on tombstoned data, null is returned (note: this is different than previous versions of Hector where the underlying NotFoundException was propagated up the stack).