Character encoding is the process of assigning numbers to graphical characters, especially the written characters of human language, allowing them to be stored, transmitted, and transformed using digital computers. The numerical values that make up a character encoding are known as "code points" and collectively comprise a "code space", a "code page", or a "character map".

UTF-8 is a variable-length character encoding standard used for electronic communication. Defined by the Unicode Standard, the name is derived from Unicode Transformation Format – 8-bit.

UTF-16 (16-bit Unicode Transformation Format) is a character encoding capable of encoding all 1,112,064 valid code points of Unicode (in fact this number of code points is dictated by the design of UTF-16). The encoding is variable-length, as code points are encoded with one or two 16-bit code units. UTF-16 arose from an earlier obsolete fixed-width 16-bit encoding now known as UCS-2 (for 2-byte Universal Character Set), once it became clear that more than 216 (65,536) code points were needed, including most emoji and important CJK characters such as for personal and place names.

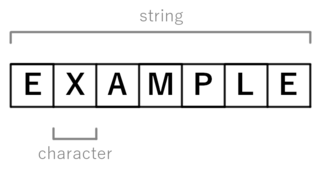

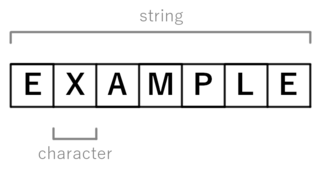

In computer and machine-based telecommunications terminology, a character is a unit of information that roughly corresponds to a grapheme, grapheme-like unit, or symbol, such as in an alphabet or syllabary in the written form of a natural language.

The byte order mark (BOM) is a particular usage of the special Unicode character, U+FEFFZERO WIDTH NO-BREAK SPACE, whose appearance as a magic number at the start of a text stream can signal several things to a program reading the text:

UTF-32 (32-bit Unicode Transformation Format) is a fixed-length encoding used to encode Unicode code points that uses exactly 32 bits (four bytes) per code point (but a number of leading bits must be zero as there are far fewer than 232 Unicode code points, needing actually only 21 bits). UTF-32 is a fixed-length encoding, in contrast to all other Unicode transformation formats, which are variable-length encodings. Each 32-bit value in UTF-32 represents one Unicode code point and is exactly equal to that code point's numerical value.

A text file is a kind of computer file that is structured as a sequence of lines of electronic text. A text file exists stored as data within a computer file system. In operating systems such as CP/M and DOS, where the operating system does not keep track of the file size in bytes, the end of a text file is denoted by placing one or more special characters, known as an end-of-file (EOF) marker, as padding after the last line in a text file. On modern operating systems such as Microsoft Windows and Unix-like systems, text files do not contain any special EOF character, because file systems on those operating systems keep track of the file size in bytes. Most text files need to have end-of-line delimiters, which are done in a few different ways depending on operating system. Some operating systems with record-orientated file systems may not use new line delimiters and will primarily store text files with lines separated as fixed or variable length records.

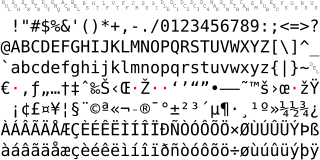

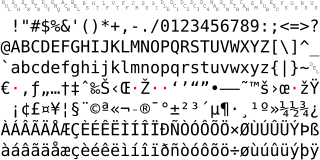

Windows-1252 or CP-1252 is a single-byte character encoding of the Latin alphabet that was used by default in Microsoft Windows for English and many Romance and Germanic languages including Spanish, Portuguese, French, and German. This character-encoding scheme is used throughout the Americas, Western Europe, Oceania, and much of Africa.

In computing, a locale is a set of parameters that defines the user's language, region and any special variant preferences that the user wants to see in their user interface. Usually a locale identifier consists of at least a language code and a country/region code. Locale is an important aspect of i18n.

A double-byte character set (DBCS) is a character encoding in which either all characters are encoded in two bytes, or merely every graphic character not representable by an accompanying single-byte character set (SBCS) is encoded in two bytes. A DBCS supports national languages that contain many unique characters or symbols. Examples of such languages include Japanese and Chinese. Korean Hangul does not contain as many characters, but KS X 1001 supports both Hangul and Hanja, and uses two bytes per character.

GB 18030 is a Chinese government standard, described as Information Technology — Chinese coded character set and defines the required language and character support necessary for software in China. GB18030 is the registered Internet name for the official character set of the People's Republic of China (PRC) superseding GB2312. As a Unicode Transformation Format, GB18030 supports both simplified and traditional Chinese characters. It is also compatible with legacy encodings including GB2312, CP936, and GBK 1.0.

Windows Console is the infrastructure for console applications in Microsoft Windows. An instance of a Windows Console has a screen buffer and an input buffer. It allows console apps to run inside a window or in hardware text mode. The user can switch between the two using the Alt+↵ Enter key combination. The text mode is unavailable in Windows Vista and later. Starting with Windows 10, however, a native full-screen mode is available.

A wide character is a computer character datatype that generally has a size greater than the traditional 8-bit character. The increased datatype size allows for the use of larger coded character sets.

A variable-width encoding is a type of character encoding scheme in which codes of differing lengths are used to encode a character set for representation, usually in a computer. Most common variable-width encodings are multibyte encodings, which use varying numbers of bytes (octets) to encode different characters. (Some authors, notably in Microsoft documentation, use the term multibyte character set, which is a misnomer, because representation size is an attribute of the encoding, not of the character set.)

This article compares Unicode encodings. Two situations are considered: 8-bit-clean environments, and environments that forbid use of byte values that have the high bit set. Originally such prohibitions were to allow for links that used only seven data bits, but they remain in some standards and so some standard-conforming software must generate messages that comply with the restrictions. Standard Compression Scheme for Unicode and Binary Ordered Compression for Unicode are excluded from the comparison tables because it is difficult to simply quantify their size.

Windows code pages are sets of characters or code pages used in Microsoft Windows from the 1980s and 1990s. Windows code pages were gradually superseded when Unicode was implemented in Windows, although they are still supported both within Windows and other platforms, and still apply when Alt code shortcuts are used.

The C++ programming language has support for string handling, mostly implemented in its standard library. The language standard specifies several string types, some inherited from C, some designed to make use of the language's features, such as classes and RAII. The most-used of these is std::string.

Bush hid the facts is a common name for a bug present in some versions of Microsoft Windows, which causes text encoded in ASCII to be interpreted as if it were UTF-16LE, resulting in garbled text. When the string "Bush hid the facts", without quotes, was put in a new Notepad document and saved, closed, and reopened, the nonsensical sequence of the Chinese characters "畂桳栠摩琠敨映捡獴" would appear instead.

The C programming language has a set of functions implementing operations on strings in its standard library. Various operations, such as copying, concatenation, tokenization and searching are supported. For character strings, the standard library uses the convention that strings are null-terminated: a string of n characters is represented as an array of n + 1 elements, the last of which is a "NUL character" with numeric value 0.

Microsoft Windows code page 932, also called Windows-31J amongst other names, is the Microsoft Windows code page for the Japanese language, which is an extended variant of the Shift JIS Japanese character encoding. It contains standard 7-bit ASCII codes, and Japanese characters are indicated by the high bit of the first byte being set to 1. Some code points in this page require a second byte, so characters use either 8 or 16 bits for encoding.