Related Research Articles

Audio signal processing is a subfield of signal processing that is concerned with the electronic manipulation of audio signals. Audio signals are electronic representations of sound waves—longitudinal waves which travel through air, consisting of compressions and rarefactions. The energy contained in audio signals or sound level is typically measured in decibels. As audio signals may be represented in either digital or analog format, processing may occur in either domain. Analog processors operate directly on the electrical signal, while digital processors operate mathematically on its digital representation.

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder.

Digital signal processing (DSP) is the use of digital processing, such as by computers or more specialized digital signal processors, to perform a wide variety of signal processing operations. The digital signals processed in this manner are a sequence of numbers that represent samples of a continuous variable in a domain such as time, space, or frequency. In digital electronics, a digital signal is represented as a pulse train, which is typically generated by the switching of a transistor.

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis.

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital signal of speech in compressed form, using the information of a linear predictive model.

Vector quantization (VQ) is a classical quantization technique from signal processing that allows the modeling of probability density functions by the distribution of prototype vectors. It was originally used for data compression. It works by dividing a large set of points (vectors) into groups having approximately the same number of points closest to them. Each group is represented by its centroid point, as in k-means and some other clustering algorithms.

A harmonic sound is said to have a missing fundamental, suppressed fundamental, or phantom fundamental when its overtones suggest a fundamental frequency but the sound lacks a component at the fundamental frequency itself. The brain perceives the pitch of a tone not only by its fundamental frequency, but also by the periodicity implied by the relationship between the higher harmonics; we may perceive the same pitch even if the fundamental frequency is missing from a tone.

PSOLA is a digital signal processing technique used for speech processing and more specifically speech synthesis. It can be used to modify the pitch and duration of a speech signal. It was invented around 1986.

Kaito Kuroba, the true identity of the gentleman thief "Kaito Kid", is a fictional character and the main protagonist of the Magic Kaito manga series created by Gosho Aoyama. His father Toichi Kuroba was the original Kaito Kid before being killed by an unknown organization, while his mother was a former phantom thief known as the Phantom Lady. Kaito Kuroba then takes on the role of Kid after learning the organization is after a gemstone called Pandora and decides to find and destroy it.

The Fastest Fourier Transform in the West (FFTW) is a software library for computing discrete Fourier transforms (DFTs) developed by Matteo Frigo and Steven G. Johnson at the Massachusetts Institute of Technology.

In electrical engineering and applied mathematics, blind deconvolution is deconvolution without explicit knowledge of the impulse response function used in the convolution. This is usually achieved by making appropriate assumptions of the input to estimate the impulse response by analyzing the output. Blind deconvolution is not solvable without making assumptions on input and impulse response. Most of the algorithms to solve this problem are based on assumption that both input and impulse response live in respective known subspaces. However, blind deconvolution remains a very challenging non-convex optimization problem even with this assumption.

In applied mathematics, a bit-reversal permutation is a permutation of a sequence of items, where is a power of two. It is defined by indexing the elements of the sequence by the numbers from to , representing each of these numbers by its binary representation, and mapping each item to the item whose representation has the same bits in the reversed order.

Audio Analytic is a British company headquartered in Cambridge, England that has developed a patented sound recognition software framework called ai3, which provides technology with the ability to understand context through sound. This framework includes an embeddable software platform that can react to a range of sounds such as smoke alarms and carbon monoxide alarms, window breakage, infant crying and dogs barking.

In communications technology, the technique of compressed sensing (CS) may be applied to the processing of speech signals under certain conditions. In particular, CS can be used to reconstruct a sparse vector from a smaller number of measurements, provided the signal can be represented in sparse domain. "Sparse domain" refers to a domain in which only a few measurements have non-zero values.

Perceptual-based 3D sound localization is the application of knowledge of the human auditory system to develop 3D sound localization technology.

An audio coding format is a content representation format for storage or transmission of digital audio. Examples of audio coding formats include MP3, AAC, Vorbis, FLAC, and Opus. A specific software or hardware implementation capable of audio compression and decompression to/from a specific audio coding format is called an audio codec; an example of an audio codec is LAME, which is one of several different codecs which implements encoding and decoding audio in the MP3 audio coding format in software.

Cepstral mean and variance normalization (CMVN) is a computationally efficient normalization technique for robust speech recognition. The performance of CMVN is known to degrade for short utterances. This is due to insufficient data for parameter estimation and loss of discriminable information as all utterances are forced to have zero mean and unit variance.

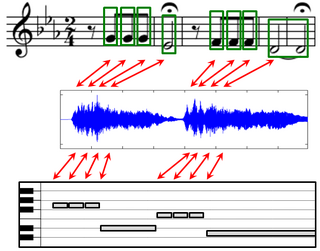

Music can be described and represented in many different ways including sheet music, symbolic representations, and audio recordings. For each of these representations, there may exist different versions that correspond to the same musical work. The general goal of music alignment is to automatically link the various data streams, thus interrelating the multiple information sets related to a given musical work. More precisely, music alignment is taken to mean a procedure which, for a given position in one representation of a piece of music, determines the corresponding position within another representation. In the figure on the right, such an alignment is visualized by the red bidirectional arrows. Such synchronization results form the basis for novel interfaces that allow users to access, search, and browse musical content in a convenient way.

Enhanced Voice Services (EVS) is a superwideband speech audio coding standard that was developed for VoLTE. It offers up to 20 kHz audio bandwidth and has high robustness to delay jitter and packet losses due to its channel aware coding and improved packet loss concealment. It has been developed in 3GPP and is described in 3GPP TS 26.441. The application areas of EVS consist of improved telephony and teleconferencing, audiovisual conferencing services, and streaming audio. Source code of both decoder and encoder in ANSI C is available as 3GPP TS 26.442 and is being updated regularly. Samsung uses the term HD+ when doing a call using EVS.

Generative audio refers to the creation of audio files from databases of audio clips. This technology differs from AI voices such as Apple's Siri or Amazon's Alexa, which use a collection of fragments that are stitched together on demand.

References

- ↑ Abe, M.; Nakamura, S.; Shikano, K.; Kuwabara, H. (April 1988). "Voice conversion through vector quantization". ICASSP-88., International Conference on Acoustics, Speech, and Signal Processing. pp. 655–658 vol.1. doi:10.1109/ICASSP.1988.196671. S2CID 62203146.

- ↑ Hui Ye; Young, S. (May 2004). "High quality voice morphing". 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing. Vol. 1. pp. I–9. doi:10.1109/ICASSP.2004.1325909. ISBN 0-7803-8484-9. S2CID 14019057.

- ↑ Ahmed, I.; Sadiq, A.; Atif, M.; Naseer, M.; Adnan, M. (February 2018). "Voice morphing: An illusion or reality". 2018 International Conference on Advancements in Computational Sciences (ICACS). pp. 1–6. doi:10.1109/ICACS.2018.8333282. ISBN 978-1-5386-2172-1. S2CID 4775311.

- ↑ Brayne, Sarah (2018). "Visual Data and the Law". Law & Social Inquiry. 43 (4): 1149–1163. doi:10.1111/lsi.12373. S2CID 150076575.