Related Research Articles

Subsumption architecture is a reactive robotic architecture heavily associated with behavior-based robotics which was very popular in the 1980s and 90s. The term was introduced by Rodney Brooks and colleagues in 1986. Subsumption has been widely influential in autonomous robotics and elsewhere in real-time AI.

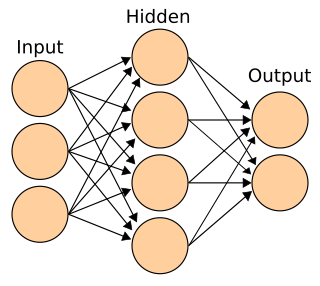

Connectionism is the name of an approach to the study of human mental processes and cognition that utilizes mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many 'waves' since its beginnings.

In artificial intelligence, symbolic artificial intelligence is the term for the collection of all methods in artificial intelligence research that are based on high-level symbolic (human-readable) representations of problems, logic and search. Symbolic AI used tools such as logic programming, production rules, semantic nets and frames, and it developed applications such as knowledge-based systems, symbolic mathematics, automated theorem provers, ontologies, the semantic web, and automated planning and scheduling systems. The Symbolic AI paradigm led to seminal ideas in search, symbolic programming languages, agents, multi-agent systems, the semantic web, and the strengths and limitations of formal knowledge and reasoning systems.

A cognitive model is an approximation of one or more cognitive processes in humans or other animals for the purposes of comprehension and prediction. There are many types of cognitive models, and they can range from box-and-arrow diagrams to a set of equations to software programs that interact with the same tools that humans use to complete tasks. In terms of information processing, cognitive modeling is modeling of human perception, reasoning, memory and action.

Behavior-based robotics (BBR) or behavioral robotics is an approach in robotics that focuses on robots that exhibit complex-appearing behaviors despite having little internal variable state to model their immediate environment, mostly correcting their actions gradually via sensory-motor links.
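As a rough illustration of a sensory-motor link with almost no internal state, the sketch below steers a simulated robot toward the centre of a corridor using only two range readings. The corridor model, the function names `turn_rate` and `simulate`, and the gain value are assumptions made for this example; they are not taken from any particular BBR system.

```python
from typing import List


def turn_rate(left_range: float, right_range: float, gain: float = 0.25) -> float:
    """Map two range readings directly to a steering correction.

    Positive output means "move left". No map or model of the world is kept.
    """
    return gain * (left_range - right_range)


def simulate(offset: float, steps: int = 20, gain: float = 0.25) -> List[float]:
    """Simulate a robot in a hypothetical corridor of half-width 1.0.

    `offset` is the lateral displacement from the centre line (positive = left).
    For simplicity, the steering correction is applied directly to the offset.
    """
    history = [offset]
    for _ in range(steps):
        left_range = 1.0 - offset   # clearance to the left wall
        right_range = 1.0 + offset  # clearance to the right wall
        offset += turn_rate(left_range, right_range, gain)
        history.append(offset)
    return history


if __name__ == "__main__":
    for step, value in enumerate(simulate(0.8, steps=5)):
        print(step, round(value, 4))
```

With the assumed gain, each step halves the remaining offset, so the robot drifts back to the centre gradually instead of planning a path.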

Soar is a cognitive architecture, originally created by John Laird, Allen Newell, and Paul Rosenbloom at Carnegie Mellon University. It is now maintained and developed by John Laird's research group at the University of Michigan.

ACT-R is a cognitive architecture mainly developed by John Robert Anderson and Christian Lebiere at Carnegie Mellon University. Like any cognitive architecture, ACT-R aims to define the basic and irreducible cognitive and perceptual operations that enable the human mind. In theory, each task that humans can perform should consist of a series of these discrete operations.

A cognitive architecture refers both to a theory about the structure of the human mind and to a computational instantiation of such a theory used in the fields of artificial intelligence (AI) and computational cognitive science. The formalized models can be used to further refine a comprehensive theory of cognition and as a useful artificial intelligence program. Successful cognitive architectures include ACT-R and Soar. The research on cognitive architectures as software instantiations of cognitive theories was initiated by Allen Newell in 1990.

Computational cognition is the study of the computational basis of learning and inference by mathematical modeling, computer simulation, and behavioral experiments. In psychology, it is an approach that develops computational models based on experimental results. It seeks to understand the basis of human information processing. Early on, computational cognitive scientists sought to bring back and create a scientific form of Brentano's psychology.

Cognitive Robotics or Cognitive Technology is a subfield of robotics concerned with endowing a robot with intelligent behavior by providing it with a processing architecture that will allow it to learn and reason about how to behave in response to complex goals in a complex world. Cognitive robotics may be considered the engineering branch of embodied cognitive science and embodied embedded cognition, consisting of Robotic Process Automation, Artificial Intelligence, Machine Learning, Deep Learning, Optical Character Recognition, Image Processing, Process Mining, Analytics, Software Development and System Integration.

Action selection is a way of characterizing the most basic problem of intelligent systems: what to do next. In artificial intelligence and computational cognitive science, "the action selection problem" is typically associated with intelligent agents and animats—artificial systems that exhibit complex behaviour in an agent environment. The term is also sometimes used in ethology or animal behavior.

In artificial intelligence, reactive planning denotes a group of techniques for action selection by autonomous agents. These techniques differ from classical planning in two aspects. First, they operate in a timely fashion and hence can cope with highly dynamic and unpredictable environments. Second, they compute just one next action in every instant, based on the current context. Reactive planners often exploit reactive plans, which are stored structures describing the agent's priorities and behaviour. The term reactive planning goes back to at least 1988, and is synonymous with the more modern term dynamic planning.
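The two properties above can be sketched as a stored, priority-ordered rule list that is consulted afresh at every instant and yields exactly one action. This is a minimal sketch, not a specific published planner: the rule list `REACTIVE_PLAN`, the context keys `obstacle_distance` and `battery`, the thresholds, and the action names are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Context = Dict[str, float]
Rule = Tuple[Callable[[Context], bool], str]

# Hypothetical reactive plan: rules ordered from highest to lowest priority.
REACTIVE_PLAN: List[Rule] = [
    (lambda c: c["obstacle_distance"] < 0.3, "turn_away"),
    (lambda c: c["battery"] < 0.2, "seek_charger"),
    (lambda c: True, "wander"),  # default when nothing more urgent applies
]


def select_action(context: Context, plan: List[Rule] = REACTIVE_PLAN) -> str:
    """Return the single next action for the current context.

    The first rule whose condition holds wins; no multi-step plan is built.
    """
    for condition, action in plan:
        if condition(context):
            return action
    raise ValueError("plan has no applicable rule")


if __name__ == "__main__":
    print(select_action({"obstacle_distance": 0.1, "battery": 0.9}))
    print(select_action({"obstacle_distance": 2.0, "battery": 0.1}))
    print(select_action({"obstacle_distance": 2.0, "battery": 0.9}))
```

Because selection is a single pass over a short list, it can be rerun on every sensor update, which is what lets this style of controller keep up with a rapidly changing environment.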

Embodied cognitive science is an interdisciplinary field of research, the aim of which is to explain the mechanisms underlying intelligent behavior. It comprises three main methodologies: the modeling of psychological and biological systems in a holistic manner that considers the mind and body as a single entity; the formation of a common set of general principles of intelligent behavior; and the experimental use of robotic agents in controlled environments.

Neurorobotics is the combined study of neuroscience, robotics, and artificial intelligence. It is the science and technology of embodied autonomous neural systems. Neural systems include brain-inspired algorithms, computational models of biological neural networks and actual biological systems. Such neural systems can be embodied in machines with mechanical or any other form of physical actuation. This includes robots and prosthetic or wearable systems, but also micro-machines at smaller scales and furniture and infrastructure at larger scales.

Psi-theory, developed by Dietrich Dörner at the University of Bamberg, is a systemic psychological theory covering human action regulation, intention selection and emotion. It models the human mind as an information processing agent, controlled by a set of basic physiological, social and cognitive drives. Perceptual and cognitive processing are directed and modulated by these drives, which allow the autonomous establishment and pursuit of goals in an open environment.

MIBE architecture is a behavior-based robot architecture developed at the Artificial Intelligence and Robotics Lab of Politecnico di Milano by Fabio La Daga and Andrea Bonarini in 1998. MIBE architecture is based on the idea of the animat and is derived from subsumption architecture, developed earlier by Rodney Brooks and colleagues at MIT in 1986.

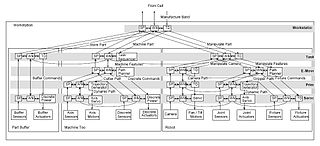

Real-time Control System (RCS) is a reference model architecture, suitable for many software-intensive, real-time computing control problem domains. It defines the types of functions needed in a real-time intelligent control system, and how these functions relate to each other.

The LIDA cognitive architecture is an integrated artificial cognitive system that attempts to model a broad spectrum of cognition in biological systems, from low-level perception/action to high-level reasoning. Developed primarily by Stan Franklin and colleagues at the University of Memphis, the LIDA architecture is empirically grounded in cognitive science and cognitive neuroscience. In addition to providing hypotheses to guide further research, the architecture can support control structures for software agents and robots. Providing plausible explanations for many cognitive processes, the LIDA conceptual model is also intended as a tool with which to think about how minds work.

In artificial intelligence research, the situated approach builds agents that are designed to behave successfully in their environment. This requires designing AI "from the bottom-up" by focussing on the basic perceptual and motor skills required to survive. The situated approach gives a much lower priority to abstract reasoning or problem-solving skills.

FORR is a cognitive architecture for learning and problem solving inspired by Herbert A. Simon's ideas of bounded rationality and satisficing. It was first developed in the early 1990s at the City University of New York. It has been used in game playing, robot pathfinding, recreational park design, spoken dialog systems, and solving NP-hard constraint satisfaction problems, and is general enough for many problem solving applications.
