An anankastic conditional is a grammatical construction of the form

where Y is required in order to get X. For example:

Not all conditionals of this form have an anankastic interpretation:

where thinking about something else is not required in order to eat chocolate, but is rather advice on how to avoid eating chocolate. [2]

The term comes from the Greek ἀναγκαστικός "compulsory", from ἀνάγκη "necessity".

Anankastic conditionals have been argued to pose problems for compositional semantics. [3] Other semanticists have argued that anankastic conditionals can be interpreted the same way as "regular, hypothetical, indicative conditionals". [4]

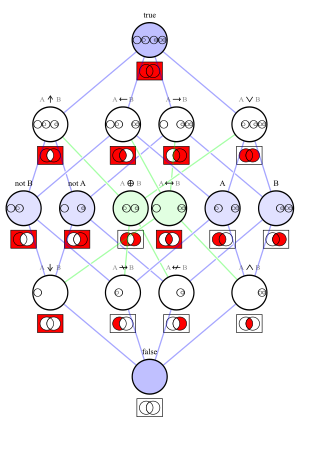

In logic, a logical connective is a logical constant. Connectives can be used to connect logical formulas. For instance, in the syntax of propositional logic, a binary connective can be used to join two atomic formulas, rendering a complex formula.

In linguistics and related fields, pragmatics is the study of how context contributes to meaning. The field of study evaluates how human language is utilized in social interactions, as well as the relationship between the interpreter and the interpreted. Linguists who specialize in pragmatics are called pragmaticians. The field has been represented since 1986 by the International Pragmatics Association (IPrA).

A proposition is a central concept in the philosophy of language, semantics, logic, and related fields, often characterized as the primary bearer of truth or falsity. Propositions are also often characterized as being the kind of thing that declarative sentences denote. For instance the sentence "The sky is blue" denotes the proposition that the sky is blue. However, crucially, propositions are not themselves linguistic expressions. For instance, the English sentence "Snow is white" denotes the same proposition as the German sentence "Schnee ist weiß" even though the two sentences are not the same. Similarly, propositions can also be characterized as the objects of belief and other propositional attitudes. For instance if one believes that the sky is blue, what one believes is the proposition that the sky is blue. A proposition can also be thought of as a kind of idea: Collins Dictionary has a definition for proposition as "a statement or an idea that people can consider or discuss whether it is true."

In pragmatics, a subdiscipline of linguistics, an implicature is something the speaker suggests or implies with an utterance, even though it is not literally expressed. Implicatures can aid in communicating more efficiently than by explicitly saying everything we want to communicate. The philosopher H. P. Grice coined the term in 1975. Grice distinguished conversational implicatures, which arise because speakers are expected to respect general rules of conversation, and conventional ones, which are tied to certain words such as "but" or "therefore". Take for example the following exchange:

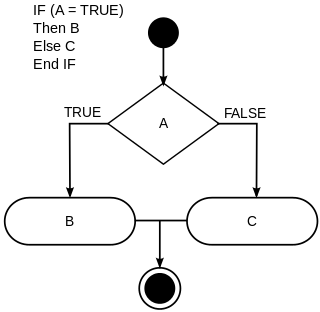

In computer science, conditionals are programming language constructs that perform different computations or actions or return different values depending on the value of a Boolean expression, called a condition.
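As a minimal sketch of such a construct, the following Python function picks one of three return values depending on Boolean conditions. The function name `classify` and the temperature thresholds are hypothetical and chosen only for illustration.

```python
def classify(temperature_c: float) -> str:
    """Return a label selected by Boolean conditions on the input.

    The name and the thresholds are illustrative assumptions.
    """
    if temperature_c < 0:        # first condition
        return "freezing"
    elif temperature_c < 25:     # checked only if the first condition is false
        return "mild"
    else:                        # fallback when no condition holds
        return "hot"
```

Each branch is taken only when its condition evaluates to true and every earlier condition evaluated to false.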

Counterfactual conditionals are conditional sentences which discuss what would have been true under different circumstances, e.g. "If Peter believed in ghosts, he would be afraid to be here." Counterfactuals are contrasted with indicatives, which are generally restricted to discussing open possibilities. Counterfactuals are characterized grammatically by their use of fake tense morphology, which some languages use in combination with other kinds of morphology including aspect and mood.

Conditional sentences are natural language sentences that express that one thing is contingent on something else, e.g. "If it rains, the picnic will be cancelled." They are so called because the impact of the main clause of the sentence is conditional on the dependent clause. A full conditional thus contains two clauses: a dependent clause called the antecedent, which expresses the condition, and a main clause called the consequent expressing the result.

In computer programming, the ternary conditional operator is a ternary operator that is part of the syntax for basic conditional expressions in several programming languages. It is commonly referred to as the conditional operator, ternary if, or inline if. An expression a ? b : c evaluates to b if the value of a is true, and otherwise to c. One can read it aloud as "if a then b otherwise c". The form a ? b : c is the most common, but alternative syntaxes do exist; for example, Raku uses the syntax a ?? b !! c to avoid confusion with the infix operators ? and !, whereas in Visual Basic .NET, it instead takes the form If(a, b, c).
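Python is another language with an alternative syntax: its conditional expression is written `b if a else c`. The sketch below uses it in a hypothetical helper, `sign_label`, which is not taken from any library.

```python
def sign_label(n: int) -> str:
    """Return a label using Python's conditional expression.

    `sign_label` is a hypothetical name used only for illustration.
    """
    # Equivalent in spirit to `n >= 0 ? "non-negative" : "negative"`
    # in languages that use the a ? b : c form.
    return "non-negative" if n >= 0 else "negative"
```

Only the selected branch is evaluated, so the expression can safely guard an operand that would fail in the other case.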

Short-circuit evaluation, minimal evaluation, or McCarthy evaluation is the semantics of some Boolean operators in some programming languages in which the second argument is executed or evaluated only if the first argument does not suffice to determine the value of the expression: when the first argument of the AND function evaluates to false, the overall value must be false; and when the first argument of the OR function evaluates to true, the overall value must be true.
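The behaviour can be observed in Python, whose `and` and `or` operators short-circuit. The sketch below records which operands are actually evaluated; the helper names `traced` and `evaluated_operands` are hypothetical.

```python
def traced(name: str, value: bool, log: list) -> bool:
    """Record that an operand was evaluated, then return its value."""
    log.append(name)
    return value


def evaluated_operands(op: str, first: bool, second: bool) -> list:
    """Return the names of the operands that were evaluated for `op`."""
    log: list = []
    if op == "and":
        # The second operand runs only if the first is true.
        traced("first", first, log) and traced("second", second, log)
    elif op == "or":
        # The second operand runs only if the first is false.
        traced("first", first, log) or traced("second", second, log)
    else:
        raise ValueError(f"unsupported operator: {op}")
    return log
```

For example, `evaluated_operands("and", False, True)` reports that only the first operand was evaluated, because a false first argument already determines the value of the conjunction.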

Truth-conditional semantics is an approach to the semantics of natural language that sees the meaning of sentences as being the same as, or reducible to, their truth conditions. This approach to semantics is principally associated with Donald Davidson, and attempts to carry out for the semantics of natural language what Tarski's semantic theory of truth achieves for the semantics of logic.

Construction grammar is a family of theories within the field of cognitive linguistics which posit that constructions, or learned pairings of linguistic patterns with meanings, are the fundamental building blocks of human language. Constructions include words, morphemes, fixed expressions and idioms, and abstract grammatical rules such as the passive voice or the ditransitive. Any linguistic pattern is considered to be a construction as long as some aspect of its form or its meaning cannot be predicted from its component parts, or from other constructions that are recognized to exist. In construction grammar, every utterance is understood to be a combination of multiple different constructions, which together specify its precise meaning and form.

Cognitive semantics is part of the cognitive linguistics movement. Semantics is the study of linguistic meaning. Cognitive semantics holds that language is part of a more general human cognitive ability, and can therefore only describe the world as people conceive of it. The implication is that different linguistic communities conceive of simple things and processes in the world differently, not necessarily that there is some difference between a person's conceptual world and the real world.

In linguistics and philosophy, a presupposition is an implicit assumption about the world or background belief relating to an utterance whose truth is taken for granted in discourse. Examples of presuppositions include:

In formal linguistics, discourse representation theory (DRT) is a framework for exploring meaning under a formal semantics approach. One of the main differences between DRT-style approaches and traditional Montagovian approaches is that DRT includes a level of abstract mental representations within its formalism, which gives it an intrinsic ability to handle meaning across sentence boundaries. DRT was created by Hans Kamp in 1981. A very similar theory was developed independently by Irene Heim in 1982, under the name of File Change Semantics (FCS). Discourse representation theories have been used to implement semantic parsers and natural language understanding systems.

In philosophy—more specifically, in its sub-fields semantics, semiotics, philosophy of language, metaphysics, and metasemantics—meaning "is a relationship between two sorts of things: signs and the kinds of things they intend, express, or signify".

In linguistic typology, object–subject–verb (OSV) or object–agent–verb (OAV) is a classification of languages, based on whether the structure predominates in pragmatically neutral expressions. An example of this would be "Oranges Sam ate".

In semantics, a donkey sentence is a sentence containing a pronoun which is semantically bound but syntactically free. Donkey sentences are a classic puzzle in formal semantics and philosophy of language because they are fully grammatical and yet defy straightforward attempts to generate their formal language equivalents. In order to explain how speakers are able to understand them, semanticists have proposed a variety of formalisms including systems of dynamic semantics such as discourse representation theory. Their name comes from the example sentence "Every farmer who owns a donkey beats it", in which the pronoun "it" acts as a donkey pronoun because it is semantically but not syntactically bound by the indefinite noun phrase "a donkey". The phenomenon is known as donkey anaphora.

Dynamic semantics is a framework in logic and natural language semantics that treats the meaning of a sentence as its potential to update a context. In static semantics, knowing the meaning of a sentence amounts to knowing when it is true; in dynamic semantics, knowing the meaning of a sentence means knowing "the change it brings about in the information state of anyone who accepts the news conveyed by it." In dynamic semantics, sentences are mapped to functions called context change potentials, which take an input context and return an output context. Dynamic semantics was originally developed by Irene Heim and Hans Kamp in 1981 to model anaphora, but has since been applied widely to phenomena including presupposition, plurals, questions, discourse relations, and modality.
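The idea of a context change potential can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the formalism of any particular theory: a context is modelled as a set of possible worlds, a world as the set of atomic propositions true in it, and an update as the elimination of incompatible worlds. All names (`all_worlds`, `assert_atom`, `negate`, `sequence`) are hypothetical.

```python
from itertools import combinations
from typing import Callable, FrozenSet, Iterable

World = FrozenSet[str]            # atoms that are true in the world (assumption)
Context = FrozenSet[World]        # worlds still compatible with what was said
CCP = Callable[[Context], Context]  # context change potential


def all_worlds(atoms: Iterable[str]) -> Context:
    """Build the initial context: every combination of true atoms."""
    atoms = sorted(atoms)
    return frozenset(
        frozenset(combo)
        for size in range(len(atoms) + 1)
        for combo in combinations(atoms, size)
    )


def assert_atom(atom: str) -> CCP:
    """Update that keeps only the worlds in which `atom` is true."""
    return lambda ctx: frozenset(w for w in ctx if atom in w)


def negate(ccp: CCP) -> CCP:
    """Update that removes the worlds that would survive `ccp`."""
    return lambda ctx: ctx - ccp(ctx)


def sequence(*ccps: CCP) -> CCP:
    """Apply updates in order, feeding each output context to the next."""
    def update(ctx: Context) -> Context:
        for ccp in ccps:
            ctx = ccp(ctx)
        return ctx
    return update
```

Starting from `all_worlds({"rain", "wind"})`, applying `sequence(assert_atom("rain"), negate(assert_atom("wind")))` leaves a single world in which it rains and there is no wind. Each sentence is thus treated as a function from an input context to an output context.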

Formal semantics is the study of grammatical meaning in natural languages using formal tools from logic, mathematics and theoretical computer science. It is an interdisciplinary field, sometimes regarded as a subfield of both linguistics and philosophy of language. It provides accounts of what linguistic expressions mean and how their meanings are composed from the meanings of their parts. The enterprise of formal semantics can be thought of as that of reverse-engineering the semantic components of natural languages' grammars.

In formal semantics and pragmatics, modal subordination is the phenomenon whereby a modal expression is interpreted relative to another modal expression to which it is not syntactically subordinate. For instance, the following example does not assert that the birds will in fact be hungry, but rather that hungry birds would be a consequence of Joan forgetting to fill the birdfeeder. This interpretation was unexpected in early theories of the syntax-semantics interface since the content concerning the birds' hunger occurs in a separate sentence from the if-clause.