Control theory is a field of mathematics that deals with the control of dynamical systems in engineered processes and machines. The objective is to develop a model or algorithm governing the application of system inputs to drive the system to a desired state, while minimizing any delay, overshoot, or steady-state error and ensuring a level of control stability, often with the aim of achieving a degree of optimality.

In mathematics and science, a nonlinear system is a system in which the change of the output is not proportional to the change of the input. Nonlinear problems are of interest to engineers, biologists, physicists, mathematicians, and many other scientists because most systems are inherently nonlinear in nature. Nonlinear dynamical systems, describing changes in variables over time, may appear chaotic, unpredictable, or counterintuitive, contrasting with much simpler linear systems.

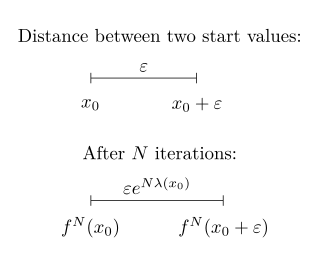

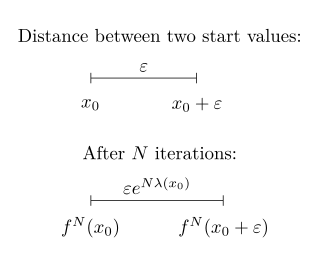

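In mathematics, the Lyapunov exponent or Lyapunov characteristic exponent of a dynamical system is a quantity that characterizes the rate of separation of infinitesimally close trajectories. Quantitatively, two trajectories in phase space with initial separation vector $\delta\mathbf{Z}_0$ diverge (as long as the linearized approximation holds) at a rate given by $|\delta\mathbf{Z}(t)| \approx e^{\lambda t}\,|\delta\mathbf{Z}_0|$, where $\lambda$ is the Lyapunov exponent.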
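As a rough illustration of the exponent as an average separation rate, the sketch below estimates it for the logistic map $x \mapsto r x (1 - x)$ by averaging $\ln|f'(x)|$ along an orbit. The map, the parameter values, and the function name `logistic_lyapunov` are assumptions chosen for illustration and do not come from the text above.

```python
import math

def logistic_lyapunov(r, x0=0.2, n_transient=1000, n_steps=100_000):
    """Estimate the Lyapunov exponent of x -> r*x*(1-x) as the orbit average of ln|f'(x)|."""
    x = x0
    # Discard a transient so the average is taken on the long-run behavior.
    for _ in range(n_transient):
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n_steps):
        deriv = abs(r * (1.0 - 2.0 * x))        # |f'(x)| for the logistic map
        total += math.log(max(deriv, 1e-300))   # guard against log(0)
        x = r * x * (1.0 - x)
    return total / n_steps

lam_stable = logistic_lyapunov(2.5)   # orbit settles on a stable fixed point
lam_chaotic = logistic_lyapunov(3.9)  # orbit is chaotic for this parameter
print(lam_stable, lam_chaotic)
```

A negative estimate means nearby orbits converge, and a positive one means they separate exponentially on average. For `r = 2.5` the orbit settles on the fixed point $x^* = 0.6$, where $|f'(x^*)| = 0.5$, so the estimate should be close to $\ln 0.5$.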

H∞ methods are used in control theory to synthesize controllers to achieve stabilization with guaranteed performance. To use H∞ methods, a control designer expresses the control problem as a mathematical optimization problem and then finds the controller that solves this optimization. H∞ techniques have the advantage over classical control techniques in that they are readily applicable to problems involving multivariate systems with cross-coupling between channels. Their disadvantages include the level of mathematical understanding needed to apply them successfully and the need for a reasonably good model of the system to be controlled. It is important to keep in mind that the resulting controller is only optimal with respect to the prescribed cost function and does not necessarily represent the best controller in terms of the usual performance measures used to evaluate controllers, such as settling time or energy expended. Also, nonlinear constraints such as saturation are generally not well handled. These methods were introduced into control theory in the late 1970s and early 1980s by George Zames, J. William Helton, and Allen Tannenbaum.

Various types of stability may be discussed for the solutions of differential equations or difference equations describing dynamical systems. The most important type is that concerning the stability of solutions near a point of equilibrium. This may be discussed by the theory of Aleksandr Lyapunov. In simple terms, if the solutions that start out near an equilibrium point $x_e$ stay near $x_e$ forever, then $x_e$ is Lyapunov stable. More strongly, if $x_e$ is Lyapunov stable and all solutions that start out near $x_e$ converge to $x_e$, then $x_e$ is asymptotically stable. The notion of exponential stability guarantees a minimal rate of decay, i.e., an estimate of how quickly the solutions converge. The idea of Lyapunov stability can be extended to infinite-dimensional manifolds, where it is known as structural stability, which concerns the behavior of different but "nearby" solutions to differential equations. Input-to-state stability (ISS) applies Lyapunov notions to systems with inputs.

In control systems, sliding mode control (SMC) is a nonlinear control method that alters the dynamics of a nonlinear system by applying a discontinuous control signal that forces the system to "slide" along a cross-section of the system's normal behavior. The state-feedback control law is not a continuous function of time. Instead, it can switch from one continuous structure to another based on the current position in the state space. Hence, sliding mode control is a variable structure control method. The multiple control structures are designed so that trajectories always move toward an adjacent region with a different control structure, and so the ultimate trajectory will not exist entirely within one control structure. Instead, it will slide along the boundaries of the control structures. The motion of the system as it slides along these boundaries is called a sliding mode, and the geometrical locus consisting of the boundaries is called the sliding (hyper)surface. In the context of modern control theory, any variable structure system, like a system under SMC, may be viewed as a special case of a hybrid dynamical system, as the system both flows through a continuous state space and moves through different discrete control modes.
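The switching behavior can be sketched on a toy problem. The example below assumes a double integrator $\dot{x}_1 = x_2$, $\dot{x}_2 = u + d(t)$ with a bounded disturbance $d$, a sliding surface $s = x_2 + c\,x_1$, and the discontinuous law $u = -c\,x_2 - k\,\mathrm{sign}(s)$. The plant, the gains, and the forward-Euler integration are all illustrative assumptions rather than anything prescribed by the text.

```python
import math

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def simulate_smc(x1=1.0, x2=0.0, c=1.0, k=2.0, dt=1e-3, n_steps=10_000):
    """Forward-Euler simulation of a double integrator under sliding mode control."""
    for step in range(n_steps):
        t = step * dt
        s = x2 + c * x1                 # sliding variable; s = 0 is the sliding surface
        u = -c * x2 - k * sign(s)       # discontinuous control law (switches on sign of s)
        d = 0.5 * math.sin(t)           # bounded disturbance, |d| <= 0.5 < k
        x1, x2 = x1 + dt * x2, x2 + dt * (u + d)
    return x1, x2, x2 + c * x1

x1_final, x2_final, s_final = simulate_smc()
print(x1_final, x2_final, s_final)
```

With these choices $\dot{s} = -k\,\mathrm{sign}(s) + d$, so $s$ is driven to zero in finite time whenever $k$ exceeds the disturbance bound. After that the state moves along $s = 0$, where $\dot{x}_1 = -c\,x_1$. In discrete time the control chatters in a thin band around the surface instead of staying on it exactly.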

In control engineering, a state-space representation is a mathematical model of a physical system as a set of input, output and state variables related by first-order differential equations or difference equations. State variables are variables whose values evolve over time in a way that depends on the values they have at any given time and on the externally imposed values of input variables. Output variables’ values depend on the values of the state variables.
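A minimal sketch of the discrete-time case, assuming a single-input, single-output model $x_{k+1} = A x_k + B u_k$, $y_k = C x_k + D u_k$ stored as plain Python lists. The function name `simulate` and the example numbers are hypothetical.

```python
def simulate(A, B, C, D, x0, inputs):
    """Simulate x[k+1] = A x[k] + B u[k], y[k] = C x[k] + D u[k] for scalar u and y.

    A is an n-by-n list of lists, B and C are length-n lists, D is a scalar.
    """
    x = list(x0)
    n = len(x)
    outputs = []
    for u in inputs:
        # The output depends on the current state (and directly on the input via D).
        y = sum(C[i] * x[i] for i in range(n)) + D * u
        outputs.append(y)
        # The next state depends on the current state and the imposed input.
        x = [sum(A[i][j] * x[j] for j in range(n)) + B[i] * u for i in range(n)]
    return outputs

# One-state example driven by a unit step input.
step_response = simulate([[0.5]], [1.0], [1.0], 0.0, [0.0], [1.0] * 4)
print(step_response)  # [0.0, 1.0, 1.5, 1.75]
```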

Observability is a measure of how well internal states of a system can be inferred from knowledge of its external outputs.
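For linear time-invariant systems, a standard test is the Kalman rank condition: the pair $(A, C)$ is observable when the stacked matrix $[C;\ CA;\ \dots;\ CA^{n-1}]$ has rank $n$. The sketch below assumes that linear setting and uses small hand-written helpers (`mat_mul`, `rank`, `is_observable`), which are illustrative names and not a library API.

```python
def mat_mul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def rank(M, tol=1e-9):
    """Numerical rank via Gaussian elimination with partial pivoting."""
    M = [row[:] for row in M]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        if r == rows:
            break
        pivot = max(range(r, rows), key=lambda i: abs(M[i][c]))
        if abs(M[pivot][c]) < tol:
            continue  # no usable pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def observability_matrix(A, C):
    """Stack C, CA, ..., CA^(n-1) row-wise."""
    stacked, block = [], [row[:] for row in C]
    for _ in range(len(A)):
        stacked.extend(block)
        block = mat_mul(block, A)
    return stacked

def is_observable(A, C):
    return rank(observability_matrix(A, C)) == len(A)

# Double integrator: state = (position, velocity).
A = [[0.0, 1.0], [0.0, 0.0]]
print(is_observable(A, [[1.0, 0.0]]))  # measuring position: True
print(is_observable(A, [[0.0, 1.0]]))  # measuring velocity only: False
```

Measuring position lets the velocity be inferred from how the position changes. Measuring only velocity leaves the absolute position undetermined, which the rank test reports as unobservable.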

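In control theory, the discrete Lyapunov equation is of the form $A X A^{H} - X + Q = 0$, where $Q$ is a Hermitian matrix and $A^{H}$ is the conjugate transpose of $A$.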
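For a real matrix $A$ whose eigenvalues all lie strictly inside the unit circle, the equation $A X A^{T} - X + Q = 0$ can be solved by iterating $X \leftarrow A X A^{T} + Q$, which sums the convergent series $\sum_k A^k Q (A^{T})^k$. The sketch below assumes that real, stable case. The helper names are hypothetical, and a production solver would normally use a direct method.

```python
def mat_mul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def transpose(X):
    return [list(col) for col in zip(*X)]

def solve_discrete_lyapunov(A, Q, n_iter=500):
    """Fixed-point iteration X <- A X A^T + Q (assumes spectral radius of A < 1)."""
    At = transpose(A)
    n = len(Q)
    X = [row[:] for row in Q]
    for _ in range(n_iter):
        AXAt = mat_mul(mat_mul(A, X), At)
        X = [[AXAt[i][j] + Q[i][j] for j in range(n)] for i in range(n)]
    return X

def residual(A, X, Q):
    """Largest absolute entry of A X A^T - X + Q."""
    AXAt = mat_mul(mat_mul(A, X), transpose(A))
    n = len(Q)
    return max(abs(AXAt[i][j] - X[i][j] + Q[i][j]) for i in range(n) for j in range(n))

A = [[0.5, 0.1], [0.0, 0.3]]
Q = [[1.0, 0.0], [0.0, 1.0]]
X = solve_discrete_lyapunov(A, Q)
print(X, residual(A, X, Q))
```

In the scalar case $A = 0.5$, $Q = 1$, the equation reduces to $0.25\,X - X + 1 = 0$, so $X = 4/3$, which gives a quick sanity check.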

In mathematical optimization, the Karush–Kuhn–Tucker (KKT) conditions, also known as the Kuhn–Tucker conditions, are first derivative tests for a solution in nonlinear programming to be optimal, provided that some regularity conditions are satisfied.
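For reference, the conditions can be written out for a problem in the common standard form, with objective $f$, inequality constraints $g_i$, and equality constraints $h_j$. The notation below (multipliers $\mu_i$ and $\lambda_j$, candidate point $x^*$) is one conventional choice and is an assumption here, since the text above does not fix a notation.

```latex
\begin{aligned}
&\text{Problem: } \min_x f(x) \quad \text{subject to } g_i(x) \le 0,\ i = 1,\dots,m, \quad h_j(x) = 0,\ j = 1,\dots,p \\
&\text{Stationarity: } \nabla f(x^*) + \sum_{i=1}^{m} \mu_i \nabla g_i(x^*) + \sum_{j=1}^{p} \lambda_j \nabla h_j(x^*) = 0 \\
&\text{Primal feasibility: } g_i(x^*) \le 0, \quad h_j(x^*) = 0 \\
&\text{Dual feasibility: } \mu_i \ge 0 \\
&\text{Complementary slackness: } \mu_i\, g_i(x^*) = 0
\end{aligned}
```

With no inequality constraints, these reduce to the classical Lagrange multiplier conditions.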

Nonlinear control theory is the area of control theory which deals with systems that are nonlinear, time-variant, or both. Control theory is an interdisciplinary branch of engineering and mathematics that is concerned with the behavior of dynamical systems with inputs, and how to modify the output by changes in the input using feedback, feedforward, or signal filtering. The system to be controlled is called the "plant". One way to make the output of a system follow a desired reference signal is to compare the output of the plant to the desired output, and provide feedback to the plant to modify the output to bring it closer to the desired output.

LaSalle's invariance principle is a criterion for the asymptotic stability of an autonomous dynamical system.

In control theory, a control-Lyapunov function (CLF) is an extension of the idea of Lyapunov function to systems with control inputs. The ordinary Lyapunov function is used to test whether a dynamical system is (Lyapunov) stable or asymptotically stable. Lyapunov stability means that if the system starts in a state $x \neq 0$ in some domain $D$, then the state will remain in $D$ for all time. For asymptotic stability, the state is also required to converge to $x = 0$. A control-Lyapunov function is used to test whether a system is asymptotically stabilizable, that is, whether for any state $x$ there exists a control $u(x, t)$ such that the system can be brought to the zero state asymptotically by applying the control $u$.

In mathematics, a vector measure is a function defined on a family of sets and taking vector values satisfying certain properties. It is a generalization of the concept of finite measure, which takes nonnegative real values only.

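Numerical continuation is a method of computing approximate solutions of a system of parameterized nonlinear equations, $F(\mathbf{u}, \lambda) = 0$. The parameter $\lambda$ is usually a real scalar, and the solution $\mathbf{u}$ an $n$-vector.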
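The simplest variant, natural parameter continuation, steps the parameter in small increments and uses the previous solution as the initial guess for Newton's method at the next parameter value. The sketch below assumes a scalar test equation $F(u, \lambda) = u^3 + u - \lambda = 0$, chosen for illustration because its solution branch has no folds. The function names are hypothetical.

```python
def F(u, lam):
    """Scalar test equation F(u, lam) = u^3 + u - lam."""
    return u**3 + u - lam

def dF_du(u, lam):
    """Partial derivative of F with respect to u."""
    return 3.0 * u**2 + 1.0

def newton(u, lam, tol=1e-12, max_iter=50):
    """Solve F(u, lam) = 0 for u at fixed lam, starting from the guess u."""
    for _ in range(max_iter):
        step = F(u, lam) / dF_du(u, lam)
        u -= step
        if abs(step) < tol:
            return u
    raise RuntimeError("Newton iteration did not converge")

def continue_branch(lam_end=2.0, n_steps=20, u0=0.0):
    """Trace the solution branch from lam = 0 (where u = 0) to lam_end."""
    branch = [(0.0, u0)]
    u = u0
    for i in range(1, n_steps + 1):
        lam = lam_end * i / n_steps
        u = newton(u, lam)          # warm start from the previous point on the branch
        branch.append((lam, u))
    return branch

branch = continue_branch()
print(branch[-1])  # at lam = 2 the exact solution is u = 1
```

Natural parameter continuation fails at fold points, where the branch turns back in $\lambda$. Methods such as pseudo-arclength continuation are commonly used to handle that case.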

In control theory, backstepping is a technique developed circa 1990 by Petar V. Kokotovic and others for designing stabilizing controls for a special class of nonlinear dynamical systems. These systems are built from subsystems that radiate out from an irreducible subsystem that can be stabilized using some other method. Because of this recursive structure, the designer can start the design process at the known-stable system and "back out" new controllers that progressively stabilize each outer subsystem. The process terminates when the final external control is reached. Hence, this process is known as backstepping.
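A two-state sketch of the recursion, assuming the illustrative plant $\dot{x} = x^2 + z$, $\dot{z} = u$ (not taken from the text). The inner subsystem is stabilized by the virtual control $\alpha(x) = -x^2 - x$. Defining the error $e = z - \alpha(x)$ and choosing $u = \dot{\alpha} - x - e$ gives $\dot{x} = -x + e$ and $\dot{e} = -x - e$, so $V = \tfrac{1}{2}x^2 + \tfrac{1}{2}e^2$ satisfies $\dot{V} = -x^2 - e^2$.

```python
def backstepping_control(x, z):
    """Backstepping law for the assumed plant x' = x^2 + z, z' = u."""
    alpha = -x**2 - x                          # virtual control that stabilizes the x-subsystem
    e = z - alpha                              # deviation of z from the virtual control
    alpha_dot = (-2.0 * x - 1.0) * (x**2 + z)  # d(alpha)/dt = alpha'(x) * x'
    return alpha_dot - x - e                   # cancels alpha_dot, adds damping on x and e

def simulate_backstepping(x=1.0, z=0.0, dt=1e-3, n_steps=10_000):
    """Forward-Euler simulation of the closed loop."""
    for _ in range(n_steps):
        u = backstepping_control(x, z)
        x, z = x + dt * (x**2 + z), z + dt * u
    return x, z

x_final, z_final = simulate_backstepping()
print(x_final, z_final)
```

For longer chains the same step is repeated: each new state is treated as the control for the subsystem already stabilized, until the actual input $u$ appears.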

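In nonlinear control, the technique of Lyapunov redesign refers to the design where a stabilizing state feedback controller can be constructed with knowledge of the Lyapunov function $V$. Consider the system $\dot{x} = f(t, x) + G(t, x)\,[u + \delta(t, x, u)]$, where $x$ is the state vector, $u$ is the vector of inputs, and $\delta$ is an uncertain term.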

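In control theory, dynamical systems are in strict-feedback form when they can be expressed as

$$
\begin{aligned}
\dot{x} &= f_0(x) + g_0(x)\, z_1 \\
\dot{z}_1 &= f_1(x, z_1) + g_1(x, z_1)\, z_2 \\
&\;\;\vdots \\
\dot{z}_k &= f_k(x, z_1, \ldots, z_k) + g_k(x, z_1, \ldots, z_k)\, u
\end{aligned}
$$

where $x$ and $z_1, \ldots, z_k$ are the states and $u$ is the control input. Each $\dot{z}_i$ depends only on $x$, on $z_1, \ldots, z_i$, and on the next state $z_{i+1}$ (or, in the last equation, on $u$).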

Wassim Michael Haddad is a Lebanese-Greek-American applied mathematician, scientist, and engineer, with research specialization in the areas of dynamical systems and control. His research has led to fundamental breakthroughs in applied mathematics, thermodynamics, stability theory, robust control, dynamical system theory, and neuroscience. Professor Haddad is a member of the faculty of the School of Aerospace Engineering at Georgia Institute of Technology, where he holds the rank of Professor and Chair of the Flight Mechanics and Control Discipline. Dr. Haddad is a member of the Academy of Nonlinear Sciences for recognition of paramount contributions to the fields of nonlinear stability theory, nonlinear dynamical systems, and nonlinear control and an IEEE Fellow for contributions to robust, nonlinear, and hybrid control systems.

Input-to-state stability (ISS) is a stability notion widely used to study stability of nonlinear control systems with external inputs. Roughly speaking, a control system is ISS if it is globally asymptotically stable in the absence of external inputs and if its trajectories are bounded by a function of the size of the input for all sufficiently large times. The importance of ISS is due to the fact that the concept has bridged the gap between input–output and state-space methods, widely used within the control systems community.