Continuum mechanics is a branch of mechanics that deals with the deformation of and transmission of forces through materials modeled as a continuous medium rather than as discrete particles. The French mathematician Augustin-Louis Cauchy was the first to formulate such models in the 19th century.

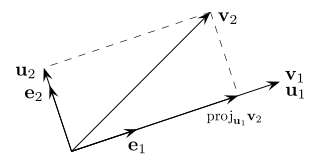

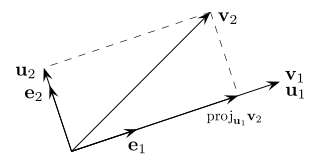

In mathematics, particularly linear algebra and numerical analysis, the Gram–Schmidt process or Gram–Schmidt algorithm is a way of turning a set of two or more linearly independent vectors into a set of vectors that are perpendicular to each other and span the same subspace.
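
As an illustration, the process can be sketched in a few lines of plain Python. This is a minimal sketch, not a reference implementation: the function name `gram_schmidt` and the tolerance `tol` are assumptions made here, the update order is the "modified" Gram–Schmidt variant (each projection is removed from the running remainder), and the output vectors are additionally scaled to unit length.

```python
def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list of vectors spanning the same space as `vectors`.

    Hypothetical helper: uses the modified Gram-Schmidt update and drops any
    vector whose remainder is (numerically) zero, i.e. linearly dependent input.
    """
    basis = []
    for v in vectors:
        w = [float(c) for c in v]
        for q in basis:
            # Remove the component of w along the already accepted direction q.
            coeff = sum(wi * qi for wi, qi in zip(w, q))
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        norm = sum(wi * wi for wi in w) ** 0.5
        if norm > tol:
            basis.append([wi / norm for wi in w])
    return basis


basis = gram_schmidt([[3.0, 1.0], [2.0, 2.0]])
```

For the two input vectors above, `basis` holds two unit vectors whose dot product is zero up to rounding error.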

In the theory of ordinary differential equations (ODEs), Lyapunov functions, named after Aleksandr Lyapunov, are scalar functions that may be used to prove the stability of an equilibrium of an ODE. Lyapunov functions are important to stability theory of dynamical systems and control theory. A similar concept appears in the theory of general state-space Markov chains usually under the name Foster–Lyapunov functions.
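
A small numerical check can make the idea concrete. The system and the candidate function below are illustrative choices made here, not taken from the text: for dx/dt = -x + y, dy/dt = -x - y, the function V(x, y) = x² + y² has derivative along trajectories equal to -2(x² + y²), which is negative away from the origin, so V can serve as a Lyapunov function for the equilibrium at the origin. The sketch uses a simple forward Euler integrator, and all names are hypothetical.

```python
def f(x, y):
    """Right-hand side of the illustrative system (an assumption of this sketch)."""
    return (-x + y, -x - y)


def V(x, y):
    """Candidate Lyapunov function: squared distance from the equilibrium."""
    return x * x + y * y


def V_dot(x, y):
    """Derivative of V along trajectories: grad V . f = 2x*fx + 2y*fy."""
    fx, fy = f(x, y)
    return 2 * x * fx + 2 * y * fy


def simulate_V(x0, y0, dt=0.01, steps=1000):
    """Integrate with forward Euler and record V at every step."""
    x, y = x0, y0
    values = [V(x, y)]
    for _ in range(steps):
        fx, fy = f(x, y)
        x, y = x + dt * fx, y + dt * fy
        values.append(V(x, y))
    return values


values = simulate_V(1.0, 2.0)
```

Along the simulated trajectory the recorded values of V never increase and shrink toward zero, which is the behavior a Lyapunov function is meant to certify.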

Various types of stability may be discussed for the solutions of differential equations or difference equations describing dynamical systems. The most important type concerns the stability of solutions near a point of equilibrium, which may be analyzed using the theory of Aleksandr Lyapunov. In simple terms, if the solutions that start out near an equilibrium point stay near it forever, then that equilibrium is Lyapunov stable. More strongly, if the equilibrium is Lyapunov stable and all solutions that start out near it converge to it, then it is said to be asymptotically stable. The notion of exponential stability guarantees a minimal rate of decay, i.e., an estimate of how quickly the solutions converge. The idea of Lyapunov stability can be extended to infinite-dimensional manifolds, where it is known as structural stability, which concerns the behavior of different but "nearby" solutions to differential equations. Input-to-state stability (ISS) applies Lyapunov notions to systems with inputs.

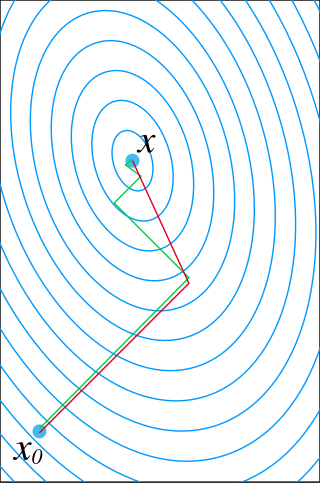

In control systems, sliding mode control (SMC) is a nonlinear control method that alters the dynamics of a nonlinear system by applying a discontinuous control signal that forces the system to "slide" along a cross-section of the system's normal behavior. The state-feedback control law is not a continuous function of time. Instead, it can switch from one continuous structure to another based on the current position in the state space. Hence, sliding mode control is a variable structure control method. The multiple control structures are designed so that trajectories always move toward an adjacent region with a different control structure, and so the ultimate trajectory will not exist entirely within one control structure. Instead, it will slide along the boundaries of the control structures. The motion of the system as it slides along these boundaries is called a sliding mode, and the geometrical locus consisting of the boundaries is called the sliding (hyper)surface. In the context of modern control theory, any variable structure system, like a system under SMC, may be viewed as a special case of a hybrid dynamical system, as the system both flows through a continuous state space and moves through different discrete control modes.
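
The switching behavior can be sketched on a toy plant. Everything below is an assumption made for illustration: a double integrator (position `x1`, velocity `x2`, acceleration equal to the control `u`), a sliding surface s = x2 + lam·x1, and the switching law u = -lam·x2 - k·sign(s), which gives ds/dt = -k·sign(s). The simulation uses forward Euler, so the state chatters in a thin band around the surface instead of sliding on it exactly.

```python
def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def simulate_smc(x1=1.0, x2=0.0, lam=1.0, k=2.0, dt=0.001, steps=10000):
    """Simulate a double integrator under a hypothetical sliding mode law.

    Returns a list of (x1, x2, s) samples, one per time step.
    """
    history = []
    for _ in range(steps):
        s = x2 + lam * x1                 # signed distance to the sliding surface
        u = -lam * x2 - k * sign(s)       # discontinuous control: switches with sign(s)
        x1, x2 = x1 + dt * x2, x2 + dt * u
        history.append((x1, x2, x2 + lam * x1))
    return history


history = simulate_smc()
x1_final, x2_final, s_final = history[-1]
```

With these settings the surface is reached after roughly 0.5 time units, after which `s` stays within about `k * dt` of zero while `x1` decays toward the origin along the surface.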

In physics, the S-matrix or scattering matrix relates the initial state and the final state of a physical system undergoing a scattering process. It is used in quantum mechanics, scattering theory and quantum field theory (QFT).

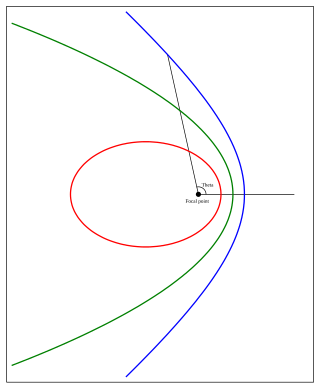

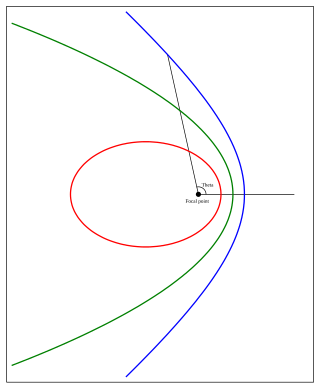

In geometry, an envelope of a planar family of curves is a curve that is tangent to each member of the family at some point, and these points of tangency together form the whole envelope. Classically, a point on the envelope can be thought of as the intersection of two "infinitesimally adjacent" curves, meaning the limit of intersections of nearby curves. This idea can be generalized to an envelope of surfaces in space, and so on to higher dimensions.

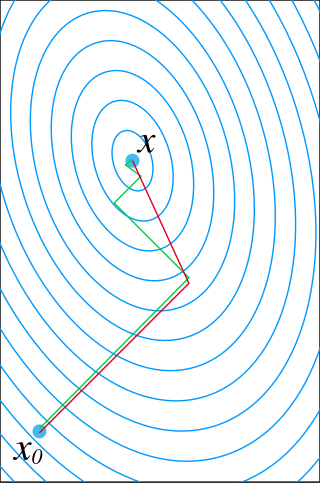

In mathematics, the conjugate gradient method is an algorithm for the numerical solution of particular systems of linear equations, namely those whose matrix is symmetric and positive-definite. The conjugate gradient method is often implemented as an iterative algorithm, applicable to sparse systems that are too large to be handled by direct methods such as the Cholesky decomposition. Large sparse systems often arise when numerically solving partial differential equations or optimization problems.
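
The iteration itself is short. The following is a minimal sketch in plain Python, assuming a small dense matrix stored as a list of rows that is symmetric and positive-definite; the function names, the tolerance, and the iteration cap are choices made here. A practical solver would use sparse storage and usually a preconditioner.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(A, v):
    return [dot(row, v) for row in A]


def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b iteratively, assuming A is symmetric positive-definite."""
    n = len(b)
    if max_iter is None:
        max_iter = 10 * n
    x = [0.0] * n
    r = [float(bi) for bi in b]   # residual b - A x for the initial guess x = 0
    p = list(r)                   # first search direction
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)                      # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        # The new direction is conjugate to the previous ones with respect to A.
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x


x = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
```

For this 2×2 system the exact solution is x = (1/11, 7/11), and in exact arithmetic the method reaches it in at most two iterations.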

In differential geometry, a tensor density or relative tensor is a generalization of the tensor field concept. A tensor density transforms as a tensor field when passing from one coordinate system to another, except that it is additionally multiplied or weighted by a power W of the Jacobian determinant of the coordinate transition function or its absolute value. A tensor density with a single index is called a vector density. A distinction is made among (authentic) tensor densities, pseudotensor densities, even tensor densities and odd tensor densities. Sometimes tensor densities with a negative weight W are called tensor capacities. A tensor density can also be regarded as a section of the tensor product of a tensor bundle with a density bundle.

An osculating circle is a circle that best approximates the curvature of a curve at a specific point. It is tangent to the curve at that point and has the same curvature as the curve at that point. The osculating circle provides a way to understand the local behavior of a curve and is commonly used in differential geometry and calculus.
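
For a curve given as a graph y = f(x), the circle can be computed from the first and second derivatives at the point. The sketch below assumes that setting and uses the standard curvature formula κ = |f''| / (1 + f'²)^(3/2); the function name and argument names are hypothetical.

```python
def osculating_circle(x, y, dy, d2y):
    """Return (center, radius) of the osculating circle of y = f(x) at (x, y).

    dy and d2y are the first and second derivatives of f at x.
    """
    if d2y == 0:
        raise ValueError("zero curvature: the osculating circle degenerates to a line")
    g = 1.0 + dy * dy
    radius = g ** 1.5 / abs(d2y)               # radius = 1 / curvature
    center = (x - dy * g / d2y, y + g / d2y)   # lies on the normal, on the concave side
    return center, radius


# Parabola y = x**2 at its vertex: f'(0) = 0 and f''(0) = 2.
center, radius = osculating_circle(0.0, 0.0, 0.0, 2.0)
```

At the vertex of the parabola y = x² this gives a circle of radius 0.5 centered at (0, 0.5).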

In applied mathematics, comparison functions are several classes of continuous functions that are used in stability theory to characterize the stability properties of control systems, such as Lyapunov stability, uniform asymptotic stability, etc.

In directional statistics, the von Mises–Fisher distribution is a probability distribution on the (p − 1)-sphere in p-dimensional Euclidean space. If p = 2, the distribution reduces to the von Mises distribution on the circle.

The Newman–Penrose (NP) formalism is a set of notation developed by Ezra T. Newman and Roger Penrose for general relativity (GR). Their notation is an effort to treat general relativity in terms of spinor notation, which introduces complex forms of the usual variables used in GR. The NP formalism is itself a special case of the tetrad formalism, where the tensors of the theory are projected onto a complete vector basis at each point in spacetime. Usually this vector basis is chosen to reflect some symmetry of the spacetime, leading to simplified expressions for physical observables. In the case of the NP formalism, the vector basis chosen is a null tetrad: a set of four null vectors, two real and a complex-conjugate pair. The two real members often asymptotically point radially inward and radially outward, and the formalism is well adapted to treatment of the propagation of radiation in curved spacetime. The Weyl scalars, derived from the Weyl tensor, are often used. In particular, it can be shown that one of these scalars, Ψ₄ in the appropriate frame, encodes the outgoing gravitational radiation of an asymptotically flat system.

In celestial mechanics, a Kepler orbit is the motion of one body relative to another, as an ellipse, parabola, or hyperbola, which forms a two-dimensional orbital plane in three-dimensional space. A Kepler orbit can also form a straight line. It considers only the point-like gravitational attraction of two bodies, neglecting perturbations due to gravitational interactions with other objects, atmospheric drag, solar radiation pressure, a non-spherical central body, and so on. It is thus said to be a solution of a special case of the two-body problem, known as the Kepler problem. As a theory in classical mechanics, it also does not take into account the effects of general relativity. Keplerian orbits can be parametrized into six orbital elements in various ways.
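
The three curve types can be told apart by a single number. The sketch below assumes the standard polar form of a conic with the central body at the focus, r = p / (1 + e·cos θ), where the eccentricity `e`, the semi-latus rectum `p`, and the true anomaly `theta` are symbols introduced here for illustration. It does not cover the degenerate straight-line case.

```python
import math


def conic_type(e):
    """Classify a Kepler orbit by its eccentricity e (a circle counts as an ellipse)."""
    if e < 0:
        raise ValueError("eccentricity must be non-negative")
    if e < 1:
        return "ellipse"
    if e == 1:
        return "parabola"
    return "hyperbola"


def orbit_radius(p, e, theta):
    """Distance from the focus at true anomaly theta, for semi-latus rectum p."""
    denom = 1.0 + e * math.cos(theta)
    if denom <= 0:
        raise ValueError("this direction is never reached on an open orbit")
    return p / denom


r_periapsis = orbit_radius(1.0, 0.5, 0.0)      # closest approach
r_apoapsis = orbit_radius(1.0, 0.5, math.pi)   # farthest point
```

For `p = 1` and `e = 0.5` the body moves on an ellipse between a distance of 2/3 at closest approach and 2 at the farthest point.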

Anatoly Alexeyevich Karatsuba was a Russian mathematician working in the field of analytic number theory, p-adic numbers and Dirichlet series.

In continuum mechanics, plate theories are mathematical descriptions of the mechanics of flat plates that draw on the theory of beams. Plates are defined as plane structural elements with a small thickness compared to the planar dimensions. The typical thickness to width ratio of a plate structure is less than 0.1. A plate theory takes advantage of this disparity in length scale to reduce the full three-dimensional solid mechanics problem to a two-dimensional problem. The aim of plate theory is to calculate the deformation and stresses in a plate subjected to loads.

The Reissner–Mindlin theory of plates is an extension of Kirchhoff–Love plate theory that takes into account shear deformations through the thickness of a plate. The theory was proposed in 1951 by Raymond Mindlin. A similar, but not identical, theory in a static setting had been proposed earlier by Eric Reissner in 1945. Both theories are intended for thick plates in which the normal to the mid-surface remains straight but not necessarily perpendicular to the mid-surface. The Reissner–Mindlin theory is used to calculate the deformations and stresses in a plate whose thickness is of the order of one tenth the planar dimensions, while the Kirchhoff–Love theory is applicable to thinner plates.

Heat transfer physics describes the kinetics of energy storage, transport, and energy transformation by principal energy carriers: phonons, electrons, fluid particles, and photons. Heat is thermal energy stored in temperature-dependent motion of particles including electrons, atomic nuclei, individual atoms, and molecules. Heat is transferred to and from matter by the principal energy carriers. The state of energy stored within matter, or transported by the carriers, is described by a combination of classical and quantum statistical mechanics. The energy is also transformed (converted) among the various carriers. The heat transfer processes are governed by the rates at which various related physical phenomena occur, such as the rate of particle collisions in classical mechanics. These various states and kinetics determine the heat transfer, i.e., the net rate of energy storage or transport. Governing these processes, from the atomic level to the macroscale, are the laws of thermodynamics, including conservation of energy.

Input-to-state stability (ISS) is a stability notion widely used to study stability of nonlinear control systems with external inputs. Roughly speaking, a control system is ISS if it is globally asymptotically stable in the absence of external inputs and if its trajectories are bounded by a function of the size of the input for all sufficiently large times. The importance of ISS is due to the fact that the concept has bridged the gap between input–output and state-space methods, widely used within the control systems community.
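
A scalar example shows what such a bound looks like. The system dx/dt = -x + u is an illustrative choice made here, not taken from the text; its solutions satisfy |x(t)| ≤ e^(-t)·|x(0)| + sup|u|, so the effect of the initial state fades and what remains is bounded by the size of the input. The sketch integrates the system with forward Euler, and all names are hypothetical.

```python
import math


def simulate(x0, u, dt=0.001, t_end=10.0):
    """Integrate dx/dt = -x + u(t) with forward Euler; return (t, x) samples."""
    steps = int(round(t_end / dt))
    x = x0
    trajectory = [(0.0, x)]
    for n in range(steps):
        x = x + dt * (-x + u(n * dt))
        trajectory.append(((n + 1) * dt, x))
    return trajectory


def iss_bound(t, x0, u_sup):
    """ISS-type estimate for this system: decaying transient plus input gain."""
    return math.exp(-t) * abs(x0) + u_sup


def bounded_input(t):
    """Example input with supremum 0.5 (an assumption of this sketch)."""
    return 0.5 * math.sin(3.0 * t)


trajectory = simulate(5.0, bounded_input)
within_bound = all(abs(x) <= iss_bound(t, 5.0, 0.5) + 1e-12 for t, x in trajectory)
```

With the input switched off the same simulation decays to zero, which matches the requirement that the system be asymptotically stable in the absence of inputs.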

The Kaniadakis Weibull distribution is a probability distribution arising as a generalization of the Weibull distribution. It is one example of a Kaniadakis κ-distribution. The κ-Weibull distribution has been adopted successfully for describing a wide variety of complex systems in seismology, economics, and epidemiology, among many others.
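
The generalization can be sketched numerically. The code below assumes the usual definition of the Kaniadakis κ-exponential, exp_κ(u) = (√(1 + κ²u²) + κu)^(1/κ), and a survival function of the form S(x) = exp_κ(-β·x^α); the parameter names `alpha`, `beta` and `kappa` are chosen here and are not taken from the text. Setting `kappa = 0` recovers the ordinary Weibull survival function exp(-β·x^α).

```python
import math


def kappa_exp(u, kappa):
    """Kaniadakis kappa-exponential, written via asinh for numerical stability."""
    if kappa == 0:
        return math.exp(u)
    # Identity: sqrt(1 + k^2 u^2) + k u == exp(asinh(k u)).
    return math.exp(math.asinh(kappa * u) / kappa)


def kappa_weibull_survival(x, alpha, beta, kappa):
    """Assumed survival function S(x) = exp_kappa(-beta * x**alpha) for x >= 0."""
    if x < 0:
        raise ValueError("x must be non-negative")
    return kappa_exp(-beta * x ** alpha, kappa)


weibull_tail = kappa_weibull_survival(10.0, 1.0, 1.0, 0.0)
kappa_tail = kappa_weibull_survival(10.0, 1.0, 1.0, 0.5)
```

For positive `kappa` the tail decays like a power law instead of exponentially, so `kappa_tail` is much larger than `weibull_tail` here.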