Related Research Articles

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others.

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis.

In computing, time-sharing is the sharing of a computing resource among many users at the same time by means of multiprogramming and multi-tasking.

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital signal of speech in compressed form, using the information of a linear predictive model.

Vector quantization (VQ) is a classical quantization technique from signal processing that allows the modeling of probability density functions by the distribution of prototype vectors. It was originally used for data compression. It works by dividing a large set of points (vectors) into groups having approximately the same number of points closest to them. Each group is represented by its centroid point, as in k-means and some other clustering algorithms.

Raytheon BBN is an American research and development company, based next to Fresh Pond in Cambridge, Massachusetts, United States.

Keyword spotting is a problem that was historically first defined in the context of speech processing. In speech processing, keyword spotting deals with the identification of keywords in utterances.

The NEC μPD7720 is the name of fixed point digital signal processors from NEC. Announced in 1980, it became, along with the Texas Instruments TMS32010, one of the most popular DSPs of its day.

Automatic pronunciation assessment is the use of speech recognition to verify the correctness of pronounced speech, as distinguished from manual assessment by an instructor or proctor. Also called speech verification, pronunciation evaluation, and pronunciation scoring, the main application of this technology is computer-aided pronunciation teaching (CAPT) when combined with computer-aided instruction for computer-assisted language learning (CALL), speech remediation, or accent reduction. Pronunciation assessment does not determine unknown speech but instead, knowing the expected word(s) in advance, it attempts to verify the correctness of the learner's pronunciation and ideally their intelligibility to listeners, sometimes along with often inconsequential prosody such as intonation, pitch, tempo, rhythm, and stress. Pronunciation assessment is also used in reading tutoring, for example in products such as Microsoft Teams and from Amira Learning. Automatic pronunciation assessment can also be used to help diagnose and treat speech disorders such as apraxia.

Speaker adaptation is an important technology to fine-tune either features or speech models for mis-match due to inter-speaker variation. In the last decade, eigenvoice (EV) speaker adaptation has been developed. It makes use of the prior knowledge of training speakers to provide a fast adaptation algorithm. Inspired by the kernel eigenface idea in face recognition, kernel eigenvoice (KEV) is proposed. KEV is a non-linear generalization to EV. This incorporates Kernel principal component analysis, a non-linear version of Principal Component Analysis, to capture higher order correlations in order to further explore the speaker space and enhance recognition performance.

In applied mathematics, a bit-reversal permutation is a permutation of a sequence of items, where is a power of two. It is defined by indexing the elements of the sequence by the numbers from to , representing each of these numbers by its binary representation, and mapping each item to the item whose representation has the same bits in the reversed order.

Nelson Harold Morgan is an American computer scientist and professor in residence (emeritus) of electrical engineering and computer science at the University of California, Berkeley. Morgan is the co-inventor of the Relative Spectral (RASTA) approach to speech signal processing, first described in a technical report published in 1991.

An audio coding format is a content representation format for storage or transmission of digital audio. Examples of audio coding formats include MP3, AAC, Vorbis, FLAC, and Opus. A specific software or hardware implementation capable of audio compression and decompression to/from a specific audio coding format is called an audio codec; an example of an audio codec is LAME, which is one of several different codecs which implements encoding and decoding audio in the MP3 audio coding format in software.

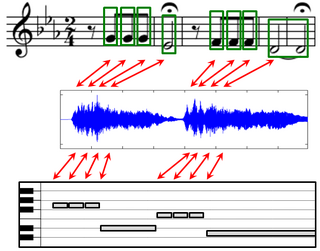

Music can be described and represented in many different ways including sheet music, symbolic representations, and audio recordings. For each of these representations, there may exist different versions that correspond to the same musical work. The general goal of music alignment is to automatically link the various data streams, thus interrelating the multiple information sets related to a given musical work. More precisely, music alignment is taken to mean a procedure which, for a given position in one representation of a piece of music, determines the corresponding position within another representation. In the figure on the right, such an alignment is visualized by the red bidirectional arrows. Such synchronization results form the basis for novel interfaces that allow users to access, search, and browse musical content in a convenient way.

V John Mathews is an Indian-American engineer and educator who is currently a Professor of Electrical Engineering and Computer Science (EECS) at the Oregon State University, United States.

Steven Glenn Johnson is an American mathematician known for being a co-creator of the FFTW library for software-based fast Fourier transforms and for his work on photonic crystals. He is professor of Applied Mathematics and Physics at MIT where he leads a group on Nanostructures and Computation.

Voice computing is the discipline that develops hardware or software to process voice inputs.

Namrata Vaswani is an Indian-American electrical engineer known for her research in compressed sensing, robust principal component analysis, signal processing, statistical learning theory, and computer vision. She is a Joseph and Elizabeth Anderlik Professor in Electrical and Computer Engineering at Iowa State University, and a professor of mathematics at Iowa State.

Madeleine Ashcraft Bates is a researcher in natural language processing who worked at BBN Technologies in Cambridge, Massachusetts from the early 1970s to the late 1990s. She was president of the Association for Computational Linguistics in 1985, and co-editor of the book Challenges in Natural Language Processing (1993).

References

- ↑ Harper, Mary. "Data Resources to Support the Babel Program Intelligence Advanced Research Projects Activity" (PDF). Retrieved 26 July 2017.

- ↑ "Babel". IARPA. Retrieved 26 July 2017.

- ↑ T. Alumäe et al., "The 2016 BBN Georgian telephone speech keyword spotting system," 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, 2017, pp. 5755-5759, doi: 10.1109/ICASSP.2017.7953259.

- ↑ J. Cui et al., "Knowledge distillation across ensembles of multilingual models for low-resource languages," 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, 2017, pp. 4825-4829, doi: 10.1109/ICASSP.2017.7953073.

- ↑ Gales M.J.F., Knill K.M., Ragni A. (2017) Low-Resource Speech Recognition and Keyword-Spotting. In: Karpov A., Potapova R., Mporas I. (eds) Speech and Computer. SPECOM 2017. Lecture Notes in Computer Science, vol 10458. Springer, Cham. https://doi.org/10.1007/978-3-319-66429-3_1

- ↑ P. Golik, Z. Tüske, K. Irie, E. Beck, R. Schlüter, and H. Ney. The 2016 RWTH Keyword Search System for Low-Resource Languages. In International Conference Speech and Computer (SPECOM), Lecture Notes in Computer Science, Subseries Lecture Notes in Artificial Intelligence, volume 10458, pages 719-730, Hatfield, UK, September 2017.

- ↑ "History of the Kaldi project" . Retrieved 26 July 2017.