Related Research Articles

Fuzzy logic is a form of many-valued logic in which the truth value of variables may be any real number between 0 and 1. It is employed to handle the concept of partial truth, where the truth value may range between completely true and completely false. By contrast, in Boolean logic, the truth values of variables may only be the integer values 0 or 1.
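As a minimal sketch of partial truth, the snippet below assumes Zadeh's min/max/complement operators, which are one common choice of fuzzy connectives; the passage above does not prescribe them, and the function names are illustrative only.

```python
def fuzzy_and(a: float, b: float) -> float:
    """Conjunction under the (assumed) Zadeh operators: the minimum."""
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    """Disjunction under the (assumed) Zadeh operators: the maximum."""
    return max(a, b)


def fuzzy_not(a: float) -> float:
    """Negation as the complement with respect to 1."""
    return 1.0 - a


# Truth values may lie anywhere in [0, 1].
warm, humid = 0.7, 0.4
warm_and_humid = fuzzy_and(warm, humid)
warm_or_humid = fuzzy_or(warm, humid)
```

When the inputs are restricted to 0 and 1, these operators reduce to the ordinary Boolean AND, OR, and NOT.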

In computational science, particle swarm optimization (PSO) is a computational method that optimizes a problem by iteratively trying to improve a candidate solution with regard to a given measure of quality. It solves a problem by having a population of candidate solutions, here dubbed particles, and moving these particles around in the search-space according to simple mathematical formulae over the particle's position and velocity. Each particle's movement is influenced by its local best known position, but is also guided toward the best known positions in the search-space, which are updated as better positions are found by other particles. This is expected to move the swarm toward the best solutions.
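The update described above can be sketched as follows. This is an illustrative global-best variant, not a reference implementation: the inertia weight `w`, the acceleration coefficients `c1` and `c2`, the swarm size, and the `sphere` test objective are all assumptions chosen for the example.

```python
import random


def pso(objective, dim, bounds, n_particles=30, n_iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` with a basic global-best particle swarm."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                  # each particle's best position
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # best position seen by the swarm

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward own best + pull toward swarm best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def sphere(x):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best_position, best_value = pso(sphere, dim=2, bounds=(-5.0, 5.0))
```

Positions are not clamped to `bounds` after initialization in this sketch; a practical implementation would usually add boundary handling and a stopping criterion.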

Stochastic resonance (SR) is a phenomenon in which a signal that is normally too weak to be detected by a sensor can be boosted by adding white noise to the signal, which contains a wide spectrum of frequencies. The frequencies in the white noise corresponding to the original signal's frequencies will resonate with each other, amplifying the original signal while not amplifying the rest of the white noise – thereby increasing the signal-to-noise ratio, which makes the original signal more prominent. Further, the added white noise can be enough to be detectable by the sensor, which can then filter it out to effectively detect the original, previously undetectable signal.
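One simple way to see the effect is with a threshold detector, sketched below under stated assumptions: a sinusoid whose amplitude stays below the detector threshold, additive Gaussian white noise, and the correlation between the input signal and the binary detector output as the quality measure. All parameter values and function names are illustrative.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def threshold_response_correlation(noise_std, n_samples=5000, amplitude=0.8,
                                   threshold=1.0, period=100, seed=0):
    """Correlate a subthreshold sine with the output of a noisy threshold unit."""
    rng = random.Random(seed)
    signal = [amplitude * math.sin(2 * math.pi * t / period)
              for t in range(n_samples)]
    # The detector fires (1.0) only when signal plus noise exceeds the threshold.
    output = [1.0 if s + rng.gauss(0.0, noise_std) > threshold else 0.0
              for s in signal]
    return pearson(signal, output)


no_noise = threshold_response_correlation(0.0)     # detector never fires
moderate = threshold_response_correlation(0.3)     # firing tracks the signal peaks
heavy = threshold_response_correlation(10.0)       # firing is mostly random
```

With no noise the signal never crosses the threshold, so the output carries no information; a moderate noise level lets crossings cluster around the signal peaks, while very strong noise swamps the signal again.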

The expression computational intelligence (CI) usually refers to the ability of a computer to learn a specific task from data or experimental observation. Even though it is commonly considered a synonym of soft computing, there is still no commonly accepted definition of computational intelligence.

A recurrent neural network (RNN) is one of the two broad types of artificial neural network, characterized by the direction of the flow of information between its layers. In contrast to the other type, the uni-directional feedforward neural network, it is a bi-directional artificial neural network, meaning that it allows the output from some nodes to affect subsequent input to the same nodes. Its ability to use internal state (memory) to process arbitrary sequences of inputs makes it applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. The term "recurrent neural network" is used to refer to the class of networks with an infinite impulse response, whereas "convolutional neural network" refers to the class with a finite impulse response. Both classes of networks exhibit temporal dynamic behavior. A finite impulse recurrent network is a directed acyclic graph that can be unrolled and replaced with a strictly feedforward neural network, while an infinite impulse recurrent network is a directed cyclic graph that cannot be unrolled.

In the field of artificial intelligence, the designation neuro-fuzzy refers to combinations of artificial neural networks and fuzzy logic.

A memetic algorithm (MA), in computer science and operations research, is an extension of the traditional genetic algorithm (GA) or the more general evolutionary algorithm (EA). It may provide a sufficiently good solution to an optimization problem. It uses a suitable heuristic or local search technique to improve the quality of solutions generated by the EA and to reduce the likelihood of premature convergence.

Dr. Lawrence Jerome Fogel was a pioneer in evolutionary computation and human factors analysis. He is known as the inventor of active noise cancellation and the father of evolutionary programming. His scientific career spanned nearly six decades and included electrical engineering, aerospace engineering, communication theory, human factors research, information processing, cybernetics, biotechnology, artificial intelligence, and computer science.

Computational neurogenetic modeling (CNGM) is concerned with the study and development of dynamic neuronal models for modeling brain functions with respect to genes and dynamic interactions between genes. These include neural network models and their integration with gene network models. This area brings together knowledge from various scientific disciplines, such as computer and information science, neuroscience and cognitive science, genetics and molecular biology, as well as engineering.

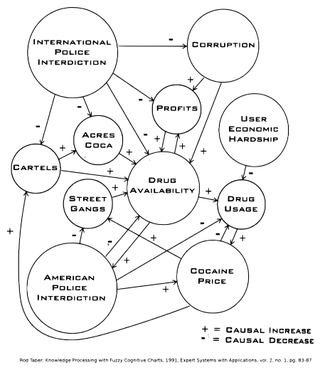

A fuzzy cognitive map (FCM) is a cognitive map within which the relations between the elements of a "mental landscape" can be used to compute the "strength of impact" of these elements. Robert Axelrod introduced cognitive maps as a formal way of representing social scientific knowledge and modeling decision making in social and political systems. Bart Kosko then introduced fuzzy cognitive maps, which brought in the computation.
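A common way to carry out this computation, sketched here as an assumption rather than as the definitive formulation, is to hold the concept activations in a vector, store signed causal strengths in a weight matrix, and repeatedly squash the weighted sums with a sigmoid. The three-concept map and its weights below are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def fcm_step(state, weights):
    """One synchronous update: weights[i][j] is the influence of concept i on j."""
    n = len(state)
    return [sigmoid(sum(state[i] * weights[i][j] for i in range(n)))
            for j in range(n)]


def run_fcm(state, weights, steps=50):
    """Iterate the map for a fixed number of steps and return the final state."""
    for _ in range(steps):
        state = fcm_step(state, weights)
    return state


# Hypothetical map: concept 0 promotes concept 1 and inhibits concept 2.
weights = [
    [0.0, 1.0, -1.0],
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
]
final_state = run_fcm([1.0, 0.0, 0.0], weights)
```

In this toy map the promoted concept settles above 0.5 and the inhibited one below it; maps with feedback loops may instead converge to other fixed points or cycle.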

Bidirectional associative memory (BAM) is a type of recurrent neural network. BAM was introduced by Bart Kosko in 1988. There are two types of associative memory, auto-associative and hetero-associative. BAM is hetero-associative, meaning given a pattern it can return another pattern which is potentially of a different size. It is similar to the Hopfield network in that they are both forms of associative memory. However, Hopfield nets return patterns of the same size.
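The hetero-associative recall can be illustrated with a small sketch. It assumes bipolar (+1/-1) patterns, a weight matrix built as the sum of outer products of the stored pairs, and a sign threshold that maps zero to +1; the stored patterns and function names are made up for the example.

```python
def train_bam(pairs):
    """Build the weight matrix as a sum of outer products x^T y."""
    n, m = len(pairs[0][0]), len(pairs[0][1])
    w = [[0] * m for _ in range(n)]
    for x, y in pairs:
        for i in range(n):
            for j in range(m):
                w[i][j] += x[i] * y[j]
    return w


def sign(v):
    # Assumption: ties at zero are resolved to +1.
    return 1 if v >= 0 else -1


def recall_forward(w, x):
    """Map an x-layer pattern (length n) to a y-layer pattern (length m)."""
    return [sign(sum(x[i] * w[i][j] for i in range(len(x))))
            for j in range(len(w[0]))]


def recall_backward(w, y):
    """Map a y-layer pattern (length m) back to an x-layer pattern (length n)."""
    return [sign(sum(w[i][j] * y[j] for j in range(len(y))))
            for i in range(len(w))]


# Two stored associations between 6-element and 4-element patterns.
x1, y1 = [1, -1, 1, -1, 1, -1], [1, 1, -1, -1]
x2, y2 = [1, 1, 1, -1, -1, -1], [1, -1, 1, -1]
w = train_bam([(x1, y1), (x2, y2)])

noisy_x1 = [-1, -1, 1, -1, 1, -1]      # x1 with its first element flipped
recalled_y = recall_forward(w, noisy_x1)
recalled_x = recall_backward(w, recalled_y)
```

Here the 6-element input retrieves a 4-element partner, and passing that partner back through the transposed weights restores the clean 6-element pattern.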

The IEEE Systems, Man, and Cybernetics Society is a professional society of the IEEE. It aims "to serve the interests of its members and the community at large by promoting the theory, practice, and interdisciplinary aspects of systems science and engineering, human-machine systems, and cybernetics".

In computer science, an evolving intelligent system is a fuzzy logic system that improves its own performance by evolving rules. The technique is known from machine learning, in which external patterns are learned by an algorithm. Fuzzy-logic-based machine learning works with neuro-fuzzy systems.

An adaptive neuro-fuzzy inference system or adaptive network-based fuzzy inference system (ANFIS) is a kind of artificial neural network that is based on the Takagi–Sugeno fuzzy inference system. The technique was developed in the early 1990s. Since it integrates both neural networks and fuzzy logic principles, it has the potential to capture the benefits of both in a single framework.
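The inference step of a first-order Takagi–Sugeno system can be sketched as a membership-weighted average of linear rule outputs. The snippet below shows only that forward pass for a single input; the Gaussian membership functions and the rule parameters are assumptions for illustration, and the parameter learning that makes the system adaptive is not shown.

```python
import math


def gaussian(x, center, width):
    """Gaussian membership function (an assumed, commonly used shape)."""
    return math.exp(-0.5 * ((x - center) / width) ** 2)


def sugeno_output(x, rules):
    """Evaluate single-input rules given as (center, width, slope, intercept).

    Each rule fires with strength gaussian(x, center, width) and proposes
    the linear output slope * x + intercept.
    """
    strengths = [gaussian(x, c, s) for c, s, _, _ in rules]
    outputs = [a * x + b for _, _, a, b in rules]
    total = sum(strengths)
    if total == 0.0:
        raise ValueError("no rule fires for this input")
    return sum(w * f for w, f in zip(strengths, outputs)) / total


# Hypothetical rule base: "x is near 0 -> y = 1", "x is near 4 -> y = 3".
rules = [
    (0.0, 1.0, 0.0, 1.0),
    (4.0, 1.0, 0.0, 3.0),
]
midpoint_output = sugeno_output(2.0, rules)   # both rules fire equally
```

Halfway between the two rule centers the output is the average of the two consequents; near either center it approaches that rule's consequent.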

An artificial neural network's learning rule or learning process is a method, mathematical logic or algorithm which improves the network's performance and/or training time. Usually, this rule is applied repeatedly over the network. It is done by updating the weights and bias levels of a network when the network is simulated in a specific data environment. A learning rule may accept existing conditions of the network and will compare the expected result and actual result of the network to give new and improved values for weights and bias. Depending on the complexity of the actual model being simulated, the learning rule of the network can be as simple as an XOR gate or mean squared error, or as complex as the result of a system of differential equations.
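As one concrete instance of comparing expected and actual results to update weights and bias, the sketch below uses the classic perceptron rule on a single threshold unit. The choice of rule, the learning rate, and the AND-gate training data are assumptions made for illustration.

```python
def predict(weights, bias, inputs):
    """Threshold unit: output 1 if the weighted sum plus bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=50, lr=1.0):
    """Apply the perceptron rule repeatedly over the training samples."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Error is the expected result minus the actual result.
            error = target - predict(weights, bias, inputs)
            for i in range(n_inputs):
                weights[i] += lr * error * inputs[i]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so this rule can learn it.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
```

A single threshold unit trained this way cannot represent XOR, which is not linearly separable; that case needs a multi-layer network and a different learning rule.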

Fusion adaptive resonance theory (fusion ART) is a generalization of the self-organizing neural networks known as the original Adaptive Resonance Theory models, used for learning recognition categories across multiple pattern channels. There is a separate stream of work on fusion ARTMAP, which extends fuzzy ARTMAP, consisting of two fuzzy ART modules connected by an inter-ART map field, to an architecture consisting of multiple ART modules.

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

Javier Andreu-Perez is a British computer scientist and a Senior Lecturer and Chair in Smart Health Technologies at the University of Essex. He is also associate editor-in-chief of Neurocomputing for the area of Deep Learning and Machine Learning. Andreu-Perez's research is mainly focused on Human-Centered Artificial Intelligence (HCAI). He also chairs an interdisciplinary lab in this area, HCAI-Essex.

Fuzzy differential inclusion is the extension of differential inclusion to fuzzy sets introduced by Lotfi A. Zadeh.

Jerry M. Mendel is an engineer, academic, and author. He is professor emeritus of Electrical and Computer Engineering at the University of Southern California.

References

- "INNS Award Recipients". www.inns.org. Retrieved 2023-07-27.

- "Kosko receives Hebb Award from International Neural Network Society". www.usc.edu. Retrieved 2023-07-27.

- Adam, John A. (February 1996). "Bart Kosko: This optimistic engineer seeks to give machines a higher IQ—both in his neural and fuzzy systems work and in his prolific science fiction". IEEE Spectrum. 33 (2): 58–62. doi:10.1109/6.482276. S2CID 1761292.

- "Liberty Magazine – November 2003".

- "Now Hear This!". Wired.

- Kosko, Bart (January 1986). "Fuzzy cognitive maps". International Journal of Man-Machine Studies. 24 (1): 65–75. doi:10.1016/S0020-7373(86)80040-2.

- Dickerson, Julie; Kosko, Bart (May 1994). "Virtual Worlds as Fuzzy Cognitive Maps". Presence: Teleoperators and Virtual Environments. 3 (2): 173–189. doi:10.1162/pres.1994.3.2.173. S2CID 61432716.

- Kosko, Bart (December 1986). "Fuzzy entropy and conditioning". Information Sciences. 40 (2): 165–174. doi:10.1016/0020-0255(86)90006-X.

- Kosko, Bart (August 1998). "Global stability of generalized additive fuzzy systems". IEEE Transactions on Systems, Man, and Cybernetics - Part C: Applications and Reviews. 28 (3): 441–452. doi:10.1109/5326.704584.

- Kosko, Bart (November 1994). "Fuzzy systems as universal approximators". IEEE Transactions on Computers. 43 (11): 1329–1333. doi:10.1109/12.324566.

- Kosko, Bart (1995). "Optimal fuzzy rules cover extrema". International Journal of Intelligent Systems. 10 (2): 249–255. doi:10.1002/int.4550100206. S2CID 205966636.

- Mitaim, Sanya; Kosko, Bart (August 2001). "The shape of fuzzy sets in adaptive function approximation". IEEE Transactions on Fuzzy Systems. 9 (4): 637–656. doi:10.1109/91.940974.

- Lee, Ian; Kosko, Bart; Anderson, W. French (November 2005). "Modeling Gunshot Bruises in Soft Body Armor with an Adaptive Fuzzy System". IEEE Transactions on Systems, Man, and Cybernetics - Part B: Cybernetics. 35 (6): 1374–1390. doi:10.1109/TSMCB.2005.855585. PMID 16366262. S2CID 1405236.

- Kosko, Bart (March 1990). "Unsupervised learning in noise". IEEE Transactions on Neural Networks. 1 (1): 44–57. doi:10.1109/72.80204. PMID 18282822. S2CID 14223472.

- Kosko, Bart (March 1988). "Bidirectional associative memories". IEEE Transactions on Systems, Man, and Cybernetics. 18 (1): 49–60. doi:10.1109/21.87054.

- Mitaim, Sanya; Kosko, Bart (November 1998). "Adaptive stochastic resonance". Proceedings of the IEEE. 86 (11): 2152–2183. doi:10.1109/5.726785.

- Kosko, Bart; Mitaim, Sanya (July 2003). "Stochastic resonance in noisy threshold neurons". Neural Networks. 16 (5–6): 755–761. doi:10.1016/S0893-6080(03)00128-X. PMID 12850031.

- Franzke, Brandon; Kosko, Bart (October 2011). "Noise can speed convergence in Markov chains". Physical Review E. 84 (4): 041112. Bibcode:2011PhRvE..84d1112F. doi:10.1103/PhysRevE.84.041112. PMID 22181092.