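In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices that are Hermitian and unitary. Usually indicated by the Greek letter sigma ($\sigma$), they are occasionally denoted by tau ($\tau$) when used in connection with isospin symmetries. They are

$$\sigma_1 = \sigma_x = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \quad \sigma_2 = \sigma_y = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \quad \sigma_3 = \sigma_z = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}.$$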
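
The Hermitian and unitary properties can be checked numerically. The following is a minimal sketch in plain Python; the helper names (`matmul`, `dagger`, `is_hermitian`, `is_unitary`) are illustrative assumptions, and the matrices are written in the standard convention.

```python
# Pauli matrices as nested lists of (complex) numbers, standard convention.
SIGMA_X = [[0, 1], [1, 0]]
SIGMA_Y = [[0, -1j], [1j, 0]]
SIGMA_Z = [[1, 0], [0, -1]]
IDENTITY = [[1, 0], [0, 1]]


def matmul(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def dagger(a):
    """Return the conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)]
            for i in range(2)]


def is_hermitian(a):
    """A matrix is Hermitian when it equals its conjugate transpose."""
    return dagger(a) == a


def is_unitary(a):
    """A matrix is unitary when its conjugate transpose is its inverse."""
    return matmul(dagger(a), a) == IDENTITY


for name, sigma in (("x", SIGMA_X), ("y", SIGMA_Y), ("z", SIGMA_Z)):
    print(name, is_hermitian(sigma), is_unitary(sigma))
```

Exact equality is safe here only because every entry is 0, ±1, or ±i; for general matrices a tolerance-based comparison would be needed.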

In probability theory and statistics, the multivariate normal distribution, multivariate Gaussian distribution, or joint normal distribution is a generalization of the one-dimensional (univariate) normal distribution to higher dimensions. One definition is that a random vector is said to be k-variate normally distributed if every linear combination of its k components has a univariate normal distribution. Its importance derives mainly from the multivariate central limit theorem. The multivariate normal distribution is often used to describe, at least approximately, any set of (possibly) correlated real-valued random variables each of which clusters around a mean value.

In probability theory, Chebyshev's inequality guarantees that, for a wide class of probability distributions, no more than a certain fraction of values can be more than a certain distance from the mean. Specifically, no more than $1/k^2$ of the distribution's values can be more than $k$ standard deviations away from the mean. In statistics, the rule is often called Chebyshev's theorem, which concerns the range of standard deviations around the mean. The inequality has great utility because it can be applied to any probability distribution in which the mean and variance are defined. For example, it can be used to prove the weak law of large numbers.
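
As an illustration of the bound, the sketch below measures the fraction of a sample that lies at least k standard deviations from its mean and compares it with 1/k². The function name `tail_fraction`, the exponential test distribution, and the seed are assumptions chosen for the example.

```python
import random
import statistics


def tail_fraction(samples, k):
    """Fraction of samples at least k standard deviations from the mean."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)  # population standard deviation
    return sum(abs(x - mu) >= k * sigma for x in samples) / len(samples)


random.seed(0)  # arbitrary seed, for repeatability only
samples = [random.expovariate(1.0) for _ in range(10_000)]

for k in (1.5, 2, 3):
    print(f"k={k}: observed {tail_fraction(samples, k):.4f}, "
          f"bound {1 / k**2:.4f}")
```

Because the inequality holds for any distribution with a defined mean and variance, it also holds for the empirical distribution of the sample itself, so the observed fraction never exceeds the bound. For most distributions the bound is far from tight.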

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. In the broadest sense, correlation is any statistical association, though it commonly refers to the degree to which a pair of variables are linearly related. Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity that consumers are willing to purchase, as depicted in the so-called demand curve.
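
The distinction between dependence in general and linear relatedness can be made concrete with the Pearson correlation coefficient, one common measure of linear association. The sketch below is an assumption-laden illustration: the function name `pearson_r` and the toy data are invented for the example.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


xs = [-2, -1, 0, 1, 2]
print(pearson_r(xs, [2 * x + 1 for x in xs]))  # perfectly linear relation
print(pearson_r(xs, [x * x for x in xs]))      # dependent, but not linearly
```

In the second call, y is completely determined by x, yet the coefficient is zero because the relationship has no linear component.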

The great-circle distance, orthodromic distance, or spherical distance is the shortest distance between two points on the surface of a sphere, measured along the surface of the sphere. The distance between two points in Euclidean space is the length of a straight line between them, but on the sphere there are no straight lines. In spaces with curvature, straight lines are replaced by geodesics. Geodesics on the sphere are circles on the sphere whose centers coincide with the center of the sphere, and are called great circles.
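
One common way to compute this distance from latitude and longitude is the haversine formula, sketched below. The function name, the use of degrees as input, and the default radius of 6371 km (a typical mean Earth radius) are assumptions made for the example.

```python
import math


def great_circle_distance(lat1, lon1, lat2, lon2, radius=6371.0):
    """Haversine great-circle distance; angles in degrees, result in the
    same unit as `radius` (kilometres for the assumed default)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    # Clamp to 1.0 to guard against rounding slightly above 1.
    return 2 * radius * math.asin(min(1.0, math.sqrt(a)))


# A pole-to-equator path is a quarter of a great circle.
print(great_circle_distance(90, 0, 0, 0))
```

The haversine form is usually preferred over the spherical law of cosines for nearby points because it is better conditioned numerically.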

In statistics, the Bhattacharyya distance measures the similarity of two probability distributions. It is closely related to the Bhattacharyya coefficient, which is a measure of the amount of overlap between two statistical samples or populations. Both measures are named after Anil Kumar Bhattacharyya, a statistician who worked in the 1930s at the Indian Statistical Institute.
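
For discrete distributions, the coefficient is the sum of the square roots of the products of corresponding probabilities, and the distance is its negative logarithm. The sketch below assumes both inputs are probability vectors over the same outcomes; the function names are illustrative.

```python
import math


def bhattacharyya_coefficient(p, q):
    """Overlap between two discrete distributions, between 0 and 1."""
    return sum(math.sqrt(pi * qi) for pi, qi in zip(p, q))


def bhattacharyya_distance(p, q):
    """Negative log of the coefficient; infinite when there is no overlap."""
    bc = bhattacharyya_coefficient(p, q)
    return math.inf if bc == 0 else -math.log(bc)


p = [0.5, 0.5, 0.0]
q = [0.1, 0.4, 0.5]
print(bhattacharyya_coefficient(p, q), bhattacharyya_distance(p, q))
```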

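The Einstein–Hilbert action in general relativity is the action that yields the Einstein field equations through the principle of least action. With the (− + + +) metric signature, the gravitational part of the action is given as

$$S = \frac{1}{2\kappa} \int R \sqrt{-g} \, \mathrm{d}^4x,$$

where $g = \det(g_{\mu\nu})$ is the determinant of the metric tensor, $R$ is the Ricci scalar, and $\kappa = 8\pi G c^{-4}$ is the Einstein gravitational constant.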

In mathematical statistics, the Kullback–Leibler divergence, $D_{\text{KL}}(P \parallel Q)$ (also called relative entropy), is a measure of how one probability distribution $P$ differs from a second, reference probability distribution $Q$. Applications include characterizing the relative (Shannon) entropy in information systems, randomness in continuous time-series, and information gain when comparing statistical models of inference. In contrast to variation of information, it is a distribution-wise asymmetric measure and thus does not qualify as a statistical metric of spread; it also does not satisfy the triangle inequality. In the simple case, a relative entropy of 0 indicates that the two distributions in question are identical. In simplified terms, it is a measure of surprise, with diverse applications such as applied statistics, fluid mechanics, neuroscience and machine learning.
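
The asymmetry is easy to see for discrete distributions. The sketch below assumes probability vectors over the same outcomes and uses the natural logarithm, so the result is in nats; the function name `kl_divergence` is illustrative.

```python
import math


def kl_divergence(p, q):
    """Relative entropy of p with respect to q, in nats."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue          # terms with p_i = 0 contribute nothing
        if qi == 0:
            return math.inf   # p puts mass where q has none
        total += pi * math.log(pi / qi)
    return total


p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, q), kl_divergence(q, p))  # the two values differ
print(kl_divergence(p, p))                       # identical distributions
```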

Directional statistics is the subdiscipline of statistics that deals with directions, axes or rotations in $\mathbb{R}^n$. More generally, directional statistics deals with observations on compact Riemannian manifolds.

In the mathematical description of general relativity, the Boyer–Lindquist coordinates are a generalization of the coordinates used for the metric of a Schwarzschild black hole that can be used to express the metric of a Kerr black hole.

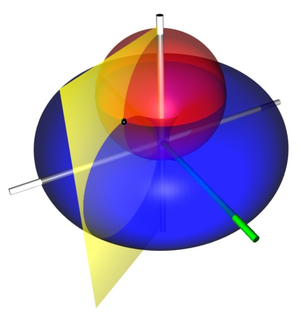

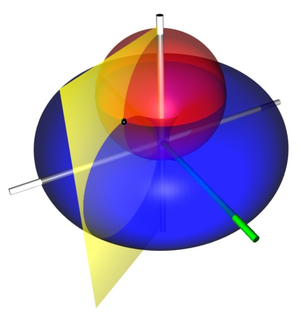

Toroidal coordinates are a three-dimensional orthogonal coordinate system that results from rotating the two-dimensional bipolar coordinate system about the axis that separates its two foci. Thus, the two foci $F_1$ and $F_2$ in bipolar coordinates become a ring of radius $a$ in the $xy$-plane of the toroidal coordinate system; the $z$-axis is the axis of rotation. The focal ring is also known as the reference circle.

In quantum mechanics, notably in quantum information theory, fidelity is a measure of the "closeness" of two quantum states. It expresses the probability that one state will pass a test to identify as the other. The fidelity is not a metric on the space of density matrices, but it can be used to define the Bures metric on this space.

In quantum information theory, quantum relative entropy is a measure of distinguishability between two quantum states. It is the quantum mechanical analog of relative entropy.

In probability and statistics, the Hellinger distance is used to quantify the similarity between two probability distributions. It is a type of f-divergence. The Hellinger distance is defined in terms of the Hellinger integral, which was introduced by Ernst Hellinger in 1909.
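
For discrete distributions, the Hellinger distance is the Euclidean distance between the square-root probability vectors, scaled by 1/√2 so that it lies between 0 and 1. The sketch below assumes probability vectors over the same outcomes; the function name is illustrative.

```python
import math


def hellinger_distance(p, q):
    """Hellinger distance between two discrete distributions, in [0, 1]."""
    squared = sum((math.sqrt(pi) - math.sqrt(qi)) ** 2
                  for pi, qi in zip(p, q))
    return math.sqrt(squared / 2)


print(hellinger_distance([0.5, 0.5], [0.9, 0.1]))
print(hellinger_distance([1.0, 0.0], [0.0, 1.0]))  # disjoint supports
```

Unlike many other divergences, this quantity is symmetric in its arguments and reaches its maximum of 1 exactly when the two distributions have disjoint supports.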

The Maxwell–Bloch equations, also called the optical Bloch equations, describe the dynamics of a two-state quantum system interacting with the electromagnetic mode of an optical resonator. They are analogous to the Bloch equations, which describe the motion of the nuclear magnetic moment in an electromagnetic field. The equations can be derived either semiclassically or with the field fully quantized when certain approximations are made.

In quantum information theory, the classical capacity of a quantum channel is the maximum rate at which classical data can be sent over it error-free in the limit of many uses of the channel. Holevo, Schumacher, and Westmoreland proved the following least upper bound on the classical capacity of any quantum channel :

In queueing theory, a discipline within the mathematical theory of probability, a heavy traffic approximation is the matching of a queueing model with a diffusion process under some limiting conditions on the model's parameters. The first such result was published by John Kingman, who showed that when the utilisation parameter of an M/M/1 queue is near 1, a scaled version of the queue length process can be accurately approximated by a reflected Brownian motion.

The min-entropy, in information theory, is the smallest of the Rényi family of entropies, corresponding to the most conservative way of measuring the unpredictability of a set of outcomes, as the negative logarithm of the probability of the most likely outcome. The various Rényi entropies are all equal for a uniform distribution, but measure the unpredictability of a nonuniform distribution in different ways. The min-entropy is never greater than the ordinary or Shannon entropy and that in turn is never greater than the Hartley or max-entropy, defined as the logarithm of the number of outcomes with nonzero probability.
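
The ordering of the three entropies can be checked directly for a discrete distribution. The sketch below uses base-2 logarithms, so all values are in bits; the function names are illustrative.

```python
import math


def min_entropy(p):
    """Negative log of the probability of the most likely outcome."""
    return -math.log2(max(p))


def shannon_entropy(p):
    """Ordinary (Shannon) entropy, skipping zero-probability outcomes."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def hartley_entropy(p):
    """Log of the number of outcomes with nonzero probability."""
    return math.log2(sum(1 for x in p if x > 0))


for dist in ([0.5, 0.25, 0.25], [0.25, 0.25, 0.25, 0.25]):
    print(min_entropy(dist), shannon_entropy(dist), hartley_entropy(dist))
```

For the nonuniform distribution the three values are strictly increasing, while for the uniform one they coincide.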

Generalized relative entropy is a measure of dissimilarity between two quantum states. It is a "one-shot" analogue of quantum relative entropy and shares many properties of the latter quantity.

In mathematics and theoretical computer science, analysis of Boolean functions is the study of real-valued functions on $\{0,1\}^n$ or $\{-1,1\}^n$ from a spectral perspective. The functions studied are often, but not always, Boolean-valued, making them Boolean functions. The area has found many applications in combinatorics, social choice theory, random graphs, and theoretical computer science, especially in hardness of approximation, property testing, and PAC learning.
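
The spectral perspective usually means expanding a function in the basis of parity functions. As a minimal sketch, assuming inputs are encoded as vectors of ±1, the code below computes every Fourier coefficient of a small function by brute force; the names `fourier_coefficients` and `majority3` are illustrative.

```python
from itertools import combinations, product
from math import prod


def fourier_coefficients(f, n):
    """Map each subset S of range(n) to the average of f(x) * prod(x_i for
    i in S) over all x with entries +1 or -1."""
    points = list(product((-1, 1), repeat=n))
    coeffs = {}
    for size in range(n + 1):
        for subset in combinations(range(n), size):
            total = sum(f(x) * prod(x[i] for i in subset) for x in points)
            coeffs[subset] = total / len(points)
    return coeffs


def majority3(x):
    """Majority vote of three +1/-1 inputs."""
    return 1 if sum(x) > 0 else -1


for subset, value in fourier_coefficients(majority3, 3).items():
    print(subset, value)
```

For a Boolean-valued function the squared coefficients sum to 1 (Parseval's identity), which gives a quick sanity check on the output. The brute-force loop takes time exponential in n, so it is only suitable for small examples.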