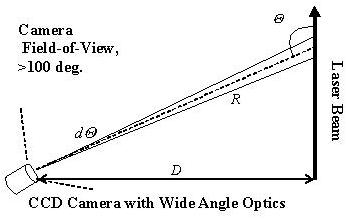

The CLidar is a scientific instrument used to measure particulates (aerosols) in the lower atmosphere. CLidar stands for camera lidar; "lidar" is itself a portmanteau of "light" and "radar". The CLidar is a form of remote sensing used in atmospheric physics.