Related Research Articles

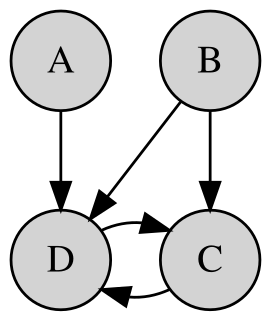

A Bayesian network, Bayes network, belief network, decision network, Bayes(ian) model or probabilistic directed acyclic graphical model is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases.
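
The disease and symptom example can be sketched in a few lines of Python. This is a minimal illustration, not a general inference engine: the network structure (one disease node with two symptom children), the names `P_DISEASE`, `P_FEVER_GIVEN`, `P_COUGH_GIVEN`, and every probability value are assumptions chosen for the example.

```python
# Hypothetical two-layer network: Disease -> Fever, Disease -> Cough.
# All probabilities below are made-up illustrative numbers.
P_DISEASE = {True: 0.01, False: 0.99}
P_FEVER_GIVEN = {True: 0.90, False: 0.10}   # P(fever=True | disease)
P_COUGH_GIVEN = {True: 0.80, False: 0.20}   # P(cough=True | disease)


def bernoulli(p_true, value):
    """Probability that a binary variable takes `value` when P(True) = p_true."""
    return p_true if value else 1.0 - p_true


def joint(disease, fever, cough):
    """Joint probability, factorised along the edges of the DAG."""
    return (P_DISEASE[disease]
            * bernoulli(P_FEVER_GIVEN[disease], fever)
            * bernoulli(P_COUGH_GIVEN[disease], cough))


def posterior_disease(fever, cough):
    """P(disease=True | fever, cough), enumerating the unobserved variable."""
    numerator = joint(True, fever, cough)
    evidence = numerator + joint(False, fever, cough)
    return numerator / evidence
```

Under these assumed numbers, `posterior_disease(True, True)` comes to about 0.27, up from a prior of 0.01. Enumeration like this is only practical for very small networks.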

Machine learning (ML) is the study of computer algorithms that improve automatically through experience. It is seen as a subset of artificial intelligence. Machine learning algorithms build a mathematical model based on sample data, known as "training data", in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as email filtering and computer vision, where it is difficult or infeasible to develop conventional algorithms to perform the needed tasks.

Algorithmic learning theory is a mathematical framework for analyzing machine learning problems and algorithms. Synonyms include formal learning theory and algorithmic inductive inference. Algorithmic learning theory is different from statistical learning theory in that it does not make use of statistical assumptions and analysis. Both algorithmic and statistical learning theory are concerned with machine learning and can thus be viewed as branches of computational learning theory.

Ray Solomonoff was the inventor of algorithmic probability and of the General Theory of Inductive Inference, and was a founder of algorithmic information theory. He was an originator of the branch of artificial intelligence based on machine learning, prediction and probability. He circulated the first report on non-semantic machine learning in 1956.

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. They are commonly used in probability theory, statistics—particularly Bayesian statistics—and machine learning.

Generative music is a term popularized by Brian Eno to describe music that is ever-different and changing, and that is created by a system.

Probabilistic latent semantic analysis (PLSA), also known as probabilistic latent semantic indexing, is a statistical technique for the analysis of two-mode and co-occurrence data. In effect, one can derive a low-dimensional representation of the observed variables in terms of their affinity to certain hidden variables, just as in latent semantic analysis, from which PLSA evolved.

Conditional random fields (CRFs) are a class of statistical modeling methods often applied in pattern recognition and machine learning and used for structured prediction. Whereas a classifier predicts a label for a single sample without considering "neighboring" samples, a CRF can take context into account. To do so, the prediction is modeled as a graphical model, which implements dependencies between the predictions. What kind of graph is used depends on the application. For example, in natural language processing, linear chain CRFs are popular, which implement sequential dependencies in the predictions. In image processing, the graph typically connects locations to nearby and/or similar locations to enforce that they receive similar predictions.
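
The difference between labelling each sample independently and taking neighbours into account can be illustrated with Viterbi decoding over a linear chain. This sketch covers only the decoding step, not training, and it works on raw scores rather than normalised probabilities (normalisation does not change the best sequence). The function name `viterbi` and the score tables are hypothetical.

```python
def viterbi(emissions, transitions):
    """Return the highest-scoring label sequence for a linear chain.

    emissions[t][y]   : score of label y at position t
    transitions[a][b] : score of label a being followed by label b
    """
    n_labels = len(emissions[0])
    best = list(emissions[0])   # best score of any path ending in each label
    backpointers = []
    for scores in emissions[1:]:
        step, new_best = [], []
        for y in range(n_labels):
            prev = max(range(n_labels), key=lambda a: best[a] + transitions[a][y])
            step.append(prev)
            new_best.append(best[prev] + transitions[prev][y] + scores[y])
        backpointers.append(step)
        best = new_best
    # Follow the back-pointers from the best final label.
    label = max(range(n_labels), key=lambda y: best[y])
    path = [label]
    for step in reversed(backpointers):
        label = step[label]
        path.append(label)
    return path[::-1]


# Hypothetical scores: two labels (0 and 1), three positions.
emissions = [[2.0, 0.0], [0.4, 0.6], [2.0, 0.0]]
transitions = [[1.0, -1.0], [-1.0, 1.0]]   # rewards keeping the same label

independent = [max(range(2), key=lambda y: e[y]) for e in emissions]
decoded = viterbi(emissions, transitions)
```

With these made-up scores, `independent` is `[0, 1, 0]` while `decoded` is `[0, 0, 0]`: the weak preference for label 1 in the middle is overridden by the sequential dependencies.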

Grammar induction is the process in machine learning of learning a formal grammar from a set of observations, thus constructing a model which accounts for the characteristics of the observed objects. More generally, grammatical inference is that branch of machine learning where the instance space consists of discrete combinatorial objects such as strings, trees and graphs.

A semantic reasoner, reasoning engine, rules engine, or simply a reasoner, is a piece of software able to infer logical consequences from a set of asserted facts or axioms. The notion of a semantic reasoner generalizes that of an inference engine, by providing a richer set of mechanisms to work with. The inference rules are commonly specified by means of an ontology language, and often a description logic language. Many reasoners use first-order predicate logic to perform reasoning; inference commonly proceeds by forward chaining and backward chaining. There are also examples of probabilistic reasoners, including non-axiomatic reasoning systems, and probabilistic logic networks.

Structured prediction or structured (output) learning is an umbrella term for supervised machine learning techniques that involve predicting structured objects, rather than scalar discrete or real values.

Joshua Brett Tenenbaum is Professor of Cognitive Science and Computation at the Massachusetts Institute of Technology. He is known for contributions to mathematical psychology and Bayesian cognitive science. Tenenbaum previously taught at Stanford University, where he was the Wasow Visiting Fellow from October 2010 to January 2011.

Probabilistic programming (PP) is a programming paradigm in which probabilistic models are specified and inference for these models is performed automatically. It represents an attempt to unify probabilistic modeling and traditional general purpose programming in order to make the former easier and more widely applicable. It can be used to create systems that help make decisions in the face of uncertainty.
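
The separation between specifying a model and running inference can be shown with a toy example in plain Python. This is a sketch under stated assumptions rather than how any particular probabilistic programming system works: `model` and `infer` are hypothetical names, the coin-bias model is invented, and rejection sampling is used only because it is the simplest generic inference method to write down.

```python
import random


def model(rng):
    """Generative program: draw a coin bias, then flip the coin three times."""
    bias = rng.choice([0.2, 0.5, 0.8])
    flips = [rng.random() < bias for _ in range(3)]
    return bias, flips


def infer(model, condition, n_samples=20000, seed=0):
    """Generic rejection sampler: run the model, keep runs matching the data."""
    rng = random.Random(seed)
    counts = {}
    for _ in range(n_samples):
        value, observed = model(rng)
        if condition(observed):
            counts[value] = counts.get(value, 0) + 1
    total = sum(counts.values())
    return {value: n / total for value, n in sorted(counts.items())}


# Condition on having observed three heads in a row.
posterior = infer(model, condition=lambda flips: all(flips))
```

The `infer` function knows nothing about coins, so the same routine could be reused with a different `model`. After three heads, most of the posterior mass should sit on the 0.8 bias; the exact value for that bias is 0.512 / 0.645, and the sampler only approximates it.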

Inductive programming (IP) is a special area of automatic programming, covering research from artificial intelligence and programming, which addresses learning of typically declarative and often recursive programs from incomplete specifications, such as input/output examples or constraints.

Stan is a probabilistic programming language for statistical inference written in C++. The Stan language is used to specify a (Bayesian) statistical model with an imperative program calculating the log probability density function.
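
The idea of an imperative program that calculates a log probability density can be mimicked in plain Python. The code below is not Stan code and does not use Stan's interfaces; the model (a standard normal prior on `mu` with unit-variance normal observations), the data, and the helper names `normal_lpdf` and `log_density` are assumptions made for illustration.

```python
import math


def normal_lpdf(x, mean, sd):
    """Log density of a normal distribution evaluated at x."""
    z = (x - mean) / sd
    return -0.5 * z * z - math.log(sd) - 0.5 * math.log(2.0 * math.pi)


def log_density(mu, data):
    """Accumulate the log joint density one statement at a time."""
    target = 0.0
    target += normal_lpdf(mu, 0.0, 1.0)      # prior: mu ~ normal(0, 1)
    for y in data:
        target += normal_lpdf(y, mu, 1.0)    # likelihood: y ~ normal(mu, 1)
    return target


data = [1.0, 2.0, 3.0]                       # made-up observations
grid = [i / 100.0 for i in range(-500, 501)]
mode = max(grid, key=lambda mu: log_density(mu, data))
```

For this conjugate model the posterior mode is known in closed form, `sum(data) / (len(data) + 1)`, which equals 1.5 here, and the grid search recovers that value. A real system would hand such a log density to a sampler or optimiser instead of a grid.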

This glossary of artificial intelligence is a list of definitions of terms and concepts relevant to the study of artificial intelligence, its sub-disciplines, and related fields. Related glossaries include Glossary of computer science, Glossary of robotics, and Glossary of machine vision.

PyMC3 is a Python package for Bayesian statistical modeling and probabilistic machine learning which focuses on advanced Markov chain Monte Carlo and variational fitting algorithms. It is a rewrite from scratch of the previous version of the PyMC software. Unlike PyMC2, which had used Fortran extensions for performing computations, PyMC3 relies on Theano for automatic differentiation and also for computation optimization and dynamic C compilation. From version 3.8, PyMC3 relies on ArviZ to handle plotting, diagnostics, and statistical checks. PyMC3 and Stan are the two most popular probabilistic programming tools. PyMC3 is an open source project, developed by the community and fiscally sponsored by NumFocus.

In programming languages and machine learning, Bayesian program synthesis (BPS) is a program synthesis technique where Bayesian probabilistic programs automatically construct new Bayesian probabilistic programs. This approach stands in contrast to routine practice in probabilistic programming where human developers manually write new probabilistic programs.

The following outline is provided as an overview of and topical guide to machine learning. Machine learning is a subfield of soft computing within computer science that evolved from the study of pattern recognition and computational learning theory in artificial intelligence. In 1959, Arthur Samuel defined machine learning as a "field of study that gives computers the ability to learn without being explicitly programmed". Machine learning explores the study and construction of algorithms that can learn from and make predictions on data. Such algorithms operate by building a model from an example training set of input observations in order to make data-driven predictions or decisions expressed as outputs, rather than following strictly static program instructions.

Intuitive statistics, or folk statistics, refers to the cognitive phenomenon where organisms use data to make generalizations and predictions about the world. This can be a small amount of sample data or training instances, which in turn contribute to inductive inferences about either population-level properties, future data, or both. Inferences can involve revising hypotheses, or beliefs, in light of probabilistic data that inform and motivate future predictions. The informal tendency for cognitive animals to intuitively generate statistical inferences, when formalized with certain axioms of probability theory, constitutes statistics as an academic discipline.

