Related Research Articles

The client–server model is a distributed application structure that partitions tasks or workloads between the providers of a resource or service, called servers, and service requesters, called clients. Often clients and servers communicate over a computer network on separate hardware, but both client and server may reside in the same system. A server host runs one or more server programs, which share their resources with clients. A client usually does not share any of its resources, but it requests content or service from a server. Clients, therefore, initiate communication sessions with servers, which await incoming requests. Examples of computer applications that use the client–server model are email, network printing, and the World Wide Web.

The Domain Name System (DNS) is a hierarchical and distributed naming system for computers, services, and other resources in the Internet or other Internet Protocol (IP) networks. It associates various information with domain names assigned to each of the associated entities. Most prominently, it translates readily memorized domain names to the numerical IP addresses needed for locating and identifying computer services and devices with the underlying network protocols. The Domain Name System has been an essential component of the functionality of the Internet since 1985.

In telecommunication, provisioning involves the process of preparing and equipping a network to allow it to provide new services to its users. In National Security/Emergency Preparedness telecommunications services, "provisioning" equates to "initiation" and includes altering the state of an existing priority service or capability.

In computing, load balancing is the process of distributing a set of tasks over a set of resources, with the aim of making their overall processing more efficient. Load balancing can optimize the response time and avoid unevenly overloading some compute nodes while other compute nodes are left idle.

A web hosting service is a type of Internet hosting service that hosts websites for clients, i.e. it offers the facilities required for them to create and maintain a site and makes it accessible on the World Wide Web. Companies providing web hosting services are sometimes called web hosts.

Scalability is the property of a system to handle a growing amount of work by adding resources to the system.

Anycast is a network addressing and routing methodology in which a single destination IP address is shared by devices in multiple locations. Routers direct packets addressed to this destination to the location nearest the sender, using their normal decision-making algorithms, typically the lowest number of BGP network hops. Anycast routing is widely used by content delivery networks such as web and DNS hosts, to bring their content closer to end users.

A content delivery network, or content distribution network (CDN), is a geographically distributed network of proxy servers and their data centers. The goal is to provide high availability and performance by distributing the service spatially relative to end users. CDNs came into existence in the late 1990s as a means for alleviating the performance bottlenecks of the Internet as the Internet was starting to become a mission-critical medium for people and enterprises. Since then, CDNs have grown to serve a large portion of the Internet content today, including web objects, downloadable objects, applications, live streaming media, on-demand streaming media, and social media sites.

Software multitenancy is a software architecture in which a single instance of software runs on a server and serves multiple tenants. Systems designed in such manner are "shared". A tenant is a group of users who share a common access with specific privileges to the software instance. With a multitenant architecture, a software application is designed to provide every tenant a dedicated share of the instance - including its data, configuration, user management, tenant individual functionality and non-functional properties. Multitenancy contrasts with multi-instance architectures, where separate software instances operate on behalf of different tenants.

Edge computing is a distributed computing paradigm that brings computation and data storage closer to the sources of data. This is expected to improve response times and save bandwidth. Edge computing is an architecture rather than a specific technology, and a topology- and location-sensitive form of distributed computing.

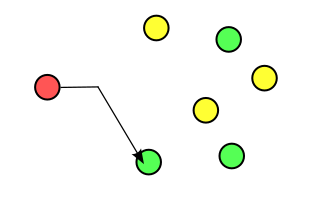

Fast flux is a domain name system (DNS) based evasion technique used by cyber criminals to hide phishing and malware delivery websites behind an ever-changing network of compromised hosts acting as reverse proxies to the backend botnet master—a bulletproof autonomous system. It can also refer to the combination of peer-to-peer networking, distributed command and control, web-based load balancing and proxy redirection used to make malware networks more resistant to discovery and counter-measures.

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. Large clouds often have functions distributed over multiple locations, each of which is a data center. Cloud computing relies on sharing of resources to achieve coherence and typically uses a "pay as you go" model, which can help in reducing capital expenses but may also lead to unexpected operating expenses for users.

Cloud testing is a form of software testing in which web applications use cloud computing environments to simulate real-world user traffic.

Linode, LLC is an American cloud hosting provider that focuses on providing Linux powered virtual machines to support a wide range of applications. It was acquired by Akamai Technologies on March 21, 2022.

Cloud computing architecture refers to the components and subcomponents required for cloud computing. These components typically consist of a front end platform, back end platforms, a cloud based delivery, and a network. Combined, these components make up cloud computing architecture.

In computing, Hazelcast IMDG is an open source in-memory data grid based on Java. It is also the name of the company developing the product. The Hazelcast company is funded by venture capital and headquartered in Palo Alto, California.

A distributed file system for cloud is a file system that allows many clients to have access to data and supports operations on that data. Each data file may be partitioned into several parts called chunks. Each chunk may be stored on different remote machines, facilitating the parallel execution of applications. Typically, data is stored in files in a hierarchical tree, where the nodes represent directories. There are several ways to share files in a distributed architecture: each solution must be suitable for a certain type of application, depending on how complex the application is. Meanwhile, the security of the system must be ensured. Confidentiality, availability and integrity are the main keys for a secure system.

Kubernetes is an open-source container orchestration system for automating software deployment, scaling, and management. Originally designed by Google, the project is now maintained by the Cloud Native Computing Foundation.

Autoscaling, also spelled auto scaling or auto-scaling, and sometimes also called automatic scaling, is a method used in cloud computing that dynamically adjusts the amount of computational resources in a server farm - typically measured by the number of active servers - automatically based on the load on the farm. For example, the number of servers running behind a web application may be increased or decreased automatically based on the number of active users on the site. Since such metrics may change dramatically throughout the course of the day, and servers are a limited resource that cost money to run even while idle, there is often an incentive to run "just enough" servers to support the current load while still being able to support sudden and large spikes in activity. Autoscaling is helpful for such needs, as it can reduce the number of active servers when activity is low, and launch new servers when activity is high. Autoscaling is closely related to, and builds upon, the idea of load balancing.

Oracle Cloud is a cloud computing service offered by Oracle Corporation providing servers, storage, network, applications and services through a global network of Oracle Corporation managed data centers. The company allows these services to be provisioned on demand over the Internet.

References

- 1 2 Chee, Brian J.S. (2010). Cloud Computing: Technologies and Strategies of the Ubiquitous Data Center. CRC Press. ISBN 9781439806173.

- ↑ Xu, Cheng-Zhong (2005). Scalable and Secure Internet Services and Architecture. CRC Press. ISBN 9781420035209.

- 1 2 "Research Report - In Demand – The Culture of Online Service Provision". Citrix. 14 October 2013. Archived from the original on 23 January 2014. Retrieved 30 January 2014.

- 1 2 Furht, Borko (2010). Handbook of Cloud Computing. Springer. ISBN 9781441965240.

- ↑ Nolle, Tom. "Designing public cloud applications for a hybrid cloud future". Tech Target. Retrieved 30 January 2014.

- 1 2 3 Randles, Martin, David Lamb, and A. Taleb-Bendiab. "A comparative study into distributed load balancing algorithms for cloud computing." Advanced Information Networking and Applications Workshops (WAINA), 2010 IEEE 24th International Conference on. IEEE, 2010.

- ↑ Ferris, James Michael. "Methods and systems for load balancing in cloud-based networks." U.S. Patent Application 12/127,926.

- 1 2 Wang, S. C.; Yan, K. Q.; Liao, W. P.; Wang, S. S. (2010), "Towards a load balancing in a three-level cloud computing network", Proceedings of the 3rd International Conference on Computer Science and Information Technology (ICCSIT), IEEE: 108–113, ISBN 978-1-4244-5537-9

- ↑ Kansal, Nidhi Jain, and Inderveer Chana. "Cloud load balancing techniques: A step towards green computing." IJCSI International Journal of Computer Science Issues 9.1 (2012): 1694-0814.

- ↑ Wee, Sewook, and Huan Liu. "Client-side load balancer using cloud." Proceedings of the 2010 ACM Symposium on Applied Computing. ACM, 2010.